The Correlated Gaussian Sparse Histogram Mechanism

Abstract

We consider the problem of releasing a sparse histogram under -differential privacy.

The stability histogram independently adds noise from a Laplace or Gaussian distribution to the non-zero entries and removes those noisy counts below a threshold. Thereby, the introduction of new non-zero values between neighboring histograms is only revealed with probability at most , and typically, the value of the threshold dominates the error of the mechanism.

We consider the variant of the stability histogram with Gaussian noise.

Recent works ([Joseph and Yu, COLT ’24] and [Lebeda, SOSA ’25]) reduced the error for private histograms

using correlated Gaussian noise.

However, these techniques can not be directly applied in the very sparse setting.

Instead, we adopt Lebeda’s technique and show that adding correlated noise to the non-zero counts only allows us to reduce the magnitude of noise when we have a sparsity bound.

This, in turn, allows us to use a lower threshold by up to a factor of compared to the non-correlated noise mechanism.

We then extend our mechanism to a setting without a known bound on sparsity.

Additionally, we show that correlated noise can give a similar improvement for the more practical discrete Gaussian mechanism.

Keywords and phrases:

differential privacy, correlated noise, sparse gaussian histogramsCopyright and License:

2012 ACM Subject Classification:

Security and privacy Privacy-preserving protocolsFunding:

Retschmeier carried out this work at Basic Algorithms Research Copenhagen (BARC), which was supported by the VILLUM Foundation grant 54451. Providentia, a Data Science Distinguished Investigator grant from the Novo Nordisk Fonden, supported Retschmeier. The work of Lebeda is supported by grant ANR-20-CE23-0015 (Project PRIDE).Editors:

Mark BunSeries and Publisher:

Leibniz International Proceedings in Informatics, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

Leibniz International Proceedings in Informatics, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

1 Introduction

Releasing approximate counts is a common task in differential private data analysis. For example, the task of releasing a search engine log, where one wants to differentially private release the number of users who have searched each possible search term.

The full domain of search terms is too large to work with directly, but the vector of all search counts is extremely sparse as most phrases are never searched. The standard approach to exploit sparsity while ensuring differential privacy is the stability histogram [3, 15, 5, 1, 12, 13, 25]. The idea is to preserve sparsity by filtering out zero counts and then adding noise from a suitable probability distribution to the remaining ones. Unfortunately, some filtered-out counts could be non-zero in a neighboring dataset, and thus, revealing any of them would violate privacy. To limit this privacy violation, the stability histogram introduces a threshold and releases only those noisy counts that exceed . This way, we might still reveal the true dataset among a pair of neighboring datasets if one of these counters exceeds the threshold, but with an appropriate choice of , this is extremely unlikely to happen.

In our setting, one single user may contribute to many counts; hence, using the Gaussian Sparse Histogram Mechanism (GSHM) [12, 24] might be preferable. The GSHM is similar to the stability histogram, but it replaces noise from the Laplace distribution with Gaussian noise. To analyze the guarantees of the GSHM, one has to find an upper bound for , which is influenced by the standard Gaussian mechanism and the small probability of infinite privacy loss that can happen when the noise exceeds . We denote these two quantities as and . Recently, [24] gave exact privacy guarantees of the GSHM, which improves over the analysis by [12] where and were simply summed. Their main contribution is a more intricate case distinction of a single user’s impact on the values of and . This allows them to use a lower threshold with the same privacy parameters. Their improvement lies in the constants, but it can be significant in practical applications because the tighter analysis essentially gives better utility at no privacy cost.

Our goal is to reduce the error further. Because the previous analysis is exact, we must exploit some additional structure of the problem. Consider a -dimensional histogram for for users with data . Notice that adding a single user to can only increase counts in , whereas removing one can only decrease counts. Lebeda [16] recently showed how to exploit this monotonicity by adding a small amount of correlated noise. This reduces the total magnitude of noise almost by a factor of compared to the standard Gaussian Mechanism. So, it is natural to ask if we can adapt this technique to the setting when the histogram is sparse. This motivates our main research question:

Question: When can we take advantage of monotonicity and use correlated noise to improve the Gaussian Sparse Histogram Mechanism?

1.1 Our Contribution

We answer this question by introducing the Correlated Stability Histogram (CSH). Building on the work by [16], we extend their framework to the setting of releasing a histogram under a sparsity constraint, that is for some known . This is a natural setting that occurs e.g. for Misra-Gries sketches, where is the size of the sketch [21, 18]. Furthermore, enforcing this sparsity constraint is necessary to achieve the structure required to benefit from correlated noise.

Correlated GSHM.

We introduce the Correlated Stability Histogram (CSH), a variant of the GSHM using correlated noise. Our algorithm achieves a better utility-privacy trade-off than GSHM for -sparse histograms. Similar to the result of Lebeda [16], we show that correlated noise can reduce the error by almost a factor of at no additional privacy cost. In particular, our main result lies in the following (informally stated) theorem:

Theorem 1 (The Correlated Stability Histogram Mechanism (Informal)).

Let denote a histogram with bounded sparsity, where and . If the GSHM privately releases under -DP with noise magnitude and removes noisy counters below a threshold , then the Correlated Stability Histogram Mechanism (CSH) releases also under -DP with noise of magnitude and removes noisy counters below a threshold .

As a baseline, we first analyze our mechanism using the add-the-deltas [12] approach and show that even this approach outperforms the exact analysis in [25] in many settings. We then turn our attention to a tighter analysis, which uses an intricate case distinction to upper bound the parameter . Furthermore, we complement our approach with the following results:

Generalization & Extensions.

We extend our mechanism to multiple other settings, including extensions considered in [24]. First, we generalize our approach to allow an additional threshold that filters out infrequent data in a pre-processing step. Second, we discuss how other aggregate database queries can be included in our mechanism. Last, we generalize to top- counting queries when we have no bound for .

Discrete Gaussian Noise.

Our mechanism achieves the same improvement over GSHM when noise is sampled from the discrete Gaussian rather than the continuous distribution. We present a simple modification to make our mechanism compatible with discrete noise. This has practical relevance even for the dense setting considered by Lebeda [16].

Organization.

The rest of the paper is organized as follows. Section 2 introduces the problem formally and reviews the required background. Section 3 introduces the Correlated Stability Histogram, our main algorithmic contribution for the sparse case. Furthermore, in Section 4 we generalize our approach to the non-sparse setting. The extensions of our technique to match the setting of Wilkins et al. [24] are discussed in Section 6. In Section 7, we show how to adapt our mechanism for discrete Gaussian noise. Finally, we conclude the paper in Section 8 and discuss open problems for future work.

Additionally, numerical evaluations in Section 5 confirm our claim that we improve the error over previous techniques.

2 Preliminaries and Background

Given a dataset , where is the set of datasets with any size, we want to perform an aggregate query under differential privacy. We focus on settings where each user has a set of elements, and we want to estimate the count of all elements over the dataset. Therefore, we consider a dataset of data points where each . Our goal is to output a private estimate of the histogram , where 111Note that in the differential privacy literature the term histogram is often used for the special case where users each have a single element. We use it for consistency with related work (e.g. [24]). The setting we consider is closely related to the problem of releasing one-way marginals.. For any vector , we define the support as and denote the norm as , being the number of non-zeroes in . In this work, we focus on settings where the dimension is very large or infinite.

Proposed by [10], differential privacy (DP) is a property of a randomized mechanism. The intuition behind differential privacy is that privacy is preserved by ensuring that the output distribution does not depend much on any individual’s data. In this paper, we consider -differential privacy (sometimes referred to as approximate differential privacy) together with the add-remove variant of neighboring datasets as defined below. Note that by this definition and :

Definition 2 (Neighboring datasets).

A pair of datasets are neighboring if there exists an such that either or holds. We denote the neighboring relationship as .

Definition 3 ([11] -differential privacy).

Given and , a randomized mechanism satisfies -DP, if for every pair of neighboring datasets and every measurable set of outputs it holds that

We shortly denote the case where as -DP. Differential privacy is immune to post-processing. Let be a randomized algorithm that is -DP. Let be an arbitrary randomized mapping. Then is -DP.

An important concept in differential privacy is the sensitivity of a query, which restricts the difference between the output for any pair of neighboring datasets. We consider both the sensitivity and, more generally, the sensitivity space of the queries in this paper.

Definition 4 (Sensitivity space and sensitivity).

The sensitivity space of a deterministic function is the set and denote as the norm of any . Then the sensitivity of is defined as

The standard Gaussian mechanism adds continuous noise from a Gaussian distribution to with magnitude scaled according to Lemma 5.

Lemma 5 ([4, Theorem 8] The Analytical Gaussian Mechanism).

Let denote a function with sensitivity at most . Then the mechanism that outputs , where each are independent and identically distributed, satisfies -differential privacy if the following inequality holds

where denotes the cdf of the Gaussian distribution.

2.1 The Gaussian Sparse Histogram Mechanism

We consider the problem of releasing the histogram of a dataset where each under differential privacy. The standard techniques for releasing a private histogram are the well-known Laplace mechanism [10] and Gaussian mechanism [9, 4], which achieve - and -DP, respectively. Although this works well for dense data, it is unsuited for very sparse data where because adding noise to all entries increases both the maximum error as well as the space and time requirements. Furthermore, the mechanisms are undefined for infinite domains ().

In the classic sparse histogram setting where , the preferred technique under -DP is the stability histogram which combines Laplace noise with a thresholding technique (see [3, 15, 5, 1, 12, 13, 25]).

In this paper, we consider the Gaussian Sparse Histogram Mechanism (see [12, 24]), which replaces Laplace noise in the stability histogram with Gaussian noise. This is often preferred when users can contribute multiple items as the magnitude of noise is scaled by the sensitivity instead of the sensitivity. The GSHM adds Gaussian noise to each non-zero counter of and removes all counters below a threshold . Find the pseudocode in Algorithm 1. We discuss the impact of the parameter below.

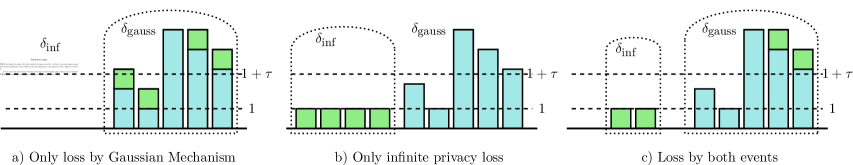

To get differential privacy guarantees, we observe that there are two sources of privacy loss that have to be accounted for by the value of : from the Gaussian noise itself and the probability of infinite privacy loss , when a zero count is deterministically ignored in one dataset, but possibly released in a neighboring one. These events have infinite privacy loss because they can only occur for one of the datasets. Therefore, bounds the possibility of outputting a counter that is not present in the neighboring dataset. Figure 1 gives some intuition about the role of and . Throughout this section, we assume that for some known integer .

2.2 The add-the-deltas Approach

The following approach appeared in a technical report in the Google differential privacy library [12]. Similar techniques have been used elsewhere in the literature (e.g. [25]). We refer to this technique as add-the-deltas. We have to account for the privacy loss due to the magnitude of the Gaussian noise. Therefore, the value of is typically found by considering the worst-case effect of a user that only changes non-zero counters in both histograms. The value of follows from applying Lemma 5 (compare also Figure 1a) ).

The event of infinite privacy loss is captured by . This is bounded by considering the worst-case scenario of changing zero-counters to and the fact that infinite privacy loss occurs exactly when any of these counters exceed the threshold in the non-zero dataset (compare Figure 1b) ).

The observation for the add-the-deltas approach is that is a valid upper bound on the overall value, and hence the condition of Lemma 6 is sufficient to achieve differential privacy.

Lemma 6 ([12] add-the-deltas).

If pairs of neighboring histograms differs in at most counters then the Gaussian Sparse Histogram Mechanism with parameters and satisfies -differential privacy where

2.3 Exact Analysis by Taking the Max over the Sensitivity Space

While the add-the-deltas approach is sufficient to bound it does not give the tightest possible parameters. Wilkins et al. [24] were able to derive an exact value for . Compared to the add-the-deltas approach above, the key insight is that the two worst-case scenarios cannot occur simultaneously for any pair of neighboring histograms. This means that each counter either contributes to or , but never to both. In the extreme cases, either all counters flip from zero to one, from one to zero, or none of them do between neighboring datasets. Thus, we only need to consider a single source of privacy loss. In the other (mixed) cases, we have to consider both sources of privacy loss, but the change is smaller than for the worst-case pair of datasets. A small example is depicted in Figure 1. Wilkins et al. [24] used this fact to reduce the threshold required to satisfy the given privacy parameters using a tighter analysis. We now restate their main result: the exact privacy analysis of the GSHM.

Lemma 7 ([24] Exact Privacy Analysis of the GSHM).

If pairs of neighboring histograms differs in at most counters then the Gaussian Sparse Histogram Mechanism with parameters and satisfies -differential privacy where and

Note that we use a slightly different convention from [24]. We use a threshold of rather than . Furthermore, they also consider a more general mechanism that allows for optional aggregation queries and an additional threshold parameter. Although our work can easily be adapted to this setting as well, these extensions are not the focus of our main work to simplify presentation. A discussion of the extensions can be found in Section 6.

2.4 The Correlated Gaussian Mechanism

Our work builds on a recent result by Lebeda [16] about using correlated noise to improve the utility of the Gaussian mechanism under the add-remove neighboring relationship. They show that when answering counting queries, adding a small amount of correlated noise to all queries can reduce the magnitude of Gaussian noise by almost half. We restate their main result for -differential privacy with a short proof as they use another privacy definition.

Lemma 8 ([16] The Correlated Gaussian Mechanism).

Let where . Then the mechanism that outputs satisfies -DP. Here is the -dimensional vector of all ones, , and each where

Proof.

This follows from combining the inequality for the standard Gaussian mechanism (see Lemma 5) with [16, Lemma 3.5]. Furthermore, if we set as in [16, Theorem 3.1] we minimize the total magnitude of noise. Notice that the value of scales with for the standard Gaussian mechanism and it scales with here. We add two noise samples to each query, and the total error for each data point scales with .

Concurrently with the result discussed above, [14] considered the setting where user contributions are sparse such that for some . They give an algorithm that adds the optimal amount of correlated Gaussian noise for any and . It is natural to ask whether this algorithm can improve error for our setting since they focus on a sparse setting. However, their improvement factor over the standard Gaussian mechanism depends on the sparsity. Naively applying their technique for the setting of the GSHM where yields no practical improvements. We instead focus on adapting the technique from [16]. We discuss a more restrictive setting where the technique of [14] applies in Section 8.

3 Algorithmic Framework

We are now ready to introduce our main contribution, a variant of the Gaussian Sparse Histogram Mechanism using correlated noise. We first introduce our definition of -sparse monotonic histograms. Throughout this section, we assume that all histograms are both -sparse and monotonic. Intuitively, we define monotonicity on a histogram in a way that captures the setting where the counts are either all increasing or all decreasing. Observe that due to the monotonicity constraint, we have that the supports and for two neighboring histograms satisfy either or . We use this property in the privacy proofs later. The histograms are also monotonic in [24], but they do not require -sparsity. We provide a mechanism for a setting where the histograms are not -sparse in Section 4. There we enforce the sparsity constraint using a simple pre-processing step. Both sparsity and monotonicity are required in order to achieve the structure between neighboring histograms required to benefit from correlated noise.

Definition 9 (-sparse monotonic histogram).

We assume that the input histogram is -sparse. That is, for any dataset we have . Furthermore, the sensitivity space of is . That is, the difference between counters of neighboring histograms are either non-decreasing or non-increasing.

One example of -sparse monotonic histograms is Misra-Gries sketches. Merging Misra-Gries sketches is common in practical applications. The sensitivity space of merged Misra-Gries sketches of size exactly matches Definition 9 (See [18]). Our algorithm is more general than Misra-Gries sketches and satisfies differential privacy as long as the structure between neighboring histograms holds for all pairs of neighboring datasets.

Notice that Definition 9 implies that neighboring histograms differ in at most counters. As such, we can release the histogram using the standard Gaussian Sparse Histogram Mechanism. Wilkins et al. [24] already uses the fact that counters are either non-decreasing or non-increasing in their analysis. We intend to further take advantage of the monotonicity by adding a small amount of correlated noise to all non-zero counters. This allows us to reduce the total magnitude of noise similar to [16]. The reduced magnitude of noise in turn allows us to reduce the threshold required for privacy. The pseudocode for our mechanism is in Algorithm 2.

We first give privacy guarantees for Algorithm 2 in a (relatively) simple closed form similar to the add-the-deltas approach. Later, we give tighter bounds using a more complicated analysis similar to Lemma 7. Unfortunately, the proofs from previous work in either case rely on the fact that all noisy counters are independent. That is clearly not the case for our mechanism because the value of is added to all entries. Instead, we use different techniques to give similar results, starting with the add-the-deltas approach. The following lemma gives a general bound for the event that one of correlated noisy terms exceeds a threshold . We use this in the proof for both approaches later.

Lemma 10 (Upper bound for Correlated Noise).

Let be a single sample for a real together with additional samples . Then for any , we have .

Proof.

The proof is in Section B.2.

3.1 The add-the-deltas Analysis

The following Theorem 11 proves privacy guarantees of our mechanism using a similar technique than the one proposed by the Google Anonymization Team [12] and Wilson et al. [25] which is known as add-the-deltas. The total value for is split between and which accounts for the two types of privacy loss that are relevant to the mechanism. Like with the GSHM, these values are found by considering worst-case pairs of neighboring histograms for each case. However, a pair of neighboring histograms cannot be worst-case for both values as seen in Figure 1 which motivates the tighter analysis later in the section.

Theorem 11 (add-the-deltas technique).

Algorithm 2 satisfies -differential privacy for -sparse monotonic histograms where

Proof.

By Definition 3, the lemma holds if for any pair of neighboring -sparse monotonic histograms and and all sets of outputs we have

We prove that the inequality above holds by introducing a third histogram. This new histogram is constructed such that it is between and . We first state the desired properties of this histogram and then show that such a histogram must exist for all neighboring histograms. Assume for now that there exists a histogram where the following two inequalities hold for any set of outputs :

| (1) | ||||

| (2) |

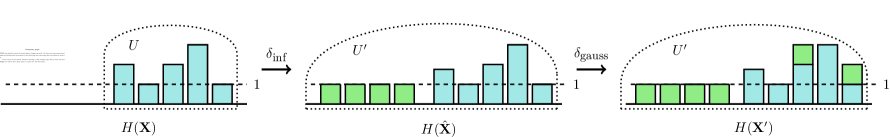

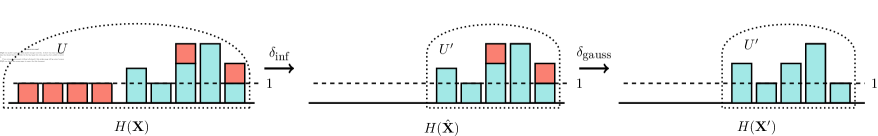

Then the inequality we need for the lemma follows immediately since

Next, we show how to construct from and , and finally we show that each of two inequalities holds using this construction. Let denote the support of and define similarly and . We construct such that it has the same support as , that is . For all we set and for all we set . In other words, we construct such that (1) and only differ in entries that are in both and (2) and only differ in entries that are in only one of and . This allows us to analyze each case separately to derive our values for and . An example of this construction is shown in Figure 2.

We start with the case of using and . Let denote a new mechanism equivalent to Algorithm 2 except that the condition on line 5 is removed. Notice that with or as inputs is equivalent to the Correlated Gaussian Mechanism restricted to . Since we have by Lemma 8 that

Notice that if we post-process the output of by removing entries below , then the output distribution is equivalent to . Equation 1 therefore holds because post-proccessing does not affect differential privacy guarantees (see Definition 3).

The histograms and only differ in entries that all have a count of in one of the histograms while they all have a count of in the other. accounts for the event where any such counter exceeds the threshold because the distributions are identical for the shared support. The probability of this event is increasing in the number of different elements between and and therefore, the worst case happens for neighboring datasets such that and . Note that this bound also hold when zero counters in are non-zero in . We focus on one direction because the proof is almost identical for the symmetric case. We bound the probability of outputting any entries from , that is for at least one . The bound for follows from setting in Lemma 10. In Section B.1, we show how to directly compute the required threshold based on the result in Theorem 11.

3.2 Tighter Analysis

Next, we carry out a more careful analysis that considers all elements from the sensitivity space similar to [24]. As discussed above, we cannot directly translate the analysis by Wilkins et al. because they rely on independence between each entry. Due to space constraints, we refer the full proof to the full version of the paper [17], and we only give a short intuition behind the theorem here.

Theorem 12 (Tighter Analysis).

First define , , and . Then Algorithm 2 with parameters , , and satisfies -differential privacy for -sparse monotonic histograms, where

The result relies on a case by case analysis where each term in the maximum corresponds to a specific difference between neighboring histograms. The first two terms covers cases when we only have to consider either the infinite privacy loss event or the Gaussian noise, respectively. The remaining terms cover the differences when we have to account for both Gaussian noise and the threshold similar to case c) in Figure 1. The first of the internal maximum covers the cases where and , while the second covers the cases where and . For each case in our analysis, we split up the impact from Gaussian noise and the threshold. Together, these cases cover all elements in the sensitivity space for -sparse monotonic histograms.

4 Top-k Counting Queries

The privacy guarantees of Algorithm 2 are conditioned on the histogram being -sparse for all datasets. Here we present a technique when we do not have this guarantee. Specifically, we consider the setting when the input is a dataset where . We want to release a private estimate of , and can have any number of non-zero entries. We use superscript to denote the elements of the histogram in descending order with ties broken arbitrarily such that . This setting is studied in a line of work for Private top-k selection: [8, 23, 22, 19, 2, 26].

Our algorithm in this setting relies on a simple pre-processing step. We first find the value of the ’th largest entry in the histogram. We then subtract that value from all entries in the histogram and remove negative counts. This gives us a new histogram which we use as input for Algorithm 2. We show that this histogram is both -sparse and monotonic which implies that the mechanism has the same privacy guarantees as Algorithm 2.

Lemma 13.

The function in Algorithm 3 produces -sparse monotonic histograms.

Proof.

The proof is in Appendix B.3.

Since Algorithm 3 simply returns the output of running Algorithm 2 with a -sparse monotonic histogram it has the same privacy guarantees.

Corollary 14.

The privacy guarantees specified by Theorems 11 and 12 hold for Algorithm 3.

Algorithm 3 introduces bias when subtracting from each counter as a pre-processing step. If we have access to a private estimate of we can add it to all counters of the output as post-processing. Since this estimate would be used for multiple counters, similar to , we might want to use additional privacy budget to get a more accurate estimate. This can be included directly in the privacy analysis. If we e.g. release the privacy guarantees from Theorem 11 hold if we change to in .

5 Numerical Evaluations

To back up our theoretical claims, we compare the error of the Correlated Stability Histogram against both the add-the-deltas approach [12] and the exact analysis [24] of the uncorrelated GSHM. We consider the error in both privacy analysis approaches for our mechanisms as well (Theorems 11 and 12).

We compare the parameters of the mechanisms when releasing -sparse monotonic histograms. We ran the experiments shown in Figure 3 with the same privacy parameters as in [24]222in turn based on the privacy guarantees of the Facebook URL dataset [20]., which is and . Following their approach, we plot the minimum such that each mechanism satisfies -DP for a given magnitude of noise. Note that our setting of differs from [24], so the experiments are not directly comparable.

Results.

The first plot shown in Figure 3a) resembles the same used in [24, Figure 1 (A)]. In this setting, one can lower the threshold by approximately . Because our technique favors large (we have to scale the noise with instead of ), we also include the case were is small in Figure 3b). The plots show that we make some small improvements even in that case. Even in that case, our looser add-the-deltas analysis for the CSH beats [24]. Note that the values of in Algorithm 1 and Algorithm 2 are not directly comparable, because we add noise twice in Algorithm 2. For a fair comparison, we instead use the total magnitude of noise in the plots. For the CSH we plot the value of . The dotted lines indicated the minimum magnitude of total noise for which GSHM and CSH satisfy -DP.

6 Extensions

The setting in [24] is slightly different from ours. Here we discuss how to adapt our technique to their setting and consider two extensions of the GSHM we did not include in the pseudocode of Algorithm 1.

Additional sparsity threshold.

The mechanism of Wilkins et al. [24] employs a second threshold that allows to filter out infrequent data in a pre-processing step: All counters below are removed before adding noise. The high threshold is then used to remove noisy counters similar to both Algorithms 1 and 2. This generalized setup allows them to account for pre-processing steps that the privacy expert has no control over. Our setting corresponds to the case where , and it is straightforward to incorporate this constraint into our mechanism: For a given , we simply have to replace with the difference between the two thresholds in all theorems. In fact, this situation is very similar to our result in Section 4 where we remove all values below a lower threshold before adding noise.

Aggregator functions.

Wilkins et al. [24],consider a setting where we are given an aggregator function which returns a vector when applied to any dataset .

If any in the privatized released histogram , exceeds the threshold, the aggregator function is applied to all data points where . The aggregated values are then privatized by adding Gaussian noise which shape and magnitude can be set independently of the magnitude of noise added to the count. The privatized aggregate is then released along with . This setup is motivated by group-by database operations where we first want to privately estimate the count of each group together with some aggregates modeled by .

We did not consider aggregate functions in our analysis and instead focused on the classical setting, where we only wanted to privately release a sparse histogram while ignoring zero counters. Wilkins, Kifer, Zhang, and Karrer account for the impact of the aggregator functions in the equivalent of in [24, Theorem 5.4, Corollary 5.4.1].

In short, the effect of aggregators can be accounted for as an increase in the sensitivity using a transformation of the aggregate function. The same technique could be applied to our mechanism. This would give us a similar improvement as Lemma 22 over the standard GSHM. It’s not as clear how the mechanisms compare under the tighter analyses.

The core idea behind the correlated noise can also be used to improve some aggregate queries. This can be used even in settings where we do not want to add correlated noise to the counts. The mechanism of Lebeda uses an estimate of the dataset size to reduce the independent noise added to each query. They spent part of the privacy budget to estimate . However, in our setting, we already have a private estimate for the dataset size for the aggregate query in .

If each query is a sum query where users have a value between and some value , we can reduce the magnitude of noise by a factor of by adding to each sum (It follows from [16, Lemma 3.6]). We can use a similar trick if computes a sum of values in some range . The standard approach would be to add noise with magnitude scaled to (see e.g. [25, Table 1]). Instead, we can recenter values around zero. As an example, contains a sum query with values in . We subtract from each point so that we instead sum values in and reduce the sensitivity from to . As post-processing, we add to the estimate of the new sum. The error then depends on . As with the Correlated Gaussian Mechanism, we gain an advantage over estimating the sum directly if contains multiple such sums because we can reuse the estimate for each sum.

7 From Theory towards Practice: Discrete Gaussian Noise

All mechanisms discussed in this paper achieve privacy guarantees using continuous Gaussian noise which is a standard tool in the differential privacy literature. However, real numbers cannot be represented exactly on computers which makes the implementation challenging. We can instead use the Discrete Gaussian Mechanism [7]. In this section, we modify the technique by Lebeda [16] to make the mechanism compatible with discrete Gaussian noise and then provide privacy guarantees for the discrete analogue to Algorithm 2 using -zCDP. We first list required definitions and the privacy guarantees of the Multivariate Discrete Gaussian:

Definition 15 (Discrete Gaussian Distribution).

Let with . The discrete Gaussian distribution with mean and scale is denoted . It is a probability distribution supported on the integers and defined by .

Definition 16 ([6] Zero-Concentrated Differential Privacy).

Given , a randomized mechanism satisfies -zCDP, if for every pair of neighboring datasets and all it holds that , where denotes the -Rényi divergence between the two distributions and . Furthermore, Zero-Concentrated Differential Privacy is immune to post-processing.

Lemma 17 ([6] zCDP implies approximate DP).

If a randomized mechanism satisfies -zCDP, then is -DP for any and .

Lemma 18 ([7, Theorem 2.13] Multivariate Discrete Gaussian).

Let and . Let satisfy for all neighboring datasets . Define a randomized algorithm by where independently for each . Then satisfies -zCDP.

Next, we present a discrete variant of the Correlated Gaussian Mechanism and prove that it satisfies zCDP. We can then convert the privacy guarantees to approximate differential privacy. We combine this with the add-the-deltas technique for a discrete variant of Algorithm 2. This approach does not give the tightest privacy parameters, but it is significantly simpler than a direct analysis of approximate differential privacy for multivariate discrete Gaussian noise (see [7, Theorem 2.14]). The primary contribution in this section is a simple change that makes the correlated Gaussian mechanism compatible with the discrete Gaussian. We leave providing tighter analysis of the privacy guarantees open for future work.

Lemma 19.

Algorithm 4 satisfies -zCDP where .

Proof.

Fix any pair of neighboring histograms and . We prove the lemma for the case where . The proof is symmetric when .

Construct a new pair of histograms such that for all and . We set for all and finally .

We clearly have that and for all possible as required by Lemma 18. Now, we set for and . We constructed and such that they differ by at most in all entries for any pair of neighboring histograms. We therefore have that

By Lemma 18 we have that for all , where and . The output of can be post-processed such that . Notice that such a post-processing gives us the same output distribution as Algorithm 4 for both and . The algorithm therefore satisfies -zCDP because the -Rényi divergence cannot increase from post-processing by Definition 16.

Note that the scaling step is crucial for the privacy proof. It would not be sufficient to simply replace with . We need to sample noise in discrete steps of length instead of length . Otherwise, the trick of centering the differences between the histograms using the entry as an offset does not work. If we prefer a mechanism that always outputs integers, we can simply post-process the output. Next, we give the privacy guarantees of the Correlated Stability Histogram when using discrete noise.

Lemma 20.

Let and . Then for any we have that:

Proof.

The proof follows the same structure as for Lemma 10. If and then the sums cannot be above . The only difference is that we have to split up the probabilities. In the discrete case the probabilities slightly change when we rescale.

Finally, we can replace the Gaussian noise in Algorithm 2 with discrete Gaussian noise. Using the add-the-deltas approach and the lemmas above we get that.

Theorem 21.

For parameters , , and , consider the mechanism that given a histogram : (1) runs Algorithm 4 with parameters and restricted to the support of (2) removes all noisy counts less than or equal to . Then satisfies -DP for -sparse monotonic histograms, where is such that -zCDP implies -DP and

Proof.

The proof relies on the construction of an intermediate histogram from the proof of Theorem 11. We have that

where the first inequality follows from Lemma 20 and the second inequality follows from Lemma 19. Both inequalities rely on the fact that the histograms are -sparse.

8 Conclusion and Open Problems

We introduced the Correlated Stability Histogram for the setting of -sparse monotonic histograms and provided privacy guarantees using the add-the-deltas approach and a more fine-grained case-by-case analysis. We show that our mechanism outperforms the state-of-the-art – the Gaussian Sparse Histogram Mechanism – and improves the utility by up to a factor of . In addition to various extensions, we enriched our theoretical contributions with a step towards practice by including a version that works with discrete Gaussian noise.

Unlike the previous work [24], our bound in Theorem 12 is not tight. It would be interesting to derive exact bounds for our mechanism as well. Finally, we point out that the uncorrelated GSHM is still preferred in some settings. The CSH requires an upper bound on , whereas GSHM requires a bound on . If we have access to a bound but no sparsity bound, we can apply our technique from Section 4. This approach works well when the dataset is close to -sparse, but if the dataset is large with many high counters the pre-processing step can introduce high error. Furthermore, if histograms are -sparse, but we additionally know that , for some , our improvement factor changes. If , the GSHM has a lower error than the CSH. However, in that setting, it might still be possible to reduce the error using the technique of Joseph and Yu [14]. We leave exploring this regime for future work.

References

- [1] Martin Aumüller, Christian Janos Lebeda, and Rasmus Pagh. Representing sparse vectors with differential privacy, low error, optimal space, and fast access. Journal of Privacy and Confidentiality, 12(2), November 2022. doi:10.29012/jpc.809.

- [2] Mitali Bafna and Jonathan Ullman. The price of selection in differential privacy. In Conference on Learning Theory, pages 151–168. PMLR, 2017. URL: http://proceedings.mlr.press/v65/bafna17a.html.

- [3] Victor Balcer and Salil Vadhan. Differential privacy on finite computers. Journal of Privacy and Confidentiality, 9(2), September 2019. doi:10.29012/jpc.679.

- [4] Borja Balle and Yu-Xiang Wang. Improving the gaussian mechanism for differential privacy: Analytical calibration and optimal denoising. In ICML, volume 80 of Proceedings of Machine Learning Research, pages 403–412. PMLR, 2018. URL: http://proceedings.mlr.press/v80/balle18a.html.

- [5] Mark Bun, Kobbi Nissim, and Uri Stemmer. Simultaneous private learning of multiple concepts. In Madhu Sudan, editor, Proceedings of the 2016 ACM Conference on Innovations in Theoretical Computer Science, Cambridge, MA, USA, January 14-16, 2016, pages 369–380. ACM, 2016. doi:10.1145/2840728.2840747.

- [6] Mark Bun and Thomas Steinke. Concentrated differential privacy: Simplifications, extensions, and lower bounds. In Theory of Cryptography Conference, pages 635–658. Springer, 2016. doi:10.1007/978-3-662-53641-4_24.

- [7] Clement Canonne, Gautam Kamath, and Thomas Steinke. The discrete gaussian for differential privacy. Journal of Privacy and Confidentiality, 12(1), July 2022. doi:10.29012/jpc.784.

- [8] David Durfee and Ryan M. Rogers. Practical differentially private top-k selection with pay-what-you-get composition. In Hanna M. Wallach, Hugo Larochelle, Alina Beygelzimer, Florence d’Alché-Buc, Emily B. Fox, and Roman Garnett, editors, Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, December 8-14, 2019, Vancouver, BC, Canada, volume 32, pages 3527–3537, Red Hook, NY, USA, 2019. Curran Associates Inc. URL: https://proceedings.neurips.cc/paper/2019/hash/b139e104214a08ae3f2ebcce149cdf6e-Abstract.html.

- [9] Cynthia Dwork, Krishnaram Kenthapadi, Frank McSherry, Ilya Mironov, and Moni Naor. Our data, ourselves: Privacy via distributed noise generation. In Advances in Cryptology - EUROCRYPT 2006, 25th Annual International Conference on the Theory and Applications of Cryptographic Techniques, St. Petersburg, Russia, May 28 - June 1, 2006, Proceedings, volume 4004 of Lecture Notes in Computer Science, pages 486–503. Springer, 2006. doi:10.1007/11761679_29.

- [10] Cynthia Dwork, Frank McSherry, Kobbi Nissim, and Adam Smith. Calibrating noise to sensitivity in private data analysis. In Theory of Cryptography: Third Theory of Cryptography Conference, TCC 2006, New York, NY, USA, March 4-7, 2006. Proceedings 3, pages 265–284. Springer, 2006. doi:10.1007/11681878_14.

- [11] Cynthia Dwork and Aaron Roth. The algorithmic foundations of differential privacy. Foundations and Trends in Theoretical Computer Science, 9(3-4):211–407, 2014. doi:10.1561/0400000042.

- [12] Google Anonymization Team. Delta for thresholding. https://github.com/google/differential-privacy/blob/main/common_docs/Delta_For_Thresholding.pdf, 2020. [Online; accessed 8-December-2024].

- [13] Michaela Gotz, Ashwin Machanavajjhala, Guozhang Wang, Xiaokui Xiao, and Johannes Gehrke. Publishing search logs—a comparative study of privacy guarantees. IEEE Transactions on Knowledge and Data Engineering, 24(3):520–532, 2012. doi:10.1109/TKDE.2011.26.

- [14] Matthew Joseph and Alexander Yu. Some constructions of private, efficient, and optimal k-norm and elliptic gaussian noise. In The Thirty Seventh Annual Conference on Learning Theory, June 30 - July 3, 2023, Edmonton, Canada, volume 247 of Proceedings of Machine Learning Research, pages 2723–2766. PMLR, 2024. URL: https://proceedings.mlr.press/v247/joseph24a.html.

- [15] Aleksandra Korolova, Krishnaram Kenthapadi, Nina Mishra, and Alexandros Ntoulas. Releasing search queries and clicks privately. In WWW, pages 171–180. ACM, 2009. doi:10.1145/1526709.1526733.

- [16] Christian Janos Lebeda. Better gaussian mechanism using correlated noise. In 2025 Symposium on Simplicity in Algorithms (SOSA), pages 119–133, 2025. doi:10.1137/1.9781611978315.9.

- [17] Christian Janos Lebeda and Lukas Retschmeier. The correlated gaussian sparse histogram mechanism, 2024. doi:10.48550/arXiv.2412.10357.

- [18] Christian Janos Lebeda and Jakub Tetek. Better differentially private approximate histograms and heavy hitters using the misra-gries sketch. In PODS, pages 79–88. ACM, 2023. doi:10.1145/3584372.3588673.

- [19] Frank McSherry and Kunal Talwar. Mechanism design via differential privacy. In 48th Annual IEEE Symposium on Foundations of Computer Science (FOCS’07), pages 94–103. IEEE, 2007. doi:10.1109/FOCS.2007.41.

- [20] Solomon Messing, Christina DeGregorio, Bennett Hillenbrand, Gary King, Saurav Mahanti, Zagreb Mukerjee, Chaya Nayak, Nate Persily, Bogdan State, and Arjun Wilkins. Facebook Privacy-Protected Full URLs Data Set, 2020. doi:10.7910/DVN/TDOAPG.

- [21] Jayadev Misra and David Gries. Finding repeated elements. Sci. Comput. Program., 2(2):143–152, 1982. doi:10.1016/0167-6423(82)90012-0.

- [22] Gang Qiao, Weijie J. Su, and Li Zhang. Oneshot differentially private top-k selection. In Marina Meila and Tong Zhang, editors, Proceedings of the 38th International Conference on Machine Learning, ICML 2021, 18-24 July 2021, Virtual Event, volume 139 of Proceedings of Machine Learning Research, pages 8672–8681. PMLR, 2021. URL: http://proceedings.mlr.press/v139/qiao21b.html.

- [23] Bengt Rosén. Asymptotic theory for order sampling. Journal of Statistical Planning and Inference, 62(2):135–158, 1997. doi:10.1016/S0378-3758(96)00185-1.

- [24] Arjun Wilkins, Daniel Kifer, Danfeng Zhang, and Brian Karrer. Exact privacy analysis of the gaussian sparse histogram mechanism. Journal of Privacy and Confidentiality, 14(1), February 2024. doi:10.29012/jpc.823.

- [25] Royce J. Wilson, Celia Yuxin Zhang, William Lam, Damien Desfontaines, Daniel Simmons-Marengo, and Bryant Gipson. Differentially private SQL with bounded user contribution. Proc. Priv. Enhancing Technol., 2020(2):230–250, 2020. doi:10.2478/popets-2020-0025.

- [26] Hao Wu and Hanwen Zhang. Faster differentially private top-k selection: A joint exponential mechanism with pruning. In Amir Globersons, Lester Mackey, Danielle Belgrave, Angela Fan, Ulrich Paquet, Jakub M. Tomczak, and Cheng Zhang, editors, Advances in Neural Information Processing Systems 38: Annual Conference on Neural Information Processing Systems 2024, NeurIPS 2024, Vancouver, BC, Canada, December 10 - 15, 2024. NeurIPS, 2024. URL: http://papers.nips.cc/paper_files/paper/2024/hash/82f68b38747c406672f7f9f6bab86775-Abstract-Conference.html.

Appendix A Table of Symbols and Abbreviations

The following Table 1 summarizes the symbols and abbreviations used throughout the paper.

| Symbol | Description |

|---|---|

| GSHM | Gaussian Sparse Histogram Mechanism |

| CSH | Correlated Stability Histogram |

| Any aggregate query | |

| datasets on domain where | |

| Neighboring datasets | |

| Histogram | |

| ’th largest item in | |

| -norm of vector (number of non-zeroes) | |

| Support of | |

| Privacy Parameters | |

| Threshold is | |

| from Gaussian noise | |

| ; | Probability of infinite privacy loss, for counts |

| -centered normal distribution | |

| -centered discrete normal distribution [7] | |

| CDF of the normal distribution; inverse CDF |

Appendix B Omitted Proofs of Lemmas

We omitted the proofs of several lemmas from the main body to fit our main contributions within the recommended page limit. Here, we restate the lemmas and present the proofs.

B.1 Computing the threshold for add-the-deltas

Here we state a short lemma on how to compute the threshold for Algorithm 2 based on the result in Theorem 11.

Lemma 22 (Computing the threshold ).

For a fixed privacy budget and parameters and , the add-the-deltas technique requires where is the inverse CDF of the normal distribution and is defined as in Theorem 11.

Proof.

Observe . Taking on both sides yields . Multiplying by proves the claim.

B.2 Proof of Lemma 10

See 10

Proof.

We first give a bound for and the ’s independently, which is sufficient for us to bound the probability of this event. First, observe that the uncorrelated terms are independent, and we are interested in bounding the maximum of them and hence:

Similarly, we have for that

Notice that if both and hold then . As such, we can prove that the lemma holds since

| (3) | ||||

| (4) | ||||

where step (3) holds by a union bound and step (4) holds because the random variables and are independent.

B.3 Proof of Lemma 13

See 13

Proof.

By Definition 9 we have to show two properties of . It must hold for any that and for any neighboring pair we have or .

The sparsity claim is easy to see. Any counter that is not strictly larger that is removed in line 4. By definition of , there are at most such entries.

To prove the monotonicity property, we must show that counters in either all increase or all decrease by at most one. We only give the proof for the case where is constructed by adding one data point to . The proof is symmetric for the case where is created by removing one data point from .

We first partition into three sets: Let , and . Because we have for all , also . Note that the ’th largest entry might not represent the same element, but we only care about the value.

Consider the case where . We see that for all , and for all we have because we subtract the same value and increment by at most one. Some elements might now be exactly one larger than in , but still by definition. Thus we have that .

Now, consider the case where . No element can become larger or equal to the new ’th largest element, so still . Elements in get reduced by at most one for , because they either are increased together with the and then , or they stay the same in which case . One can also see that for , , depending on whether or not they increase together with . As such we have for all . Therefore, .