Differential Privacy Under Multiple Selections

Abstract

We consider the setting where a user with sensitive features wishes to obtain a recommendation from a server in a differentially private fashion. We propose a “multi-selection” architecture where the server can send back multiple recommendations and the user chooses one from these that matches best with their private features. When the user feature is one-dimensional – on an infinite line – and the accuracy measure is defined w.r.t some increasing function of the distance on the line, we precisely characterize the optimal mechanism that satisfies differential privacy. The specification of the optimal mechanism includes both the distribution of the noise that the user adds to its private value, and the algorithm used by the server to determine the set of results to send back as a response. We show that Laplace is an optimal noise distribution in this setting. Furthermore, we show that this optimal mechanism results in an error that is inversely proportional to the number of results returned when the function is the identity function.

Keywords and phrases:

Differential Privacy, Mechanism Design and Multi-SelectionFunding:

Aleksandra Korolova: Supported by NSF award CNS-2344925.Copyright and License:

2012 ACM Subject Classification:

Security and privacy Privacy-preserving protocolsAcknowledgements:

SS is grateful to Sanath Kumar Krishnamurthy for the helpful discussion on the weak-duality proof and to Mohak Goyal for an insightful conversation on the generalized privacy definition in Appendix A.1.Editors:

Mark BunSeries and Publisher:

Leibniz International Proceedings in Informatics, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

Leibniz International Proceedings in Informatics, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

1 Introduction

Consider a user who wants to issue a query to an online server (e.g. to retrieve a search result or an advertisement), but the query itself reveals private information about the user. One commonly studied approach to protect user privacy from the server in this context is for the user to send a perturbed query, satisfying differential privacy under the local trust model [13]. However, since the query itself is changed from the original, the server may not be able to return a result that is very accurate for the original query. Our key observation is that in many situations such as search or content recommendations, the server is free to return many results, and the user can choose the one that is the most appropriate, without revealing the choice to the server. In fact, if the server also returns a model for evaluating the quality of these results for the user, then this choice can be made by a software intermediary such as a client running on the user’s device. This software intermediary can also be the one that acts as the user’s privacy delegate and is the one ensuring local differential privacy.

We call this, new for the differential privacy (DP) literature system architecture, the “Multi-Selection” approach to privacy, and the key question we ask is: What is the precise trade-off that can be achieved between the number of returned results and quality under a fixed privacy goal? Of course, had the server simply returned all possible results, there would have been no loss in quality since the client could choose the optimal result. However, this approach is infeasible due to computation and communication costs, as well as due to potential server interest in not revealing proprietary information. We, therefore, restrict the server to return results for small , and study the trade-off between and the quality when the client sends privacy-preserving queries. Our algorithmic design space consists of choosing the client’s algorithm and the server’s algorithm, as well as the space of signals they will be sending.

Although variants of this approach have been suggested (particularly in cryptographic contexts [58]), to the best of our knowledge, no formal results are known on whether this approach does well in terms of reducing the “price of differential privacy”, or how to obtain the optimal privacy-quality trade-offs. Given the dramatic increase in network bandwidth and on-device compute capabilities of the last several decades, this approach has the potential to offer an attractive pathway to making differential privacy practical for personalized queries.

At a high level, in addition to the novel multi-selection framework for differential privacy, our main contributions are two-fold. First, under natural assumptions on the privacy model and the latent space of results and users, we show a tight trade-off between utility and number of returned results via a natural (yet a priori non-obvious) algorithm, with the error perceived by a user decreasing as for results. Secondly, at a technical level, our proof of optimality is via a dual fitting argument and is quite subtle, requiring us to develop a novel duality framework for linear programs over infinite dimensional function spaces, with constraints on both derivatives and integrals of the variables. This framework may be of independent interest for other applications where such linear programs arise.

1.1 System Architecture and Definitions

For simplicity and clarity, we assume that user queries lie on the real number line111We relax this one-dimensional assumption in Appendix A and provide a more detailed discussion in [41, Appendix B.6]. Thus, we denote the set of users by . When referring to a user , we imply a user with a query value . Let represent the set of possible results, and let denote the function that maps user queries to optimal results. In practical applications, the function OPT may represent a machine learning model, such as a deep neural network or a random forest. This function OPT is available to the server but remains unknown to the users.

1.1.1 Privacy

Definition 1 (adapted from [26, 49]).

Let be a desired level of privacy and let be a set of input data and be the set of all possible responses and be the set of all probability distributions (over a sufficiently rich -algebra of given by ). A mechanism is -differentially private if for all and :

We argue that a more relevant and justifiable notion of differential privacy in our context is geographic differential privacy [4, 1] (GDP), which allows the privacy guarantee to decay with the distance between users. Under GDP a user is indistinguishable from “close by” users in the query space, while it may be possible to localize the user to a coarser region in space. This notion has gained widespread adoption for anonymizing location data. In our context, it reflects, for instance, the intuition that the user is more interested in protecting the specifics of a medical query they are posing from the search engine rather than protecting whether they are posing a medical query or an entertainment query. GDP is thus appropriate in scenarios such as search. In [57], we demonstrate how geographical differential privacy applies to movie recommendation systems using distance between user feature vectors. We thus restate the formal definition of GDP from [49] and use it in the rest of the work.

Definition 2 (adapted from [49]).

Let be a desired level of privacy and let (a normed vector space) be a set of input data and be the set of all possible responses and be the set of all probability distributions (over a sufficiently rich -algebra of given by ). A mechanism is -geographic differentially private if for all and :

1.1.2 “Multi-Selection” Architecture

Our “multi-selection” system architecture (shown in Figure 1) relies on the server returning a small set of results in response to the privatized user input, with the on-device software intermediary deciding, unknown to the server, which of these server responses to use.

The mechanisms we consider in this new architecture consists of a triplet :

-

1.

A set of signals that can be sent by users.

-

2.

The actions of users, , which involves a user sampling a signal from a distribution over signals. We use for to denote the distribution of the signals sent by user which is supported on . The set of actions over all users is given by .

-

3.

The distribution over actions of the server, . When the server receives a signal , it responds with , which characterizes the distribution of the results that the server sends (it is supported in ). We denote the set of all such server actions by .222We treat this distribution to be supported on instead of -sized subset of for ease of mathematical typesetting.

Our central question is to find the triplet over that satisfies -GDP constraints on while ensuring the best-possible utility or the smallest-possible disutility.

1.1.3 The disutility model: Measuring the cost of privacy

We now define the disutility of a user from a result . One approach would be to look at the difference between (or the ratio) of the cost of the optimum result for and the cost of the result returned by a privacy-preserving algorithm. However, we are looking for a general framework, and do not want to presume that this cost measure is known to the algorithm designer, or indeed, that it even exists. Hence, we will instead define the disutility of as the amount by which would have to be perturbed for the returned result to become optimum; this only requires a distance measure in the space in which resides, which is needed for the definition of the privacy guarantees anyway. For additional generality, we also allow the disutility to be any increasing function of this perturbation, as defined below.

Definition 3.

The disutility of a user from a result w.r.t some continuously increasing function is given by333If no such exists then the disutility is as infimum of a null set is .

| (1) |

We allow any function that satisfies the following conditions:

| is a continuously increasing function satisfying . | (2) | ||

| There exists s.t. is Lipschitz continuous in . | (3) |

The first condition (2) captures the intuition that disutility for the optimal result is zero. The second condition (3), which bounds the growth of by an exponential function, is a not very restrictive condition required for our mathematical analysis. Quite surprisingly, to show that our multi-selection framework provides a good trade-off in the above model for every as defined above, we only need to consider the case where the is the identity function. The following example further motivates our choice of the disutility measure:

Example 4.

Suppose, one assumes that the result set and the user set are embedded in the same metric space . This setup is similar to the framework studied in the examination of metric distortion of ordinal rankings in social choice [5, 20, 40, 48]. Using triangle inequality, one may argue that where is the optimal result for user (i.e. ) and is the optimal result for user .444This follows since . Thus, gives an upper bound on the disutility of user from result .

1.1.4 Restricting users and results to the same set

For ease of exposition, we study a simplified setup restricting the users and results to the same set . Specifically, since , our simplified setup restricts the users and results to the same set where the disutility of user from a result is given by . Our results extend to the model where the users and results lie in different sets (see Appendix A.4). In the simplified setup, while we use to denote the result, what we mean is that the server sends back .

1.1.5 Definition of the cost function in the simplified setup

We use to convert a vector to a set of at most elements, formally . Recall from Section 1.1.4, the disutility of user from a result in the simplified setup may be written as

| (4) |

Since we restrict the users and results to the same set, is supported on k for every . Thus, the cost for a user from the mechanism is given by

| (5) |

where the expectation is taken over the randomness in the action of user and the server.

We now define the cost of a mechanism by supremizing over all users . Since, we refrain from making any distributional assumptions over the users, supremization rather than mean over the users is the logical choice.

| (6) |

We use to denote the identity function, i.e. for every and thus define the cost function when is an identity function as follows:

| (7) |

Recall our central question is to find the triplet over that ensures the smallest possible disutility / cost while ensuring that satisfies -geographic differential privacy. We denote the value of this cost by and it is formally defined as

| (8) |

,

.

1.2 Our results and key technical contributions

For any satisfying (2) and (3) when the privacy goal is -GDP our main results are:

-

The optimal action for a user , is to add Laplace noise555We use to denote a Laplace distribution of scale centred at . Formally, a distribution has its probability density function given by . of scale to its value (result stated in Theorem 19 and proof sketch described in Sections 2.1, 2.2 and 2.3). Further, we emphasize that the optimality of adding Laplace noise is far from obvious666In fact, only a few optimal DP mechanisms are known [39, 43, 46, 25], and it is known that for certain scenarios, universally optimal mechanisms do not exist [17].. For instance, when users and results are located on a ring, Laplace noise is not optimal (see appendix A.2 for an analysis when ).

Although we do not assume that distributions in admit valid density functions, we prove in Lemma 13 that it suffices to consider only bounded density functions using ideas from mollifier theory [36]. In Appendix A, we generalize our results by allowing user queries to lie on a higher dimensional space and studying -geographic differential privacy (defined in Definition 21) for an increasing convex differentiable function . Formally, our main results can be stated as:

Theorem 5 (corresponds to Theorem 19 and Theorem C.3 in [41]).

For -geographic differential privacy, adding Laplace noise, that is, user sends a signal drawn from distribution , is one of the optimal choices of for users. Further, when , we have and the optimal mechanism (choice of actions of users and server) itself can be computed in closed form. For a generic , the optimal server action may be computed recursively.

In addition to our overall framework and the tightness of the above theorem, a key contribution of our work is in the techniques developed. At a high level, our proof proceeds via constructing an infinite dimensional linear program to encode the optimal algorithm under DP constraints. We then use dual fitting to show the optimality of Laplace noise. Finally, the optimal set of results being computable by a simple dynamic program given such noise.

The technical hurdles arise because the linear program for encoding the optimal mechanism is over infinite-dimensional function spaces with linear constraints on both derivatives and integrals, since the privacy constraint translates to constraints on the derivative of the density encoding the optimal mechanism, while capturing the density itself requires an integral. We call it Differential Integral Linear Program (DILP); see Section 2.2. However, there is no weak duality theory for such linear programs in infinite dimensional function spaces, such results only existing for linear programs with integral constraints [3]. We, therefore, develop a weak duality theory for DILPs (see Section 2.2 with a detailed proof in [41, Appendix C.6]), which to the best of our knowledge is novel. The proof of this result is quite technical and involves a careful application of Fatou’s lemma [56] and the monotone convergence theorem to interchange integrals with limits, and integration by parts to translate the derivative constraints on the primal variables to derivatives constraint on the dual variables. We believe our weak duality framework is of independent interest and has broader implications beyond differential privacy; see [41, Appendix B.7] for two such applications.

1.3 Related Work

1.3.1 Differential Privacy

The notion of differential privacy in the trusted curator model is introduced in [27]; see [29] for a survey of foundational results in this model. The idea of local differential privacy dates back to [47], and the algorithms for satisfying it have been studied extensively following the deployment of DP in this model by Google [31] and Apple [6]; see, e.g. [18, 21, 60, 11] and Bebensee [13] for a survey. Geographic differential privacy was introduced by [4] and has gained widespread adoption for location data. Since GDP utilizes the trust assumptions of the local model, it is only a slight relaxation of the traditional local model of DP.

1.3.2 Multi-Selection

An architecture for multi-selection, particularly with the goal of privacy-preserving advertising, was introduced in Adnostic by [58]. Their proposal was to have a browser extension that would run the targeting and ad selection on the user’s behalf, reporting to the server only click information using cryptographic techniques. Similarly, Privad by [42] propose to use an anonymizing proxy that operates between the client that sends broad interest categories to the proxy and the advertising broker, that transmits all ads matching the broad categories, with the client making appropriate selections from those ads locally. Although both Adnostic and Privad reason about the privacy properties of their proposed systems, unlike our work, neither provides DP guarantees.

Two lines of work in the DP literature can be seen as related to the multi-selection paradigm – the exponential mechanism (see e.g. [52, 16, 50]) and amplification by shuffling (see e.g. [30, 22, 33]). The exponential mechanism focuses on high-utility private selection from multiple alternatives and is usually deployed in the Trusted Curator Model (TCM). Amplification by shuffling analyzes the improvement in the DP guarantees that can be achieved if the locally privatized data is shuffled by an entity before being shared with the server. As far as we are aware, neither of the results from these lines of work can be directly applied to our version of multi-selection, although combining them is an interesting avenue for future work.

Several additional directions within DP research can be viewed as exploring novel system architectures in order to improve privacy-utility trade-offs, e.g., using public data to augment private training [53, 10], combining data from TCM and LM models [8, 7, 14], and others. Our proposed architecture is distinct from all of these. Finally, our work is different from how privacy is applied in federated learning [38] – there, the goal is for a centralized entity to be able to build a machine learning model based on distributed data; whereas our goal is to enable personalized, privacy-preserving retrieval from a global ML model.

The closest work we are aware of is Apple’s very recent system using multiple options presented to users to improve its generation of synthetic data using DP-user feedback [55].

1.3.3 Optimal DP mechanisms

To some extent, previous work in DP can be viewed as searching for the optimal DP mechanism, i.e. one that would achieve the best possible utility given a fixed desired DP level. Only a few optimal mechanisms are known [39, 43, 46, 25], and it is known that for certain scenarios, universally optimal mechanisms do not exist [17]. Most closely related to our work is the foundational work of [39] that derives the optimal mechanism for counting queries via a linear programming formulation; the optimal mechanism turns out to be the discrete version of the Laplace mechanism. Its continuous version was studied in [34], where the Laplace mechanism was shown to be optimal. These works focused on the trusted curator model of differential privacy unlike the local trust model which we study.

In the local model, [49] show Laplace noise to be optimal for -geographic DP. Their proof relies on formulating an infinite dimensional linear program over noise distributions and looking at its dual. Although their proof technique bears a slight resemblance to ours, our proof is different and intricate since it involves the minimisation over the set of returned results in the cost function. A variation of local DP is considered in [37], in which DP constraints are imposed only when the distance between two users is smaller than a threshold. For that setting, the optimal noise is piece-wise constant. However, our setting of choosing from multiple options makes the problems very different.

1.3.4 Homomorphic encryption

Recent work of [45] presents a private web browser where users submit homomorphically encrypted queries, including the cluster center and search text . The server computes the cosine similarity between and every document in cluster , allowing the user to select the index of the most similar document. To retrieve the URL, private information retrieval [24] is used. This approach requires making all cluster centers public and having the user identify the closest , which differs significantly from our multi-selection model.

Additionally, homomorphic encryption for machine learning models [51, 23] faces practical challenges, such as high computational overhead and reduced utility, limiting real-world deployment. While our multi-selection framework provides a weaker privacy guarantee than homomorphic encryption, it avoids requiring the recommendation service to publish its full data or index, which in certain context may be viewed as proprietary information by the service. We thus argue that homomorphic encryption-based and multi-selection based approaches offer distinct trade-offs, making them complementary tools for private recommendation systems.

1.3.5 Related work on duality theory for infinite dimensional LPs

Strong duality is known to hold for finite dimensional linear programs [19]. However, for infinite dimensional linear programs, strong duality may not always hold (see [3] for a survey). Sufficient conditions for strong duality are presented and discussed in [59, 12] for generalized vector spaces. A class of linear programs (called SCLPs) over bounded measurable function spaces have been studied in [54, 15] with integral constraints on the functions. However, these works do not consider the case with derivative constraints on the functional variables. In [49, Equation 7] a linear program with derivative and integral constraints (DILPs) is formulated to show the optimality of Laplace noise for geographic differential privacy. However, their duality result does not directly generalize to our case since our objective function and constraints are far more complex as it involves minimization over a set of results.

We thus need to reprove the weak-duality theorem for our DILPs, the proof of which is technical and involves a careful application of integration by parts to translate the derivative constraint on the primal variable to a derivative constraint on the dual variable. Further, we require a careful application of Fatou’s lemma [56] and monotone convergence theorem to exchange limits and integrals. Our weak duality result generalizes beyond our specific example and is applicable to a broader class of DILPs. Furthermore, we discuss two problems (one from job scheduling [2] and one from control theory [32]) in [41, Appendix B.7] which may be formulated as a DILP.

2 Characterizing the Optimal Mechanism: Proof Sketch of Theorem 5

We now present a sketch of the proof of Theorem 5; the full proof involving the many technical details is presented in the Appendix. We first show that for the sake of analysis, the server can be removed by making the signal set coincide with result sets (Section 2.1) assuming that the function OPT is publicly known both to the user and server.777One should note that this removal is just for analysis and the server is needed since the OPT function is unknown to the user. Then in Section 2.2 we construct a primal linear program for encoding the optimal mechanism, and show that it falls in a class of infinite dimensional linear programs that we call DILPs, as defined below.

Definition 6.

Differential-integral linear program (DILP) is a linear program over Riemann integrable function spaces involving constraints on both derivatives and integrals.

A simple example is given in Equation (9). Observe that in equation (9), we define to be a continuously differentiable function.

| (9) |

We next construct a dual DILP formulation in Section 2.2, and show that the formulation satisfies weak duality. As mentioned before, this is the most technically intricate result since we need to develop a duality theory for DILPs. We relegate the details of the proof here to the Appendix.

Next, in Section 2.3, we show the optimality of the Laplace noise mechanism via dual-fitting, i.e., by constructing a feasible solution to DILP with objective identical to that of the Laplace noise mechanism. Finally, in Section 2.4, we show how to find the optimal set of results given Laplace noise. We give a construction for general functions as well as a closed form for the canonical case of . This establishes the error bound and concludes the proof of Theorem 5.

2.1 Restricting the signal set to

We first show that it is sufficient to consider a more simplified setup where the user sends a signal set in k and the server sends back the results corresponding to the signal set. Since we assumed users and results lie in the same set, for the purpose of analysis, this removes the server from the picture. To see this, note that for the setting discussed in Section 1.1.4, the optimal result for user is the result itself, where when we refer to “result ”, we refer to the result .

Thus, this approach is used only for a simplified analysis as the OPT function is not known to the user and our final mechanism will actually split the computation between the user and the server.

Therefore a user can draw a result set directly from the distribution over the server’s action and send the set as the signal. The server returns the received signal, hence removing it from the picture. In other words, it is sufficient to consider mechanisms in , which are in the following form (corresponding to Theorem 8).

-

1.

User reports that is drawn from a distribution over k.

-

2.

The server receives and returns .

We give an example to illustrate this statement below.

Example 7.

Fix a user and let and and consider a mechanism where user sends and with equal probability. The server returns on receiving signal , with the following probability.

Then the probability that receives is 0.3 and it receives is 0.7. Now consider another mechanism with the same cost satisfying differential privacy constraints, where , with user sending signal and with probabilities 0.3 and 0.7. When the server receives , it returns a.

We show the new mechanism satisfies differential privacy assuming the original mechanism satisfies it. As a result, we can assume when finding the optimal mechanism.

The following theorem states that it is sufficient to study a setup removing the server from the picture and consider mechanisms in set of probability distributions supported on k satisfying -geographic differential privacy ( as defined in Section 1.1.5).

Theorem 8 (detailed proof in Appendix C.1 of [41]).

It is sufficient to remove the server from the cost function and pretend the user has full-information. Mathematically, it maybe stated as follows.

| (10) |

Proof Sketch.

Fix . For and , let be the probability that the server returns a set in to user . Then for any , we can show that using post-processing theorem, and thus because is a distribution on k for any . At the same time,

2.2 Differential integral linear programs to represent and a weak duality result

Recall the definition of DILP from Definition 6. In this section, we construct an infimizing DILP to represent the constraints and the objective in the cost function . We then construct a dual DILP , and provide some intuition for this formulation. The proof of weak duality is our main technical result, and its proof is defered to the Appendix.

2.2.1 Construction of DILP to represent cost function

We now define the cost of a mechanism which overloads the cost definition in Equation 6

Definition 9.

Cost of mechanism : We define the cost of mechanism P as

| (11) |

Observe that in Definition 9 we just use instead of the tuple as in Equation (6). Observe that it is sufficient to consider in the cost since simulates the entire combined action of the user and the server as shown in Theorem 10 in Section 2.1. We now define the notion of approximation using cost of mechanism by a sequence of mechanisms which is used in the construction of DILP .

Definition 10.

Arbitrary cost approximation: We call mechanisms an arbitrary cost approximation of mechanisms if

Now we define the DILP to characterise in Equation (10). In this formulation, the variables are , which we assume are non-negative Riemann integrable bounded functions. These variables capture the density function .

| (12) |

In DILP , we define and to be the lower and upper partial derivative of at . Now observe that, we use lower and upper derivatives instead of directly using derivatives as the derivatives of a probability density function may not always be defined (for example, the left and right derivatives are unequal in the Laplace distribution at origin).

Note that the DILP involves integrals and thus requires mechanisms to have a valid probability density function, however not every distribution is continuous, and, as a result, may not have a density function (e.g. point mass distributions like defined in Definition 15). Using ideas from mollifier theory [35] we construct mechanisms with a valid probability density function that are an arbitrary good approximation of mechanism P in Lemma 13, hence showing that it suffices to define over bounded, non-negative Reimann integrable functions . We now prove that the DILP constructed above captures the optimal mechanism, in other words, .

Lemma 11.

Let denote the optimal value of DILP (12), then .

To prove this lemma, we show Lemma 12, which relates the last two constraints of the DILP to -geographic differential privacy, and Lemma 13, which shows that an arbitrary cost approximation of mechanism P can be constructed with valid probability density functions.

Lemma 12.

Let have a probability density function given by for every . Then, satisfies -geographic differential privacy iff 888, denote the lower and upper partial derivative w.r.t.

The proof of this lemma ([41, Appendix C.2.1]) proceeds by showing that -geographic differential privacy is equivalent to Lipschitz continuity of in .999We handle the case when the is not defined as the density is zero at some point separately in the proof.

Lemma 13.

(Proven in [41, Appendix C.2.5]) Given any mechanism (satisfying -geographic differential privacy), we can construct a sequence of mechanisms with bounded probability density functions such that is an arbitrary cost approximation of mechanism .

Proof of Lemma 11.

2.2.2 Dual DILP and statement of weak duality theorem

Now, we write the dual of the DILP as the DILP in Equation (13). Observe that, we have the constraint that and is non-negative, (continuous) and is a function i.e. is continuously differentiable in and continuous in v. Thus, we may rewrite the equations as

| (13) |

To get intuition behind the construction of our dual DILP , relate the equations in DILP to the dual variables of DILP as follows. The first equation denoted by , second equation denoted by and the last two equations are jointly denoted by 101010Note that the variable is constructed from the difference of two non-negative variables corresponding to third and fourth equations, respectively. The detailed proof is in [41, Appendix C.6]. The last two terms in the second constraint of DILP are a consequence of the last two equations on DILP and observe that it involves a derivative of the dual variable . The linear constraint on the derivative of the primal variable translates to a derivative constraint on the dual variable by a careful application of integration by parts, discussed in detail in [41, Appendix C.6].

Observe that in our framework we have to prove the weak-duality result as, to the best of our knowledge, existing duality of linear programs in infinite dimensional spaces work for cases involving just integrals. The proof of this Theorem 14 is technical and we defer the details to [41, Appendix C.6].

Theorem 14.

.

2.3 Dual fitting to show the optimality of Laplace noise addition

Before starting this section, we first define a function which characterises the optimal placement of points in to minimise the expected minimum disutility among these points measured with respect to some user sampled from a Laplace distribution. As we shall prove in Theorem 16 that it bounds the cost of the Laplace noise addition mechanism.

| (14) |

In this section, we first define a mechanism in Definition 15 which simulates the action of the server corresponding to the Laplace noise addition mechanism in Section 2.3.1 and show that the cost of Laplace noise addition mechanism is . We finally show the optimality of Laplace noise addition mechanism via dual fitting i.e. constructing a feasible solution to the dual DILP with an objective function in Section 2.3.2.

2.3.1 Bounding cost function by the cost of Laplace noise adding mechanism

We now define the mechanism which corresponds to simulating the action of the server on receiving signal from user . We often call this in short as the Laplace noise addition mechanism.

Definition 15.

The distribution is defined as follows for every .

| (15) | ||||

| (16) |

Equality follows from the fact that for every .

Observe that the server responds with set of points for some so as to minimise the expected cost with respect to some user sampled from a Laplace distribution centred at . We show that the following lemma which states that satisfies -geographic differential privacy constraints and bound by .

Lemma 16 (detailed proof in Appendix C.3 of [41]).

satisfies -geographic differential-privacy constraints i.e. and thus, we have

Proof Sketch.

2.3.2 Obtaining a feasible solution to DILP

We now construct feasible solutions to DILP . For some and , we define

| (17) |

Now define and for every , we consider the following Differential Equation (18) in .

| (18) |

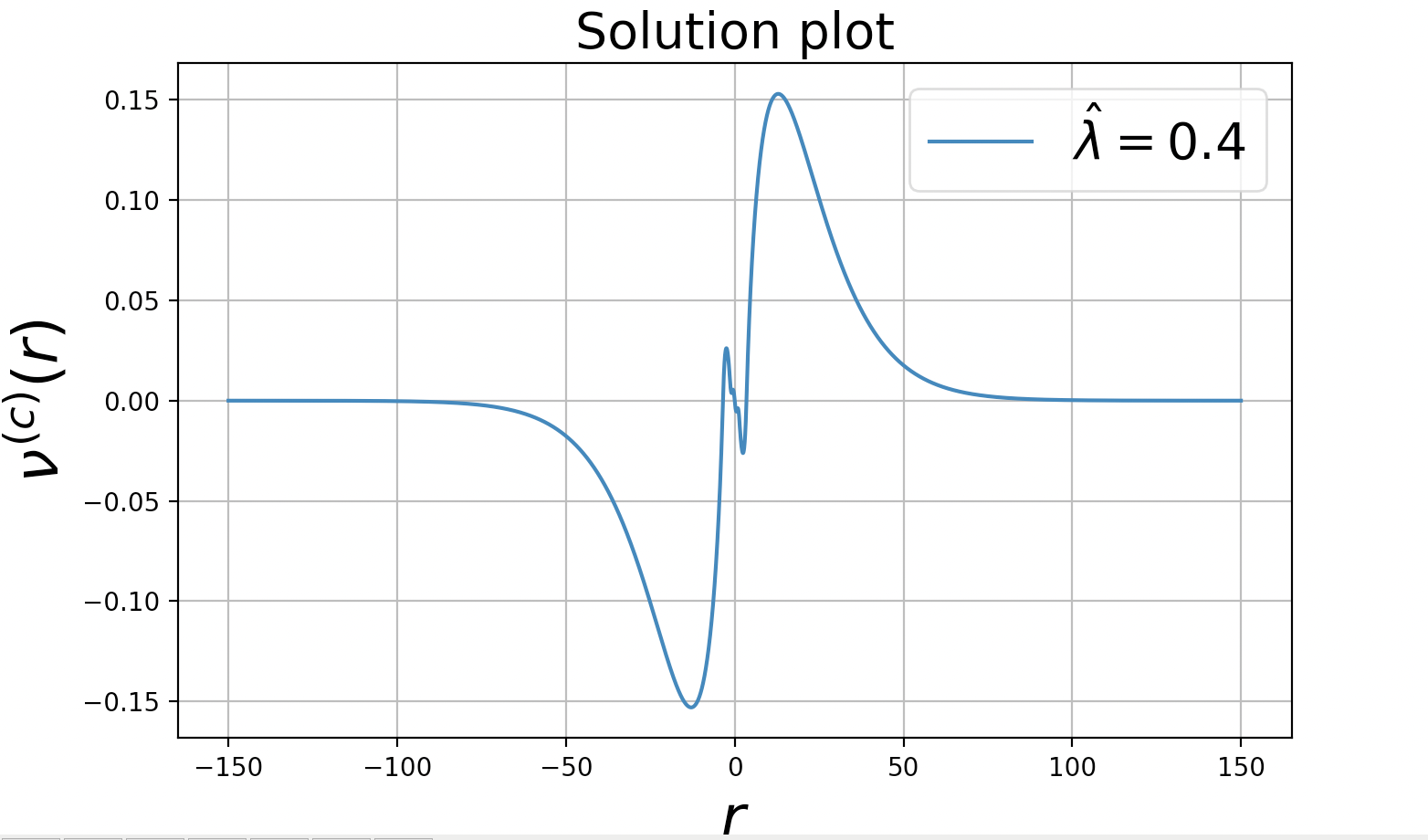

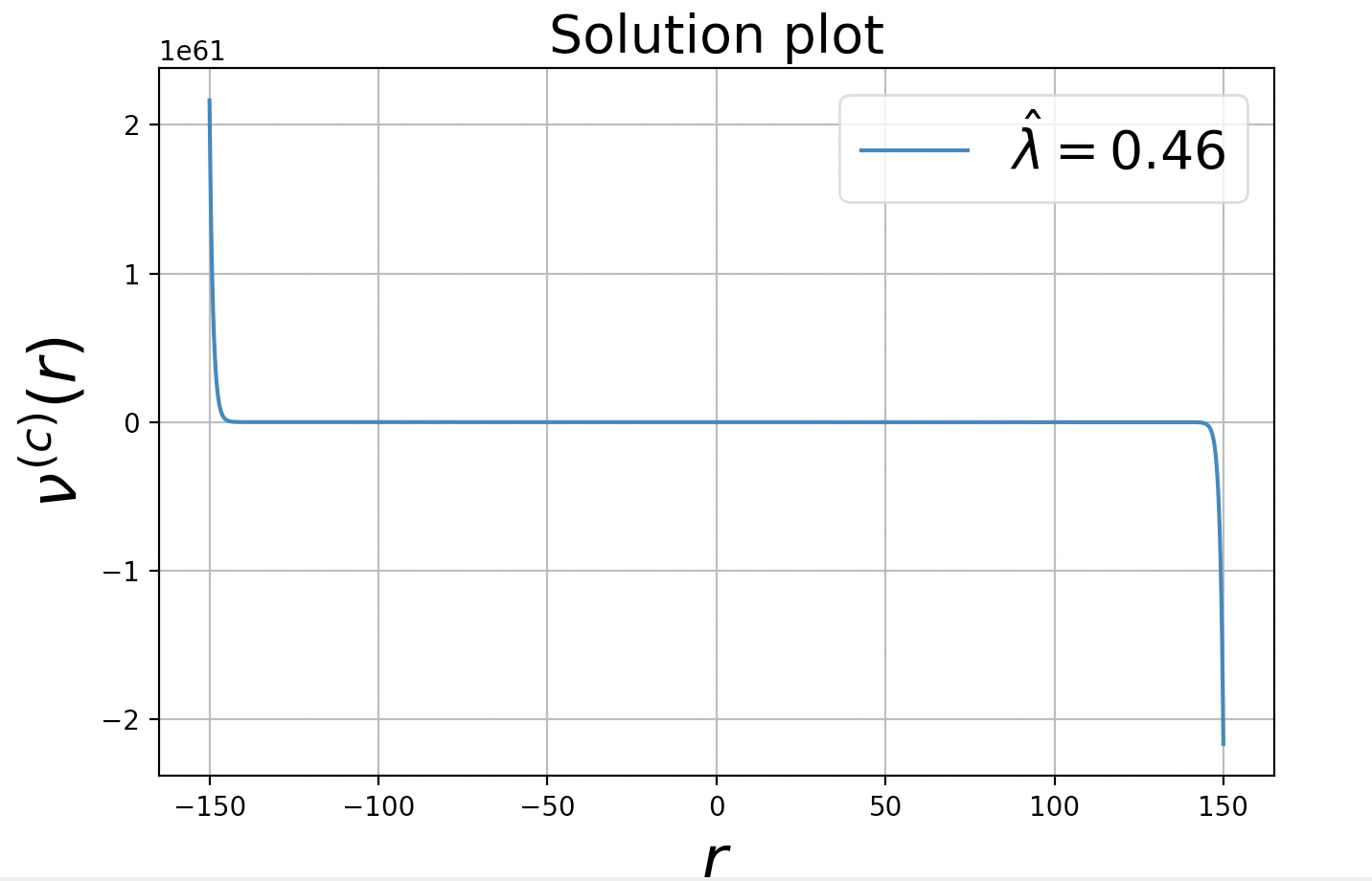

Observe that this equation precisely corresponds to the second constraint of DILP (inequality replaced by equality) with an initial value. We now show that a solution to differential equation (18) exists such that is non-negative for sufficiently large and non-positive for sufficiently small to satisfy the last two constraints of DILP in Lemma 17. Observe that the structure of our differential equation is similar to that in [49, Equation 19]. However, our differential equation has significantly more complexity since we are minimising over a set of points and also our equation has to be solved for every making it more complex.

Lemma 17 (Proof in Appendix C.4.2 of [41]).

Choose and , then equation has a unique solution and there exists satisfying and .

Intuitive explanation.

We just give an intuition for this proof for the case where exceeds by showing two plots in Figure 3(a) and 3(b) for the two cases where and respectively. In the first case, is positive for sufficiently large and in second case, it goes negative for large demonstrating the requirement of the bound on . The two plots are for the case when , , and thus may be approximately by as shown in Section 2.4. For the purpose of the plots, we choose 131313We choose this vector as it minimises equation (14) with detailed calculation is given in Section 2.4. and demonstrate the point in the Lemma.

These spikes in the solution may be observed due to the selection of due to the term in the differential equation.

Lemma 18 (detailed proof in Appendix C.4.2 of [41]).

.

We present a proof sketch where we do not explicitly show the continuity of the bounds and . In [41, Appendix C.7], we prove a claim showing such an existence.

Proof Sketch.

Recall the functions defined in (17). Also for every , we obtain a function [solution of Equation (18)] with bounds and satisfying and and this solution is feasible.

The objective value of this feasible solution is and the constructed solution is feasible for any and . Now, since is continuous in , choosing to be arbitrarily small enables us to obtain the objective value of the solution arbitrarily close to and thus, .

Observe that although we defined the Laplace noise addition mechanism (see Definition 15) entirely in terms of the user’s action, we can consider an alternate mechanism splitting into user’s action and server’s response attaining the same cost:

-

User sends to the server.

-

The server on receiving responds with a vector .

Theorem 19.

For -geographic differential privacy, sending Laplace noise, that is, user sends a signal drawn from distribution is one of the optimal choices of for users, and in this case .

Proof.

2.4 Server response given the user sends Laplace Noise

Recall that we proved in Theorem 19 that the Laplace noise addition mechanism is an optimal action for the users. We now focus on the construction of an optimal server action on receiving the signal from an user.

-

1.

User with value reports after adding Laplace noise of scale .

-

2.

The server receives and respond , where are fixed values.

For the case of , the optimal mechanism is simple enough that the values can be computed by dynamic programming, as we sketch in Appendix B, and this concludes the proof of Theorem 5. For other general increasing functions, the optimal solution for may not always be written in closed form, however we can always write a recursive expression to compute the points.

Based on the above arguments in the four sections, we have the main Theorem 5.

3 Conclusion

We have defined a new “multi-selection” architecture for differential privacy that takes advantage of technological advances that enable a server to send a small number of multiple recommendations to the user. We have shown in a stylized model that the architecture enables significant improvements in the achievable privacy-accuracy trade-offs. We conduct experiments using the MovieLens dataset [44] to empirically demonstrate the privacy-utility tradeoffs within our multi-selection framework [57]. Our analysis disregards some practical considerations, namely, that the client’s requests are in a high dimensional feature space (and not in one-dimension), and that the server may rely on a machine learning model to evaluate the quality of a result that it may need to convey to the client in some compressed form, which we leave to future work.

References

- [1] Mário S. Alvim, Konstantinos Chatzikokolakis, Catuscia Palamidessi, and Anna Pazii. Metric-based local differential privacy for statistical applications, 2018. arXiv:1805.01456.

- [2] EJ Anderson. A new continuous model for job-shop scheduling. International journal of systems science, 12(12):1469–1475, 1981.

- [3] E.J. Anderson and P. Nash. Linear Programming in Infinite-dimensional Spaces: Theory and Applications. A Wiley-Interscience publication. Wiley, 1987. URL: https://books.google.com/books?id=O2VRAAAAMAAJ.

- [4] Miguel E Andrés, Nicolás E Bordenabe, Konstantinos Chatzikokolakis, and Catuscia Palamidessi. Geo-indistinguishability: Differential privacy for location-based systems. In Proceedings of the 2013 ACM SIGSAC conference on Computer & communications security, pages 901–914, 2013.

- [5] Elliot Anshelevich and John Postl. Randomized social choice functions under metric preferences, 2016. arXiv:1512.07590.

- [6] Apple. Learning with privacy at scale. Apple Machine Learning Journal, 1, 2017. URL: https://machinelearning.apple.com/2017/12/06/learning-with-privacy-at-scale.html.

- [7] Brendan Avent, Yatharth Dubey, and Aleksandra Korolova. The power of the hybrid model for mean estimation. The 20th Privacy Enhancing Technologies Symposium (PETS), 2020.

- [8] Brendan Avent, Aleksandra Korolova, David Zeber, Torgeir Hovden, and Benjamin Livshits. BLENDER: Enabling local search with a hybrid differential privacy model. In 26th USENIX Security Symposium (USENIX Security 17); Journal of Privacy and Confidentiality, 2019.

- [9] M. Bagnoli and T. Bergstrom. Log-Concave Probability And Its Applications. Papers 89-23, Michigan - Center for Research on Economic & Social Theory, 1989. URL: https://ideas.repec.org/p/fth/michet/89-23.html.

- [10] Raef Bassily, Albert Cheu, Shay Moran, Aleksandar Nikolov, Jonathan Ullman, and Steven Wu. Private query release assisted by public data. In International Conference on Machine Learning, pages 695–703. PMLR, 2020.

- [11] Raef Bassily, Kobbi Nissim, Uri Stemmer, and Abhradeep Guha Thakurta. Practical locally private heavy hitters. Advances in Neural Information Processing Systems, 30, 2017.

- [12] Amitabh Basu, Kipp Martin, and Christopher Thomas Ryan. Strong duality and sensitivity analysis in semi-infinite linear programming, 2015. arXiv:1510.07531.

- [13] Björn Bebensee. Local differential privacy: a tutorial. arXiv preprint arXiv:1907.11908, 2019. arXiv:1907.11908.

- [14] Amos Beimel, Aleksandra Korolova, Kobbi Nissim, Or Sheffet, and Uri Stemmer. The power of synergy in differential privacy: Combining a small curator with local randomizers. In Information-Theoretic Cryptography (ITC), 2020.

- [15] R. Bellman. Dynamic Programming. Princeton Landmarks in Mathematics and Physics. Princeton University Press, 2010. URL: https://books.google.co.in/books?id=92aYDwAAQBAJ.

- [16] Avrim Blum, Katrina Ligett, and Aaron Roth. A learning theory approach to noninteractive database privacy. Journal of the ACM (JACM), 60(2):1–25, 2013. doi:10.1145/2450142.2450148.

- [17] Hai Brenner and Kobbi Nissim. Impossibility of differentially private universally optimal mechanisms. SIAM Journal on Computing, 43(5):1513–1540, 2014. doi:10.1137/110846671.

- [18] Mark Bun, Jelani Nelson, and Uri Stemmer. Heavy hitters and the structure of local privacy. ACM Transactions on Algorithms (TALG), 15(4):1–40, 2019. doi:10.1145/3344722.

- [19] James Burke. Duality in linear programming. https://sites.math.washington.edu//˜burke/crs/407/notes/section4.pdf. Accessed: 2010-09-30.

- [20] Ioannis Caragiannis, Nisarg Shah, and Alexandros A. Voudouris. The metric distortion of multiwinner voting. Artificial Intelligence, 313:103802, 2022. doi:10.1016/j.artint.2022.103802.

- [21] Rui Chen, Haoran Li, A Kai Qin, Shiva Prasad Kasiviswanathan, and Hongxia Jin. Private spatial data aggregation in the local setting. In 2016 IEEE 32nd International Conference on Data Engineering (ICDE), pages 289–300. IEEE, 2016. doi:10.1109/ICDE.2016.7498248.

- [22] Albert Cheu, Adam Smith, Jonathan Ullman, David Zeber, and Maxim Zhilyaev. Distributed differential privacy via shuffling. In Advances in Cryptology–EUROCRYPT 2019: 38th Annual International Conference on the Theory and Applications of Cryptographic Techniques, Darmstadt, Germany, May 19–23, 2019, Proceedings, Part I 38, pages 375–403. Springer, 2019. doi:10.1007/978-3-030-17653-2_13.

- [23] Ilaria Chillotti, Marc Joye, and Pascal Paillier. New challenges for fully homomorphic encryption. In Privacy Preserving Machine Learning - PriML and PPML Joint Edition Workshop, NeurIPS 2020, December 2020. URL: https://neurips.cc/virtual/2020/19640.

- [24] B. Chor, O. Goldreich, E. Kushilevitz, and M. Sudan. Private information retrieval. In Proceedings of the 36th Annual Symposium on Foundations of Computer Science, FOCS ’95, page 41, USA, 1995. IEEE Computer Society.

- [25] Bolin Ding, Janardhan Kulkarni, and Sergey Yekhanin. Collecting telemetry data privately. Advances in Neural Information Processing Systems, 30, 2017.

- [26] John C. Duchi, Michael I. Jordan, and Martin J. Wainwright. Local privacy and statistical minimax rates. In 2013 IEEE 54th Annual Symposium on Foundations of Computer Science, pages 429–438, 2013. doi:10.1109/FOCS.2013.53.

- [27] Cynthia Dwork, Frank McSherry, Kobbi Nissim, and Adam Smith. Calibrating noise to sensitivity in private data analysis. In Theory of Cryptography: Third Theory of Cryptography Conference, TCC 2006, New York, NY, USA, March 4-7, 2006. Proceedings 3, pages 265–284. Springer, 2006. doi:10.1007/11681878_14.

- [28] Cynthia Dwork and Aaron Roth. The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4):211–407, 2014. doi:10.1561/0400000042.

- [29] Cynthia Dwork, Aaron Roth, et al. The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4):211–407, 2014. doi:10.1561/0400000042.

- [30] Úlfar Erlingsson, Vitaly Feldman, Ilya Mironov, Ananth Raghunathan, Kunal Talwar, and Abhradeep Thakurta. Amplification by shuffling: From local to central differential privacy via anonymity. In Proceedings of the Thirtieth Annual ACM-SIAM Symposium on Discrete Algorithms, pages 2468–2479. SIAM, 2019. doi:10.1137/1.9781611975482.151.

- [31] Úlfar Erlingsson, Vasyl Pihur, and Aleksandra Korolova. RAPPOR: Randomized aggregatable privacy-preserving ordinal response. In Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security, CCS ’14, pages 1054–1067, New York, NY, USA, 2014. ACM. doi:10.1145/2660267.2660348.

- [32] Lawrence C Evans. An introduction to mathematical optimal control theory version 0.2. Lecture notes available at http://math. berkeley. edu/˜ evans/control. course. pdf, 1983.

- [33] Vitaly Feldman, Audra McMillan, and Kunal Talwar. Hiding among the clones: A simple and nearly optimal analysis of privacy amplification by shuffling. In 2021 IEEE 62nd Annual Symposium on Foundations of Computer Science (FOCS), pages 954–964. IEEE, 2022.

- [34] Natasha Fernandes, Annabelle McIver, and Carroll Morgan. The laplace mechanism has optimal utility for differential privacy over continuous queries. In 2021 36th Annual ACM/IEEE Symposium on Logic in Computer Science (LICS), pages 1–12. IEEE, 2021. doi:10.1109/LICS52264.2021.9470718.

- [35] K. O. Friedrichs. The identity of weak and strong extensions of differential operators. Transactions of the American Mathematical Society, 55(1):132–151, 1944. URL: http://www.jstor.org/stable/1990143.

- [36] Kurt Otto Friedrichs. The identity of weak and strong extensions of differential operators. Transactions of the American Mathematical Society, 55(1):132–151, 1944.

- [37] Quan Geng and Pramod Viswanath. The optimal noise-adding mechanism in differential privacy. IEEE Transactions on Information Theory, 62(2):925–951, 2015. doi:10.1109/TIT.2015.2504967.

- [38] Robin C. Geyer, Tassilo J. Klein, and Moin Nabi. Differentially private federated learning: A client level perspective. arXiv preprint arXiv:1712.07557, 2017.

- [39] Arpita Ghosh, Tim Roughgarden, and Mukund Sundararajan. Universally utility-maximizing privacy mechanisms. SIAM Journal on Computing, 41(6):1673–1693, 2012. doi:10.1137/09076828X.

- [40] Vasilis Gkatzelis, Daniel Halpern, and Nisarg Shah. Resolving the optimal metric distortion conjecture. In 2020 IEEE 61st Annual Symposium on Foundations of Computer Science (FOCS), pages 1427–1438. IEEE, 2020. doi:10.1109/FOCS46700.2020.00134.

- [41] Ashish Goel, Zhihao Jiang, Aleksandra Korolova, Kamesh Munagala, and Sahasrajit Sarmasarkar. Differential privacy with multiple selections, 2024. doi:10.48550/arXiv.2407.14641.

- [42] Saikat Guha, Bin Cheng, and Paul Francis. Privad: Practical privacy in online advertising. In USENIX conference on Networked systems design and implementation, pages 169–182, 2011.

- [43] Mangesh Gupte and Mukund Sundararajan. Universally optimal privacy mechanisms for minimax agents. In Proceedings of the twenty-ninth ACM SIGMOD-SIGACT-SIGART symposium on Principles of database systems, pages 135–146, 2010. doi:10.1145/1807085.1807105.

- [44] F. Maxwell Harper and Joseph A. Konstan. The movielens datasets: History and context. ACM Trans. Interact. Intell. Syst., 5(4), December 2015. doi:10.1145/2827872.

- [45] Alexandra Henzinger, Emma Dauterman, Henry Corrigan-Gibbs, and Nickolai Zeldovich. Private web search with Tiptoe. In 29th ACM Symposium on Operating Systems Principles (SOSP), Koblenz, Germany, October 2023.

- [46] Peter Kairouz, Sewoong Oh, and Pramod Viswanath. Extremal mechanisms for local differential privacy. Advances in neural information processing systems, 27, 2014.

- [47] Shiva Prasad Kasiviswanathan, Homin K Lee, Kobbi Nissim, Sofya Raskhodnikova, and Adam Smith. What can we learn privately? SIAM Journal on Computing, 40(3):793–826, 2011. doi:10.1137/090756090.

- [48] Fatih Erdem Kizilkaya and David Kempe. Plurality veto: A simple voting rule achieving optimal metric distortion. arXiv preprint arXiv:2206.07098, 2022. doi:10.48550/arXiv.2206.07098.

- [49] Fragkiskos Koufogiannis, Shuo Han, and George J Pappas. Optimality of the laplace mechanism in differential privacy. arXiv preprint arXiv:1504.00065, 2015. arXiv:1504.00065.

- [50] Jingcheng Liu and Kunal Talwar. Private selection from private candidates. In Proceedings of the 51st Annual ACM SIGACT Symposium on Theory of Computing, pages 298–309, 2019. doi:10.1145/3313276.3316377.

- [51] Chiara Marcolla, Victor Sucasas, Marc Manzano, Riccardo Bassoli, Frank H.P. Fitzek, and Najwa Aaraj. Survey on fully homomorphic encryption, theory, and applications. Cryptology ePrint Archive, Paper 2022/1602, 2022. doi:10.1109/JPROC.2022.3205665.

- [52] Frank McSherry and Kunal Talwar. Mechanism design via differential privacy. In 48th Annual IEEE Symposium on Foundations of Computer Science (FOCS’07), pages 94–103. IEEE, 2007. doi:10.1109/FOCS.2007.41.

- [53] Nicolas Papernot, Shuang Song, Ilya Mironov, Ananth Raghunathan, Kunal Talwar, and Úlfar Erlingsson. Scalable private learning with pate, 2018. arXiv:1802.08908.

- [54] Malcolm C. Pullan. An algorithm for a class of continuous linear programs. SIAM Journal on Control and Optimization, 31(6):1558–1577, 1993. doi:10.1137/0331073.

- [55] Apple Machine Learning Research. Learning with privacy at scale: Differential privacy for aggregate trend analysis. https://machinelearning.apple.com/research/differential-privacy-aggregate-trends, 2023. Accessed: 28th May 2025.

- [56] W. Rudin. Principles of Mathematical Analysis. International series in pure and applied mathematics. McGraw-Hill, 1976. URL: https://books.google.com/books?id=kwqzPAAACAAJ.

- [57] Sahasrajit Sarmasarkar, Zhihao Jiang, Ashish Goel, Aleksandra Korolova, and Kamesh Munagala. Multi-selection for recommendation systems, 2025. arXiv:2504.07403.

- [58] Vincent Toubiana, Arvind Narayanan, Dan Boneh, Helen Nissenbaum, and Solon Barocas. Adnostic: Privacy preserving targeted advertising. NDSS, 2010.

- [59] N.T. Vinh, D.S. Kim, N.N. Tam, and N.D. Yen. Duality gap function in infinite dimensional linear programming. Journal of Mathematical Analysis and Applications, 437(1):1–15, 2016. doi:10.1016/j.jmaa.2015.12.043.

- [60] Tianhao Wang, Jeremiah Blocki, Ninghui Li, and Somesh Jha. Locally differentially private protocols for frequency estimation. In 26th USENIX Security Symposium (USENIX Security 17), pages 729–745, 2017. URL: https://www.usenix.org/conference/usenixsecurity17/technical-sessions/presentation/wang-tianhao.

Appendix A Further extensions

We describe some additional results below.

-

When the user is not able to perform the optimal action, we show in Appendix A.3 that for an appropriate server response 141414The server’s action involves sampling from the posterior of the noise distribution. if the user’s action consists of adding symmetric noise whose distribution satisfies log-concave property151515If the random noise with log-concave distribution is given by , then we have and .. Observe that this property is satisfied by most natural distributions like Exponential and Gaussian.

-

We further show that Laplace noise continues to be an optimal noise distribution for the user even under a relaxed definition of geographic differential privacy (defined in Definition 21) in Section A.1. This definition captures cases when privacy guarantees are imposed only when the distance between users is below some threshold (recall from Section 1.3.3 that such a setup was studied in [37]).

-

Often, the set of users may not belong to but in many cases may have a feature vector embedding in d. Here, a server could employ dimensionality reduction techniques such as Principal Component Analysis (PCA) to create a small number of dimensions which have the strongest correlation to the disutility of a hypothetical user with features identical to the received signal. The server may project the received signal only along these dimensions to select the set of results. Here, we show that under some assumptions as discussed in [41, Appendix B.6] when the user’s action consists of adding independent Gaussian noise to every feature.

A.1 A generalization of Geographic differential privacy

Here we consider a generalization of -geographic differential privacy and define -geographic differential privacy for an increasing convex function satisfying Assumption 20.

Assumption 20.

is a increasing convex function satisfying and is differentiable at 0 with .

Definition 21 (alternate definition of geo-DP).

Let be a desired level of privacy and let be a set of input data and be the set of all possible responses and be the set of all probability distributions (over a sufficiently rich -algebra of given by ). For any satisfying Assumption 20 a mechanism is -geographic differentially private if for all and :

Since this definition allows the privacy guarantee to decay non-linearly with the distance between the user values, it is a relaxation of -geographic DP as defined in Definition 2. Observe that this definition captures cases where the privacy guarantees exist only when the distance between users is below some threshold by defining to be if for some threshold . Under this notion of differential privacy, we may redefine cost function as follows.

where . The definition of are similar to that in Section 1.1.2.

We now show that adding Laplace noise continues to remain an optimal action for the users even under this relaxed model of geographic differential privacy.

Theorem 22.

For -geographic differential privacy, adding Laplace noise, whose density function is , is one of the optimal choices of for users. Further, when , we have and the optimal mechanism (choice of actions of users and server) itself can be computed in closed form.

Proof.

The proof of this theorem follows identically to that of Theorem 5. However, we require a slight modification of Lemma 12 to prove it as stated and proven in Lemma 23.

Lemma 23.

Suppose, has a probability density function given by for every . Then, P satisfies -geographic differential privacy iff whenever satisfies Assumption 20.

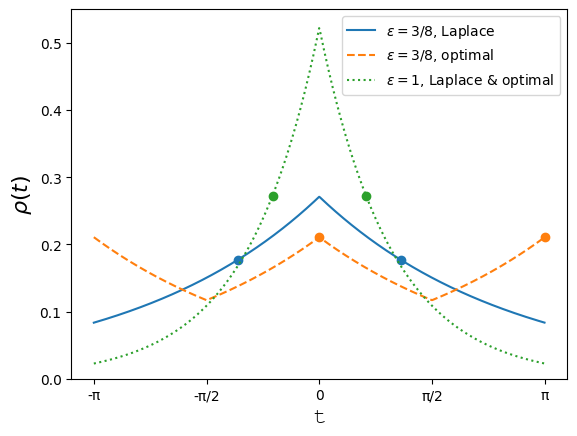

A.2 Calculation of optimal mechanism on a ring for the case of

We calculate the optimal mechanism in geographic differential privacy setting, on a unit ring, when , and the number of results is . Further, the dis-utility of an user from a result is given by .

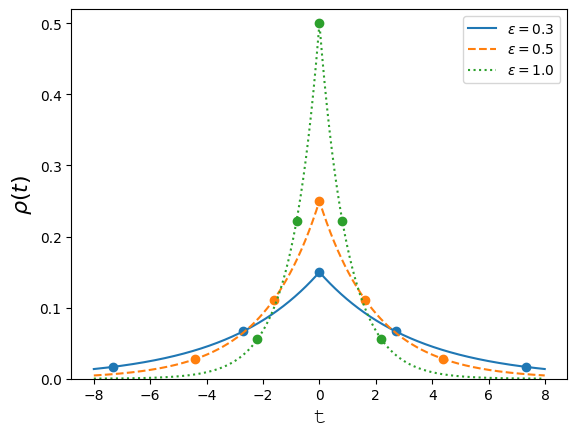

We use real numbers in to denote users and results on a unit ring, and denotes . Figure 4 illustrates the optimal mechanism under geographic DP for . This mechanism uses noise that is a piece-wise composition of Laplace noises; we obtain a cost of 0.72 whereas Laplace noise gives a cost of 0.75. To find the optimal mechanism for the case of the ring, we solve the DILP using a linear program solver and obtain the plot shown in Figure 4 with cost of 0.72. However, when the user sends Laplace noise, the server on receiving signal responds with two points and which maybe calculated by the following problem.

where is a density function for the Laplace distribution,

A.3 Noise satisfying Monotone Hazard Rate property

Let denote the random noise with density . We assume is symmetric about the origin, and let . Let denote the density function of (so that for ), and let . We assume that .161616This implies that a large is equivalent to the magnitude of noise being smaller and vice-versa. Although this distribution does not satisfy geographic differential privacy, this follows a similar trend w.r.t . We assume is continuously differentiable and log-concave. By [9], we have is also log-concave. Note that several natural distributions such as Exponential (Laplace noise) and Gaussian are log-concave.

We are interested in choosing values such that for a random draw , the expected error in approximating by its closest point in is small. For , we will choose . Let . Note that the error of with respect to is exactly .

Our main result is the following theorem:

Theorem 24.

.

Proof.

Let for . For upper bounding , we map each to the immediately smaller value in . If we draw uniformly at random, the error is upper bounded as:

Let Then the above can be rewritten as: . Next, it follows from [9] that if is log-concave, then so is . This means the function is non-increasing in . Therefore,

Let . Then, is convex and increasing. Further, . Let so that . Since , we have . Further, so that . Since and , we have . Therefore, . Putting this together,

Therefore,

completing the proof.

A.4 Restricted and Unrestricted Setup of the Multi-Selection model

Recall the setup in Section 1 where the users and results belonged to different sets and with the definition of disutility in Definition 3. In section 1.1.4, we considered an alternate setup where the users and results belonged to the same set and the optimal result for an user was the result itself. In this section, we call these setups unrestricted and restricted respectively and define our “multi-selection” model separately for both these setups. Finally, we bound the cost function in the unrestricted setup by the cost function in the restricted setup in Theorem 25 thus, showing that it is sufficient to consider the cost function in the restricted setup.

Unrestricted setup.

Recall that results and users are located in sets and respectively and function maps every user to its optimal result(ad). Recall that the disutility of an user from a result is defined in Definition 3.

Restricted setup.

A.4.1 The space of server/user actions

Recall that the goal is to determine a mechanism that has the following ingredients:

-

1.

A set of signals .

-

2.

The action of users, which involves choosing a signal from a distribution over signals. We use for to denote the distribution of the signals sent by user . This distribution is supported on .

-

3.

The distribution over actions of the server, when it receives . This distribution denoting the distribution of the result set returned by the server given signal may be supported on either k or , for the restricted setup and unrestricted setup respectively.

The optimal mechanism is computed by jointly optimizing over the tuple . And thus, we define the set of server responses by and for unrestricted and restricted setup respectively.

-

.

-

.

In any feasible geographic DP mechanism, the user behavior should satisfy -geographic differential privacy: for any , it should hold that where is any measurable subset of . For any fixed response size , in order to maximize utility while ensuring the specified level of privacy, the goal is to minimize the disutility of the user from the result that the gives the user minimum disutility where the minimisation is the worst case user in .

A.4.2 Cost functions in both the setups

For the unrestricted and restricted setups, we define the cost functions and respectively below. Recall that may denote any set.

| (19) | ||||

| (20) |

.

We state a theorem upper bounding by .

Theorem 25.

For any satisfying equation (2), we have .

A detailed proof for the same is provided in [41, Appendix B.4.4] using the fact that .

Appendix B Server response for odd when is an identity function

We now show the optimal choice of to optimize cost function [in Equation (14)]. Specifically, we assume odd in this section. The solution for even (refer [41, Theorem C.3]) can be constructed using a similar induction where the base case for can be directly optimized. Assuming the symmetry of , let , where are positive numbers in increasing order. We will construct the set inductively. Let be a random variable drawn from Laplace distribution with parameter , and the goal is to minimize . Since the density function of satisfies , we have

i.e. the user has a positive private value. Under this conditioning, the variable is an exponential random variable of mean 1. In this case, the search result being used by the server will be one of , …, . Clearly, . To compute , let . Then using the memorylessness property of exponential random variables, we get the recurrence

The optimal given can be computed by minimising over all . However, for the case where is an identity function, we may give a closed form expression below.

Setting , and minimizing by taking derivatives, we get which in turn gives and . Plugging in the inductive hypothesis of , we get . Further, we get . Thus, by returning results, the expected “cost of privacy” can be reduced by a factor of . To obtain the actual positions we have to unroll the induction. For , the position is given by: