Sparser Abelian High Dimensional Expanders

Abstract

The focus of this paper is the development of new elementary techniques for the construction and analysis of high dimensional expanders. Specifically, we present two new explicit constructions of Cayley high dimensional expanders (HDXs) over the abelian group . Our expansion proofs use only linear algebra and combinatorial arguments.

The first construction gives local spectral HDXs of any constant dimension and subpolynomial degree for every , improving on a construction by Golowich [50] which achieves . [50] derives these HDXs by sparsifying the complete Grassmann poset of subspaces. The novelty in our construction is the ability to sparsify any expanding Grassmann posets, leading to iterated sparsification and much smaller degrees. The sparse Grassmannian (which is of independent interest in the theory of HDXs) serves as the generating set of the Cayley graph.

Our second construction gives a 2-dimensional HDX of any polynomial degree ) for any constant , which is simultaneously a spectral expander and a coboundary expander. To the best of our knowledge, this is the first such non-trivial construction. We name it the Johnson complex, as it is derived from the classical Johnson scheme, whose vertices serve as the generating set of this Cayley graph. This construction may be viewed as a derandomization of the recent random geometric complexes of [74]. Establishing coboundary expansion through Gromov’s “cone method” and the associated isoperimetric inequalities is the most intricate aspect of this construction.

While these two constructions are quite different, we show that they both share a common structure, resembling the intersection patterns of vectors in the Hadamard code. We propose a general framework of such “Hadamard-like” constructions in the hope that it will yield new HDXs.

Keywords and phrases:

Local spectral expander, coboundary expander, Grassmannian expanderFunding:

Yotam Dikstein: This material is based upon work supported by the National Science Foundation under Grant No. DMS-1926686.Copyright and License:

2012 ACM Subject Classification:

Theory of computation ; Mathematics of computing Spectra of graphsAcknowledgements:

We thank Louis Golowich for helpful discussions and Gil Melnik for assistance with the figures.Editors:

Srikanth SrinivasanSeries and Publisher:

Leibniz International Proceedings in Informatics, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

Leibniz International Proceedings in Informatics, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

1 Introduction

Expander graphs are of central importance in many diverse parts of computer science and mathematics. Their structure and applications have been well studied for half a century, resulting in a rich theory (see e.g. [56, 76]). First, different definitions of expansion (spectral, combinatorial, probabilistic) are all known to be essentially equivalent. Second, we know that expanders abound; a random sparse graph is almost surely an expander [89]. Finally, there is a variety of methods for constructing and analyzing explicit expander graphs. Initially, these relied on deep algebraic and number theoretic methods [84, 45, 78]. However, the quest for elementary (e.g. combinatorial and linear-algebraic) constructions has been extremely fertile, and resulted in major breakthroughs in computer science and math; the zigzag method of [93] lead to [33, 92, 96], and the lifting method of [14] lead to [83, 82].

In contrast, the expansion of sparse high dimensional complexes (or simply hypergraphs) is still a young and growing research topic. The importance of high dimensional expanders (HDXs) in computer science and mathematics is consistently unfolding, with a number of recent breakthroughs which essentially depend on them, such as locally testable codes [34, 88], quantum coding [6] and Markov chains [5] as a partial list. High dimensional expanders differ from expander graphs in several important ways. First, different definitions of expansion are known to be non-equivalent [53]. Second, they seem rare, and no natural distributions of complexes are known which almost surely produce bounded-degree expanding complexes. Finally, so far the few existing explicit constructions of bounded degree HDXs use algebraic methods [12, 16, 72, 79, 68, 86, 25]. No elementary constructions are known where high dimensional expansion follows from a clear (combinatorial or linear algebraic) argument. This demands further understanding of HDXs and motivates much prior work as well as this one.

In this work we take a step towards answering this question by constructing sparser HDXs by elementary means. We construct sparse HDXs with two central notions of high dimensional expansion: local spectral expansion and coboundary expansion.

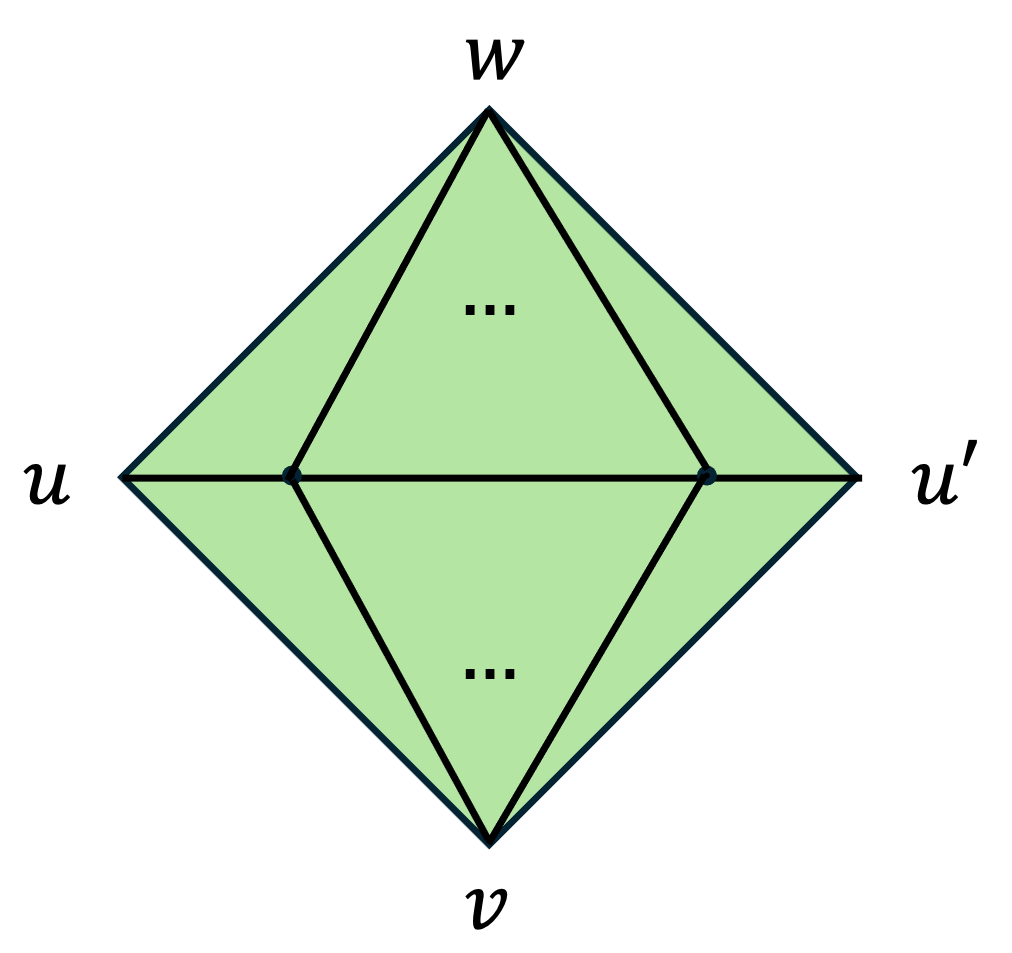

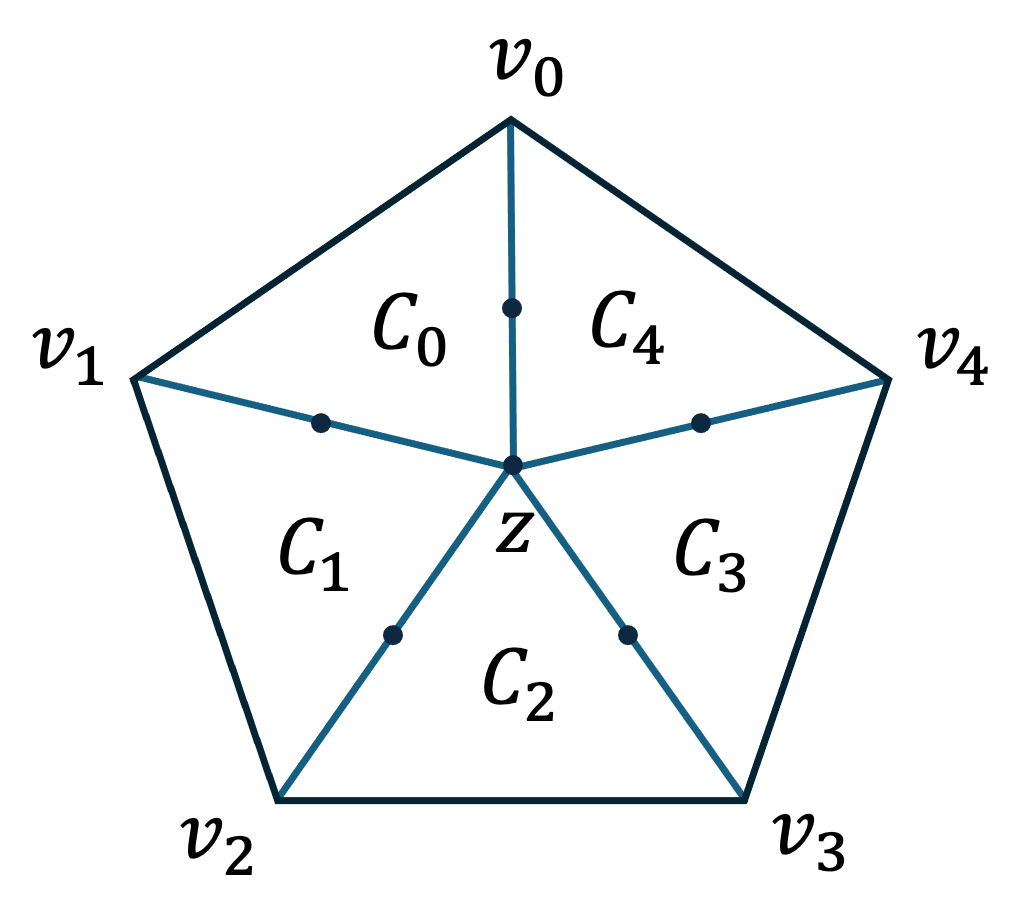

To better discuss our results, we define some notions of simplicial complexes and expansion. For simplicity, we only define them in the -dimensional case, though many of our results extend to arbitrary constant dimensions. For the full definitions see Section 2. A 2-dimensional simplicial complex is a hypergraph with vertices , edges and triangles (sets of size ). We require that if then all its edges are in , and all its vertices are in . We denote by the number of vertices. Given a vertex , its degree is the number of edges adjacent to it. An essential notion for HDXs is the local property of its links. A link of a vertex is the graph whose set of vertices is the neighborhood of ,

The edges correspond to the triangles incident on :

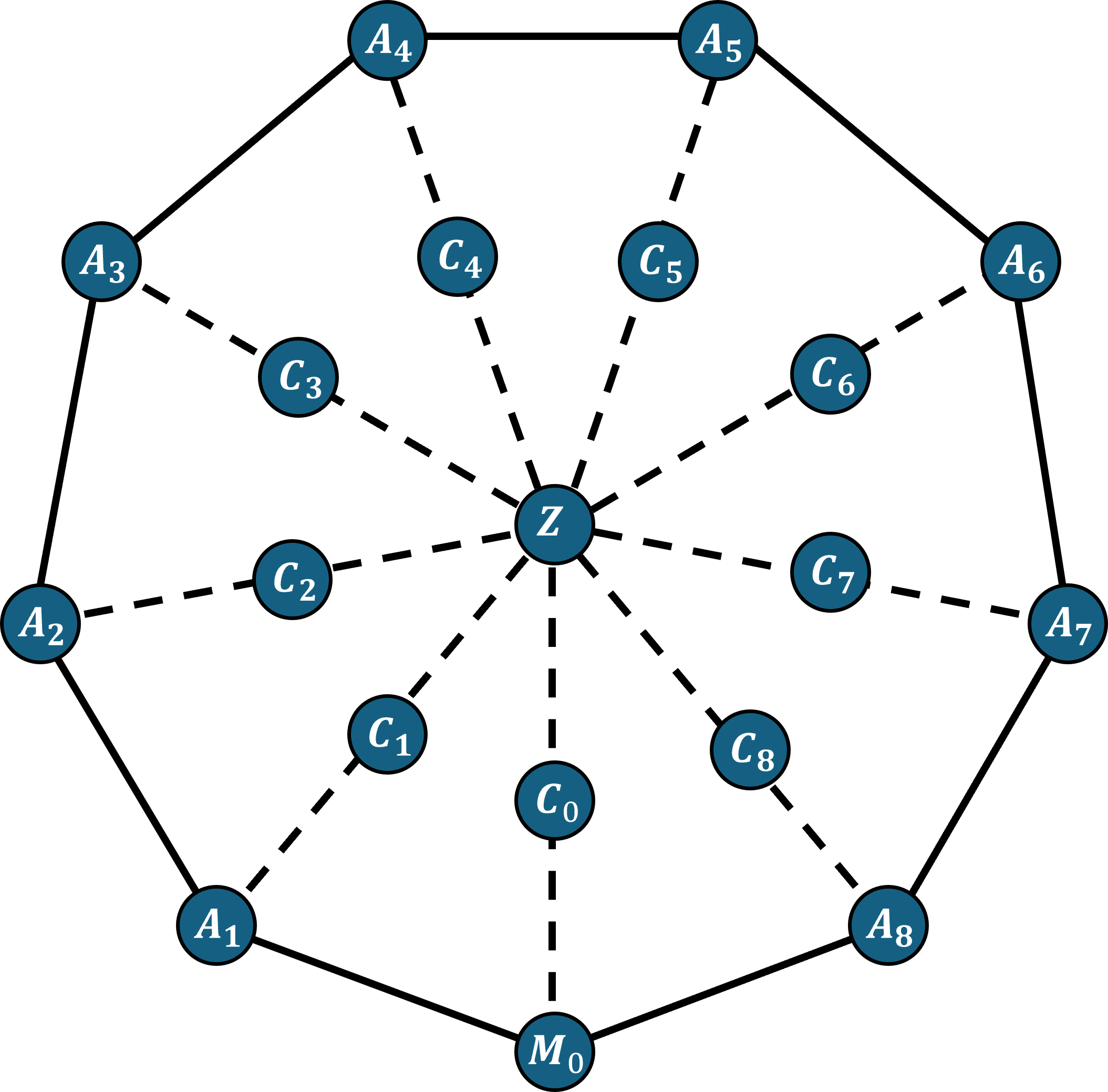

In this paper we focus on a particularly simple class of complexes, called Cayley complexes over . These are simplicial complexes whose underlying graph is a Cayley graph over , and so have vertices. It is well-known that constant-degree Cayley complexes over abelian groups cannot be high dimensional expanders . In fact, logarithmic degree is needed for a -dimensional complex, namely a graph, to be an expander [3]. In particular, random Cayley graphs over with degree (logarithmic in the number of vertices) are expanders with high probability. However, no abelian Cayley complex constructions with remotely close degree are known to have higher dimensional expansion, and a guiding question of this paper is to develop techniques for constructing and analyzing abliean Cayley HDXs of smallest possible degree.

In the rest of the introduction we will explain our constructions. In Section 1.1 we present our construction of local spectral Cayley complexes with arbitrarily good spectral expansion and a subpolynomial degree. In Section 1.2 we present our family of Cayley complexes that are both coboundary expanders and non-trivial local spectral expanders, with arbitrarily small polynomial degree. In Section 1.3 we discuss the common structure of our two constructions that relies on the Hadamard code. In Section 1.4 we present some open questions.

1.1 Local spectral expanders

We start with definitions and motivation, review past results and then state ours. The first notion of high dimensional expansion we consider is local spectral expansion. The spectral expansion of a graph is the second largest eigenvalue of its random walk matrix in absolute value, denoted . Local spectral expansion of complexes is the spectral expansion of every link.

Definition 1 (Local spectral expansion).

Let . A -dimensional simplicial complex is a -local spectral expander if the underlying graph is connected and for every , .

Local spectral expansion is the most well-known definition of high dimensional expansion. It was first introduced implicitly by [47] for studying the real cohomology of simplicial complexes. A few decades later, a few other works observed that properties of specific local spectral expanders are of interest to the computer science community [79, 60, 62]. The definition above was given in [38], for the purpose of constructing agreement tests. These are strong property tests that are a staple in PCPs (see further discussion in Section 1.2). Since then they have found many more applications that we now discuss.

Applications of local spectral expanders

One important application of local spectral expansion is in approximate sampling and Glauber dynamics. Local spectral expansion implies optimal spectral bounds on random walks defined on the sets of the complex. These are known as the Glauber dynamics or the up-down walks. This property has lead to a lot of results in approximate sampling and counting. These include works on Matroid sampling [5], random graph coloring [20] and mixing of Glauber dynamics on the Ising model [4].

Local spectral expanders have also found applications in error correcting codes. Their sampling properties give rise to construction of good codes with efficient list-decoding algorithms [1, 37, 59]. One can also use the to construct locally testable codes that sparsify the Reed-Muller codes [39].

The list decoding algorithms in [1, 59] rely on their work on constraint satisfaction problems on local spectral expanders. These works generalize classical algorithms for solving CSPs on dense hypergraphs, to algorithms that solve CSPs on local spectral expanders. These works build on the “dense-like” properties of these complexes, and prove that CSPs on local spectral expanders are easy. It is interesting to note that local spectral expanders have rich enough structure so that one can also construct CSPs over them that are also hard for Sum-of-Squares semidefinite programs [35, 57]111In fact, [1] uses Sum-of-Squares to solve CSPs on local spectral expanders. It seems contradictory, but the hardness results in [35, 57] use CSPs where the variables are the edges of the HDX. The CSPs in [1] use its vertices as variables..

Some of the most important applications of local spectral expansion are constructions of derandomized agreement tests [38, 26, 51, 9, 31] and even PCPs [11]. Most of these works also involve coboundary expansion so we discuss these in Section 1.2.

Local spectral expanders have other applications in combinatorics as well. They are especially useful in sparsifying central objects like the complete uniform hypergraph and the boolean hypercube. The HDX based sparsifications maintain many of their important properties such as spectral gap, Chernoff bounds and hypercontractivity (see [62, 30, 67, 54, 8, 32] as a partial list).

Previous constructions

Attempts to construct bounded degree local spectral expanders with sufficient expansion for applications have limited success besides the algebraic constructions mentioned above [12, 16, 72, 79, 68, 86, 25]. Random complexes in the high dimensional Erdős–Rényi model defined by [73] are not local spectral expanders with overwhelming probability when the average degree is below and no other bounded degree model is known. The current state of the art is the random geometric graphs on the sphere [74], that achieve non-trivial local spectral expansion and arbitrarily small polynomial degree (but their expansion is also bounded from below, see discussion in Section 1.2). Even allowing an unbounded degree, the only other known construction that is non-trivially sparse complexes is the one in [50] mentioned in the abstract.

Our result

The first construction we present is a family of Cayley local spectral expanders. Namely,

Theorem 2.

For every and integer there exists an infinite family of -dimensional Cayley -local spectral expanders over the vertices with degree at most .

This construction builds on an ingenious construction by Golowich [50], which proved this theorem for . As far as we know, this is the sparsest construction that achieves an arbitrarily small -local spectral expansion other than the algebraic constructions.

Proof overview

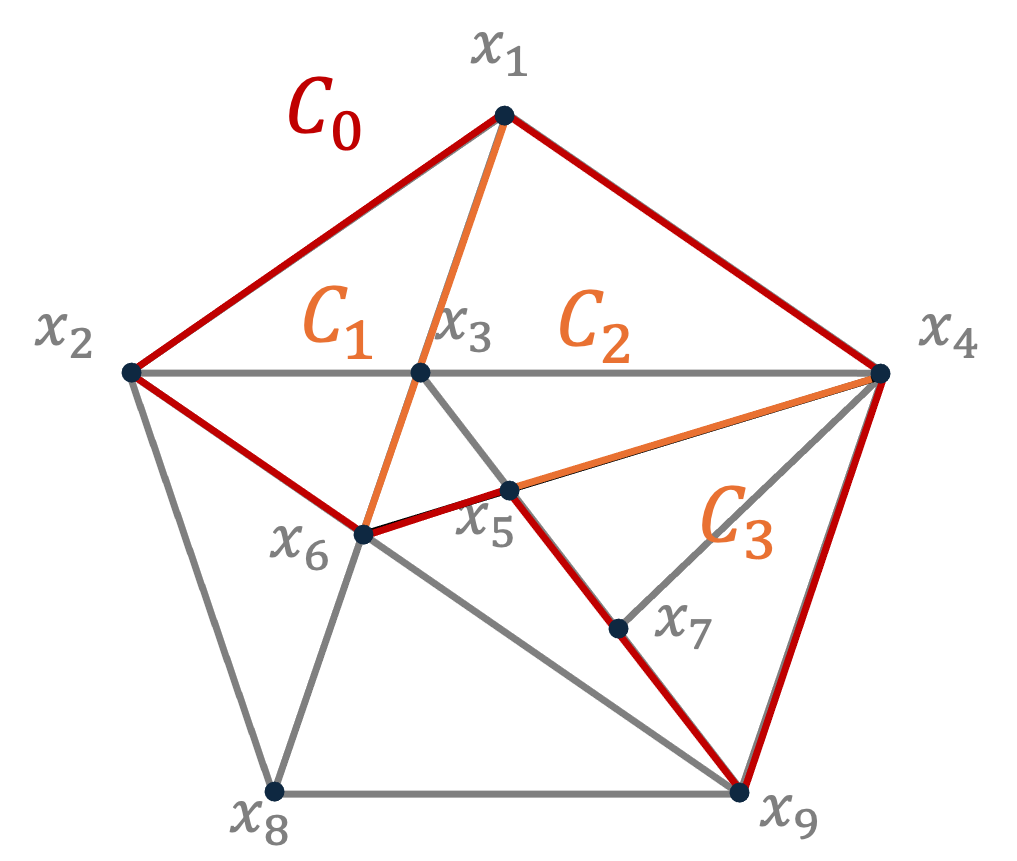

Our construction is based on the well studied Grassmann poset, the partially ordered set of subspaces of , ordered by containment. This object is the vector space equivalent of high dimensional expander, and was previously studied in [30, 69, 46, 50]. To understand our construction, we first describe the one in [50]. Golowich begins by sparsifying the Grassmann poset, to obtain a subposet. The generators of the Cayley complex in [50] are all (non-zero vectors in) the one-dimensional subspaces in the subposet. The analysis of expansion in [50] depends on the expansion properties of the subposet. The degree is just the number of one-dimensional vector spaces in the subposet, which is .

Our construction takes a modular approach to this idea. We modify the poset sparsification in [50] so that instead of the entire Grassmann poset, we can plug in any subposet of the Grassmann, and obtain a sparsification whose size depends on (not the complete Grassmann).

Having this flexible sparsification step, we iterate. We start with , the complete Grassmann poset, we obtain a sequence of sparser and sparser subposets. The vectors in are low-rank matrices whose rows and columns are vectors in subspaces of .

We comment that while this is a powerful paradigm, it does have a drawback. The dimension of is logarithmic in the dimension of (the dimension of is the maximal dimension of a space inside ). Thus for a given complex we can only perform this sparsification a constant number of steps keeping constant. Our infinite family is generated by taking an infinite family of ’s, and using our sparsification procedure on every one of them separately.

This construction of sparsified posets produces local spectral expanders of any constant dimension, as observed by [50]. The higher dimensional sets in the resulting simplicial complex correspond to the higher dimensional subspaces of the sparsification we obtain. We refer the reader to Section 3 for more details.

The analysis of the local spectral expansion is delicate, so we will not describe it here. One property that is necessary for it is the fact that every rank- matrix has many decompositions into sums of rank- matrices. Therefore, to upper bound spectral expansion we study graphs that arise from decompositions of matrices.

In addition, we wish to highlight a local-to-global argument in our analysis that uses a theorem by [81]. Given a graph that we wish to analyze, we find a set of expanding subgraphs of (that are allowed to overlap). We consider the decomposition graph whose vertices are and whose edges are all such that there exists an such that . If every is an expander and the decomposition graph is also an expander, then itself is an expander [81]. This decomposition is particularly useful in our setup. The graphs we need to analyze in order to prove the expansion of our complexes, can be decomposed into smaller subgraphs . These ’s are isomorphic to graphs coming from the original construction of [50]. Using this decomposition we are able to reduce the expansion of the links to the expansion of some well studied containment graphs in the original Grassmann we sparsify.

Remark 3 (Degree lower bounds for Cayley local spectral expanders).

The best known lower bound on the degree of Cayley -local spectral expanders over is [2]. This bound is obtained simply because the underlying graph of a Cayley -local spectral expander is an -spectral expander itself [87]. In Appendix I we investigate this question further, and provide some additional lower bounds on the degree of Cayley complexes based on their link structure. We prove that if the Cayley complex has a connected link then its degree is lower bounded by (compared to if the link is not connected). If the link mildly expands, we provide a bound that grows with the degree of a vertex inside the link. However, these bounds do not rule out Cayley -local spectral expanders whose generator set has size . The best trade-off between local spectral expansion and the degree of the complex is still open.

1.2 Our coboundary expanders

Our next main result is an explicit construction of a family of -dimensional complexes with an arbitrarily small polynomial degree, that have both non-trivial local spectral expansion and coboundary expansion. To the best of our knowledge, this is the first such nontrivial construction. Again, these are Cayley complexes of .

Coboundary expansion is a less understood notion compared to local spectral expansion. Therefore, before presenting our result we build intuition for it slowly by introducing a testing-based definition of coboundary expanders. After the definition, we will discuss the motivation behind coboundary expansion and applications of known coboundary expanders.

As mentioned before, there are several nonequivalent definitions of expansion for higher-dimensional complexes. In particular, coboundary expansion generalizes the notion of edge expansion in graphs to simplicial complexes. Coboundary expansion was defined independently by [73],[85] and [52]. The original motivation for this definition came from discrete topology; more connections to property testing were discovered later. We will expand on these connections after giving the definition. This definition we give is equivalent to the standard definition, but is described in the language of property testing rather than cohomology.

The more general and conventional definition for arbitrary groups can be found in Section 5.1.

Definition 4.

Let be a -dimensional simplicial complex. Consider the code whose generating matrix is the vertex-edge incidence matrix , and the local test of membership in this code for given by first sampling a uniformly random triangle and then accepting if and only if over .

Let . is a -coboundary expander over if

where is the relative distance from to the closest codeword in .

Observe that the triangles are parity checks of , and so if the input is a codeword, the test always accepts. A coboundary expander’s local test should reject inputs which are “far” from the code with probability proportional to their distance from it. The proportionality constant captures the quality of the tester (and coboundary expansion). We note that although the triangles are some parity checks of the code , they do not necessarily span all parity checks. In such cases, is not a coboundary expander for any .

Definition 5.

A set of -dimensional simplicial complexes is a family of coboundary expanders if there exists some such that for all , is a -coboundary expander.

Applications of coboundary expansion

We mention three different motivations to the study of coboundary expansion: from agreement testing, discrete geometry, and algebraic topology.

Coboundary expanders are useful for constructing derandomized agreement tests for the low-soundness (list-decoding) regime. As we mentioned before, agreement tests (also known as derandomized direct product tests) are a strong property test that arise naturally in many PCP constructions [33, 90, 48, 58] and low degree tests [94, 7, 91]. They were introduced by [48]. In these tests one gets oracle access to “local” partial functions on small subsets of a variable set, and is supposed to determine by as few queries as possible, whether these functions are correlated with a “global” function on the whole set of variables. The “small soundness regime”, is the regime of tests where one wants to argue about closeness to a “global” function even when the “local” partial functions pass the test with very small success probability (see [36] for a more formal definition). Works such as [36, 58, 40] studied this regime over complete simplicial complexes and Grassmanians, but until recently these were essentially the only known examples. Works by [51, 10, 27] reduced the agreement testing problems to unique games constraint satisfaction problems, and showed existence of a solution via coboundary expansion (over some symmetric groups), following an idea by [41]. This lead to derandomized tests that come from bounded degree complexes [31, 9].

In discrete geometry, a classical result asserts that for any points in the plane, there exists a point that is contained in at least a constant fraction of the triangles spanned by the points [13] 222This can also be generalized to any dimension by replacing triangles with simplices [15].. In the language of complexes, for every embedding of the vertices of the complete -dimensional complex to the plane, such a heavily covered point exists. One can ask whether such a point exists even when one allows the edges in the embedding to be arbitrary continuous curves. If for every embedding of a complex to the plane (where the edges are continuous curves), there exists a point that lays inside a constant fraction of the triangles of , we say has the topological overlapping property. The celebrated result by Gromov states that the complete complex indeed has this property [52]. It is more challenging to prove this property for a given complex.

In [52], Gromov asked whether there exists a family of bounded-degree complexes with the topological overlapping property. Towards finding an answer, Gromov proved that every coboundary expander has this property. This question has been extremely difficult. An important progress is made in [43], where they defined cosystolic expansion, a relaxation of coboundary expansion, and proved that this weaker expansion also implies the topological overlapping property. The problem was eventually resolved in [60] for dimension and in [44] for dimension where the authors show that the [79] Ramanujan complexes are bounded-degree cosystolic expanders.

Coboundary expansion has other applications in algebraic topology as well. Linial, Meshulam, and Wallach defined coboundary expansion independently, initiating a study on high dimensional connectivity and cohomologies of random complexes [73, 85]. Lower bounding coboundary expansion turned out to be a powerful method to argue about the vanishing of cohomology in high dimensional random complexes [42, 55].

Known coboundary expanders

Most of the applications mentioned above call for complexes that are simultaneously coboundary expanders and non-trivial local spectral expanders. So far there are no known constructions of such complexes with arbitrarily small polynomial degree even in dimension 333By coboundary expanders, we are referring to complexes that have coboundary expansion over every group, not just .. Here we summarize some known results.

Spherical buildings of type are dense complexes that appear as the links of the Ramanujan complexes in [79]. The vertex set of a spherical building consists of all proper subspaces of some ambient vector space . [52] proved that spherical buildings are coboundary expanders (see also [77]). Geometric lattices are simplicial complexes that generalize spherical buildings. They were first defined in [71]. Most recently, [28] show that geometric lattices are coboundary expanders. [68] show that the -dimensional vertex links of the coset geometry complexes from [66] are coboundary expanders.

If we restrict our interest to coboundary expanders over a single finite group instead of all groups, bounded degree coboundary expanders that are local spectral expanders are known. [60] solved this for the case conditioned on a conjecture by Serre. [19] constructed such complexes for the unconditionally, and their idea was extended by [31, 9] to every fixed finite group, which lead to the aforementioned agreement soundness result. Unfortunately, their approach cannot be leveraged to constructing a coboundary expander with respect to all groups simultaneously.

We note that the coboundary expanders mentioned above are also local spectral expanders and that if we do not enforce the local spectral expansion property, coboundary expanders are trivial yet less useful.

To the best of our knowledge, all known constructions of -dimensional coboundary expanders (over all groups) that are also non-trivial local spectral expanders have vertex degree at least for some fixed , where is the number of vertices (see e.g. [79, 66]).444To the best of our knowledge, this constant is . The diameter of all of those complexes is at most a fixed constant, which implies this lower bound on the maximal degree. In this work, we give the first such construction with arbitrarily small polynomial degree.

Our result

We now state our main result in this subsection. For every integer , and constant we construct a family of -dimensional simplicial complexes called the Johnson complexes. For any -dimensional simplicial complex , we use the notation (the -skeleton of ) to denote the -dimensional simplicial complex consists of the vertex, edge, and triangle sets of .

Theorem 6 (Informal version of Theorem 86, Lemma 59, and Lemma 91).

For any integer , , the -skeletons of the Johnson complexes are -local spectral expanders and -coboundary expanders (over every group ).

Moreover, if , the -skeletons of s’ vertex links are -coboundary expanders .

Fix . For every satisfying , we briefly describe the -skeletons of . The underlying graph of is simply the Cayley graph where consists of vectors with even Hamming weight, and is the set of vectors with Hamming weight . Thus the number of vertices in the graph is while the vertex degree is 555Here is the binary entropy function.. The triangles are given by:

Observe that the link of every vertex is isomorphic to the classically studied Johnson graph of -subsets of , that are connected if their intersection is (for ). We will use some of the known properties of this graph in our analysis.

We additionally show that when , we can extend the above construction to get -dimensional simplicial complexes with the exact same vertex set, edge set, and triangle set as defined above. Moreover we show that for every integer , the link of an -face in the complex is also a non-trivial local spectral expander (Lemma 59).

Remark 7.

Let . We note that all vertices in are in edges and all vertices in the links of are in edges. We remark that using a graph sparsification result due to [21], we can randomly subsample the triangles in to obtain a random subcomplex which is still a local spectral expander with high probability but whose links have vertex degree . A detailed discussion can be found in Appendix F.

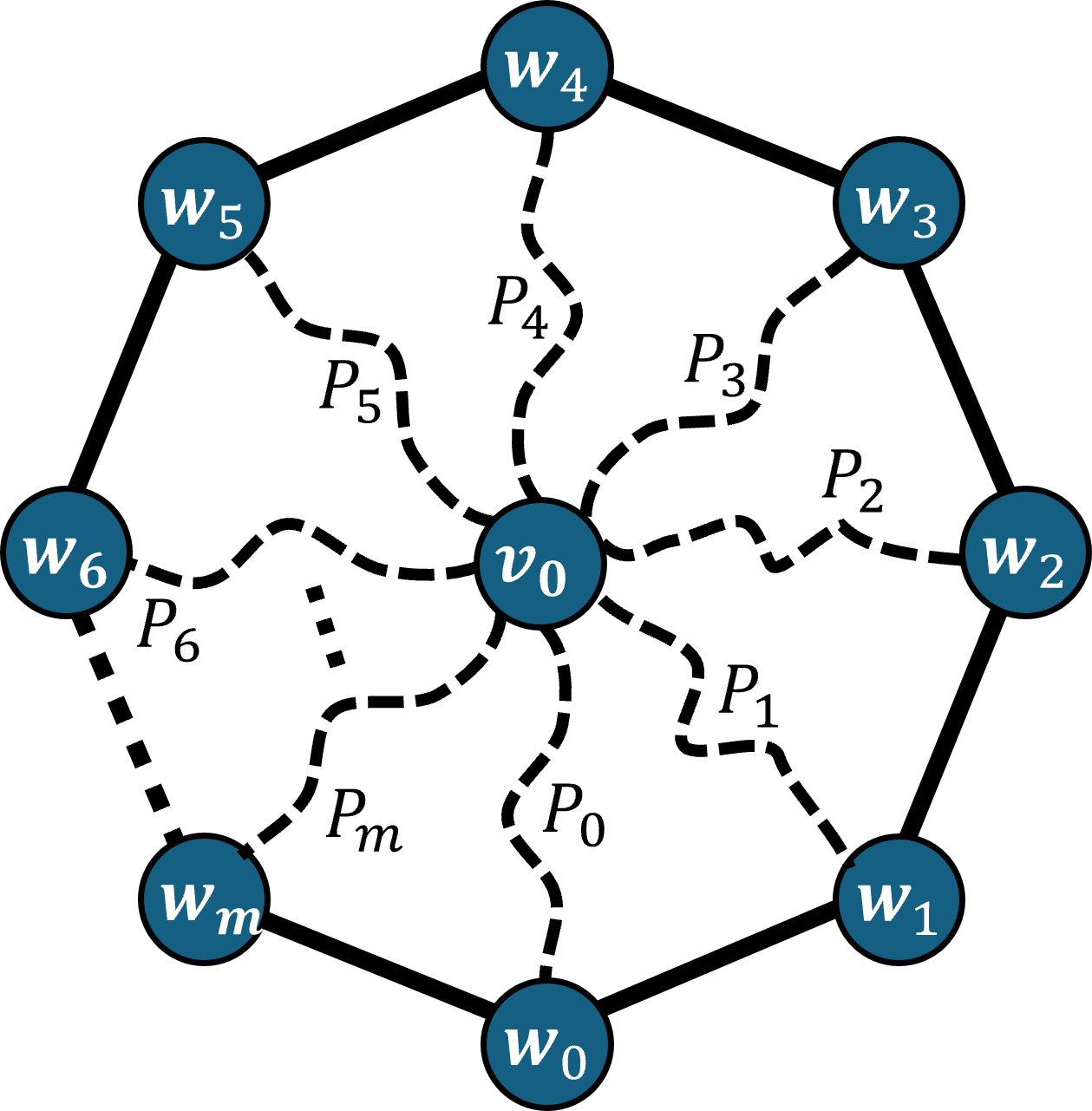

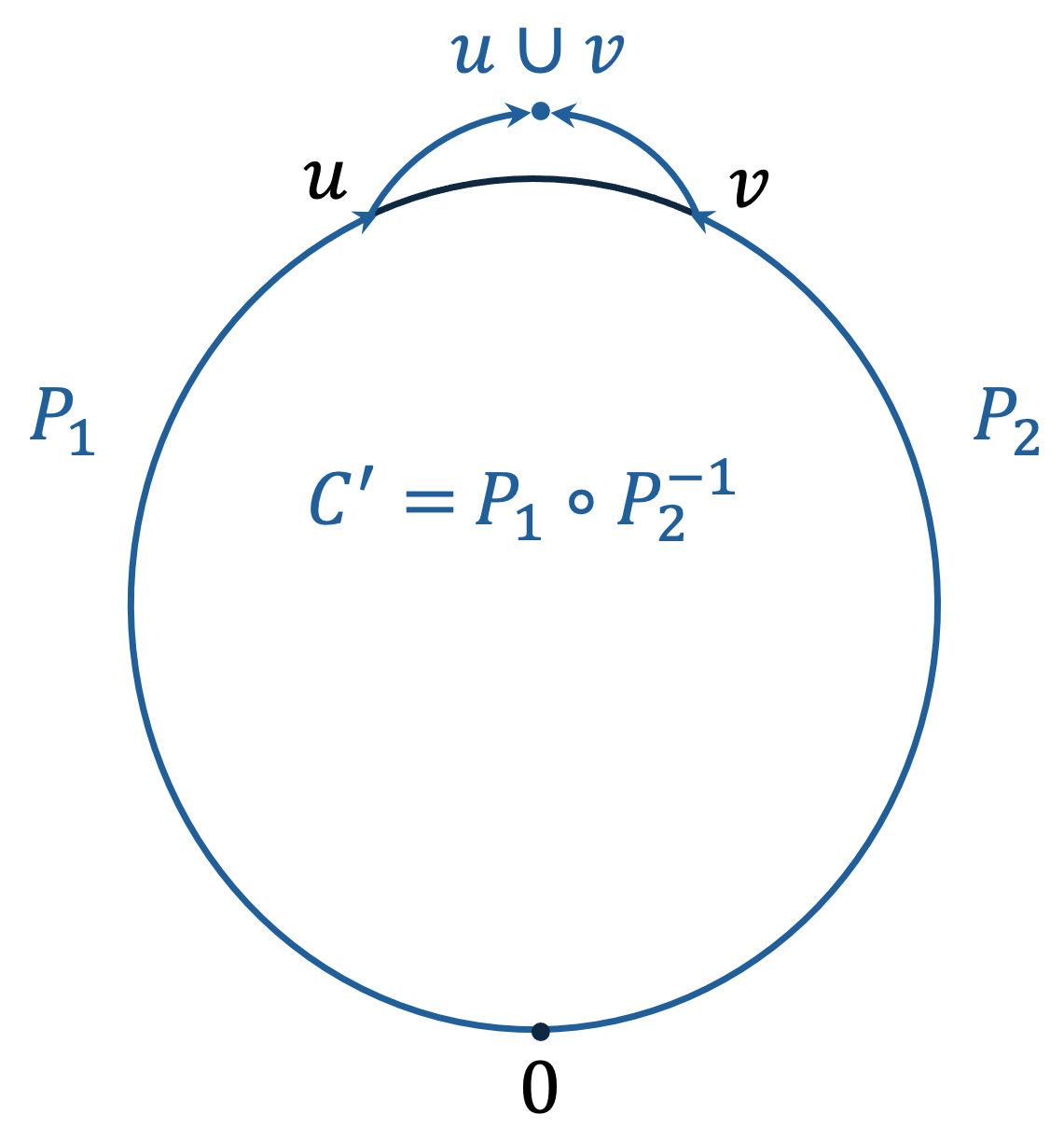

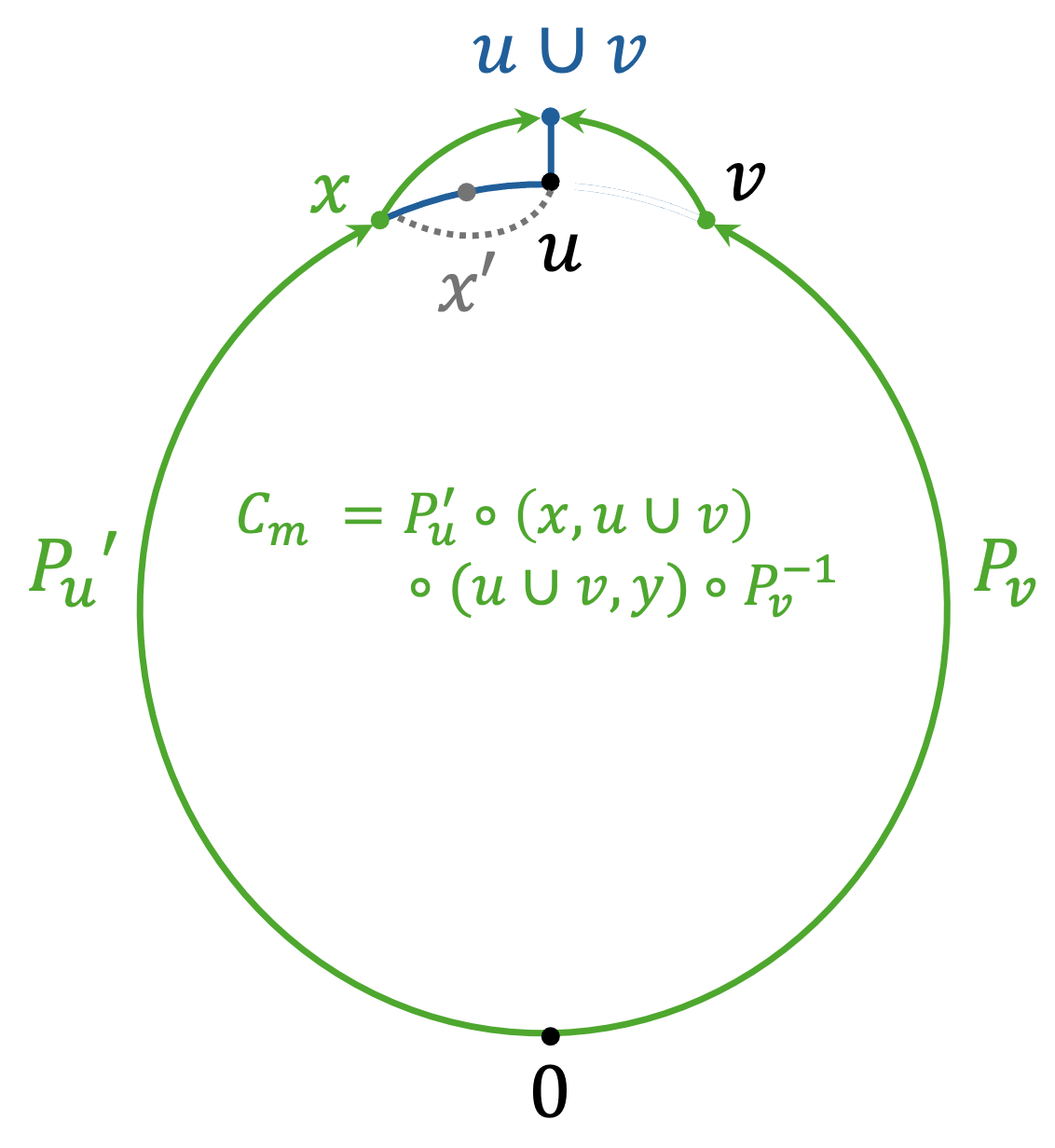

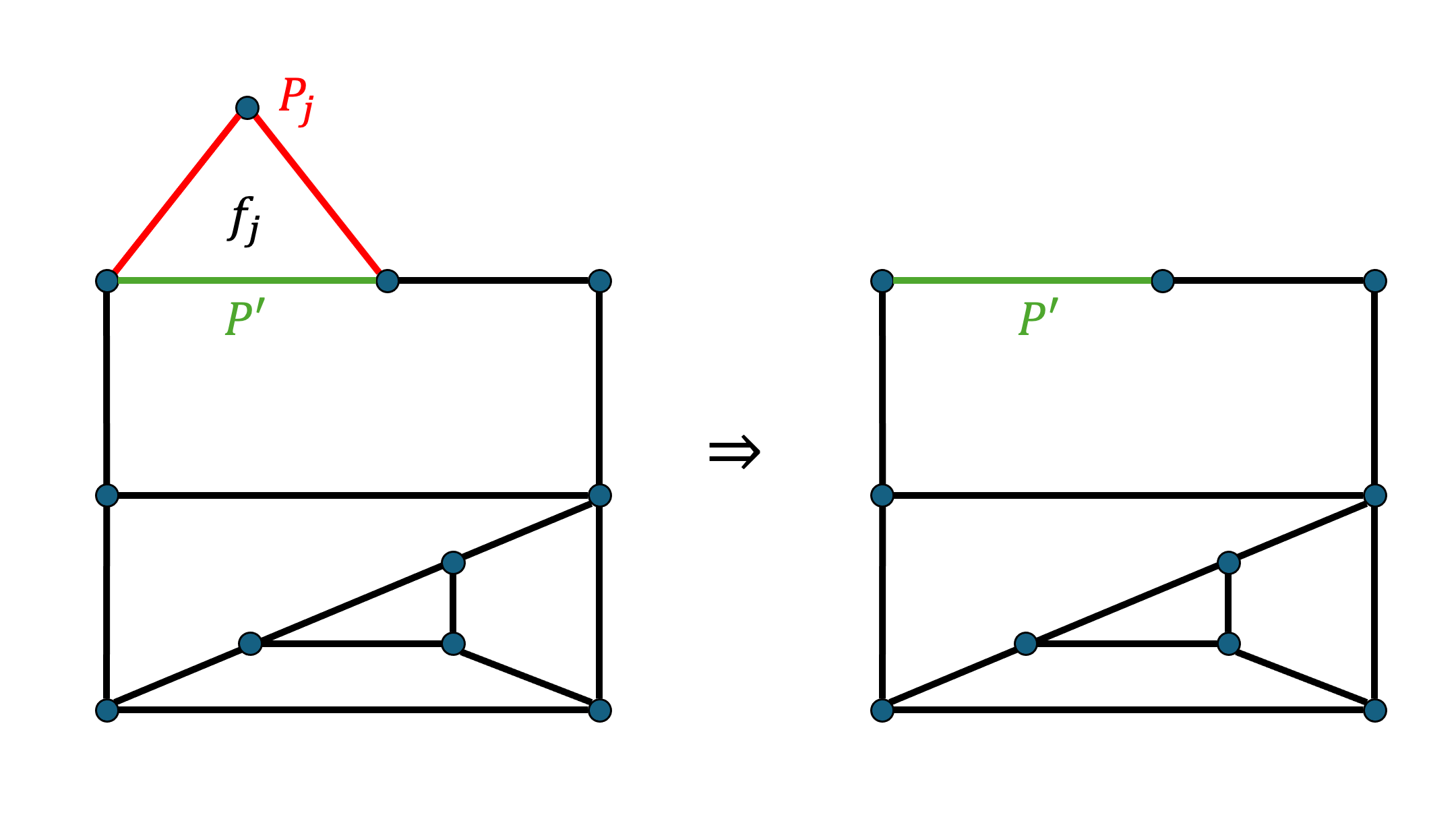

Before giving a high-level overview of the proof for Theorem 6, we describe a general approach for showing coboundary expansion called the cone method. Appearing implicitly in [52], it was used in a variety of works (see [77, 71, 68] as a partial list). We also take this approach to show that the Johnson complexes are coboundary expanders.

Coboundary expansion for links of the [50] Cayley local spectral expanders

Even though most of our results on coboundary expansion are for the Johnson complexes, we can also use the methods in this paper to prove that the vertex links of the [50] Cayley local spectral expanders are coboundary expanders. This implies that these Cayley local spectral expanders are cosystolic expanders (see [60, 28]), the relaxation of coboundary expansion mentioned above. This makes them useful for constructing agreement tests and complexes with the topological overlapping property. We note that [50] observed that these complexes are not coboundary expanders so this relaxation is necessary. A definition of cosystolic expansion and a detailed proof can be found in Section 6.3.

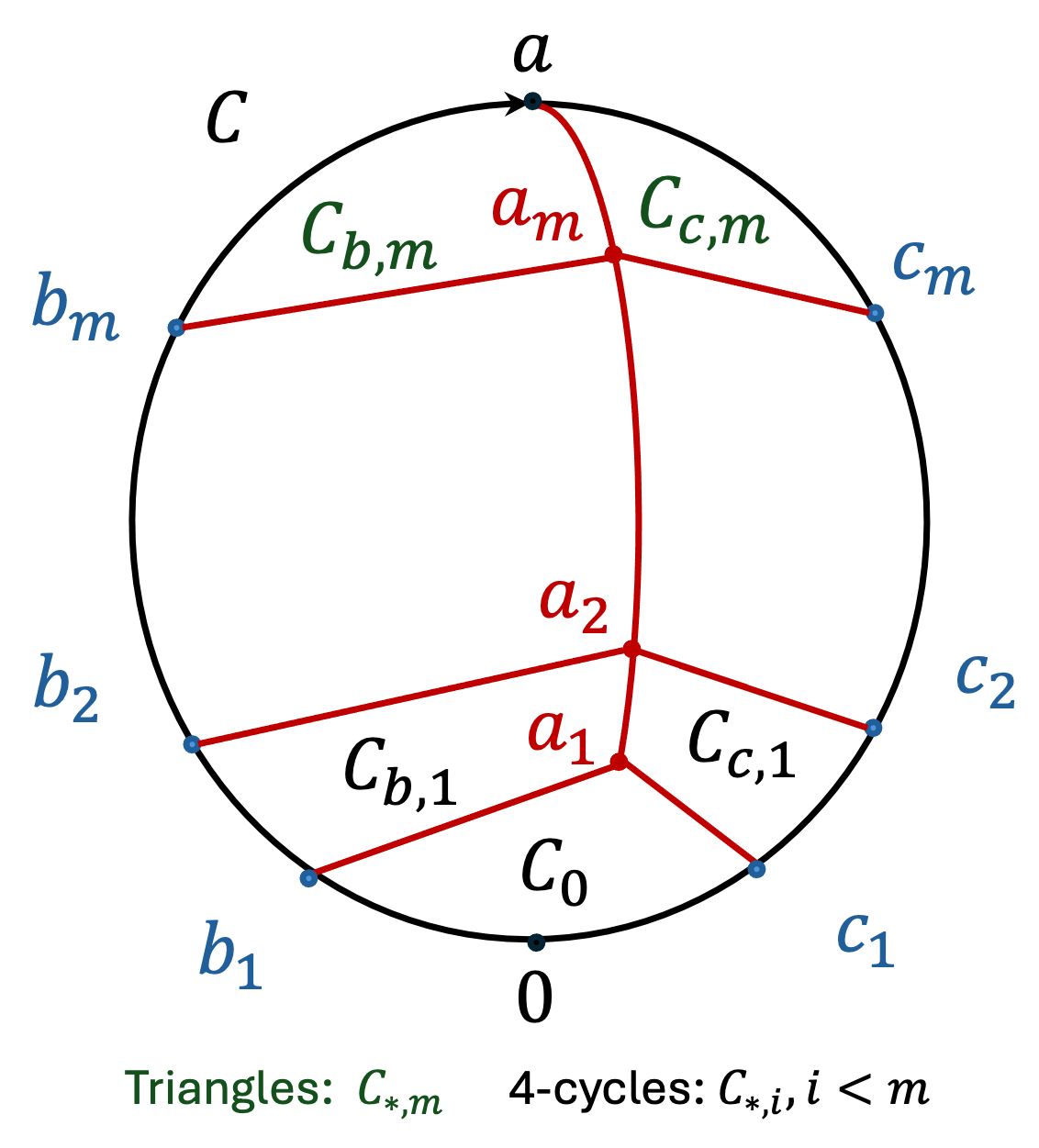

Cones and isoperimetry

Recall first the definition of -coboundary expansion above. We start with the code of edge functions which arise from vertex labelings by elements of some group . This implies that composing (in order) the values of along the edges of any cycle will give the identity element . This holds in particular for triangles, which are our “parity checks”.

We digress to discuss an analogous situation in geometric group theory, of the word problem for finitely presented groups. In this context, we are given a word in the generators of a group and need to check if it simplifies to the identity under the given relations. In our context the given word is the labeling of along some cycle, and a sequence of relations (here, triangles) is applied to successively “contract” this word to the identity. The tight upper bound on the number of such contractions in terms of the length of the word (called the Dehn function of the presentation), captures important properties of the group, e.g. whether or not it is hyperbolic. This ratio between the contraction size and the word length is key to the cone method.

One convenient way of capturing the number of contractions is the so-called “van Kampen diagram”, which simply “tiles” the cycle with triangles in the plane (all edges are consistently labeled by group elements). The van Kampen lemma ensures that if a given word can be tiled with tiles, then there is a sequence of contractions that reduce it to the identity [97]. The value in this viewpoint is that tiling is a static object, which can be drawn on the plane, and allows one to forget about the ordering of the contractions. We will make use of this in our arguments, and for completeness derive the van Kampen lemma in our context. Note that a bound on the minimum number of tiles (a notion of area) needed to cover any cycle of a given length (a notion of boundary length) can be easily seen as an isoperimetric inequality! The cone method will require such isoperimetric inequality, and the Dehn function gives an upper bound on it.

Back to -coboundary expansion. Here the given may not be in , and we need to prove that if it is “far” from , then the proportion of triangles which do not compose to the identity on will be at least . The cone method localizes this task. To use this method, one needs to specify a family of cycles (also called a cone) in the underlying graph of the complex. Gromov observed that the complex’s coboundary expansion has a lower bound that is inverse proportional to the number of triangles (in the complex) needed to tile a cycle from this cone [52]. This number is also referred to as the cone area. Thus, bounding the coboundary expansion of the complex reduces to computing the cone area of some cone. Needless to say, an upper bound on the Dehn function, which gives the worst case area for all cycles, certainly suffices for bounding the cone area of any cone.666We note that in a sense the converse is also true - computing the cone area for cones with cycles of “minimal” length suffices for computing the Dehn function. We indeed give such a strong bound.

Proof overview

It remains to construct a cone in and upper bound its cone area. We now provide a high level intuition for our approach. First observe that the diameter of the complex is . The proof then proceeds as follows.

-

1.

We move to a denser -dimensional complex whose underlying graph is the Cayley graph where consists of vectors with even Hamming weight, and is the set of vectors with even Hamming weight . The triangle set consists of all -cliques in the Cayley graph. We note that has the same vertex set as but has more edges and triangles than the Johnson complex.

-

2.

Then we carefully construct a cone in with cone area .

-

3.

We show that every edge in translates to a length- path in , and every -cycle (boundary of a triangle) in translates to a -cycle in which can be tiled by triangles from .

-

4.

We translate the cone in into a cone in by replacing every edge in the cycles of with a certain length- path in . Thus every cycle can be tiled by first tiling its corresponding cycle with triangles and then tiling each of the triangles with triangles. Thereby we conclude that has cone area .

Local spectral expansion and comparison to random geometric complexes

These Johnson complexes also have non-trivial local spectral expansion. While they do not achieve arbitrarily small -local spectral expansion, they do pass the barrier. From a combinatorial point of view, is an important threshold. For , there are elementary bounded-degree constructions of -local spectral expanders [22, 17, 23, 75, 49] but these fail to satisfy any of the local-to-global properties in HDXs. For all these constructions break. For this regime, all bounded degree constructions rely on algebraic tools. This is not by accident; below there are a number of high dimensional global properties suddenly begin to hold. For instance, a theorem by [47] implies that when all real cohomologies of the complex vanish. Another example is the “Trickle-Down” theorem by [87] which says that the expansion of the skeleton is non-trivial whenever . These are strong properties, and we do not know how produce them in elementary constructions. Therefore, when constructions of local spectral expanders are considered non-trivial only when .

To show local spectral expansion, we first note that the Johnson complex is symmetric for each vertex. Hence, it suffices to focus on the link of . Because the triangle set is well-structured, we can show that the link graph of is isomorphic to tensor products of Johnson graphs (Proposition 62). This allows us to use the theory of association schemes to bound their eigenvalues.

We also note that since all boolean vectors in lie on a sphere in centered at , the Johnson complex can be viewed as a geometric complex whose vertices are associated with points over a sphere and two vertices form an edge if and only if their corresponding points’ distance is . Previously [74] prove that randomized -dimensional geometric complexes are local spectral expanders. Comparing the two constructions, we conclude that Johnson complexes are sparser local spectral expanders than random geometric complexes.

1.3 The common structure between the two constructions

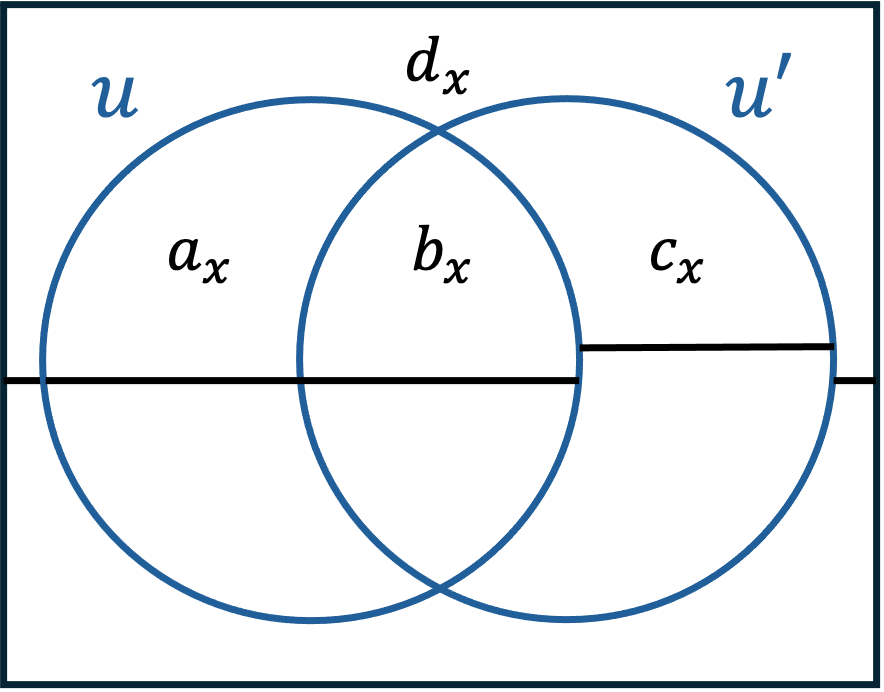

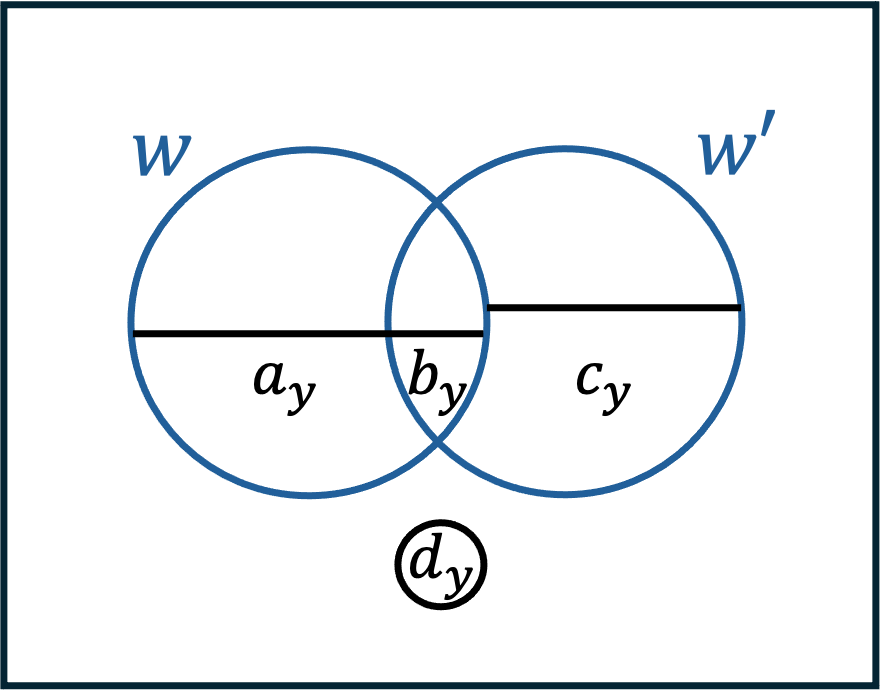

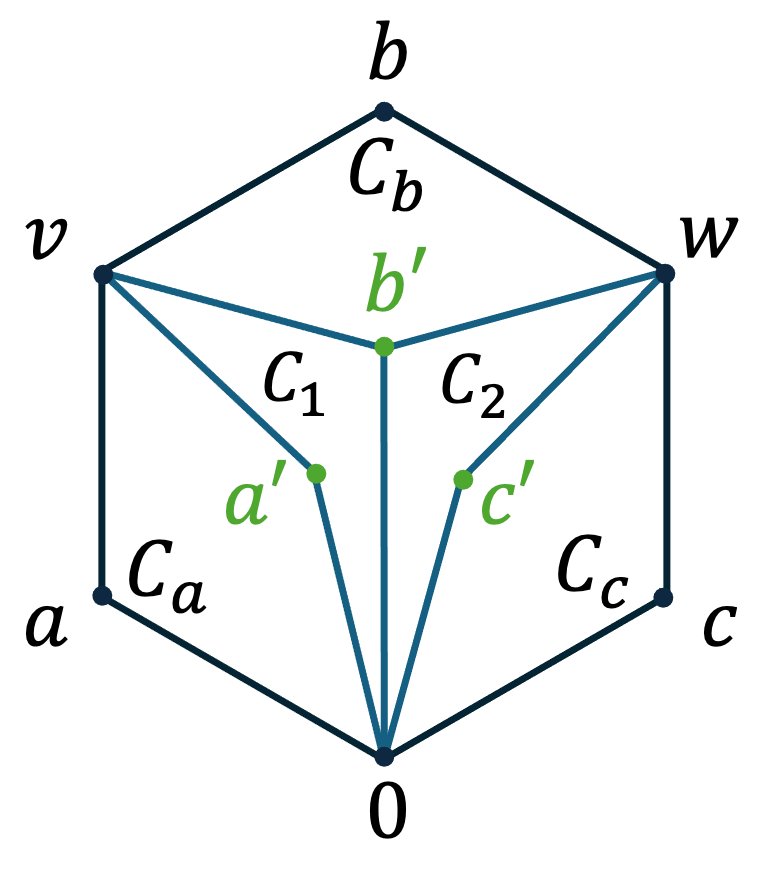

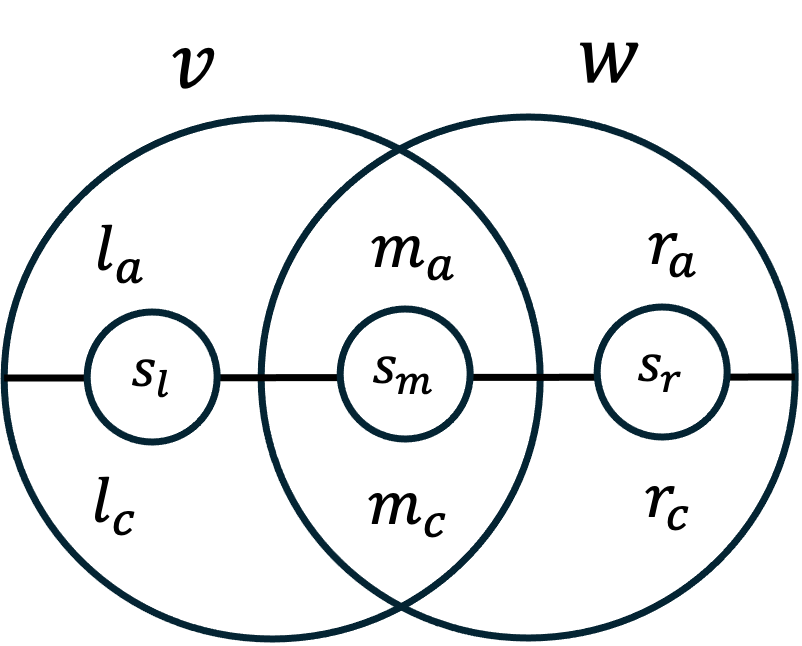

Taking a step back, we wish to highlight that the two constructions in our paper share a common structure. Both of these constructions can be described via “induced Hadamard encoding”. For a (not necessarily linear) function , the “induced” Hadamard encoding is the linear map given by

where and the sum is over . Our two constructions of Cayley HDXs take the generating set of the Cayley graph to be all bases of spaces , for carefully chosen functions as above; the choice determines the construction. We note that the “orthogonality” of vectors of the Hadamard code manifests itself very differently in the two constructions: in the Johnson complexes it can be viewed via the Hamming weight metric, while in the other construction it may be viewed via the matrix rank metric. We connect the structure of links in our constructions, to the restrictions of these induced Hadamard encodings to affine subspaces of . Using this connection we show that one can decompose the link into a tensor product of simpler graphs, which are amenable to our analysis. A special case of this observation was also used in the analysis of the complexes constructed by [50].

1.4 Open questions

Local spectral expanders

As mentioned above, we do not know how sparse a Cayley local spectral expander can be. To what extent can we close the gaps between the lower and upper bounds for various types of abelian Cayley HDXs? In particular, can we show that any nontrivial abelian Cayley expanders must have degree ? Conversely, can we construct Cayley HDXs where the degree is upper bounded by some polynomial in ? Limits of our iterated sparsification technique would also be of interest.

So far, the approach of constructing Cayley local spectral expanders over by sparsifying Grassmann posets yields the sparsest known such complexes. In contrast to the success of this approach, we have limited understanding of its full power. To this end, we propose several questions: what codes can be used in place of the Hadamard codes so that the sparsification step preserves local spectral expansion? Could this approach be generalized to obtain local spectral expanders over other abelian groups? As mentioned above, we know that the approach we use can not give a complex of polynomial degree in without introducing new ideas.

Coboundary expanders

Another fundamental question regards the isoperimetric inequalities we describe above. In this paper and many others, one uses the approach pioneered by Gromov [52] that applies isoperimetric inequalities to lower bounding coboundary expansion. A natural question is whether an isoperimetric inequality is also necessary to obtain coboundary expansion. An equivalence between coboundary expansion and isoperimetry will give us a simple alternative description for coboundary expansion. A counterexample to such a statement would motivate finding alternative approaches for showing coboundary expansion.

We call our family of -dimensional simplicial complexes that are both local spectral expanders and -dimensional coboundary expanders Johnson complexes. This construction can be generalized to yield families of -dimensional Johnson complexes that are still local spectral expanders. However, it remains open whether they are also coboundary expanders in dimension .

1.5 Organization of this paper

In Section 2, we give preliminaries on simplicial complexes and local spectral expansion. We also define Grassmann posets–the vector space analogue for HDXs–which we use in our constructions. Other elementary backgrounds on graphs and expansion are given there as well.

In Section 3, we construct the subpolynomial degree Cayley complexes, proving Theorem 2. Most of this section discusses Grassmann posets instead of Cayley HDXs, but we describe the connection between the two given by [50] in this section too. We defer some of the more technical expansion upper bounds to Appendix D.

In Section 4 we construct the Johnson complexes which are both coboundary expanders and local spectral expanders. In this section we prove that they are local spectral expanders, leaving coboundary expansion to be the focus of the next two sections. We also give a detailed comparison of this construction to the one in [74], and discuss how to further sparsify our complexes by random subsampling.

In Section 5 we take a detour to formally define coboundary expansion and discuss its connection to isoperimetric inequalities. In this section we also give our version of the van-Kampen lemma, generalized to the setting of coboundary expansion. This may be of independent interest, as the van-Kampen lemma simplifies many proofs of coboundary expansion.

In Section 6 we prove that the Johnson complexes are coboundary expanders (Theorem 86), and local coboundary expanders (Theorem 89). We also prove that links in the construction of [50] are coboundary expanders.

In Section 7 we show that both the Johnson complex and the matrix complexes are special cases of a more general construction. In this section we show that the two complexes have a similar link structure, which is necessary for analyzing the local spectral expansion in both Cayley HDXs.

The appendices contain some of the more technical claims for ease of reading. In Appendix I we also give a lower bound on the degree of Cayley local spectral expanders, based on the degree of the link.

2 Preliminaries

We denote by .

2.1 Graphs and expansion

Let be a weighted graph where are the weights. In this paper we assume that is a probability distribution that gives every edge a positive measure. When is clear from context we do not specify it. We extend to a measure on vertices where . When is connected this is the stationary distribution of the graph. The adjacency operator of the graph sends to with . We denote by the usual inner product, and recall the classic fact that is self adjoint with respect to this inner product. We denote by its second largest eigenvalue in absolute value. Sometimes we also write instead of . It is well-known that .

Definition 8 (Expander graph).

Let . A graph is a -expander if .

This definition is usually referred to as -two-sided spectral expansion. We note that the one-sided definition where one only gets an upper bound on the eigenvalues of is also interesting, but is out of our paper’s scope (see e.g. [56]).

When is a bipartite graph, we also have inner products on each side defined by , with respect to restricted to (and similarly to real valued functions on ). The left-to-right bipartite graph operator that sends to with (resp. right-to-left bipartite operator). It is well known that is equal to the second largest eigenvalue of the non bipartite adjacency operator defined above (not in absolute value). We also denote this quantity (it will be clear that whenever is bipartite this is the expansion parameter in question).

Definition 9 (Bipartite expander graph).

Let . A bipartite graph is a -bipartite expander if .

We use the following standard auxiliary statements.

Claim 10.

Let be adjacency matrices of two graphs over the same vertex set and have the same stationary distribution. Let . Then .

We will usually instantiate this claim with being the complete graph with self loops, i.e. the edge distribution is just two independent choices of vertices. This has so we get this corollary.

Corollary 11.

Let be an adjacency matrix of a weighted graph .

-

1.

.

-

2.

In particular, if is uniform over a set with then .

We also define a tensor product of graphs.

Definition 12.

Let be graphs. The tensor product is a graph with vertices and edges if and . The measure on is the product of the original graphs’ measures.

We record the following well-known fact.

| (2.1) |

In fact, if are the eigenvalues of and are the eigenvalues of , then the eigenvalues of are the multiset .

We also need the notion of a double cover.

Definition 13 (Double cover).

Let be a graph and be the graph that contains a single edge. The double cover of is the graph . Explicitly, its vertex set is and we connect if and .

The following observation is well known.

Observation 14.

A graph is a -expander if and only if its double cover is a -bipartite expander.

2.1.1 A local to global lemma

We will use the following “local-to-global” proposition due to [81, Theorem 1.1]. We prove it in Appendix A to be self contained. This lemma is an application of the Garland method [47], which is quite common in the high dimensional expander literature (see e.g. [87]). Let us set it up as follows.

Let be a graph where is the distribution over edges. A local-decomposition of is a pair as follows. is a set such that are a subgraph of and is a weight distribution over . is a distribution over . Assume that is sampled according to the following process:

-

1.

Sample .

-

2.

Sample .

The local-to-global graph is the bipartite graph on and whose edge distribution is

-

1.

Sample .

-

2.

Sample a vertex 777i.e. is sampled with probability ..

-

3.

Output .

Note that the probability of under and that under (conditioned on the side) are the same.

Lemma 15 ([81, Theorem 1.1]).

Let be as above. Let and . Then .

We will also use the one-sided version of [81, Theorem 1.1]. For a formal proof see [26, Lemma 4.14]. We formalize it in our language.

Lemma 16.

Let be a bipartite graph. Let be a local decomposition. Let be the restriction of to the vertices of (resp. ). Then

2.2 Vector spaces

Unless stated otherwise, all vector spaces are over . For a vector space and subspaces we say their intersection is trivial if it is equal to . We denote the sum . If the sum is direct, that is the intersection of and is trivial, we write . We shall use this notation to refer both to the statement that and to the object . We need the following claim.

Claim 17.

Let be a vector space. Let be three subspaces such that . Then .

Proof.

Let where . Thus which by assumption means that and . As it follows that and the intersection is trivial.

2.3 Grassmann posets

In this paper all vector spaces are with respect to unless otherwise stated. Let be a vector space. The Grassmann partially ordered set (poset) is the poset of subspaces of ordered by containment. When is clear from the context we sometimes just write .

Definition 18 (Grassman Subposet).

Let , be an -dimensional vector space, and . An -dimensional Grassmann subposet is a subposet of such that

-

1.

every maximal subspace in has dimension ,

-

2.

if and , then .

We sometimes write . The -dimensional subspaces in are denoted . The vector space is sometimes called the ambient space of .999formally there could be many such spaces, but in this paper we usually assume that is spanned by the subspaces in .

A measured subposet is a pair where is an -dimensional Grassmann subposet and is a probability distribution over -dimensional subspaces such that . We extend to a distribution on flags of subspaces by sampling and then sampling a flag uniformly at random. Sometimes we abuse notation and write where is the marginal over said flags. We sometimes say that is measured without specifying for brevity. When we write we mean that the distribution is uniform.

The containment graph is a bipartite graph with sides and whose edges are such that . There is a natural distribution on the edges of this graph induced by the marginal of of the flag above.

Let be an -dimensional Grassmann poset. For the Grassmann poset is the poset that contains every subspace in of dimension .

A link of a subspace is denoted . It consists of all subspaces in the poset that contain , namely . If is measured, the distribution over is the distribution conditioned on the sampled flag having . We note that if is the ambient space of then is (isomorphic to) a Grassmann subposet with ambient space by the usual quotient map.

For any -dimensional subposet , any , , and , we denote . The underlying graph of the link is the following graph (which we also denote by when there is no confusion). The vertices are

and the edges are

We note that sometimes the link graph is defined by taking the vertices to be and the edges to be all such that . These two graphs are closely related:

Claim 19.

Let be a Grassmann poset. Let be a subspace and let be the underlying graph of its link as defined above. Let be the graph whose vertices are and whose edges are such that . Then .

Proof.

Let be the complete graph over vertices including self loops. We prove that . As it will follow by (2.1) that . For every we order the vectors in according to some arbitrary ordering . Our isomorphism from to sends where and the in the subscript is according to the ordering of . It is direct to check that this is a bijection.

Moreover, in if and only if . This span is equal to . Therefore if and only if the left coordinates of the images and are connected in . As is an edge in the complete graph with self loops, it follows that is in if and only if the corresponding images are connected in . The claim follows.

Thus we henceforth use our definition of a link.

We say that such a subposet is a -expander if for every and the graph is a -two-sided spectral expander. We also state the following claim, whose proof is standard.

Claim 20.

The poset is a -expander.

Sketch.

Let . By Claim 19, to analyze the expansion of the link of , it suffices to consider the graph whose vertices are the subspaces and whose edges are if . In this case, it is easy to see that this graph is just the complete graph (without self loops) with vertices. The claim follows by the expansion of the complete complex.

We also need the following claim.

Claim 21 ([30]).

Let be a Grassmann poset that is -expander. Then for every and , the containment graph between and is a -bipartite expander.

This claim was proven [30] without the explicit constant . Hence we reprove it in Appendix H with the constant.

2.4 The matrix domination poset

In this section we give preliminaries on the matrix domination posets. These posets are essential for proving that the HDX construction given in Section 3 sufficiently expands. They were a crucial component in the construction of abelian HDXs in [50]. We believe that the matrix domination posets are also interesting on their own right, being another important family of posets to be studied as those in [30, 69].

Let be matrices over for some fixed . Let . The partial order on this set is the domination order.

Definition 22 (Matrix domination partial order).

Let . We say that dominates if . In this case we write .

Another important notion is the direct sum.

Definition 23 (Matrix direct sum).

We say that two matrices and have a direct sum if . In this case we write .

We note that this definition is equivalent to saying that . We also clarify that when we write , this refers both to the object , and is a statement that . This is similar to the use of when describing a sum of subspaces ; the term refers both to the sum of subspaces, and is a statement that . We will see below that is equivalent to , so we view this notation as a natural analogue of what happens in subspaces.

In Section 3 we define and analyze expansion of graphs coming from Hasse diagrams of dominance orders. These graphs appear in the analysis of the construction in Section 3. Before doing so, let us state some preliminary properties of this partial order, all of which are proven in Appendix C. More specifically, we will show that this is a valid partial order (as already observed in [50]), discuss the properties of pairs of matrices that have a direct sum, and study structures of intervals in this poset (see definition below).

Claim 24.

The pair is a partially ordered set.

We move on to characterize when , that is, when the sum of and is direct. This will be important in the subsequent analyses.

Claim 25.

For any matrices the following are equivalent:

-

1.

, i.e. .

-

2.

The spaces and are trivial.

-

3.

The spaces and .

-

4.

There exists a decomposition and such that the disjoint unions and are independent sets. Indeed, the two sets span and respectively.

We will also use this elementary (but important) observation throughout.

Claim 26 (Direct sum is associative).

Let . If and have a direct sum, and has a direct sum with , then has a direct sum with . Stated differently, if and , then . The same holds for any other permutation of and .

Henceforth, we just write to indicate that for any three distinct .

Finally, we will analyze subposets of that correspond to local components which are analogous to the links in simplicial complexes and Grassmann posets.

Definition 27 (Intervals).

Let be two matrices. The interval between and is the subposet of induced by the set

Similar to the above, we also denote by the matrices in of rank . When we just write .

The main observation we need on these intervals is this.

Proposition 28.

Let be of ranks . The subposets and are isomorphic by . This isomorphism has the property that

Another subposet we will be interested in is the subposet of matrices that are “disjoint” from some . That is,

Definition 29.

Let . The subposet is the subposet of consisting of all .

We note that by Claim 25 .

Claim 30.

Let . Then is an order preserving isomorphism.

2.5 Simplicial complexes and high dimensional expanders

A pure -dimensional simplicial complex is a hypergraph that consists of an arbitrary collection of sets of size together with all their subsets. The -faces are sets of size in , denoted by . We identify the vertices of with its singletons and for good measure always think of . The -skeleton of is . In particular the -skeleton of is a graph. We denote by the diameter of the graph underlying .

2.5.1 Probability over simplicial complexes

Let be a simplicial complex and let be a density function on (that is, ). This density function induces densities on lower level faces by . We omit the level of the faces When it is clear from the context, and just write or for a set .

2.5.2 Links and local spectral expansion

Let be a -dimensional simplicial complex and let be a face. The link of is the -dimensional complex

For a simplicial complex with a measure , the induced measure on is

Definition 31 (Local spectral expander).

Let be a -dimensional simplicial complex and let . We say that is a -local spectral expander if for every , the link of is connected, and for every it holds that .

We stress that this definition includes connectivity of the underlying graph of , because of the face . In addition, in some of the literature the definition of local spectral expanders includes a spectral bound for all and . Even though the definition we use only requires a spectral bound for links of faces , it is well known that when is sufficiently small, this actually implies bounds on for all and (see [87] for the theorem and more discussion).

Cayley Graphs and Cayley Local Spectral Expanders

Definition 32 (Cayley graph over ).

Let . A Cayley graph over with generating set is a graph whose vertices are and whose edges are . We denote such a graph by .

Note that we can label every edge in by its corresponding generator. A Cayley complex is a simplicial complex such that is a Cayley graph (as a set) and such that all edges with the same label have the same weight. We say that is a -two-sided (one-sided) Cayley local spectral expander if it is a Cayley complex and a -two-sided (one-sided) local spectral expander.

3 Subpolynomial degree Cayley local spectral expanders

In this section we construct Cayley local spectral expander with a subpolynomial degree, improving a construction by [50].

Theorem 33.

For every and integer there exists an infinite family of -dimensional -local spectral expanding Cayley complexes with . The degree is upper bounded by .

Golowich proved this theorem for .

As in Golowich’s construction, we proceed as follows. We first construct an expanding family of Grassmann posets over (see Section 2.3), and then we use a proposition by [50] (Proposition 37 below) to construct said Cayley local spectral expanders. The main novelty in this part of the paper is showing that this idea can be iterated, leading to a successive reduction of the degree.

The following notation will be useful later.

Definition 34.

Let be integers and . We say a Grassmann poset is a -poset if is a -dimensional -local spectral expander whose ambient space is , with .

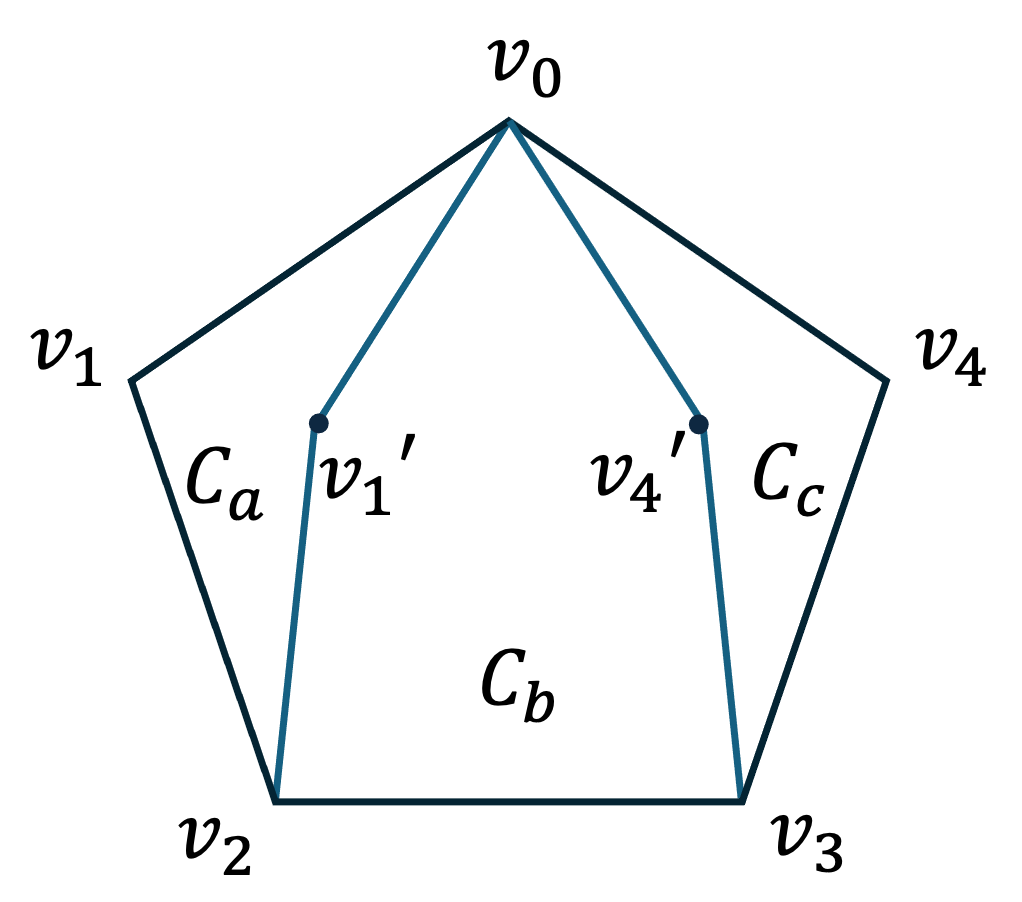

[50] introduces a general approach that turns a Grassmann poset into a simplicial complex called the basification of . The formal definition of this complex is as follows.

Definition 35.

Given a -dimensional Grassmann poset . Its basification is a -dimensional simplicial complex such that for every

In [50] it is observed that the local spectral expansion of is identical to that of . Golowich constructs a Cayley complex whose vertex links are (copies of) the basification. The construction is given below.

Definition 36.

Given the basification of a -dimensional Grassmann complex over the ambient space , define the -dimensional abelian complex such that and for

We observe that the underlying graph is and therefore its degree is .

Proposition 37 ([50]).

Let be a -dimensional Grassmann poset over that is -expanding. Let be the abelian complex given by its basification. Then is a -local spectral expander. In addition, the underlying graph of is a -expander.

Thus our new goal is to prove the following theorem about expanding posets.

Theorem 38.

For every , and there exists infinite family of integers and of -posets .

This is sufficient to prove Theorem 33:

Proof of Theorem 33, assuming Theorem 38.

Let be an infinite family of in Theorem 38 for such that . Let be their basifications. By Proposition 37. The complexes have ambient subspace , degree , and expansion .

3.1 Expanding Grassmann posets from matrices

Let be a -poset. We now describe how to construct from it a -poset where , , and when . In other words, we describe a transformation

After this, we will iterate this construction, this time taking to be the input, producing an even sparser poset etc., as we illustrate further below.

Without loss of generality we identify the ambient space of with and set the ambient space of to be matrices (). The -dimensional subspaces of are

| (3.1) |

In words, these are all matrices whose rank is so that their row and column spaces are in . As we can see, and and therefore . We postpone the construction higher dimensional faces (which determine the dimension ) and the intuition behind the expansion of for later. Let us first show how an iteration of this construction will construct sparser Grassmann posets.

This single step can be iterated any constant number of times, starting from a high enough initial dimension . Let us denote by the operator that takes as input one Grassmann poset and outputs the sparser one as above. Fix and fix a target dimension . Let and let be sufficiently large. We set to be such that (which is a -poset for and that goes to with ) and . The final poset is a

Here , , and when .

The above construction works for any large enough . This gives us an infinite family of Grassmann complexes whose ambient dimension is and whose degree is (where ). This infinite family proves Theorem 38.

3.1.1 The construction of the Grassmann poset

We are ready to give the formal definition of the poset. We construct the top level subspaces, and then take their downward closure to be our Grassmann poset. Every top level face is going to be an image of a “non-standard” Hadamard encoding. The “standard” Hadamard could be described as follows. Let be a vector space, and let be indexed by the vectors in . That is where is the the standard basis vector that is in the -th coordinate and elsewhere. With this notation the “standard” Hadamard encoding maps

where is the standard bilinear form. A “non-standard” Hadamard encoding in this context is the same as above, only replacing the standard vectors with another basis for a subspace, represented by matrices. It is defined by a set satisfying some properties described formally below. The encoding is the linear map ,

similar to the formula above. We continue with a formal definition.

We start with , a -poset (and assume without loss of generality that is a power of ) and let 101010Although we define this poset to the highest dimension possible. Our construction will eventually take a lower dimensional skeleton of it..

Definition 39 (Admissible function).

Consider a -poset with . Let , a (not necessarily linear) function is admissible to if:

-

1.

For every , .

-

2.

The sum matrix is a direct sum of the ’s, that is,

-

3.

The spaces .

We remark that for the construction we only need to consider admissible functions for , that is, functions , where , and . The other admissible functions will be used later in the analysis (see Section 7).

We denote by the set of admissible functions . The Hadamard encoding of a function is the linear function given by

Observe that is an isomorphism so the image of is an -dimensional subspace. This of course means that every subspace is mapped isomorphically to a subspace of the same dimension . Thus, the Grassmann poset is isomorphic to the complete Grassmann .

Definition 40 (Matrix poset).

The poset is a -dimensional poset . We denote by the operator that takes and produces as above.

In other words, is clearly a Grassmann poset and it is a union of for all admissible mappings .

The admissible functions on level give us a natural description for :

Claim 41.

Let be as in Definition 40. Then

We prove this claim in Appendix B.

This poset also comes with a natural distribution over -subspaces is the follows.

-

1.

Sample two -dimensional subspaces independently according to the distribution of .

-

2.

Sample uniformly an admissible function such that the matrix has and .

-

3.

Output .

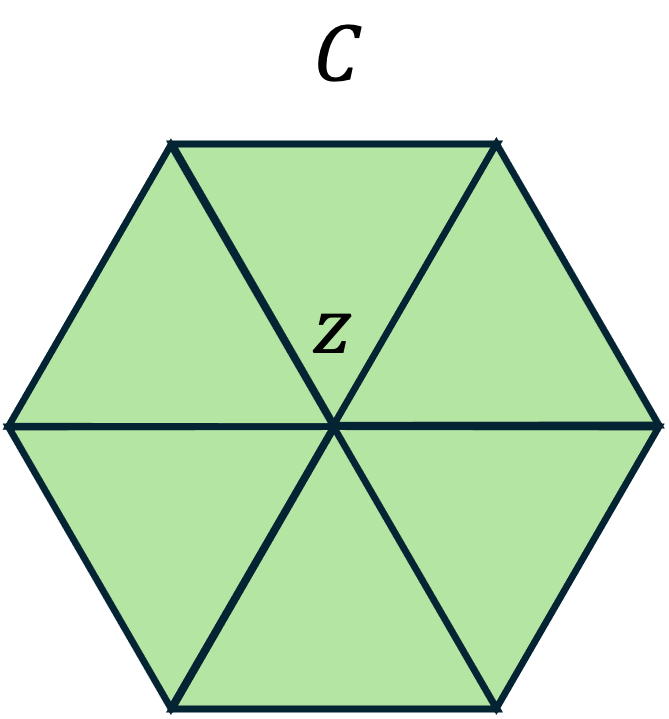

We remark that we can generalize this construction by changing the definition of “admissible” to other settings. One such generalization that replaces the rank function with the hamming weight results in the Johnson poset which we describe in Section 4. We explore this more generally in Section 7.

We finally reach the main technical theorem of this section, Theorem 42. This theorem proves that if is an expanding poset, then so is a skeleton of . Using this theorem inductively is the key to proving Theorem 38.

Theorem 42.

There exists a constant such that the following holds. Let , and be an integer. Let and let . If is a -poset then the -skeleton of , namely , is a -poset where and .

Proof of Theorem 38, assuming Theorem 42.

Fix and without loss of generality for some integer . Changing variables, it is enough to construct a family of -posets for an infinite sequence of integers , such that the constant in the big only depends on and but not on . Then by setting gives us the result 111111Technically this only gives a family -posets. But can be arbitrarily small. Thus for any , constructing -posets is also a construction of -posets, since eventually for any constant independent of .

We prove this by induction on for all and simultaneously. For we take the complete Grassmanns, namely and the family that are -posets for sufficiently large (the first three parameters are clear. By Claim 20 the links expansion of the complete Grassmann is which goes to with , hence for every and large enough they are -expanders).

Let us assume the induction hypothesis holds for and prove it for . Fix and . By Theorem 42, there exists some and such that if we can find a family of -posets , then the posets are -posets. By the induction hypothesis (applied for and ) such a family indeed exists. The theorem is proven.

3.2 A Proof Roadmap for a single iteration of - Theorem 42

The rest of this section is devoted to proving Theorem 42, and mainly to bounding the expansion of links in . Recall the notation to be the poset of matrices with the domination relation.

We fix the parameters and . Without loss of generality we take to be large enough so that (this requires that for the constant in Theorem 42).

Recall the partial order defined in Section 2.4 on matrices where we write for two matrices if .

Fix , and let . Let be a subspace (where ). In the next two subsections we describe the two steps of the proof: decomposition and upper bounding expansion.

3.2.1 Step I: decomposition

We will show that a link of a subspace decomposes to a tensor product of simpler components.

For this we must define two types of graphs, relying on the domination relation of matrices and the direct sum.

Definition 43 (Subposet graph).

Let and let . The graph has vertices and edges such that there exists such that .

For any two of rank , the graphs so we denote this graph by when we do not care about the specific matrix.

Definition 44 (Disjoint graph).

Let and let . The graph has vertices and edges such that there exists such that we have .

For any and two , the graphs so we denote this graph by when we do not care about the specific matrix.

The first component in the decomposition in the link of is the subposet graph. The second component is the sparsified link graph. This graph depends on , unlike the previous component. In particular, the matrices in this component will have row and column spaces in . For the rest of this section we fix . Let be any matrix whose row span and column span are and . Note that both and have dimension (that is, ). The following definition will only depend on these row and column spaces, but it will be easier in the analysis to give the definition using this matrix .

Definition 45 (Sparsified link graph).

The graph is the graph with vertex set

The edges are all such that there exists a matrix such that , and are an edge in .

Formally, the distribution over edges in the sparsified link graph is the following.

-

1.

Sample and according to their respective distributions.

-

2.

Uniformly sample a matrix such that and .

-

3.

Sample an edge in .

We can now state our decomposition lemma:

Lemma 46.

.

This lemma is proven in Section 3.3.

3.2.2 Step II: upper bounding expansion

It remains to show that and are expander graphs. We prove the following two lemmas.

Lemma 47.

There exists an absolute constant such that for all .

In particular, there is an absolute constant such that the following holds: Let . If and , then

Lemma 48.

There exists a constant such that the following holds. Let be a -poset for , and let where and . Then .

In particular, there is an absolute constant such that the following holds: Let . If , and , then

The constant in Theorem 42 is chosen to be . We now give an high level explanation of the proofs of Lemma 47 and Lemma 48, and afterwards we use them to prove Theorem 42.

The graph does not depend on and the proof of Lemma 47 relies only on the properties of the matrix domination poset. Therefore it is deferred to Appendix D. In a bird’s-eye view, we use Lemma 16, the decomposition lemma, to decompose the graph into smaller subgraphs, and show that every subgraph is close to a uniform subgraph.

We prove Lemma 48 in Section 3.4. Its proof also revolves around Lemma 16, only this time the subgraphs in the decomposition come from (pairs of) subspaces in . Observe that in the first step in sampling an edge in Definition 45, one first samples a pair . The subgraph induced by fixing and in the first step is isomorphic to a graph that contains all rank matrices in for some , that are a direct sum with some matrix (independent of ). Thus every such subgraph is analyzed using the same tools and ideas as in the proof of Lemma 47.

In order to apply Lemma 16, we also need to show that the decomposition graph expands (see its definition in Section 2.1.1). This is the bipartite graph where one side is the vertices in , and the other side are the pairs of subspaces . A matrix is connected to if and .

To analyze this graph, we reduce its expansion to the expansion of containment graphs in . We make the observation that we only care about and , since if and have the same rowspan and colspan, then they also have the same neighbors. To say this in more detail, where is some complete bipartite graph, and replaces the matrices in with (here every matrix is projected to in ). The graph itself is a tensor product of the containment graph of and . Fortunately, as we discussed in Section 2.3, this graph is an excellent expander, which concludes the proof.

Given these components we prove Theorem 42.

Proof of Theorem 42.

Let us verify all parameters . The dimension is clear from the construction.

The size since it is enough to specify vectors to construct a rank matrix (of course we have also double counted since many decompositions correspond to the same matrix). Thus .

Let us verify that the ambient space is dimensional, or in other words that really spans all matrices (recall that we assumed without loss of generality that ’s ambient space is ). For this it is enough to show that there are linearly independent matrices inside . Let be linearly independent vectors. The ambient space of is . Let us show that is spanned by the matrices inside . Indeed, choose some subspaces such that . Then contains all rank matrices in whose row and column spans are inside . These matrices span all matrices whose row and column spans are in and in particularly they span .

Finally let us show that all links are -expanders. Fix . By Lemma 46

so . Both are -expanders by Lemma 47 and Lemma 48 when the conditions of the theorem are met.

To sum up, is a -expanding poset.

Remark 49.

For the readers who are familiar with [50], we remark that contrary to that paper, we do not use the strong trickling down machinery developed in [69]. [69] show that expansion in the top-dimensional links trickles down to the links of lower dimension (with a worse constant, but still sufficient for our theorem). A priori, one may think that using [69] could simplify the proof. However, in our poset all links have the same abstract structure, so we might as well analyze all dimensions at once. Moreover, one observes that in many cases the bound on the expansion of these posets actually improves for lower dimensions.

3.3 Proof of the decomposition lemma - Lemma 46

Claim 50 ([50, Proposition 55]).

Let and let . Then there exists matrices of of rank , whose direct sum is denoted by , such that the link graph

This is not just an abstract isomorphism. The details are as follows. First, [50] observes that given , for some admissible , the set does not depend on , only on . We denote it by .

Intuitively, the following proposition follows from the ’Hadamard-like’ construction of the Grassmann, and the intersection patterns of Hadamard code.

Proposition 51 ([50, Lemmas 59,61,62]).

Let , let , let be as above, with . For every matrix such that there exists unique matrices such that

-

1.

.

-

2.

.

-

3.

For the matrices . That is, .

-

4.

The matrix . That is, (resp. ) intersects trivially with (resp. ).

-

5.

The function is an isomorphism

We restate and reprove this proposition in Section 7. The proof our decomposition lemma is short given the one from [50]. For this (and this only) we need to slightly alter Definition 40 and Definition 45 so that we take into account two posets instead of one. Let be two posets of the same dimension .

Definition 52 (Two-poset admissible function, generalizing Definition 39).

An admissible function with respect to to be a function as in Definition 39 with the distinction that the row and column spaces of the matrix are

Definition 53 (Two-poset matrix poset, generalizing Definition 40).

We denote by the matrix poset defined in Definition 40 such that the admissible functions are with respect to and .

Definition 54 (Two-poset sparsified link graph, generalizing Definition 45).

For we denote by the graph in Definition 45, with the distinction that all sums of row spaces are required to be in and all column spaces are required to be in (instead of them all being in the same poset ).

Proof of Lemma 46.

Fix and its corresponding and . Conditioned on , the first step of the sampling distribution in Definition 40 is the same as sampling and (where ). Fixing the pair and conditioning on all three , sampling an edge in the link is the same as sampling an edge in the link of in the complex . As both and are isomorphic, 121212Recall that is the Grassmann poset containing all subspaces of .. We are back to the complete Grassmann case and are allowed to use Proposition 51. Let be the subgraph obtained when fixing and conditioning on . By Proposition 51, we can decompose this graph to a tensor product

The first components are independent of and ; they are constant among all conditionals (and are all isomorphic to ). Hence this is isomorphic to where is the graph corresponding to a subgraph of obtained by conditioning the edge-sampling process of to sampling and in the first step. Thus the graph and the lemma is proven.

3.4 Expansion of the sparsified link graph

In this section we use the decomposition lemma to reduce the analysis of the sparsified link graph to the case of the complete Grassmann and prove Lemma 48.

We use the following claim on the complete Grassmann.

Claim 55.

Let be the poset of rank matrices for a sufficiently large . Let and let be such that . Then for some universal constant .

We prove this claim in the next section.

Proof of Lemma 48.

Fix the subspace and recall the notation . We intend to use Lemma 15. The local decomposition we use is the following . For every pair we define the graph which is the subgraph obtained by conditioning on being sampled in the first step of Definition 45. The distribution is the distribution that samples independently. By definition of this is indeed a local-decomposition.

We observe that the subgraph of obtained by fixing and in the first step is just and hence by Claim 55 it is a -expander.

Observe that we only care about , and any two with , have the same neighbor set. In other words, this graph is isomorphic to the bipartite tensor product where is the containment graph between and (resp. and ), and is the complete bipartite graph with vertices on one side and a single vertex on the other side. The number is the number of matrices such that , for any pair of fixed subspaces and . By Claim 21 and the fact that is -expanding, the graph is a , where is some constant and the last inequality is because .

By Lemma 15, .

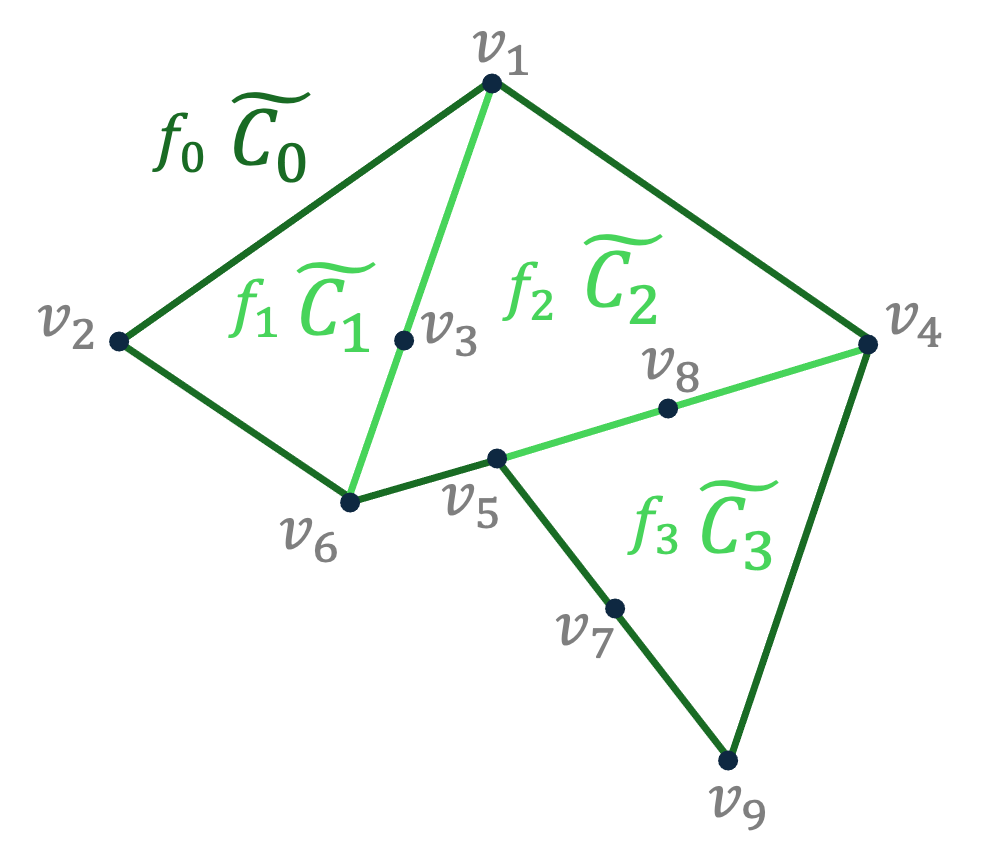

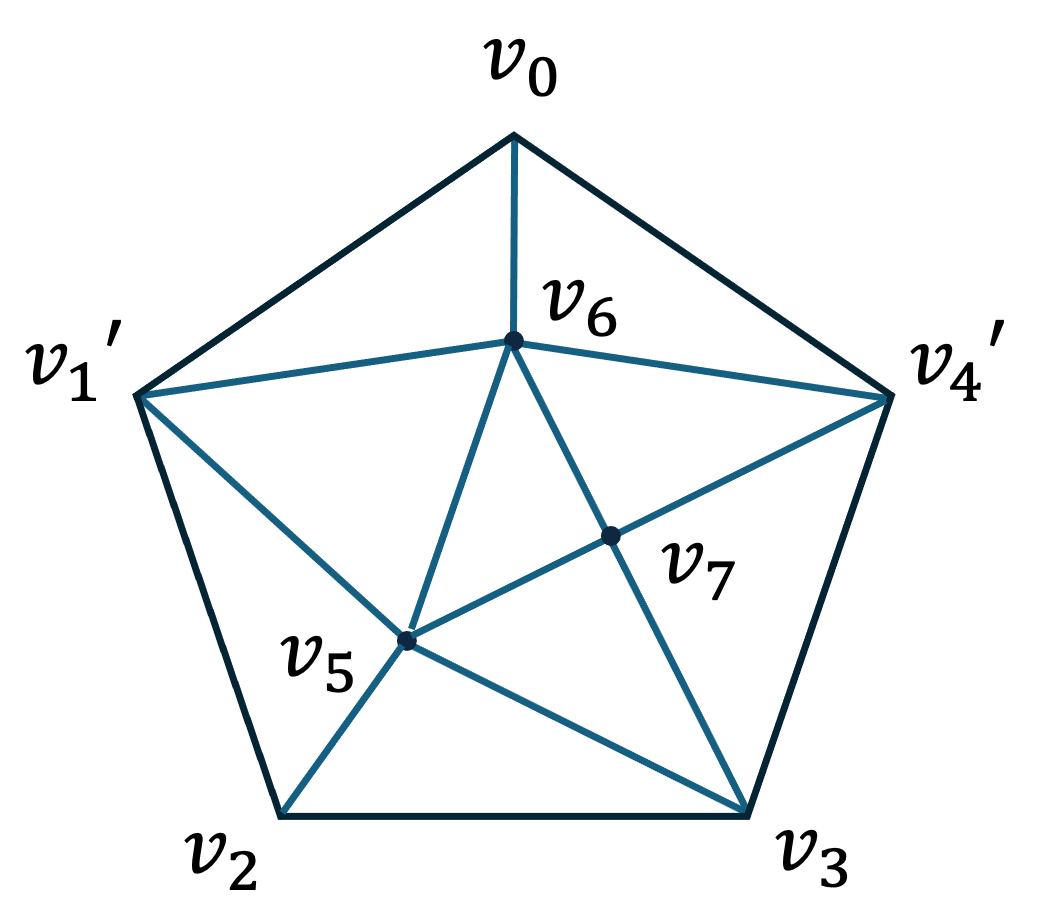

4 Johnson complexes and local spectral expansion

In this section we construct a family of simplicial complexes which we call Johnson complexes whose degree is polynomial in their size. The underlying -skeletons of Johnson complexes are abelian Cayley graphs . We prove in this section that these complexes are local spectral expanders and in particular the links of all faces except for the trivial face have spectral expansion strictly smaller than . We then compare the Johnson complexes with the -dimensional local spectral expanders constructed from random geometric graphs [74] and show that the former complexes can be viewed as a derandomization of the latter random complexes. On the way we also define Johnson Grassmann posets , analogous to the matrix posets given in Section 3.1, and prove in Section 4.4 that Johnson complexes are exactly , which are Cayley complexes whose vertex links are basifications of s (Definition 36).

Remark 56.

Later in Section 7, we shall define the induced Grassmann posets which generalize both the Johnson Grassmann posets and the matrix Grassmann posets, and we shall provide a unified approach to show local spectral expansion for induced Grassmann posets. However in this section, we will present a more direct proof of local expansion that is specific to Johnson complexes.

We name our construction Johnson complexes due to their connection to Johnson graphs. In particular, all the vertex links in a Johnson complex are generalization of Johnson graphs to simplicial complexes. So we start by giving the definition of Johnson graphs and then provide the construction of Johnson complexes.

Definition 57 (Johnson graph).

Let be integers. The adjacency matrix Johnson graph is the (uniformly weighted) graph whose vertices are all sets of size inside and two sets are connected if .

Next we define Johnson complexes. We shall use to denote the Hamming weight of a binary string .

Definition 58 (Johnson complex ).

Let and be any integer. Let and let be such that . Let (abbreviate as when is clear from context). The -weight Johnson complex is the -dimensional simplicial complex defined as follows.

For the rest of this work, we fix as above.

It turns out that the weight constraints on -faces in a Johnson complex gives rise to a Hadamard-like intersection pattern for elements in the link faces. Any -face in the link of can be written as . If we identify each with the set of nonzero coordinates in it, these sets always satisfy that any two distinct sets intersect at exactly coordinates, and in general any distinct s intersect at coordinates. We note that this is similar to the intersection pattern of distinct codewords in a Hadamard code.

The main result of this section is proving that all links of this complex are good expanders.

Lemma 59 (Local spectral expansion of Johnson complexes).

For any , the link graph of any -face in the -dimensional Johnson complex is a two-sided -spectral expander. In particular, is a -dimensional two-sided -local spectral expander.

The key observation (Proposition 62) towards showing this lemma is that the link graphs of -faces are the tensor product of Johnson graphs.

Once this decomposition lemma is proven, we can use the second eigenvalue bounds for Johnson graphs to show that the links are -spectral expanders. We note that this construction breaks the link expansion barrier of known elementary constructions of local spectral HDXs (discussed in the introduction). Because our construction breaks this barrier, using the Oppenheim’s trickle down theorem [87], we directly get that the -skeleton of the Johnson complex is also a -spectral expander.

We now outline the rest of this section. First, we prove the link decomposition lemma Proposition 62. Then using this lemma we show that Johnson complexes are basifications of Johnson Grassmann posets. Afterwards, we apply this lemma to prove the main result. Finally, we conclude this section by comparing the density parameters of the Johnson complexes with those of spectrally expanding random geometric complexes.

4.1 Link decomposition

In this part we prove the link decomposition result. Note that by construction the links of all vertices are isomorphic. So it suffices to focus on the link of (denoted by ) to show local spectral expansion for . The link decomposition is a consequence of the Hadmard-like intersection patterns of the faces in as explained earlier.

For the rest of the section, we use to denote the set of nonzero coordinates in a vector and use to denote . We now state the face intersection pattern lemma.

Lemma 60.

Let be an integer. Given a set and a subset , define

Then if and only if for any subset ,

Proof.

We first verify that every set satisfying the cardinality constraints above is in . Recall that by definition if and only if they satisfy the weight equations

Observe that the set of nonzero coordinates in are precisely that are nonzero in odd number of vectors in

and that by definition the set is a partition of . Therefore the weights can be rewritten as

| (4.1) |

Furthermore, for any nonempty subset there are nonempty sets whose intersection with has odd cardinality: let , then the number of ’s subsets of odd cardinality is

So the total number of such is . Therefore if when , then . Thus under this condition .