A k-mer-Based Estimator of the Substitution Rate Between Repetitive Sequences

Abstract

K-mer-based analysis of genomic data is ubiquitous, but the presence of repetitive k-mers continues to pose problems for the accuracy of many methods. For example, the Mash tool (Ondov et al. 2016) can accurately estimate the substitution rate between two low-repetitive sequences from their k-mer sketches; however, it is inaccurate on repetitive sequences such as the centromere of a human chromosome. Follow-up work by Blanca et al. (2021) has attempted to model how mutations affect k-mer sets based on strong assumptions that the sequence is non-repetitive and that mutations do not create spurious k-mer matches. However, the theoretical foundations for extending an estimator like Mash to work in the presence of repeat sequences have been lacking.

In this work, we relax the non-repetitive assumption and propose a novel estimator for the mutation rate. We derive theoretical bounds on our estimator’s bias. Our experiments show that it remains accurate for repetitive genomic sequences, such as the alpha satellite higher order repeats in centromeres. We demonstrate our estimator’s robustness across diverse datasets and various ranges of the substitution rate and k-mer size. Finally, we show how sketching can be used to avoid dealing with large k-mer sets while retaining accuracy. Our software is available at https://github.com/medvedevgroup/Repeat-Aware_Substitution_Rate_Estimator.

Keywords and phrases:

k-mers, sketching, mutation ratesCopyright and License:

2012 ACM Subject Classification:

Applied computing Bioinformatics ; Applied computing Computational biologyAcknowledgements:

We thank Amatur Rahman for initial work on the project and Qunhua Li and David Koslicki for helpful discussions. We thank Mahmudur Rahman for the helpful discussion about hash functions. We thank Bob Harris for the idea of using dynamic programming to compute the probability of the destruction of all k-spans.Funding:

This material is based upon work supported by the National Science Foundation under Grants No. DBI2138585 and OAC1931531. Research reported in this publication was supported by the National Institute Of General Medical Sciences of the National Institutes of Health under Award Number R01GM146462. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.Supplementary Material:

Software (Source Code): https://github.com/medvedevgroup/Repeat-Aware_Substitution_Rate_Estimator [32]archived at

swh:1:dir:258c949c42d162c56f1e09a0ece39722a5076601

swh:1:dir:258c949c42d162c56f1e09a0ece39722a5076601

Editors:

Broňa Brejová and Rob PatroSeries and Publisher:

Leibniz International Proceedings in Informatics, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

Leibniz International Proceedings in Informatics, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

1 Introduction

-mer-based analysis of genomic data is ubiquitous. e.g. in genome assembly [1], error correction [2], read mapping [13], variant calling [29], genotyping [30, 7], database search [14, 9], metagenomic sequence comparison [26], and alignment-free sequence comparison [28, 20, 24]. One of the major challenges is the presence of repetitive -mers, which adversely affects the practical performance as well as the theoretical analysis of downstream algorithms. One example is that heuristic read aligners like minimap2 [15] and even more rigorous ones like Eskemap [25] filter out highly repetitive -mers in order to avoid explosive run times. Another example is the recent paper [27] that proved that sequence alignment can on average be done in almost time but could not account for sequences with a high number of repeats.

One of the major advantage of -mer-based methods is that they lend themselves more easily to sketching [16, 22], which is important for scaling to large-scale data. The groundbreaking Mash paper [20] was able to estimate the mutation rate between two genomes fast enough to be able to construct a phylogeny of 17 primate species in a tiny fraction of the time it would take an alignment-based method. Their approach uses an estimator based on the Jaccard similarity between the -mer sketches of two sequences. However, the derivation behind their estimator assumes that the genomes have no repeats, making it inaccurate in highly repetitive regions. Other methods for estimating mutation rates are not designed for and/or not tested on highly repetitive sequences [34, 10, 18, 23].

In this paper we tackle the challenge of accounting for repeats when estimating the mutation rate. We assume that a string is generated from a string through a simple substitution process [5], where every nucleotide of mutates with a fixed probability . Given the number of shared -mers between and and the -mer abundance histogram of , we define our estimator as the solution to a polynomial equation, which can be solved using Newton’s method. We give a theorem to bound its bias, in terms of properties of (Theorem 3). Our estimator is designed to capture the most salient properties of the repeat structure of the genome, with the rest of the information being captured in the bias bounds. As a result, a user can decide a priori whether to trust our estimator, based on the quality of the bias bounds and on another heuristic we provide (Theorem 4).

We evaluate our estimator empirically on various sequences, including the alpha satellite centromeric region of human chr21 and the highly repetitive human RBMY gene. For such repetitive sequences, our estimator remains highly accurate, while the repeat-oblivious estimator of the kind used by Mash is unreliable. We make a comprehensive evaluation of across the spectrum of and values, which can guide a user towards choosing a value for their analysis. We also show that our estimator can be used on top of a FracMinHash sketch, without systematically effecting the bias. Our software is available on GitHub [32].

2 Preliminaries

Let be a string and let be a parameter indicating the -mer size. We will index string positions from 1. We further assume in this paper that is at least nucleotides long. We use to denote the number of nucleotides in the string minus , or, equivalently, the number of -mers in . For , let be the -mer starting at position of . Let be the set of all distinct -mers in , also called the -spectrum of . We let be the size of , i.e. the number of distinct -mers in . Given a -mer , we will use the shorthand to mean . Given two strings and , we define as the number of -mers shared between them. We will usually use the shorthand of for . Given two -mers and , we use to denote their Hamming distance.

Let be a set of -mers and let be a string. We let denote the number of positions in such that . When consists of a single element , we simply write . A set of positions is said to be a set of occurrences of if for all , we have . A set of occurrences is said to be non-overlapping if, for all distinct , . We let be the maximum size of a set of non-overlapping occurrences of , also referred to as the separated occurrence count. Observe that . The abundance histogram of a string is the sequence where is the number of -mers in that occur times in . Note that .

We will consider the following random substitution process, parameterized by a rate . Given a string , it generates an equal-length string where, independently, the character at each position is unchanged from with probability and changed to one of the three other nucleotides with probability .

3 Problem overview and proposed solution

In this paper, we address the following problem. Let be a substitution rate. Let be a string and let be generated from using the substitution process parametrized by . Let be the observed spectrum intersection size. Given and the abundance histogram of , the problem is to estimate the mutation rate .

The bias of an estimator for is defined as . A good estimator should have a small absolute bias, one that is within the error tolerance of downstream applications. For our problem, directly finding an estimator for with provably small bias turned out to be technically challenging. Instead, we provide an estimator for , which corresponds to the probability that a -mer occurrence contains at least one substitution. There is a natural one-to-one correspondence between an estimator of and an estimator of via the equation . Thus, an alternative to bounding the bias of is to bound that of ; i.e., bound . While the difference between the two approaches may intuitively seem minor, bounding the bias of turned out to be more technically feasible.

The only previously known estimator for this problem is what we refer to as the repeat-oblivious estimator111 What we describe is based on the estimators used in [8, 20, 12], but with two important differences. The first is that we use the modification adopted in the follow up work of [24] and described in Appendix A.6 of [3]. The second is that the original estimator was calculated from the Jaccard similarity between two sequences; however, under our substitution process model, we can state more simply in terms of the spectrum intersection size. and denote by . The derivation of this estimator assumes that 1) has no repeats and 2) if a -mer mutates, it never mutates to anything that is already in . The estimator is then derived using a simple two step technique, called the method of moments [6]. The first step is to derive in terms of . Under the assumptions (1) and (2), . The second step is to take the observed value , plug it in place of , and solve for . In this case, one solves the equation and gets the estimator and the corresponding estimator . The estimator is an unbiased estimator of when (1) and (2) hold, but the bias for general sequences is not known. We are also not aware of any results about the bias of , even under assumptions (1) and (2). As we will show in Section 6, the repeat-oblivious estimator has a large empirical bias when the assumptions are substantially violated.

On the one hand, the repeat-oblivious estimator does not at all account for the repeat structure of . On the other hand, an estimator that would fully account for it seems to be challenging to derive, analyze, and compute. Moreover, such an estimator would likely be superfluous for real data. Instead, our approach is intended to achieve a middle ground between accuracy and complexity by accounting for the most essential part of the repeat structure in the estimator and expressing the non-captured structure in the bias formula. We will show that under assumption (2) and the assumption that all -mer occurrences are non-overlapping in ,

| (1) |

Following the method of moments approach, we define our estimator as the unique solution (the uniqueness is shown in Lemma A.2) to this equation when plugging in in place of . Though we are not able to analytically solve for , we can find the solution numerically using Newton’s Method.

Note that the assumptions we make are not necessary to compute and only represent the ideal condition for our estimator. Our theoretical and experimental results will quantify more precisely how the deviation from our assumptions is reflected in the bias.

4 Estimator bias

Recall that we define and, as mentioned earlier, we will prove the theoretical results on the bias of , rather than . First, we need to derive the expectation and variance of the intersection size. A closed-form expression for even the expectation is elusive, so we will instead use an approximation and derive bounds on the error. The idea behind our bounds is that the error becomes small on the types of sequences that occur in biological data.

We want to underscore that when we make probabilistic statements, it is with regard to the substitution process and not with regard to . We do not make any assumptions about , and, in particular, we are not considering the situation where itself is generated randomly.

First, it is useful to express as a sum of indicator random variables. Let us define as event that and as the event that at least one position in contains . By linearity of expectation, we have

Let . Relying on the approximation (i.e. Equation 1), we define as the solution to , or, equivalently, . We show that this approximation holds when we assume that 1) when (see footnote222 We note that this assumption is not theoretically precise, because forbidding a -mer at position from mutating to usually implies that there is at least one that the -mer at position can no longer mutate to. Because of these dependencies, there are downstream effects on the probability space that are complex to track. A theoretically robust alternative was given in [5] via the -span formulation of the problem. It could be used to formalize the assumption here, however, in this paper, we only use the assumption to give intuition for the estimator and do not use it in any formal theorem statements or proofs. ) and 2) all occurrences of are non-overlapping in :

| (because of (1)) | ||||

| (because of (2)) | ||||

The underlying philosophy for our estimator is that while these assumptions are not perfectly satisfied on real data, in most cases the contribution due to violations of these assumptions is small. To make this mathematically precise, we will bound the difference between and in terms of an expression that can be calculated for any .

Theorem 1.

We have that , where

The difference between and (i.e. ) is close to 0 when the number of -mers with overlapping occurrences is close to 0. On the other hand, the difference between and (i.e. ) is never zero (except in corner cases). However, the largest terms contributing to this difference are due to pairs of non-identical -mers that have a small Hamming distance to each other. Thus, the difference becomes small when the number of “near-repeats” is small.

Next, we upper bound the variance.

Theorem 2.

We have that

where

Theorem 2 gives an upper bound on in two forms. The first one is more precise because it is a function of . However, since we are not able to compute exactly, the second form allows us to plug in the upper bound on from Theorem 1.

Given the bounds on and , we are now able to bound the bias of .

Theorem 3.

Let be a sequence with at least one -mer that occurs exactly once. The bias of is where is bounded as

and where and

The derivatives of have straightforward closed-form expressions, since is a polynomial in . We do not have a closed-form solution for , but it can be evaluated numerically using Newton’s method. Thus, for any given sequence , we can precompute the bounds of our estimator bias for any value of . Due to space limitations, we do not further elaborate on the algorithm to compute the bounds in Theorem 3 or on its runtime analysis.

When the observed intersection is empty, there is a loss of signal and it becomes challenging for any intersection-based estimator to differentiate the true substitution rate from 100%. The following theorem gives an upper bound on the probability that the intersection is empty, as a function of , and . In Section 6, we will show how it can be used to make a conservative decision that the computed estimate is unreliable.

Theorem 4.

Let be a string of length at least . The probability that every interval of length in has at least one substitution can be computed in time with a dynamic programming algorithm that takes as input only , , (not ).

5 Proofs

This section contains the proofs of our theoretical results. In particular, we will prove Theorems 1, 2, and 3 from the previous section. The proof of Theorem 4 is left for the Appendix. We start by proving a couple of preliminary facts that will be used in the proofs of these theorems. First, we consider the probability of the event , which is straightforward to derive.

Lemma 5.

For all , .

Proof.

In order for to be equal to after the mutation process, exactly positions must remain unmutated (which happens with probability ) and exactly positions must mutate to the needed nucleotide (which happens with probability ).

Next, we will bound the probability that all the occurrences of a -mer become mutated; i.e. a -mer does not survive the mutation process.

Lemma 6.

Let be a -mer with occurrence locations denoted by . For all ,

-

1.

, and

-

2.

.

Proof.

We drop from the notation since it remains constant throughout the proof. We first prove the first statement of the lemma. Let us consider the intervals associated with and , denoted by and , respectively. If these intervals are disjoint, then we are done. Otherwise, the union of these intervals can be partitioned into three regions: 1) the part of the interval of that does not intersect with the interval of , 2) the intersection of the two intervals, and 3) the part of the interval of that does not intersect with the interval of . We denote the lengths of these intervals as , , and , respectively, and we denote the event that no mutation occurs in the intervals as , , and , respectively. Let , i.e. we need to calculate . First, we reduce the calculation to as follows:

| (2) |

Next, to calculate , we proceed by conditioning on :

First note that

and so . To bound , consider all the intervals , for , that intersect with ’s interval. Formally, let . Note that all intervals indexed by necessarily contain ’s interval. Therefore, the event implies . We can now write

Therefore, and plugging this bound into Equation 2, we get the first statement of the lemma.

To prove the second statement of the lemma, we apply the chain rule together with the first statement:

We can now prove Theorem 1:

Proof of Theorem 1.

It suffices to prove that for every k-mer , it holds that . For the lower bound, let be a non-overlapping set of occurrences of of size . Then we have

| (3) |

where we use the independence of the events when they are non-overlapping. For the upper bound, let and let . Then, by Lemma 6,

To bound observe that , and by Lemma 5:

Moreover, by Equation 3, and the result follows.

The proof of the variance bound is more straightforward:

Proof of Theorem 2.

Since is a sum of indicator random variables (i.e. ), we can write the variance as

for completeness we include a proof of this fact in the appendix (Lemma A.1).

Consider some and let be a non-overlapping set of occurrences of . Let be the positions where occurs and let be the positions where occurs. Then,

This gives the first form of the upper bound on the variance. The upper bound is derived from the fact that is monotonically increasing on and decreasing on . Therefore, the maximum of is achieved at .

Proof of Theorem 3.

In Lemma A.2 in the Appendix, we show that is well-defined. We will only consider on the interval . Throughout the proof, we will rely on the facts that 1) on the interval , , , ; 2) for , and ; 3) the first three derivatives of can be expressed in terms of and the derivatives of . These properties follow by basic calculus and are stated formally in Lemma A.2. Recall that . To get the upper bound, we use the fact that is decreasing and concave. We apply Jensen’s inequality followed by Theorem 1 to get that .

For the lower bound, since we cannot analytically derive , we derive a reverse Jensen inequality using the Taylor expansion of around . Specifically, using the Lagrange Remainder, we know that there exists some between and such that

Since we are interested in the expected value of , we take expectations on both sides:

We will bound the terms separately by writing with , , , and . For the first term, we use the fact that is decreasing and apply Theorem 1 to get that . For the second term , we first plug in the second derivative of and then apply monotonicity properties together with Theorem 1 to get

For , we use the fact that , which implies that , and thus

For ,

6 Experimental results

In this section, we evaluate the empirical accuracy and robustness of our estimator, used by itself or in combination with sketching.

6.1 Datasets

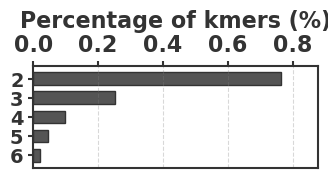

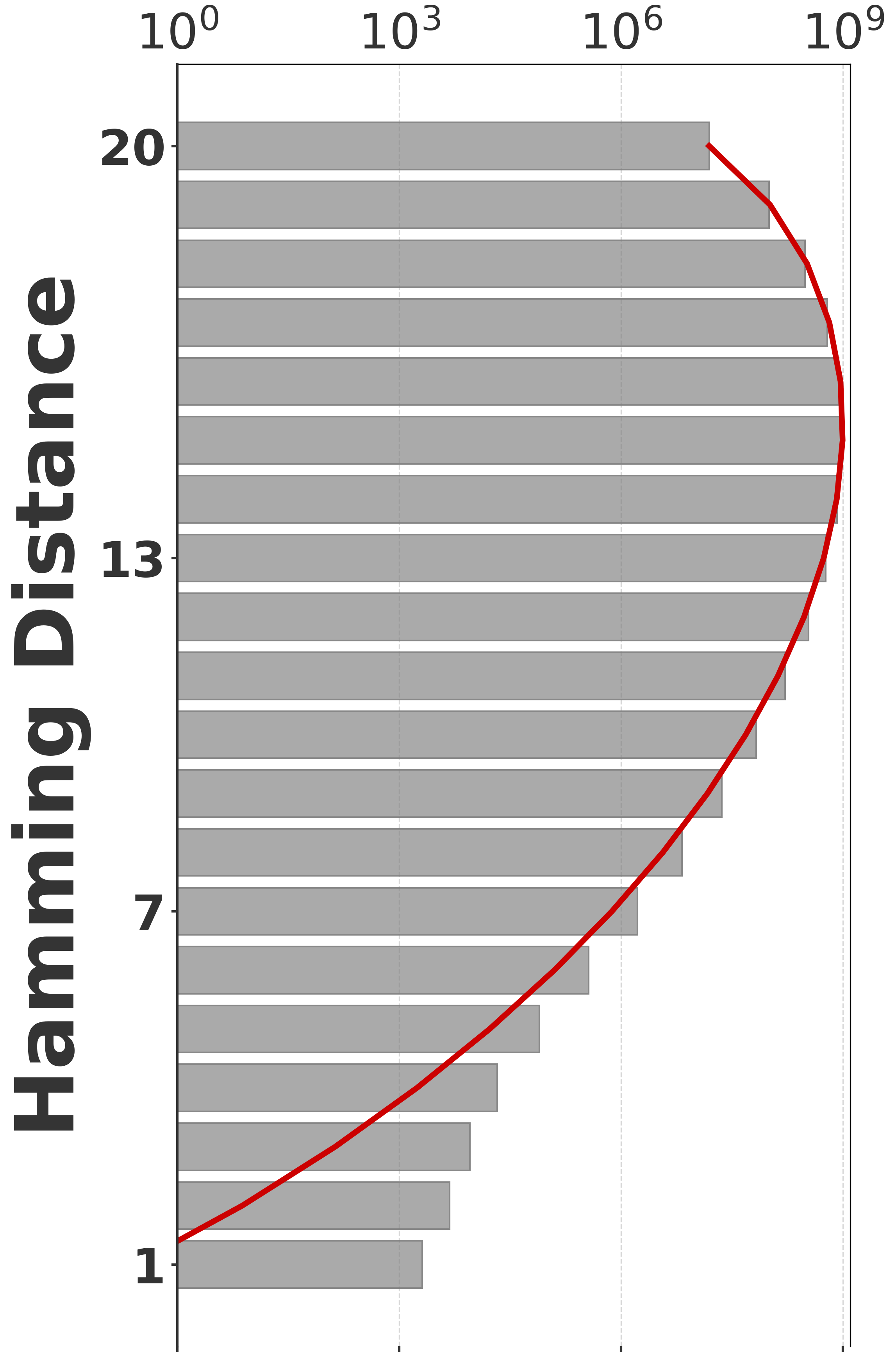

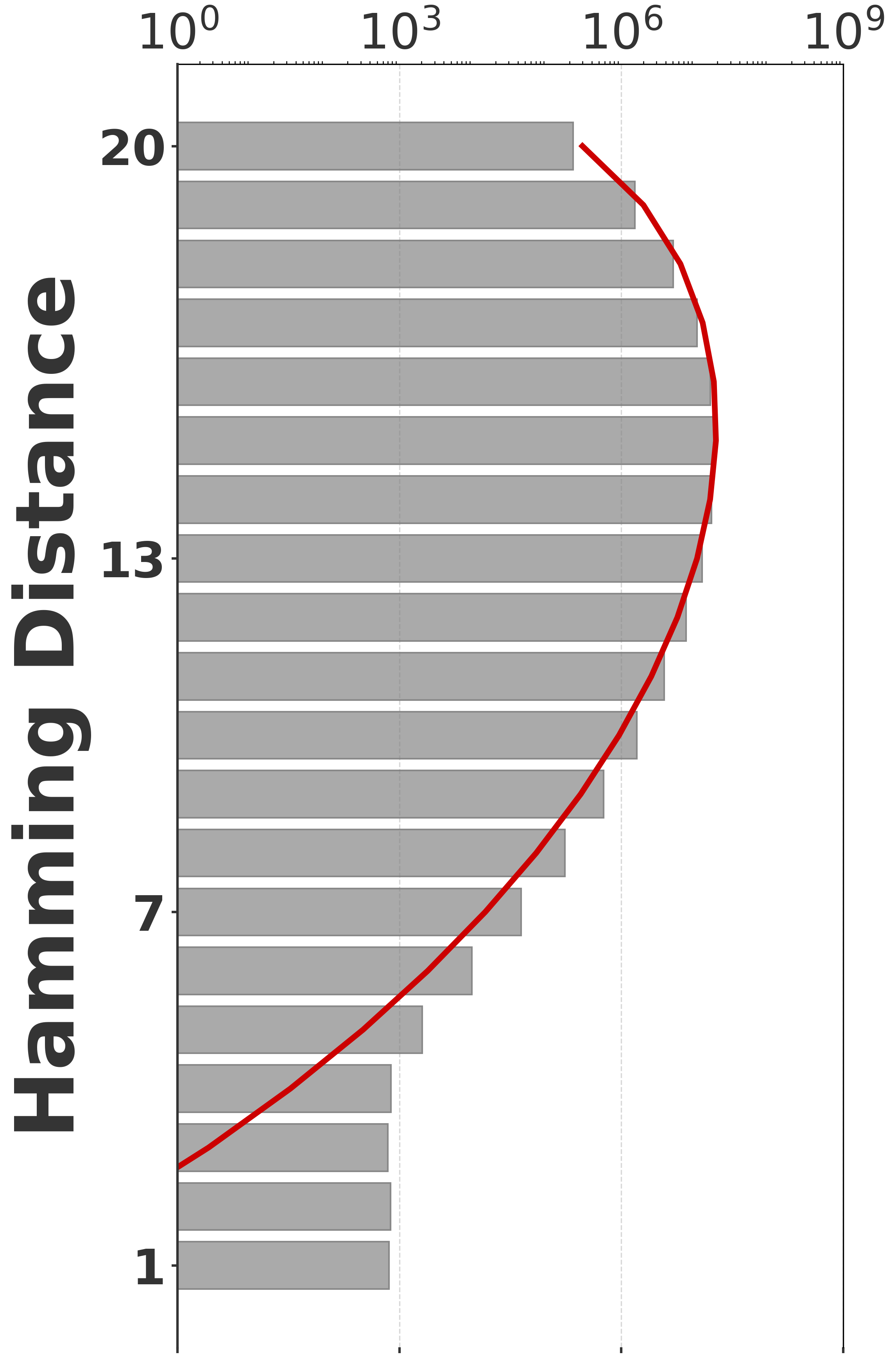

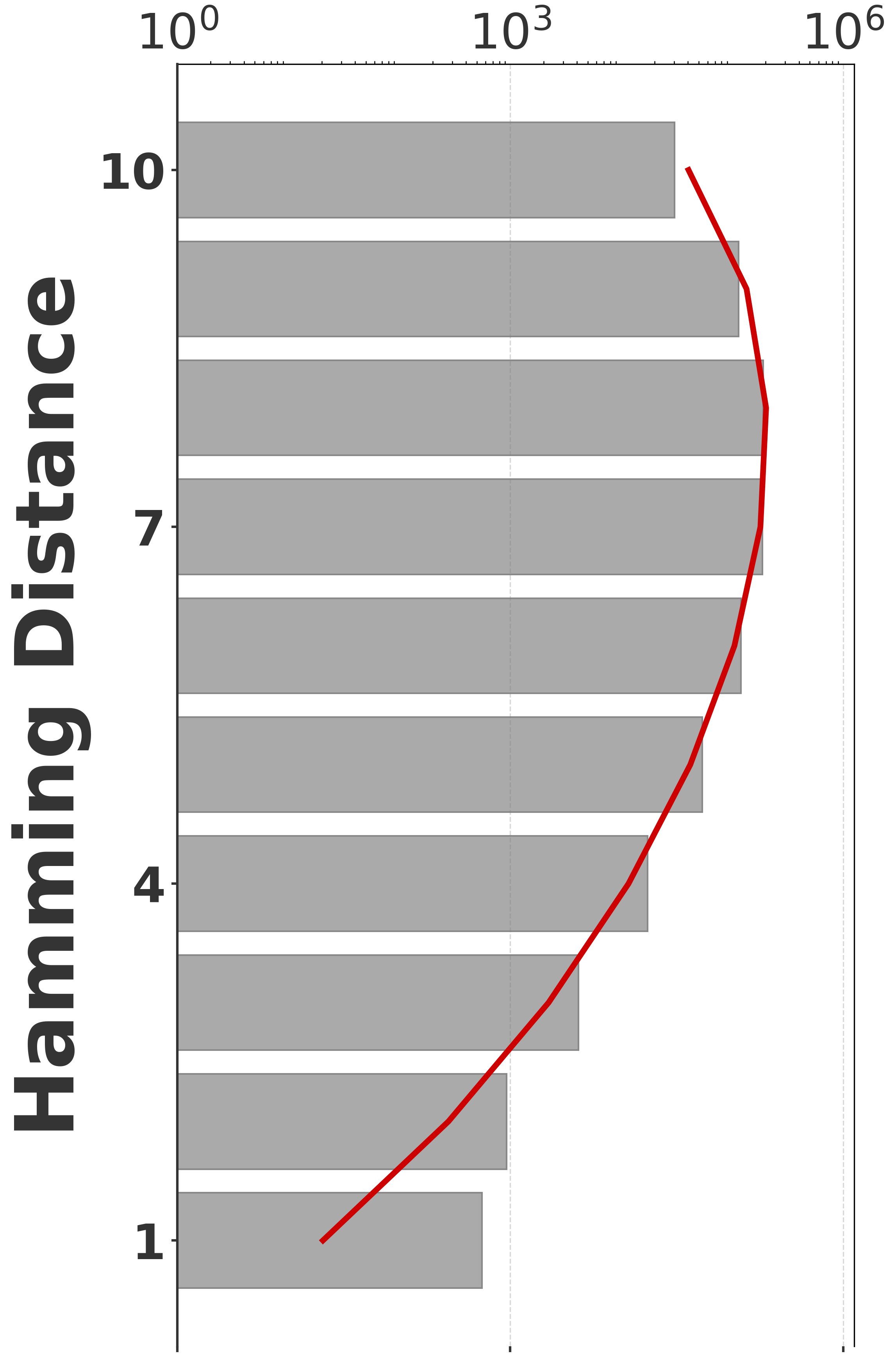

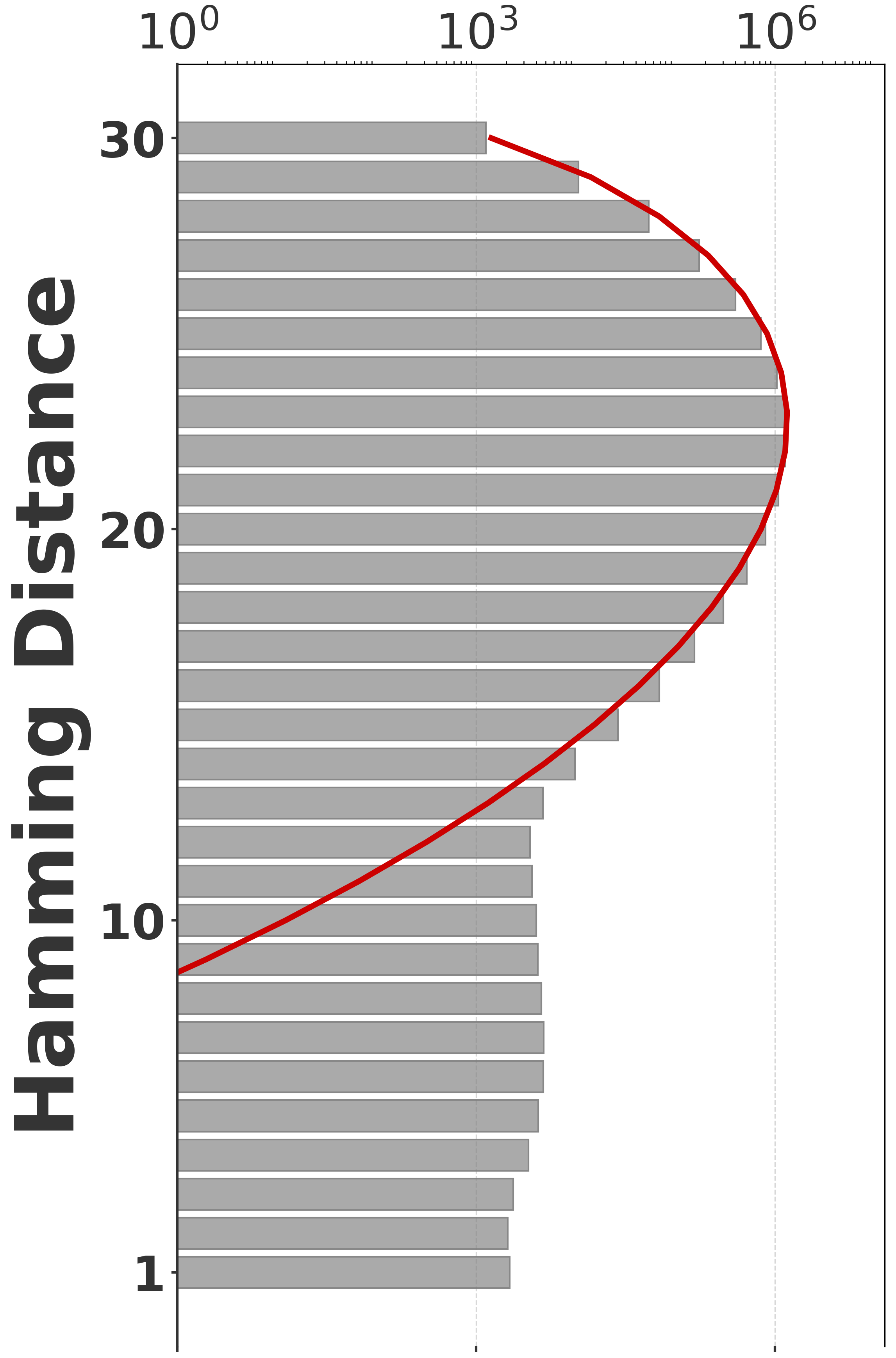

To evaluate our estimator, we use four sequences to capture various degrees of repetitiveness. The sequences are extracted from the human T2T-CHM13v2.0 reference [19]. The sequences and their coordinates are available at our reproducibility GitHub page [33]. Table 1 shows properties of these sequences and Figure 1 shows their cumulative abundance histograms.

-

1.

D-easy: This is an arbitrarily chosen substring from chr6, which had no unusual repeat annotations. We set for this sequence, which is similar to what was used in the Mash paper [20]. Less than 1% of the -mers are non-singletons.

-

2.

D-med: This is the sequence of RBMY1A1, a chrY gene that is composed of ALUs, SINEs, LINEs, simple repeats, and other repeat elements [Fig 2C in [21]]. We also use for this sequence. Approximately 3% of -mers are non-singletons.

-

3.

D-hard: This is a subsequence of RBMY1A that is annotated as a simple repeat by Tandem Repeats Finder [4], containing 4.2 similar copies of a repeat unit of length 545nt. We use , which is large enough to avoid spurious repeats in this short sequence but small enough to capture its repetitive structure. More than 40% of the -mers are non-singletons.

-

4.

D-hardest: This is a subsequence (100k long) of a region that is annotated as ‘Active Sat HOR’ in the chr21 centromere. The location of the subsequence within the region is arbitrary. Alpha satellite (Sat) DNA consists of 171-bp monomers arranged into higher-order repeats, and is notoriously difficult to assemble or map to [17]. We use for this sequence, as a user dealing with such a sequence is likely to choose a higher value. Over 70% of the -mers are non-singletons.

| Name | Default | N. -mers | N. distinct | N. of overlapping | Biological |

|---|---|---|---|---|---|

| () | -mers () | -mers | significance | ||

| D-easy | 20 | 100,000 | 98,786 | 15 | arbitrary region |

| D-med | 20 | 14,400 | 13,727 | 2 | RBMYA1 gene |

| D-hard | 10 | 2,264 | 1,199 | 0 | simple repeat |

| D-hardest | 30 | 100,000 | 3,987 | 0 | centromere |

Number of -mer Pairs (log scale)

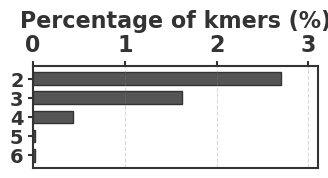

Before proceeding with experiments, we assess the validity of the two approximations made in the derivation of our estimator. The first approximation is ignoring the dependency between overlapping occurrences of a -mer. The -mers where this happens, i.e. -mers where , contribute to inaccuracy. As shown in Table 1, this is exceedingly rare. The second approximation is ignoring the possibility that a -mer mutates to another -mer in the spectrum. -mer pairs in that have a low Hamming distance will contribute to the bias. Figure 2 shows the distribution of all-vs-all pairwise -mer Hamming distances. The D-hard and D-hardest datasets indeed have a large amount of “near-repeat” -mers, which should make these datasets challenging for our estimator.

6.2 Comparison of our estimator to the repeat-oblivious estimator

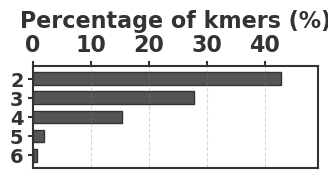

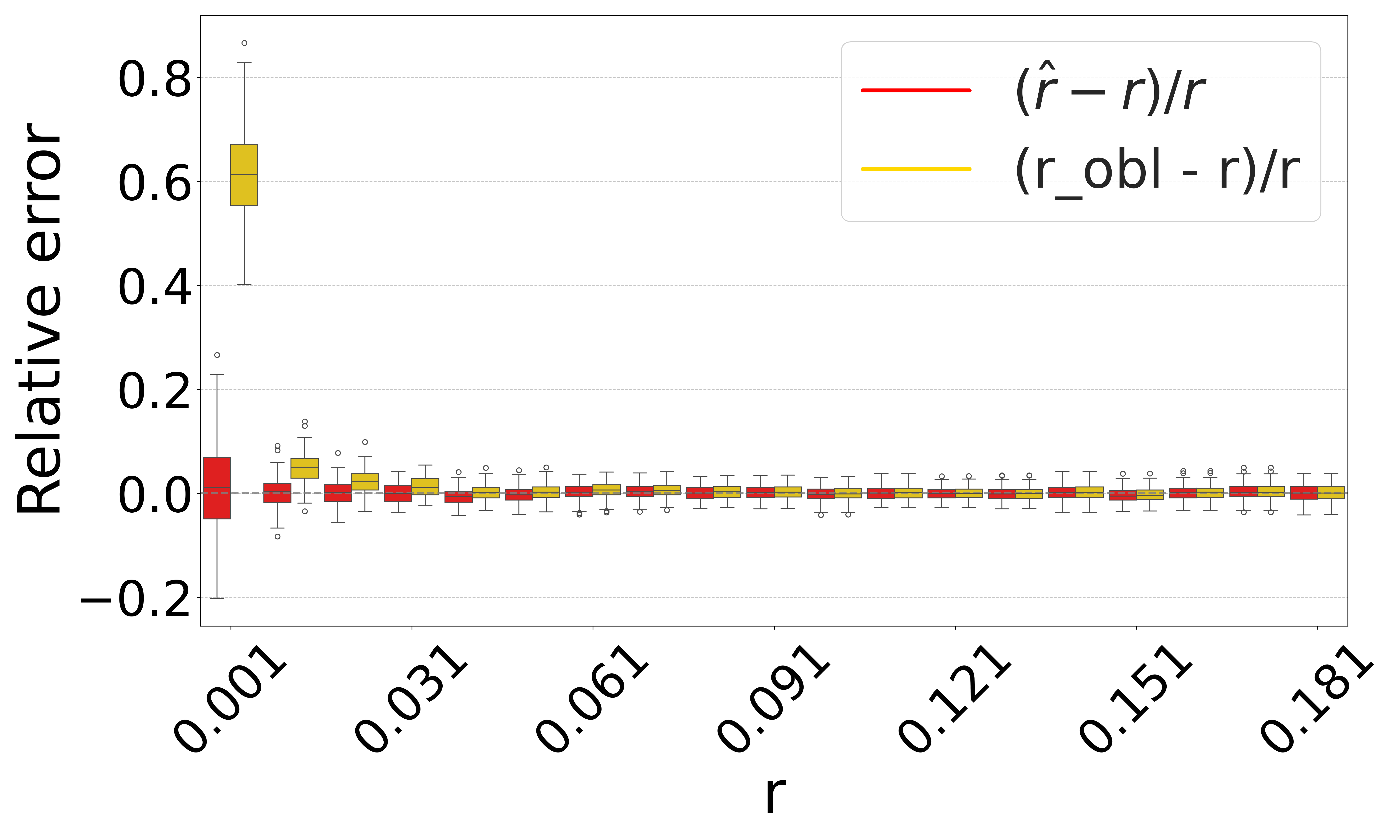

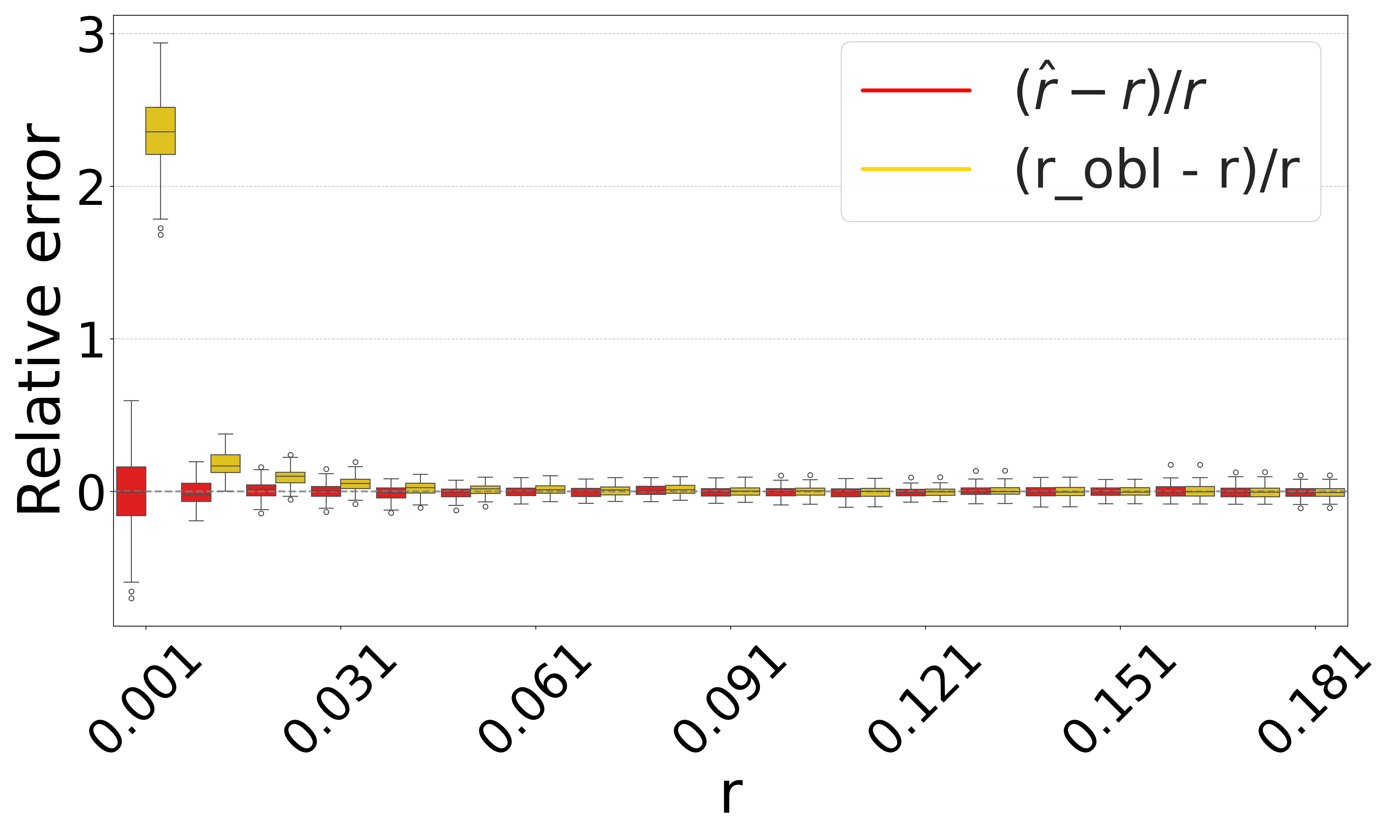

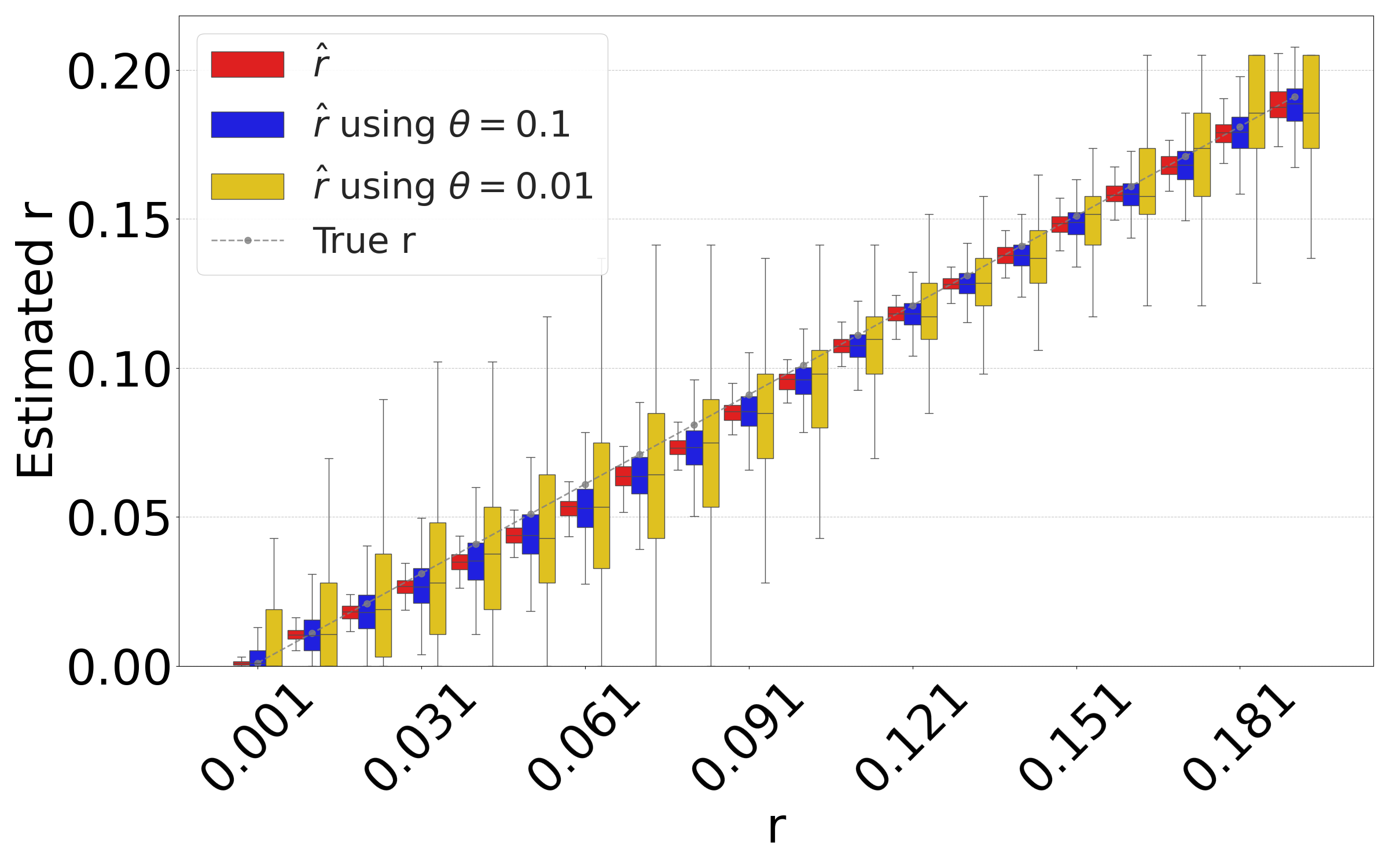

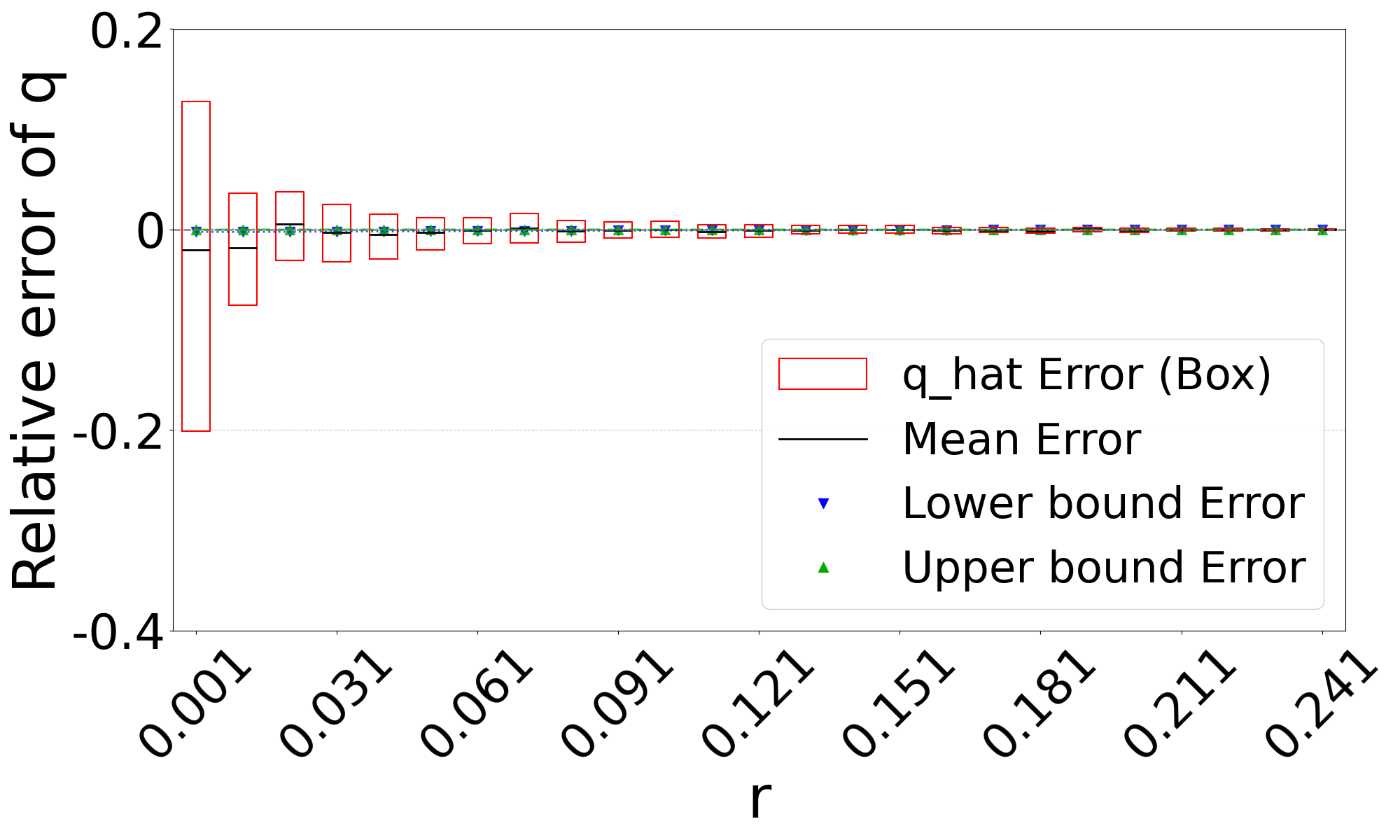

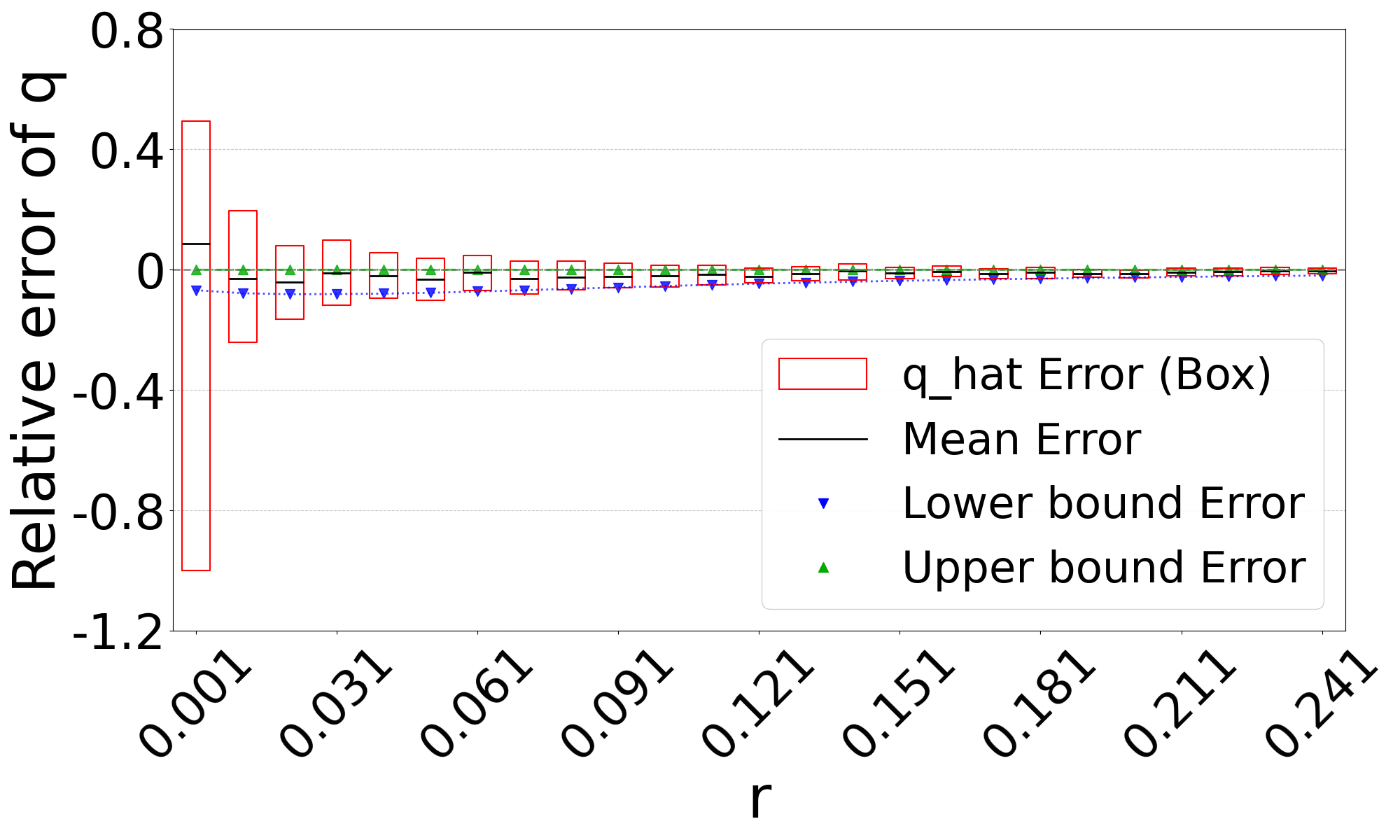

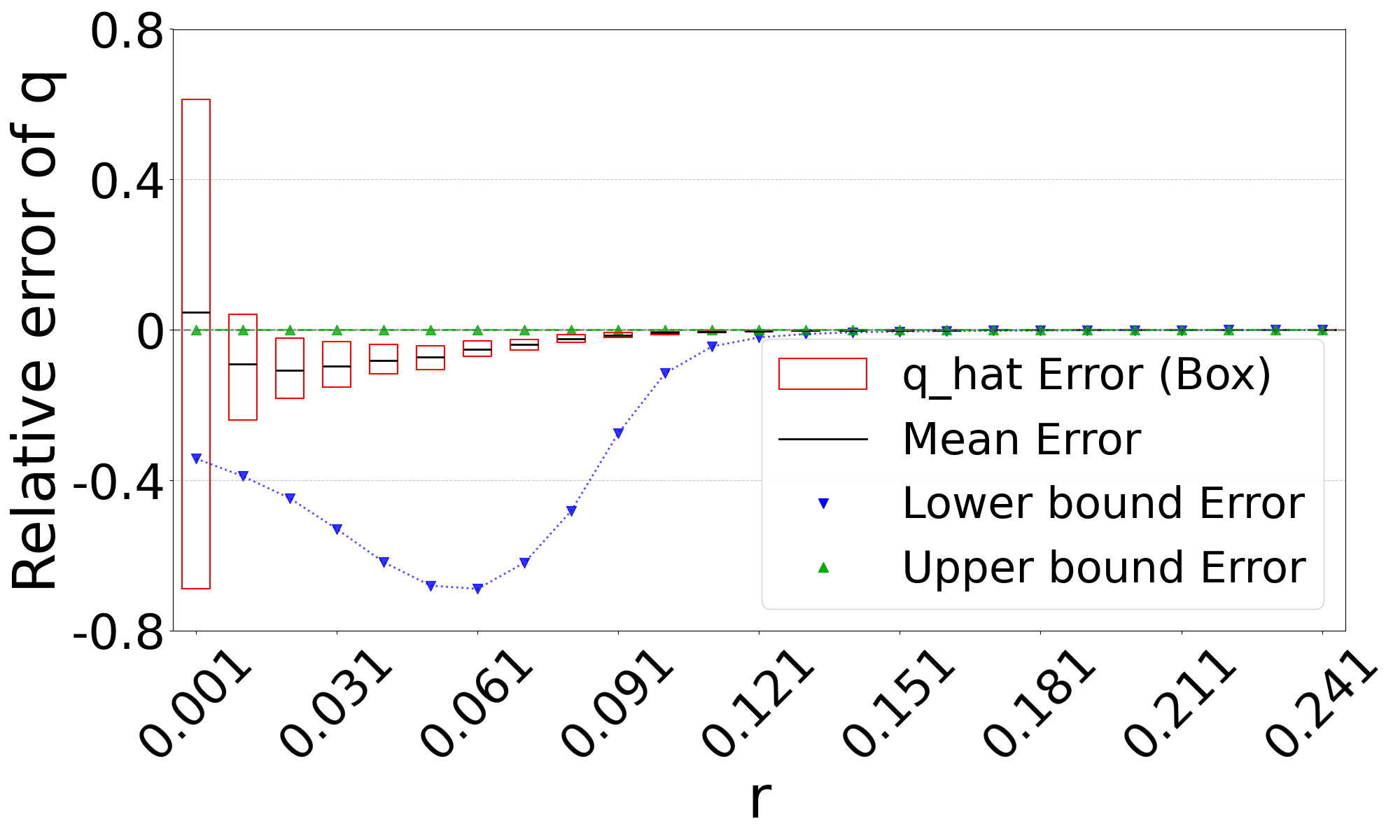

Figure 3 shows the performance on a range of substitution rates, . For D-hard and D-hardest, our estimator has a high accuracy (within a few percent of the true value), in the range of around . The estimator, on the other hand, has a much smaller reliability range, e.g. in D-hardest. For example, when the substitution rate is , the average of is , while the average of is . For , the observed intersection size was frequently 0; both estimators estimate at this point, making them unstable. For D-easy and D-med, the performance of is nearly as good as our estimator, except at very low values of (e.g. has a 230% relative error at on D-med).

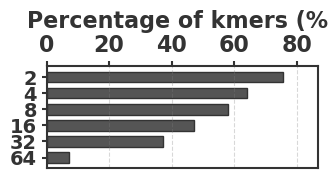

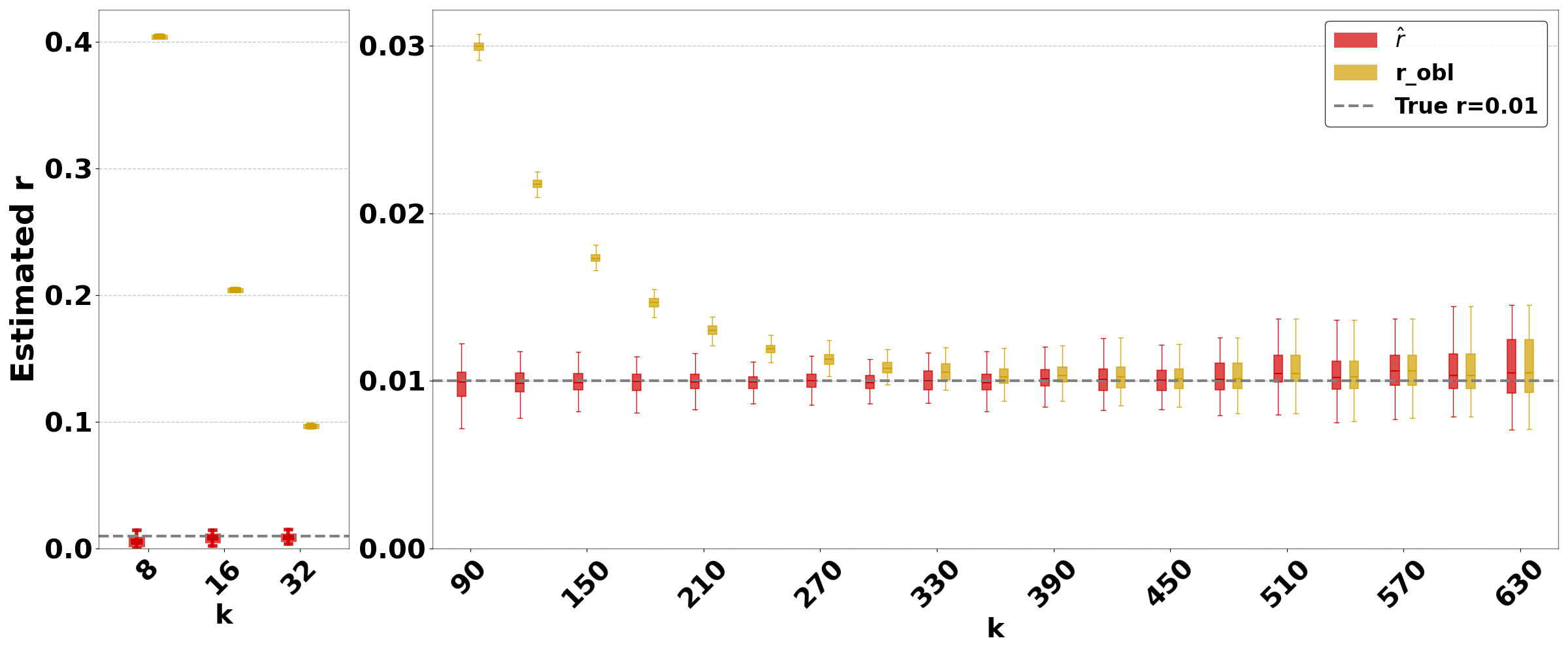

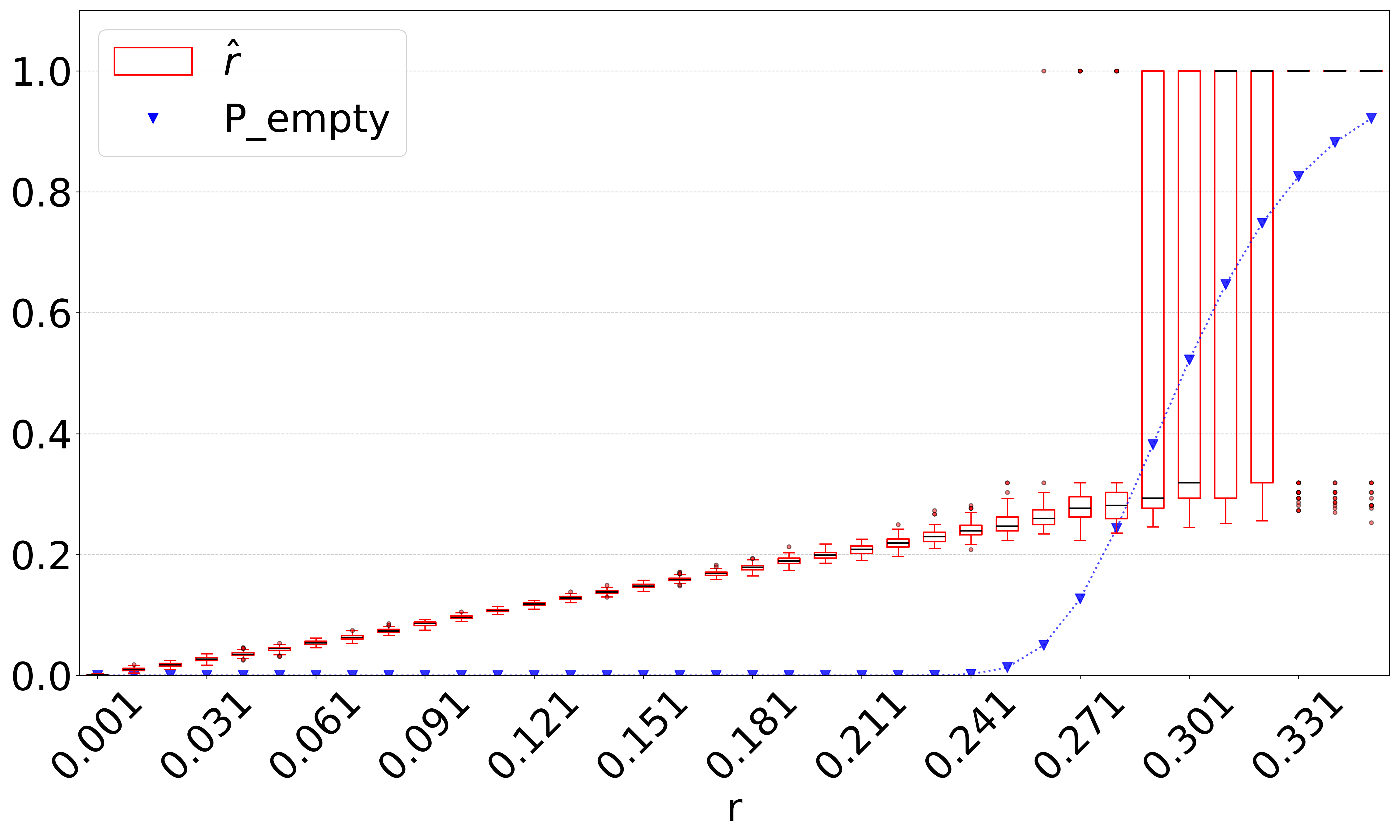

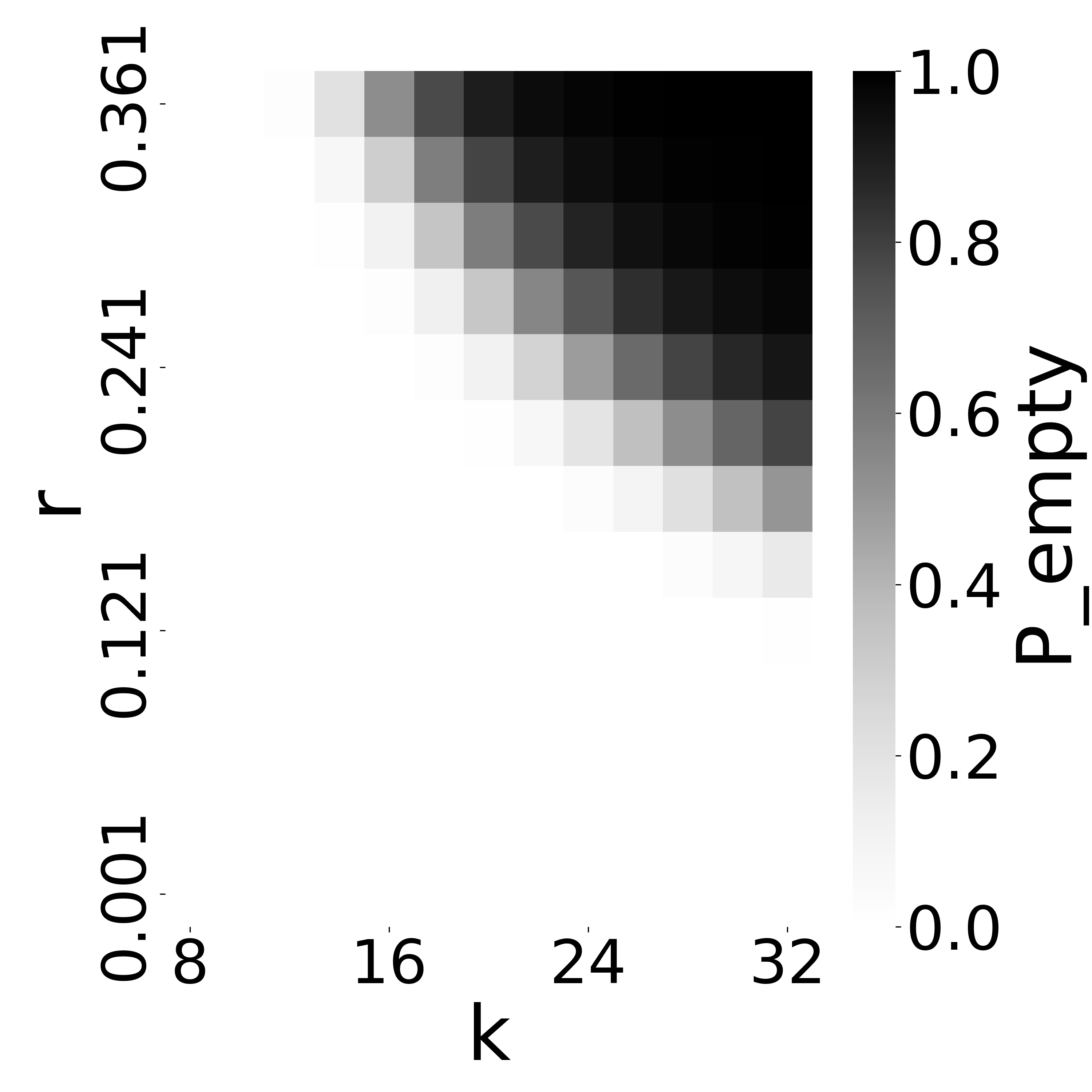

Figure 4 evaluates the estimators on D-hardest while fixing and varying . For , both estimators become unstable (not shown in figure); similar to the case of high substitution rates, the observed intersection size was frequently 0. For smaller , our estimator performed much better than , e.g. for , the average was .

The relative performance of the two estimators can be explained algebraically. The estimator is derived using the approximation that the probability that a -mer from remains after substitutions as . Our estimator uses the approximation that remains as . For singleton -mers, these probabilities are equal, but for repetitive sequences, the effect of cannot be neglected; therefore, gets progressively worse as the datasets become more repetitive. Furthermore, on . Consequently, tends to be higher than . The difference between and increases as decreases. Hence, the gap between and is larger for smaller and smaller , as Figures 3 and 4 show. Finally, as approaches 1, the probability of an empty intersection becomes greater, leading all estimators to output . This explains the pattern for large in Figure 3(d).

6.3 Combination with sketching

Sketching is a powerful technique that can make it possible to quickly compute all-pairs estimates on large datasets [20]. Our estimator lends itself to being applied on the sketched (rather than full) intersection, as follows. Given a threshold , one can use a hash function to uniformly map each -mer to a real number in . A FracMinHash sketch of a sequence is defined as the subset of the -spectrum of that hashes below [11]. In this way, one can compute , the size of the intersection between the sketches of and .

Recall that our estimator is defined by finding the unique value to solve , where is the size of the observed (non-sketched) intersection. It is easy to show that the expected value of over the sketching process is . A natural extension is then to find the unique value of to solve . The only caveat is that in some rare cases for very low mutation rates, may exceed and result in a lack of unique solution; in such cases, we hard code the estimator to return 0.

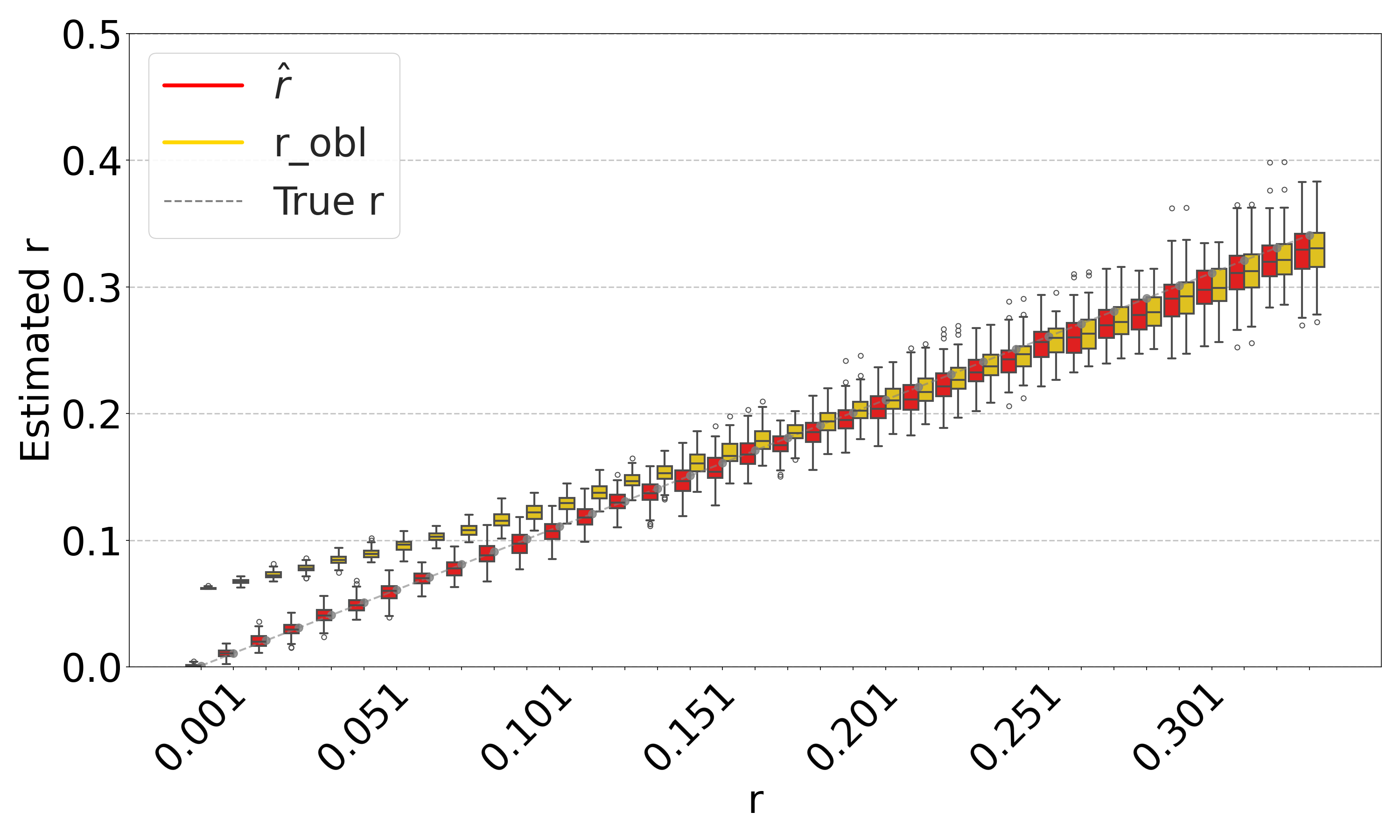

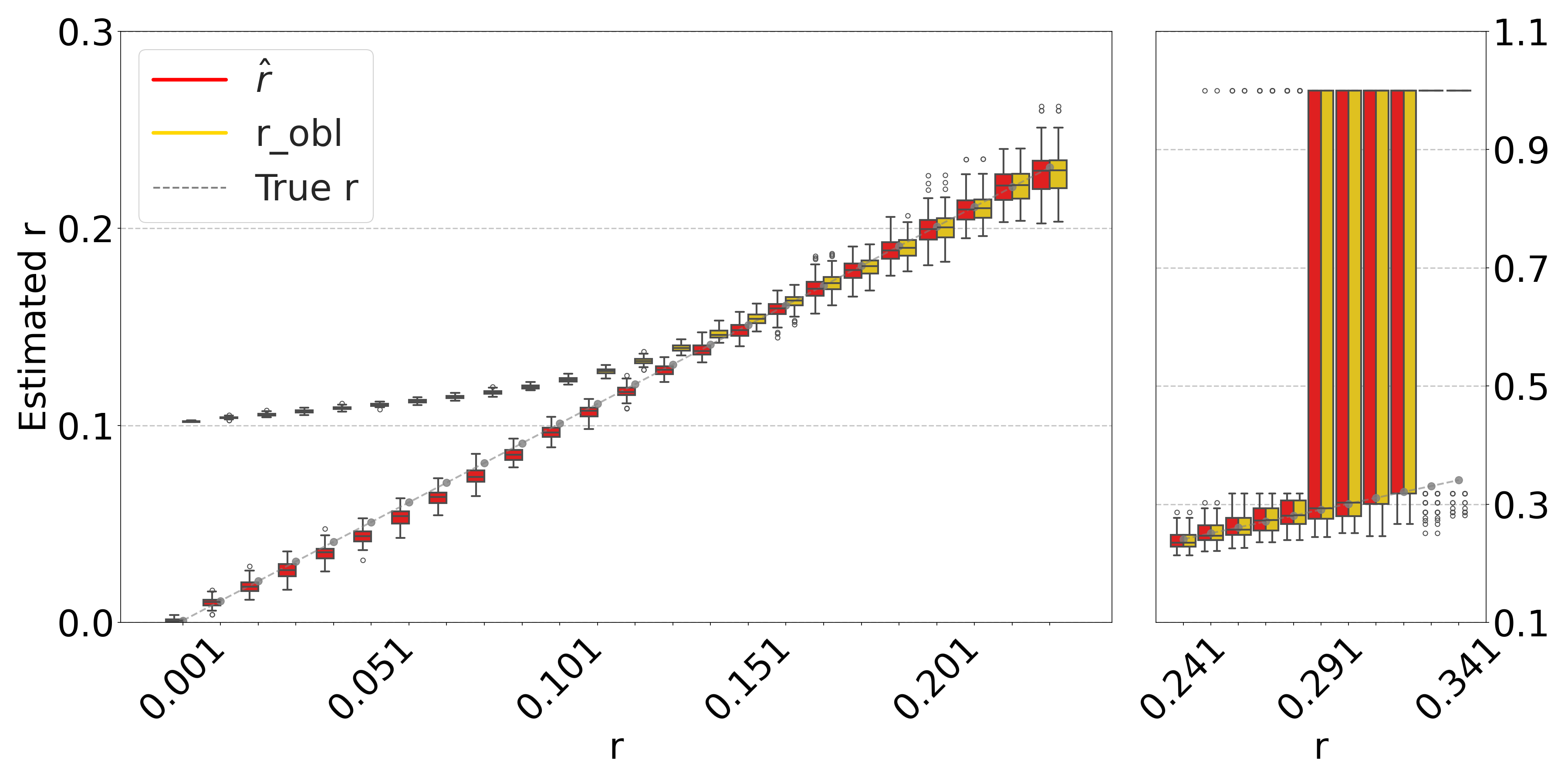

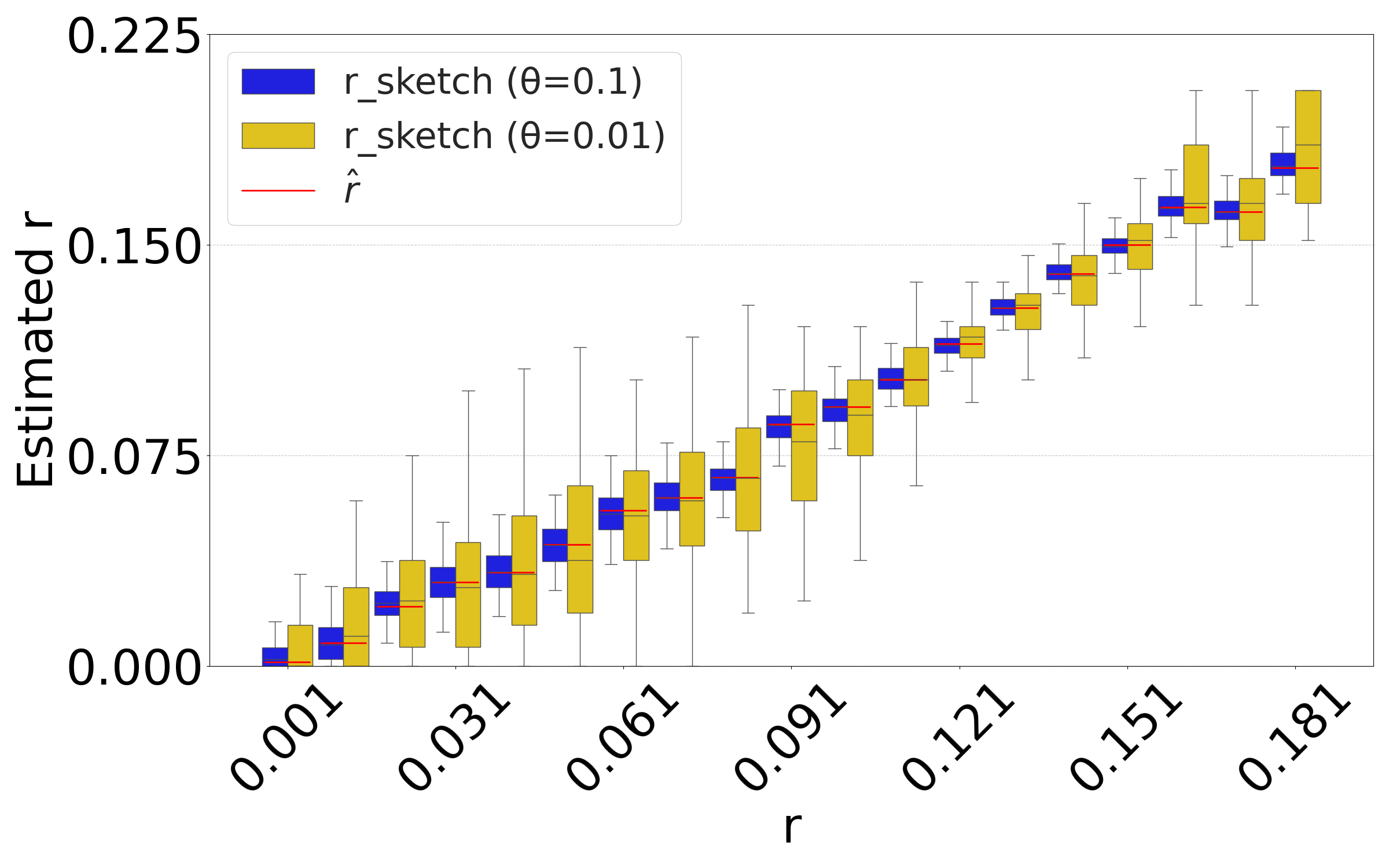

Figure 5(a) shows the accuracy of the resulting estimator on D-hardest, averaged over the combined replicates of the substitution and sketching process. The sketching does not introduce any systematic bias, but, as expected, increases the variance of our estimator. The variance is larger for smaller values. These results indicate that our estimator can indeed be applied to FracMinHashed sequences, with the threshold parameter controlling the trade-off between sketch size and the estimator’s variance.

Figure 5(b) evaluates the isolated impact of the sketching process for a fixed string , which better reflects the typical user scenario. For each substitution rate , we generate a single mutated string and compute the estimate based on the non-sketched intersection. We then replicate the sketching procedure for and and compare the distribution of the sketched estimator to the value of the non-sketched estimate (shown as red bar). The results demonstrate that sketching can accelerate the estimation process, at the cost of introducing controlled variance in the estimates.

6.4 Accuracy as a combined function of and

The accuracy of our estimator ultimately depends on an intricate interplay between and . A smaller increases the number of repeats, making estimation more challenging. On the other hand, as or increases, the probability increases, leading to a higher chance of an empty intersection size and an unreliable estimator. To more thoroughly explore the space of all values, Figure 6 evaluates the average relative absolute error, defined as , over a wide range of and . This combines our estimator’s empirical bias and variance, indicating the parameter ranges at which our estimator is reliable.

We note that a user is usually able to choose but not . For , they typically have only a rough range on what it might be. For instance, substitution rates of more than are unlikely for biologically functional sequences. Therefore, choosing a boils down to choosing a column from the heatmap that is good for the desired range. Figure 6 shows that choosing a in the range of 10 to 20 would work well for all of our datasets.

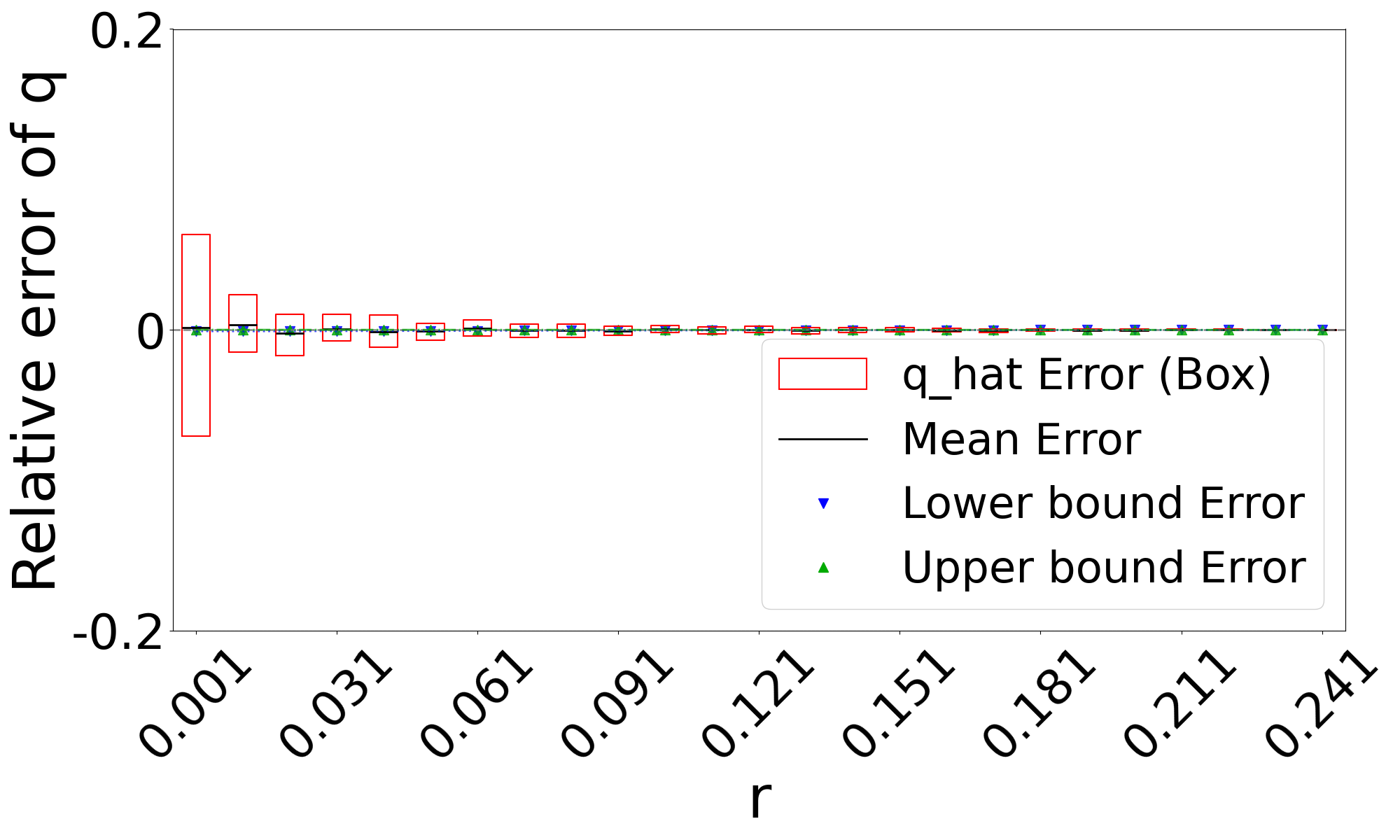

6.5 Theoretical bounds on the bias

Theorem 3 gives theoretical bounds on the bias of . To validate these bounds empirically, we run simulations, using the same setup as in Figure 3. Figure 7 shows that the empirical mean usually lies within the bias bounds, as the theory predicts. In cases where it does not, the empirical variance is high, indicating that the empirical mean has not yet converged to within the bounds. Furthermore, we see that the upper bound is nearly tight. This is consistent with the fact that overlapping -mers are rare (Table 1), implying that that is approximately equal to our lower bound on the expected intersection size (i.e. ).

The lower bound tracks the true value closely, except in the range of of D-hardest. We believe this is primarily due to the looseness of the variance upper bound in Theorem 2. When we plugged the observed empirical variance of in place of in Theorem 3, the lower bound curve no longer behaved abnormally in D-hardest (plot not shown). Furthermore, when we additionally replaced both and with the observed empirical mean of , the bounds closely captured the empirical mean of . These empirical results suggest that the estimator satisfies the approximation . In other words, when we have looseness in the bias bounds, it is due to the looseness of Theorems 1 and 2 rather than Theorem 3.

6.6 Identifying unstable parameters using Theorem 4

Figure 6 indicates that when and are large enough to lead to a high , our estimator becomes unstable. Our observations indicate that this happens because the intersection becomes empty, resulting in regardless of the true mutation rate. This limitation is anticipated and reflects a fundamental constraint shared by any intersection-based estimator. Figure 7 does not reflect this limitation, because in such cases, the relative error is small simply by virtue of being close to (even though the estimate of is not accurate). We therefore looked for an alternative method to a priori determine, given a high value of , which values of high make our estimator unstable.

We hypothesized that computing the probability of an empty intersection size a priori can identify such unstable regions of the parameter space, without needing to do simulations as for Figure 6. Though computing this probability is challenging in the general case, Theorem 4 gives an upper bound based on only , , and . The upper bound is approximately tight when not considering the effect of repeats. We therefore hypothesized that when is high, our estimator becomes unstable.

Figure 8(a) plots against the accuracy of our estimator. As hypothesized, the substitution rate at which our estimator starts to becomes unstable (around ) coincides with a sharp increase in . To test this more thoroughly, we computed for all values of and for which we evaluated D-hard in Figure 6(c). Figure 8(c) shows that there is a close correspondence between and values where our estimator’s relative error is high and is high. These observations suggest that is a useful diagnostic criterion for determining values of , and when may fail.

7 Conclusion

In this paper, we propose an estimator for the substitution rate between two sequences that is robust in highly repetitive regions such as centromeres. Our experiments validated its performance across a broad range of and values. We provide theoretical bounds on our estimator’s bias (specifically on the bias of ), and show that it accurately captures the estimator’s empirical mean in most scenarios.

For large values of and , i.e., when is large, the intersection of the -spectra tends to be empty with high probability, which is a foreseeable limitation for all intersection-based estimators. To address this, we introduce a heuristic criterion, , which depends only on the number of -mers , the -mer size , and the substitution rate . This criterion allows us to heuristically identify parameter settings under which the estimator becomes unstable.

We also showed how our estimator can be easily combined with FracMinHashing. Empirical results show that sketching does not introduce systematic bias, albeit at the cost of increased variance.

We do not perform a runtime analysis of our estimator because it completes in less than a second on our data. The runtime of our estimator is the time it takes to solve an equation numerically using Newton’s method. Since is a polynomial and the solution is constrained to the interval , Newton’s method converges in iterations, where is the target precision. Each iteration involves evaluating and its derivative, which takes time proportional to the number of non-zero terms. Except for esoteric corner cases, the number of such terms is small in practice.

The immediate open problem is to tighten the theoretical bounds on the bias. Future work could thus focus on deriving a tighter variance bound to strengthen the theoretical characterization of . A bigger open question is how to derive confidence intervals. This is a more challenging problem than bounding the bias because it requires a deeper understanding of the estimator’s distribution.

Our estimator could potentially be extended to work on unassembled sequencing reads, as opposed to assembled genomes. Our method does not rely on the -mer multiplicities in the intersection size, making it amenable to such a scenario. Still, one of the limitations of our estimator is the need to know the abundance histogram of the source string. A tool like GenomeScope [31] can estimate the abundance histogram from sequence data -mer counts. Alternatively, the user may choose to use an abundance histogram from a related genome, as related genomes are likely to have similar abundance histograms. Fully adapting this estimator to work with sequencing data remains an important future work.

References

- [1] Anton Bankevich, Andrey V. Bzikadze, Mikhail Kolmogorov, Dmitry Antipov, and Pavel A. Pevzner. Multiplex de Bruijn graphs enable genome assembly from long, high-fidelity reads. Nature Biotechnology, 40(7):1075–1081, 2022.

- [2] Anton Bankevich, Sergey Nurk, Dmitry Antipov, Alexey A Gurevich, Mikhail Dvorkin, Alexander S Kulikov, Valery M Lesin, Sergey I Nikolenko, Son Pham, Andrey D Prjibelski, et al. SPAdes: a new genome assembly algorithm and its applications to single-cell sequencing. Journal of computational biology, 19(5):455–477, 2012. doi:10.1089/cmb.2012.0021.

- [3] Mahdi Belbasi, Antonio Blanca, Robert S Harris, David Koslicki, and Paul Medvedev. The minimizer jaccard estimator is biased and inconsistent. Bioinformatics, 38(Supplement_1):i169–i176, June 2022. doi:10.1093/bioinformatics/btac244.

- [4] G. Benson. Tandem repeats finder: a program to analyze DNA sequences. Nucleic Acids Research, 27(2):573–580, 1999.

- [5] Antonio Blanca, Robert S Harris, David Koslicki, and Paul Medvedev. The statistics of k-mers from a sequence undergoing a simple mutation process without spurious matches. Journal of Computational Biology, 29(2):155–168, 2022. doi:10.1089/cmb.2021.0431.

- [6] George Casella and Roger L. Berger. Statistical inference. Duxbury, Pacific Grove, Calif., 2. ed. edition, 2002.

- [7] Luca Denti, Marco Previtali, Giulia Bernardini, Alexander Schönhuth, and Paola Bonizzoni. MALVA: genotyping by Mapping-free ALlele detection of known VAriants. iScience, 18:20–27, 2019.

- [8] Huan Fan, Anthony R Ives, Yann Surget-Groba, and Charles H Cannon. An assembly and alignment-free method of phylogeny reconstruction from next-generation sequencing data. BMC genomics, 16(1):522, 2015.

- [9] Robert S. Harris and Paul Medvedev. Improved representation of Sequence Bloom Trees. Bioinformatics, 36(3):721–727, 2020. doi:10.1093/bioinformatics/btz662.

- [10] Bernhard Haubold, Fabian Klötzl, and Peter Pfaffelhuber. andi: Fast and accurate estimation of evolutionary distances between closely related genomes. Bioinformatics, 31(8):1169–1175, 2014. doi:10.1093/bioinformatics/btu815.

- [11] Mahmudur Rahman Hera, N Tessa Pierce-Ward, and David Koslicki. Deriving confidence intervals for mutation rates across a wide range of evolutionary distances using fracminhash. Genome research, 33(7):1061–1068, 2023.

- [12] Chirag Jain, Luis M Rodriguez-R, Adam M Phillippy, Konstantinos T Konstantinidis, and Srinivas Aluru. High throughput ani analysis of 90k prokaryotic genomes reveals clear species boundaries. Nature communications, 9(1):1–8, 2018.

- [13] Bryce Kille, Erik Garrison, Todd J Treangen, and Adam M Phillippy. Minmers are a generalization of minimizers that enable unbiased local Jaccard estimation. Bioinformatics, 39(9), 2023. doi:10.1093/bioinformatics/btad512.

- [14] Téo Lemane, Nolan Lezzoche, Julien Lecubin, Eric Pelletier, Magali Lescot, Rayan Chikhi, and Pierre Peterlongo. Indexing and real-time user-friendly queries in terabyte-sized complex genomic datasets with kmindex and ORA. Nature Computational Science, 4(2):104–109, 2024. doi:10.1038/s43588-024-00596-6.

- [15] Heng Li. Minimap2: pairwise alignment for nucleotide sequences. Bioinformatics, 34(18):3094–3100, 2018. doi:10.1093/bioinformatics/bty191.

- [16] Guillaume Marçais, Brad Solomon, Rob Patro, and Carl Kingsford. Sketching and sublinear data structures in genomics. Annual Review of Biomedical Data Science, 2(1):93–118, 2019.

- [17] Shannon M McNulty and Beth A Sullivan. Alpha satellite DNA biology: finding function in the recesses of the genome. Chromosome research, 26:115–138, 2018.

- [18] Burkhard Morgenstern, Bingyao Zhu, Sebastian Horwege, and Chris André Leimeister. Estimating evolutionary distances between genomic sequences from spaced-word matches. Algorithms for Molecular Biology, 10(1), 2015.

- [19] Sergey Nurk, Sergey Koren, Arang Rhie, Mikko Rautiainen, Andrey V Bzikadze, Alla Mikheenko, Mitchell R Vollger, Nicolas Altemose, Lev Uralsky, Ariel Gershman, et al. The complete sequence of a human genome. Science, 376(6588):44–53, 2022.

- [20] Brian D Ondov, Todd J Treangen, Páll Melsted, Adam B Mallonee, Nicholas H Bergman, Sergey Koren, and Adam M Phillippy. Mash: fast genome and metagenome distance estimation using MinHash. Genome Biology, 17(1):132, 2016.

- [21] Arang Rhie, Sergey Nurk, Monika Cechova, Savannah J. Hoyt, Dylan J. Taylor, Nicolas Altemose, Paul W. Hook, Sergey Koren, Mikko Rautiainen, Ivan A. Alexandrov, Jamie Allen, Mobin Asri, Andrey V. Bzikadze, Nae-Chyun Chen, Chen-Shan Chin, Mark Diekhans, Paul Flicek, Giulio Formenti, Arkarachai Fungtammasan, Carlos Garcia Giron, Erik Garrison, Ariel Gershman, Jennifer L. Gerton, Patrick G. S. Grady, Andrea Guarracino, Leanne Haggerty, Reza Halabian, Nancy F. Hansen, Robert Harris, Gabrielle A. Hartley, William T. Harvey, Marina Haukness, Jakob Heinz, Thibaut Hourlier, Robert M. Hubley, Sarah E. Hunt, Stephen Hwang, Miten Jain, Rupesh K. Kesharwani, Alexandra P. Lewis, Heng Li, Glennis A. Logsdon, Julian K. Lucas, Wojciech Makalowski, Christopher Markovic, Fergal J. Martin, Ann M. Mc Cartney, Rajiv C. McCoy, Jennifer McDaniel, Brandy M. McNulty, Paul Medvedev, Alla Mikheenko, Katherine M. Munson, Terence D. Murphy, Hugh E. Olsen, Nathan D. Olson, Luis F. Paulin, David Porubsky, Tamara Potapova, Fedor Ryabov, Steven L. Salzberg, Michael E. G. Sauria, Fritz J. Sedlazeck, Kishwar Shafin, Valery A. Shepelev, Alaina Shumate, Jessica M. Storer, Likhitha Surapaneni, Angela M. Taravella Oill, Françoise Thibaud-Nissen, Winston Timp, Marta Tomaszkiewicz, Mitchell R. Vollger, Brian P. Walenz, Allison C. Watwood, Matthias H. Weissensteiner, Aaron M. Wenger, Melissa A. Wilson, Samantha Zarate, Yiming Zhu, Justin M. Zook, Evan E. Eichler, Rachel J. O’Neill, Michael C. Schatz, Karen H. Miga, Kateryna D. Makova, and Adam M. Phillippy. The complete sequence of a human y chromosome. Nature, 621(7978):344–354, 2023.

- [22] Will P. M. Rowe. When the levee breaks: a practical guide to sketching algorithms for processing the flood of genomic data. Genome Biology, 20(1):199, 2019.

- [23] Sophie Röhling, Alexander Linne, Jendrik Schellhorn, Morteza Hosseini, Thomas Dencker, and Burkhard Morgenstern. The number of k-mer matches between two dna sequences as a function of k and applications to estimate phylogenetic distances. Plos one, 15(2):e0228070, 2020.

- [24] Shahab Sarmashghi, Kristine Bohmann, M Thomas P Gilbert, Vineet Bafna, and Siavash Mirarab. Skmer: assembly-free and alignment-free sample identification using genome skims. Genome Biology, 20(1):1–20, 2019.

- [25] Tizian Schulz and Paul Medvedev. ESKEMAP: exact sketch-based read mapping. Algorithms for Molecular Biology, 19(1):19, 2024. doi:10.1186/s13015-024-00261-7.

- [26] Jim Shaw and Yun William Yu. Fast and robust metagenomic sequence comparison through sparse chaining with skani. Nature Methods, 20(11):1661–1665, 2023.

- [27] Jim Shaw and Yun William Yu. Proving sequence aligners can guarantee accuracy in almost time through an average-case analysis of the seed-chain-extend heuristic. Genome Research, 33(7):1175–1187, 2023.

- [28] Kai Song, Jie Ren, Gesine Reinert, Minghua Deng, Michael S Waterman, and Fengzhu Sun. New developments of alignment-free sequence comparison: measures, statistics and next-generation sequencing. Briefings in bioinformatics, 15(3):343–353, 2014. doi:10.1093/bib/bbt067.

- [29] Daniel S Standage, C Titus Brown, and Fereydoun Hormozdiari. Kevlar: a mapping-free framework for accurate discovery of de novo variants. iScience, 18:28–36, 2019.

- [30] Chen Sun and Paul Medvedev. Toward fast and accurate snp genotyping from whole genome sequencing data for bedside diagnostics. Bioinformatics, 35(3):415–420, 2018. doi:10.1093/bioinformatics/bty641.

- [31] Gregory W Vurture, Fritz J Sedlazeck, Maria Nattestad, Charles J Underwood, Han Fang, James Gurtowski, and Michael C Schatz. Genomescope: fast reference-free genome profiling from short reads. Bioinformatics, 33(14):2202–2204, 2017. doi:10.1093/bioinformatics/btx153.

- [32] Haonan Wu, Antonio Blanca, and Paul Medvedev. Repeat-Aware_Substitution_Rate_Estimator. Software, swhId: swh:1:dir:258c949c42d162c56f1e09a0ece39722a5076601 (visited on 2025-08-04). URL: https://github.com/medvedevgroup/Repeat-Aware_Substitution_Rate_Estimator, doi:10.4230/artifacts.24318.

- [33] Haonan Wu, Antonio Blanca, and Paul Medvedev. Reproducibility repository. https://github.com/medvedevgroup/kmer-stats-repeat_Reproduce.

- [34] Huiguang Yi and Li Jin. Co-phylog: an assembly-free phylogenomic approach for closely related organisms. Nucleic Acids Research, 41(7):e75–e75, 2013.

Appendix A Appendix: Missing Proofs

Theorem 4.

[Restated, see original statement.]

Let be a string of length at least . The probability that every interval of length in has at least one substitution can be computed in time with a dynamic programming algorithm that takes as input only , , (not ).

Proof.

Let be the event that every interval of length in has at least one substitution. We claim that the following recurrence holds, which automatically leads to the dynamic programming algorithm of the desired time.

We will use -span to denote an interval of length in . For the case that , is the probability that the first -mer mutates, which is . For , we do the following. We denote as the event that there is at least one substitution in , as the event that there is at least one substitution in , and as the event that there is at least one substitution in . Then we have

| (4) | ||||

| (5) | ||||

| (6) |

For the last case (), let be the event that is the position of the rightmost substitution in . Observe that for , and are mutually exclusive. Furthermore, observe that the rightmost mutation position must be at least , otherwise the -span starting at is not mutated. Therefore, by the law of total probability, we have

| (7) |

Observe that if there is a substitution at position , then all the -spans beginning at positions are mutated. Therefore, . Hence,

| (8) | ||||

| (9) |

Lemma A.1.

Let be a sum of random variables , and let . Then

Proof.

Lemma A.2.

is invertible on . Moreover, if there exists at least one -mer with , then, on the intervals and , and letting denote the inverse of , we have that

| (10) | ||||

| (11) | ||||

| (12) | ||||

| (13) |

Proof.

Recall that is the number of -mers that have copies, and that . Since all values are non-negative, all derivatives of are non-positive on . Moreover, since we assume that is strictly positive, the first derivative of is strictly negative on . The derivatives of can be expressed in terms of the derivatives of and by applying the inverse function rule from basic calculus.