MUSE: Designing Immersive Virtual Realities for Spaceflight UX Research

Abstract

Virtual reality (VR) provides unique opportunities for assessing early spacecraft design and usability by employing human-centered narrative and scenario-driven design methods. This paper details a narrative-focused VR simulation of a speculative spaceflight scenario, emphasizing narrative techniques for enhancing user immersion and user testing for evaluating operational usability inside a spacecraft capsule. We designed a Modular User-centric Spaceflight Experience (MUSE), including a spacecraft capsule design and a virtual mission scenario, based on the findings and suggestions of the Human Inspirator Co-Engineering (HICE) study. Results from user testing with MUSE underline the effectiveness and opportunities of narrative scenarios in early UX evaluations for improving experience flow, operational understanding, and user engagement. At the same time, several questions remain about how best to measure the user insight and action motivation that arise from narrative immersion in the VR experience.

Keywords and phrases:

Virtual Reality, Spaceflight Simulation, Narrative Design, Game Design, Scenario Design, Immersive Experience

2012 ACM Subject Classification:

Human-centered computing → Virtual reality; Human-centered computing → Usability testing; Human-centered computing → Scenario-based design; Human-centered computing → User centered design

Acknowledgements:

Many thanks to Stefano Scolari and Tommy Nilsson for their contributions to the research leading to this publication: Stefano for his support with the interactions and software development of the VR experience, and Tommy for providing design support with 3D and visual elements for the VR experience.

Editors:

Leonie Bensch, Tommy Nilsson, Martin Nisser, Pat Pataranutaporn, Albrecht Schmidt, and Valentina Sumini

Series and Publisher:

Open Access Series in Informatics, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

1 Introduction

Virtual Reality (VR) and Extended Reality (XR) technologies have increasingly become essential tools in astronaut and space operations training, primarily due to their flexibility, cost efficiency, and immersive capabilities [19, 18, 2, 3, 10]. This versatility means that VR can address particular training needs, such as the execution of operational activities and the manipulation of machines. An emerging trend suggests that combining immersive environments with narrative capabilities provides a much deeper emotional connection and action-readiness through dramatic or structured storytelling pacing [8, 21, 4]. Developing holistic narrative immersion experiences provides notable advantages for early mission design by leveraging VR environments to improve user interfaces and user experience, especially regarding scenario realism, intuitive decision-making, and creativity enhancement. Deeper immersion in a virtual experience has been found to offer cognitive benefits that support intuitive decision-making and engage creativity [5, 17, 11]. The research on the Modular User-centric Spaceflight Experience (MUSE) builds upon existing research carried out at the European Astronaut Centre (EAC) on immersive virtual operations [19, 18] and expands the horizon of immersive virtual experiences to a mission onboard a small speculative crewed spacecraft. This was a development opportunity identified by ESA’s Human Inspirator Co-Engineering (HICE) study. MUSE addresses the previously identified narrative, human-centered opportunities of VR by integrating a narrative-driven design framework into a modular project structure, combined with user testing during simulated capsule docking operations.
By utilizing immersive frameworks to enhance the realism and effectiveness of operational training scenarios, we gathered insight-based user feedback to understand better how game engine flexibility serves experience immersion and narrative structure, and may help or hinder operational awareness during a simulated mission in a spacecraft capsule environment [15, 25, 13]. Based on these goals, the study addresses two core research questions. Firstly, how can immersive narrative-based VR experiences be designed to foster user interest and engagement with human spaceflight missions to LEO and lunar orbit? Secondly, in what ways can a speculative VR simulation, based on current mission knowledge, be used to anticipate and inform the design of future spacecraft operations?

2 Related Work and Theoretical Foundations

2.1 VR/XR Uses in Spaceflight Training

Virtual Reality (VR) and Extended Reality (XR) technologies are increasingly prominent tools in astronaut training and space mission preparation, primarily due to their development flexibility, reduced physical risks to testers, and cost efficiency at large. Traditional astronaut training methods, such as analogue environments, neutral buoyancy pools, and parabolic flights remain invaluable, but they are also increasingly resource-intensive, may require additional specific training, entail physical risks, and may have limitations concerning repeatability and scenario realism. In contrast, VR and XR offer highly controlled and reproducible simulation environments that facilitate extensive rehearsal of operational tasks with significantly lower resource demands [19, 3]. There remain several challenges, too, regarding the use of VR for training, particularly aspects of technology constraints, user comfort and user interface design needs [16].

With these challenges and opportunities in mind, VR and XR technologies are being studied for their potential to support human and robotic mission training in space systems development. Immersive technologies, such as immersive VR experiences, have been identified as an opportunity to support task-focused training and machine operation, largely in areas of skill development and the performance of specific procedural tasks that support memorization and recall [12]. Other uses of immersive VR engage the user on a more personalized level, in areas such as customized training, team building, inspiration, and motivation. Various studies also demonstrate the potential of VR environments for the design and evaluation of usability [10, 19, 18, 8]. The need for immersive enrichment in VR-based scenario training has been clearly identified within the European Space Agency’s (ESA) operational contexts, where it is used extensively both for astronaut training and as an aspect of operational ergonomics during early mission design. For example, a case study on the EL3 lander conducted at the European Astronaut Centre (EAC) by Nilsson et al. (2022) highlights how structured timelines and scenario-based interaction enhance immersion and help craft meaningful scenarios. Structuring the VR experience narrative for immersive realism was seen as crucial for the value of the experience [19].

Narrative elements are more commonly employed in VR games and cultural visual experiences, where they can carry aspects of psychological realism, storytelling, and emotionally engaging action [11, 17]. Recent research indicates that immersion within narrative-rich virtual experiences significantly enhances user creativity and the capacity for insight-based problem-solving by evoking awe and fostering deeper cognitive and emotional engagement [17, 11, 25, 14].

2.2 The Key Advantages of Using Narrative and Scenario-Driven Design in Game Design

Game design theory and narrative design methodologies offer useful tools for structuring immersive virtual reality (VR) environments, particularly in the contexts of training new tasks and speculative scenario development. Frameworks like Freytag’s Pyramid and the Hero’s Journey are commonly used in interactive media and serve not only as storytelling devices but also as models for guiding emotional pacing, cognitive engagement, and decision-making within a simulated context [21, 4, 1]. These narrative structures can be aligned with Csikszentmihalyi’s theory of Flow, in which users experience a high level of focus and involvement when the balance between task difficulty and user skill is carefully managed [7]. When integrated into VR simulations, these frameworks can support emotional involvement in the simulated world and promote intuitive responses to emerging events. This aligns with Carpenter’s description of insight as a moment of sudden understanding born from unconscious cognitive processing, contributing to adaptive thinking and creative problem-solving [5].

From a user interface (UI) and user experience (UX) perspective, narrative immersion offers several practical benefits. It can reduce cognitive load, support attentional focus, and enable users to respond more effectively in high-pressure operational contexts [2, 23]. Narrative components in user interfaces may also help with memory retention and emotional relevance, which can be especially helpful in training settings where task repetition alone may not address all learning goals. By applying narrative-oriented methods from game design, developers can create virtual training tools that foster adaptability, creativity, and engagement. These qualities are relevant for astronaut task and mission preparation, particularly in complex scenarios involving different tasks [15, 25, 19]. Including game design methodology more centrally in design of VR experiences can further advance the development of systematic design practices that link performance requirements of these experiences with the cognitive and emotional dimensions of users in space environments.

3 Methodology

3.1 Scenario Ideation and Narrative Development Techniques

The scenario developed for this research adopted iterative and participatory design methods, explicitly structured around the well-established narrative framework of Freytag’s Pyramid, whose clearly defined phases, including rising action, climax, and falling action, create a dramatic and comprehensible narrative arc. This structured approach (see Fig. 1) supported both operational realism, by giving the mission a structured phasing, and immersive storytelling, by providing users with emotional and cognitive pacing for deeper immersion and enhanced engagement throughout the experience [21, 4].

Based on ESA’s Human Inspirator Co-Engineering (HICE) study, the MUSE VR experience aimed to assess the technical and programmatic feasibility of extended human missions, including existing and new opportunities to Low Earth Orbit (LEO), Lunar Gateway station, and the lunar surface. This initiative offered multiple realistic use-cases, of which one scenario was adopted as the narrative framework for this research (see Fig. 2). Following the recommendations and preliminary findings of the HICE study, the scenario closely adhered to the identified challenges and opportunities of crewed missions starting from LEO and culminating in docking operations at the Lunar Gateway station, including astronaut transfer from the spacecraft to the station. The architectural elements of the spacecraft, particularly its capsule environment and its configurations, were likewise informed by the HICE study outcomes, ensuring that the virtual experience maintained authenticity and relevance to current real-world considerations while offering valuable insights into user interface (UI) and user experience (UX) design.

Given that mission timelines and specific operational details within the HICE study remain speculative, the scenario and spacecraft design required considerable adaptability to accommodate the needs of several stakeholders and technology opportunities. Thus, a modularization strategy was adopted (see Fig. 3). Leveraging the flexibility of the Unreal Engine 5.3 VR development pipeline, the modular structure enabled interchangeable scenario components and 3D model configurations, accommodating evolving knowledge and iterative refinements throughout development. This approach allowed scenario elements such as spacecraft modules and narrative mission parts to be quickly adapted or replaced as new insights emerged, enhancing responsiveness to changing scenario understanding and facilitating future scalability and refinements in the training experience [6].
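The idea of interchangeable scenario components can be illustrated with a minimal sketch. It is written in Python for brevity and is not taken from the MUSE project, which was built in Unreal Engine; the class names, slot names, and module names below are all hypothetical and serve only to show how one part can be swapped without touching the others.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ScenarioModule:
    """One interchangeable part of the experience (hypothetical structure)."""
    slot: str  # the role this part plays, e.g. "capsule_interior"
    name: str  # the concrete variant currently filling that role


@dataclass
class Scenario:
    """Maps each slot to the module that currently fills it."""
    modules: dict = field(default_factory=dict)

    def install(self, module: ScenarioModule) -> None:
        # Installing a module replaces whatever previously occupied its slot.
        self.modules[module.slot] = module

    def describe(self) -> list:
        # Sorted by slot name so the listing is stable across runs.
        return [f"{slot}: {m.name}" for slot, m in sorted(self.modules.items())]


# Hypothetical initial configuration.
scenario = Scenario()
scenario.install(ScenarioModule("capsule_interior", "four_seat_layout"))
scenario.install(ScenarioModule("mission_phase_docking", "gateway_docking_v1"))

# Swap only the interior; the docking phase is left untouched.
scenario.install(ScenarioModule("capsule_interior", "two_seat_cargo_layout"))
print(scenario.describe())
```

Keying modules by slot means a replacement never needs to know about the other parts of the scenario, which is the property the modularization strategy relies on.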

3.2 Speculative Mission Scenario and Spacecraft Design Rationale

While inspired by existing and conceptual spacecraft designs, such as the Orion and Crew Dragon capsules, the spacecraft developed for the virtual scenario in MUSE incorporated further findings from the HICE study, such as the earlier mentioned need for adaptability to evolving mission design requirements. The design aimed to balance narrative-driven immersion with operational authenticity by managing constraints like spacecraft scale, visual fidelity, and internal functionality through strategic planning of interchangeable and adjustable parts (see Fig. 3 and Fig. 5). This modular methodology aligns with established best practices from previous immersive virtual experiences developed at ESA, where structural flexibility and adaptability have supported ongoing iteration for mission prototyping and user testing [19, 2].

In addition to the physical and spatial design, narrative immersion was further grounded through the integration of a voice-loop system modeled on real docking procedures between the Crew Dragon spacecraft and the International Space Station (ISS). Audio material was referenced from public Apollo mission archives and supplemented by live dialogue recorded during a recent Crew Dragon docking operation. This audio was simplified, processed, and adapted into a three-way communication structure that reflects the style and content of authentic operational voice loops. By embedding this system into the VR narrative, the experience preserved operational realism, for example by simulating realistic challenges in docking operations, while introducing new narrative elements, effectively bridging fictional scenario development with authentic astronaut communication dynamics.

Special emphasis was placed on the spacecraft capsule design, carefully refining both external geometry and internal layouts to align closely with the HICE study’s identified goals, including habitability, ergonomics, and operational efficiency. Modularity of 3D parts ensured that the spacecraft interior and scenario interactions could remain dynamically aligned with evolving mission requirements and new ergonomic insights as they arose through ongoing research and user feedback. Modular design allows for targeted testing and training scenarios, offering insights into user interaction design, mission planning, and decision-making effectiveness across diverse user groups, from mission planners to astronauts [6].

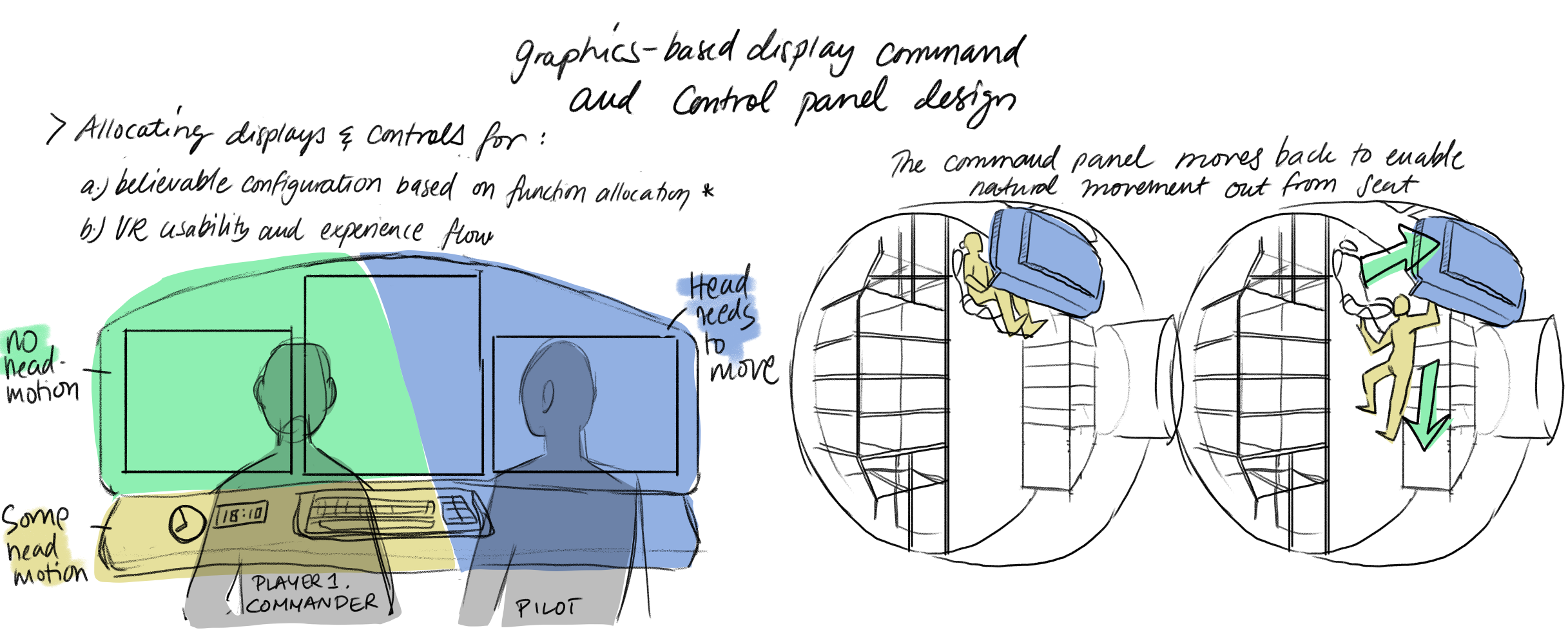

Interior design specifically emphasized immersive user experiences, aligning narrative interactions with the functional and ergonomic realities of the spacecraft environment (see Fig. 4), such as the identified division of onboard workspace intended for the VR user [20]. Environmental storytelling techniques, such as integrating a narrator’s voice, were added to enhance intuitive decision-making processes. This narrative approach aimed to complement traditional heuristic-based training methods by actively facilitating intuitive, insight-driven strategies among users and fostering deeper cognitive engagement and situational awareness [5, 11, 10].

4 Experimental Setup and Evaluation Methods

The immersive narrative-driven VR experience created for the speculative mission served as the experimental setup for gathering user feedback. In particular, we wanted to gather data on the user experience during the spaceflight scenario, including the more task-oriented docking operations. An evaluation framework was created to cover the different aspects of the experience and to collect both quantitative and qualitative research data.

4.1 Narrative and Situational Scenario Evaluation Methodology

The evaluation framework combined semi-structured quantitative assessments and qualitative exploratory approaches, employing think-aloud protocols, observational methods, structured surveys, and more detailed qualitative interviews regarding the overall experience.

Our first research question required understanding both when and how much the user was engaged with the experience. Our methodology therefore included monitoring users’ movements and engagement activity to gauge cognitive investment in any given situation. A similar technique has been described, for example, by Sun et al. [24] to observe how users physically engaged with critical scenario events. User behaviors, such as turning towards audio cues, manipulating interface elements, or physically leaning into tasks, were logged and cross-referenced with the quantitative post-experience assessment. Makransky et al. (2019) emphasize the value of combining self-report measures (e.g., enjoyment, motivation, and self-efficacy) with behavioral transfer tests to comprehensively evaluate immersive VR training [14]. Emphasis was therefore placed on capturing intuitive decision-making processes in the moment and on conducting a qualitative interview after testing [23, 16, 19].

To explore our second research question, the VR simulation incorporated a simplified Situation Awareness Global Assessment Technique (SAGAT) to assess user responses to modular capsule environments. In particular, this was done by measuring user responsiveness to operational cues, most of them audio, through timed reactions, showing how narrative-driven scenarios influenced intuitive decision-making during operation. Furthermore, a situational awareness rating technique was used as a quantitative self-assessment after the testing, keeping in mind that self-rating after testing is subject to limitations such as poor recall [6, 9, 22].
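The timed-reaction part of such an assessment could be scored along the following lines. This is a minimal sketch under stated assumptions, not the tooling used in the study: the function name, step names, and timestamps are invented for illustration, and cue and response times are assumed to be seconds from the start of the session.

```python
from statistics import mean


def score_responses(cue_times, response_times):
    """Classify each step and compute latency where a valid response exists.

    cue_times: step name -> time the cue was given (seconds).
    response_times: step name -> time the user responded (seconds);
        a step without an entry counts as missed.
    Returns: step name -> (status, latency in seconds or None).
    """
    results = {}
    for step, cue_t in cue_times.items():
        resp_t = response_times.get(step)
        if resp_t is None:
            results[step] = ("missed", None)
        elif resp_t < cue_t:
            # The step was marked complete before its cue was given.
            results[step] = ("premature", None)
        else:
            results[step] = ("ok", round(resp_t - cue_t, 2))
    return results


# Hypothetical docking cues and manually logged responses.
cues = {"hold_point": 12.0, "soft_capture": 47.5, "hard_dock": 80.0}
responses = {"hold_point": 14.5, "soft_capture": 45.0}

scored = score_responses(cues, responses)
valid = [latency for status, latency in scored.values() if status == "ok"]
mean_latency = mean(valid) if valid else None
print(scored, mean_latency)
```

Keeping premature and missed responses as separate categories, rather than folding them into the latency average, keeps the reaction-time figure from being distorted by errors of a different kind.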

These methods enabled us to look at immersion patterns and align user reactions with narrative turning points within the experience. This UX assessment strategy was the foundation for exploring cognitive processes like intuitive decision-making and situational awareness in narrative-driven VR scenarios.
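Aligning logged reactions with narrative stages amounts to a lookup of each observation time against the stage boundaries. The sketch below is illustrative only: the stage names follow the mission stages of the scenario, while the start times, the observations, and the helper name `phase_at` are hypothetical.

```python
from bisect import bisect_right
from collections import Counter

# Hypothetical stage start times in seconds from the start of the session.
PHASES = [
    (0.0, "LEO preparation"),
    (180.0, "lunar flyby transit"),
    (420.0, "approach and docking"),
]
PHASE_STARTS = [start for start, _ in PHASES]


def phase_at(t):
    """Return the name of the narrative stage active at time t (seconds)."""
    # bisect_right finds the first stage starting after t; step back one.
    idx = max(bisect_right(PHASE_STARTS, t) - 1, 0)
    return PHASES[idx][1]


# Hypothetical manually logged observations: (time, behavior).
observations = [
    (35.0, "leans toward window"),
    (200.0, "turns toward audio cue"),
    (430.0, "presses command panel"),
    (455.0, "turns toward audio cue"),
]

# Count how many logged behaviors fall into each narrative stage.
per_phase = Counter(phase_at(t) for t, _ in observations)
print(per_phase)
```

The same lookup could be used to group think-aloud remarks or questionnaire items by stage, so that different data sources share one timeline.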

4.2 User Testing Procedures

The participants were selected to represent a wide variety of stakeholder roles for the MUSE experience. Before the actual testing, the users were identified by their potential stakeholder role and their experience of using VR, and were invited individually to the testing session. Upon entering the session, each user was greeted, given a brief overview of the upcoming test, and given a quick introduction to the VR headset and hand controls before donning the headset. Users were also informed about the general narrative goal of the experience: flying onboard a small spacecraft to the Lunar Gateway and being tested for situational awareness during docking operations. Similarly to the testing setup used by Nilsson et al. for the EL3 lander, the users were encouraged to verbalize their experiences and reasoning during the docking operations [19]. The testing session took about 10 to 15 minutes, depending on the user’s curiosity to explore the experience longer. Immediately afterwards, the users were asked to fill in two questionnaires and to further verbalize their thoughts in a general manner regarding our two research goals.

The user testing involved surveys, observational protocols, and emotional and physical response tracking to support heuristic evaluation and apply a multi-level assessment [23, 16, 8]. Collecting such varied data, however, posed the challenge of how to combine the data meaningfully. The testing did not include tools such as eye or position tracking, nor did it measure the precise moment when users pressed, for example, a button. Instead, two monitors (human observers) recorded these events manually. Before testing, users were instructed on the correct way to react during the situational awareness test: ticking a box (pressing a button) in the VR interface on the capsule’s command panel. Outside the docking operations, the users were also monitored for other physical reactivity, such as naturally occurring reactions to narrative elements when there was no voiced command to interact with the command panel.

4.3 Findings: Usability, Immersion, and Emotional Engagement

The experience was structured into sequential narrative stages: beginning with mission preparations in Low Earth Orbit (LEO), followed by lunar flyby transit, and concluding with approach, docking operations and entering the Lunar Gateway. While one phase was focused on situational awareness and explicitly incorporated a task-based cognitive evaluation (see Fig. 6 and Fig. 7), user feedback and observations across the entire experience consistently revealed high levels of emotional and cognitive engagement. In our assessment of the outcomes it seemed that the relative experience of an individual dealing with similar operational scenarios correlated with faster and more precise reaction times during docking operations.

Participants described a strong sense of excitement and curiosity throughout, particularly in quieter moments before and after operational tasks. Many explored the interior environment of the capsule early in the experience, moving between seats and physically leaning toward the windows to observe passing landmarks such as Earth, the Moon, and the Gateway station (see Fig. 8). These patterns mirror findings by Sun et al. [24], who demonstrated that heightened movement within VR scenarios correlates with stronger presence and task engagement. While functional interaction was concentrated around the command panel due to its central role in managing the experience, user movement patterns also indicated spontaneous interest in non-operational elements of the environment. However, participants generally avoided interacting with passive props like cargo racks, indicating selective engagement shaped by narrative relevance.

These behavioral insights align with Makransky et al.’s [14] findings on immersive learning, which emphasize that immersive VR environments are most effective when users are emotionally engaged and immersed, even if not all elements contribute to learning actively. In our study, physical engagement often increased in anticipation of narrative turning points, reinforcing the important role of storytelling in guiding embodied presence. This also resonates with Carpenter’s work on insight and creative cognition, which highlights that intuitive realizations of information can arise during periods of passive observation or cognitive rest [5]. These moments of exploration and reflective curiosity may thus support the conditions necessary for intuitive insight, underscoring the value of designing narrative structures that allow for such psychological incubation.

Despite the overall success in immersion and reports of a generally good user experience, several usability concerns emerged over the course of the experience (see Fig. 9). Participants occasionally struggled to return to optimal viewing positions after moving around the capsule (spatial navigation issue), sometimes leading to confusion or diminished visibility of essential UI elements like the mission timeline or command panel. During the situational awareness testing, some users inadvertently marked mission steps as complete prematurely. These incidents were often due to misunderstandings of audio cues (audio instruction issue), over-anticipation of upcoming events, or accidental controller contact with the user interface in the limited capsule space represented around them in the VR experience. Such errors emphasize the importance of multi-modal narrative design, combining clear audio cues, visual reinforcement, and spatial UI alignment to support reliable user interaction. Participants also reported cases of immersion being abruptly disrupted by external environmental noises, highlighting the fragile nature of deep presence and the need for consistent sensory control in high-fidelity training simulations, especially for experiences that rely on audio cues and concentrated listening [8, 19, 2, 25].

Importantly, instances of intuitive decision-making were observed, especially when users acted under uncertainty, when they were either misinterpreting technical terminology in the audio loop or attempting to multitask. These moments, although sometimes resulting in operational errors, are informative for understanding how narrative and scenario structure influence not only task accuracy but also individual intuitive cognitive processing. Awareness of such insight-based behavior complements earlier findings by Makransky et al. [14], who advocate for multi-level assessments for VR use. In narrative VR contexts, this represents an insight towards testing individual situational adaptation in both active and passive stages of the experience, rather than merely during the actual mission procedure.

5 Discussion: Narrative’s Role in VR User Experience

According to user feedback, the narrative-driven design significantly enhanced the overall user experience, influencing emotional immersion, intuitive decision-making, and creativity during scenario interactions. Users described experiencing emotionally charged moments like excitement, curiosity, or concern especially when embedded in key scenario transitions, such as approaching the Lunar Gateway and listening to mission-critical voice loops during docking. These reactions support the idea that a coherent narrative structure strengthens engagement by offering emotionally resonant context and purpose.

However, the immersive quality was vulnerable to disruption. Breaks in immersion were commonly reported when users encountered either unclear or overly simplistic UI interactions, audio misalignments, or external distractions (real-world noise bleeding into the experience). Participants reported that in such moments, immersion became more difficult, highlighting the importance of narrative coherence supported by user comfort and consistent sensory input [8, 19, 2, 25].

A particularly notable theme in post-experience interviews was the difficulty many users had articulating why the experience felt meaningful or immersive. While most users reported a strong sense of presence and enjoyment, they often struggled to explain the cognitive processes that led to those impressions. This observation aligns with Carpenter’s insights into intuitive cognition: people often cannot consciously retrace the steps behind intuitive decisions or insight moments due to their unconscious nature [5].

This raises a pressing methodological challenge for further VR research on understanding immersion: if users’ compelling reactions are driven by subconscious, intuitive processes, how can we best observe and interpret them? Future research must grapple with this question, especially when evaluating how immersive storytelling influences cognition in operational scenarios. One way forward may be to draw on the work of Makransky et al. [14], who advocate developing multi-level assessments that combine behavioral tracking, task performance, and motivational responses as an effective way to assess how deeply users internalize and respond to immersive environments.

Our findings echo this: users often displayed physical behaviors, such as orienting toward the windows, leaning toward the control panel, or exploring the capsule interior, that reflect engagement with the narrative even in the absence of active task demands. This suggests that narrative-driven immersion on its own can provoke embodied presence that supports user insight and situational adaptation. Instead of limiting operational evaluations to procedural correctness, narrative immersion provides fertile ground for observing how users adapt creatively or intuitively in speculative spaceflight or other future space mission scenarios.

6 Practical Implications and Future Research

This research underscores the growing value of narrative-driven immersive VR scenarios in crew and astronaut training and early-stage UX/UI exploration for spaceflight. Beyond operational task rehearsal, these experiences demonstrate how storytelling and structured immersion foster deeper emotional involvement, enhance intuitive adaptability, and reinforce situational awareness. These are key skills in unpredictable, high-stakes environments such as long-duration space missions.

Feedback gathered from extended testing, both with research participants and later at public outreach events where the VR experience was presented, indicates strong potential for educational deployment. The emotionally engaging nature of narrative VR makes it suitable not only for astronaut training but also for public engagement and science communication. Story-rich scenarios appear to increase laypersons’ basic understanding of complex mission architectures and human factors in spaceflight, echoing findings by Murray-Smith et al. [15] and Nilsson et al. [18].

Future studies should aim to develop robust methodologies for capturing subconscious or intuitive reactions during immersive experiences. This might include combining movement tracking, emotion recognition, and self-assessment tools, and adapting cognitive science frameworks to interpret spontaneous, insight-based decisions. Such methods would offer valuable new ways to assess non-obvious cognitive processes and to evaluate UI designs intended for fluid, intuitive use in spaceflight systems.

7 Conclusion

Narrative-driven immersive VR emerges as a promising methodology for supporting human-centered UX design and training in speculative spaceflight contexts. Our findings show that immersive storytelling not only fosters emotional engagement but also encourages intuitive decision-making and embodied user presence.

By embedding users within a coherent and emotionally resonant story arc, narrative VR enables researchers and designers to observe complex cognitive and behavioral dynamics, including user intuition, situational adaptability, and motivational engagement. The unconscious nature of insight formation further underlines the need for innovative assessment tools capable of capturing these cognitive responses.

Ultimately, narrative-driven VR holds strong potential to enhance existing training tools for astronaut preparedness, support mapping adaptable thinking, and advance UI and UX design for mission-critical systems. Its utility extends beyond operational rehearsal to science communication, outreach, and scenario-based research. As new mission architectures, space ecosystem stakeholders and deep-space exploration strategies evolve, VR environments that mirror complex cognitive and emotional realities will become essential to preparing humans for space.

References

- [1] Noora Archer. Visual design of quantum physics – lessons learned from nine gamified and artistic quantum physics projects. Master’s thesis, Aalto University, School of Arts, Design and Architecture, Espoo, Finland, 2022. URL: http://urn.fi/URN:NBN:fi:aalto-202301291591.

- [2] Leonie Bensch, Tommy Nilsson, Jan Wulkop, Paul Demedeiros, Nicolas Daniel Herzberger, Michael Preutenborbeck, Andreas Gerndt, Frank Flemisch, Florian Dufresne, Georgia Albuquerque, and Aidan Cowley. Designing for human operations on the moon: Challenges and opportunities of navigational HUD interfaces. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, 2024. doi:10.1145/3613904.3642859.

- [3] Angelica M. Bonilla Fominaya, Rong Kang (Ron) Chew, Matthew L. Komar, Jeremia Lo, Alexandra Slabakis, Ningjing (Anita) Sun, Yunyi (Joyce) Zhang, and David Lindlbauer. Moonbuddy: A voice-based augmented reality user interface that supports astronauts during extravehicular activities. In Adjunct Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology (UIST ’22 Adjunct), 2022. doi:10.1145/3526114.3558690.

- [4] John Bucher. Storytelling for Virtual Reality: Methods and Principles for Crafting Immersive Narratives. Routledge, New York, 2018. URL: https://www.routledge.com/Storytelling-for-Virtual-Reality-Methods-and-Principles-for-Crafting-Immersive-Narratives/Bucher/p/book/9781138629660.

- [5] Wesley Carpenter. The aha! moment: The science behind creative insights. In Toward Super-Creativity, chapter 2. IntechOpen, Rijeka, 2019. doi:10.5772/intechopen.84973.

- [6] Pasquale Castellano. Modularization of vr experience for different user needs and situational awareness framework for muse. Internship report, European Astronaut Centre (EAC), March 2024.

- [7] Mihaly Csikszentmihalyi. Flow: The Psychology of Optimal Experience. Harper and Row, New York, 1990.

- [8] Florian Dufresne, Tommy Nilsson, Geoffrey Gorisse, Enrico Guerra, André Zenner, Olivier Christmann, Leonie Bensch, Nikolai Anton Callus, and Aidan Cowley. Touching the moon: Leveraging passive haptics, embodiment and presence for operational assessments. Acta Astronautica, 2024. doi:10.1145/3613904.3642292.

- [9] Mica R. Endsley. Measurement of situation awareness in dynamic systems. Human Factors, 37(1):65–84, 1995. doi:10.1518/001872095779049499.

- [10] Vanshika Garg, Vaishnavi Singh, and Lav Soni. Preparing for space: How virtual reality is revolutionizing astronaut training. In 2024 IEEE International Conference for Women in Innovation, Technology & Entrepreneurship (ICWITE), pages 78–84. IEEE, 2024. doi:10.1109/ICWITE59797.2024.10503238.

- [11] J. Kidd and E. Nieto McAvoy. Immersive experiences in museums, galleries and heritage sites, 2019. URL: https://www.pec.ac.uk/discussion-papers/immersive-experiences-in-museums-galleries-and-heritage-sites-a-review-of-research-findings-and-issues.

- [12] Mohammad Amin Kuhail, Aymen Zekeria Abdulkerim, Erik Thornquist, and Saron Yemane Haile. A review on the use of immersive technology in space research. Telematics and Informatics Reports, 17:100191, 2025. doi:10.1016/j.teler.2025.100191.

- [13] Hyejin Kwon, Youngok Choi, Xiaoyang Zhao, Hua Min, Wei Wang, Vanja Garaj, and Busayawan Lam. How immersive and interactive technologies affect user experience, 2025. doi:10.18848/1835-2014/CGP/v18i01/135-160.

- [14] Guido Makransky, Stefan Borre-Gude, and Richard E. Mayer. Motivational and cognitive benefits of training in immersive virtual reality based on multiple assessments. Journal of Computer Assisted Learning, July 2019. doi:10.1111/jcal.12375.

- [15] Roderick Murray-Smith, Antti Oulasvirta, Andrew Howes, Jörg Müller, Aleksi Ikkala, Miroslav Bachinski, Arthur Fleig, Florian Fischer, and Markus Klar. What simulation can do for hci research. Interactions, 29(6), 2022. doi:10.1145/3564038.

- [16] Rabia Murtza, Stephen Monroe, and Robert Youmans. Heuristic evaluation for virtual reality systems. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 61:2067–2071, September 2017. doi:10.1177/1541931213602000.

- [17] Vijayakumar Nanjappan, Akseli Uunila, Jukka Vaulanen, Julius Välimaa, and Georgi Georgiev. Effects of immersive virtual reality in enhancing creativity. Proceedings of the Design Society, 3:1585–1594, June 2023. doi:10.1017/pds.2023.159.

- [18] Tommy Nilsson, Flavie Rometsch, Leonie Becker, Florian Dufresne, Paul Demedeiros, Enrico Guerra, Andrea Emanuele Maria Casini, Anna Vock, Florian Gaeremynck, and Aidan Cowley. Using virtual reality to shape humanity’s return to the moon: Key takeaways from a design study. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23), 2023. doi:10.1145/3544548.3580718.

- [19] Tommy Nilsson, Flavie Rometsch, Andrea Emanuele Maria Casini, Enrico Guerra, Leonie Becker, Andreas Treuer, Paul de Medeiros, Hanjo Schnellbaecher, Anna Vock, and Aidan Cowley. Using virtual reality to design and evaluate a lunar lander: The el3 case study. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23), 2022. doi:10.1145/3491101.3519775.

- [20] Olu Olofinboba, Chris Hamblin, Michael C. Dorneich, Bob DeMers, and John A. Wise. The design of controls for nasa’s orion crew exploration vehicle. In Proceedings of the European Association for Aviation Psychology (EAAP) Conference, Valencia, Spain, october 27–31 2008. European Association for Aviation Psychology. Supported by Lockheed Martin under NASA contract RH6-118204. URL: https://www.nasa.gov/mission_pages/constellation/orion/.

- [21] Ben Rolfe, Christian Martyn Jones, and Helen Wallace. Designing dramatic play: Story and game structure. In Designing Dramatic Play: Story and Game Structure, pages 448–452. ACM, September 2010. doi:10.14236/ewic/HCI2010.54.

- [22] Paul M. Salmon, Neville A. Stanton, Guy H. Walker, Daniel P. Jenkins, Laura Rafferty, and Mark S. Young. Measuring situation awareness in complex systems: Comparison of measures study. International Journal of Industrial Ergonomics, 39(3):490–500, 2009. doi:10.1016/j.ergon.2008.10.010.

- [23] Sebastian Stadler, Henriette Cornet, and Fritz Frenkler. Assessing heuristic evaluation in immersive virtual reality—a case study on future guidance systems. Multimodal Technologies and Interaction, 7(2), 2023. doi:10.3390/mti7020019.

- [24] Yilu Sun, Swati Pandita, Jack Madden, Byungdoo Kim, N.G. Holmes, and Andrea Stevenson Won. Exploring interaction, movement and video game experience in an educational vr experience. In Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems (CHI EA ’23), 2023. doi:10.1145/3544549.3585882.

- [25] Karima Toumi, Nathalie Bonnardel, and Fabien Girandola. Technologies for supporting creativity in design: A view of physical and virtual environments with regard to cognitive and social processes. Creativity Theories – Research – Applications, July 2021. doi:10.2478/ctra-2021-0012.