How Does Knowledge Evolve in Open Knowledge Graphs?

Abstract

Openly available, collaboratively edited Knowledge Graphs (KGs) are key platforms for the collective management of evolving knowledge. The present work aims t o provide an analysis of the obstacles related to investigating and processing specifically this central aspect of evolution in KGs. To this end, we discuss (i) the dimensions of evolution in KGs, (ii) the observability of evolution in existing, open, collaboratively constructed Knowledge Graphs over time, and (iii) possible metrics to analyse this evolution. We provide an overview of relevant state-of-the-art research, ranging from metrics developed for Knowledge Graphs specifically to potential methods from related fields such as network science. Additionally, we discuss technical approaches – and their current limitations – related to storing, analysing and processing large and evolving KGs in terms of handling typical KG downstream tasks.

Keywords and phrases:

KG evolution, temporal KG, versioned KG, dynamic KGCategory:

SurveyFunding:

Axel Polleres: supported by the European Union’s Horizon 2020 research and innovation program under grant agreement No 957402 (Teaming.AI).Copyright and License:

2012 ACM Subject Classification:

Information systems Graph-based database models ; Information systems Data streaming ; Information systems Web data description languagesAcknowledgements:

We would like to thank the reviewers for their invaluable comments that helped to improve our manuscript.DOI:

10.4230/TGDK.1.1.11Received:

2023-06-30Accepted:

2023-11-17Published:

2023-12-19Part Of:

TGDK, Volume 1, Issue 1 (Trends in Graph Data and Knowledge)Journal and Publisher:

Transactions on Graph Data and Knowledge, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

Transactions on Graph Data and Knowledge, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

1 Introduction

Knowledge Graphs (KGs) [112] are graph-structured representations intended to capture the semantics about how entities relate to each other, used as a general tool for the symbolic representation and integration of knowledge in a structured manner. The actual semantics or schema of such graphs can be formally described using expressive logic-based languages such as the Web Ontology Language (OWL) [101], as well as in terms of constraint languages such as the Shapes Constraint Language (SHACL) [135] or Shape Expressions (ShEx) [195]. Thanks to the expressivity provided by such formalisations, KGs have become a de-facto standard data model for integrating information across organisations and public institutions. It also facilitates the collaborative construction of structured knowledge on the Web by dispersed communities. In other words, KGs serve as intermediate layers of abstraction between raw data and decision support systems. Raising the level of abstraction has allowed us to ask more sophisticated questions, integrate data from heterogeneous sources, and spark collaborations between groups with different perspectives and views on business problems.

As a result of their function as a basis for knowledge integration, KGs are rarely produced in a single one-shot process. Instead, KGs are often collaboratively built and accessed over time. As such, KGs have become a significant driver for the collaborative management of evolving knowledge, integrating knowledge provided by different actors and multiple stakeholders: use cases range from the collaborative collection of factual base knowledge in general-purpose Open KGs such as Wikidata [242] to capturing specialised collaborative knowledge about engineering processes in manufacturing [110].

However, the sheer scale of – in particular – openly available, collaborative KGs has exacerbated the challenge of managing their evolution, be it in terms of (i) the size and temporal nature of the data, (ii) heterogeneity and evolution of the communities of their contributors, or (iii) the development of information, knowledge, and semantics captured within these graphs over time.

Even though analysis of the content, nature, and quality of KGs has already attracted a vast amount of research (i. e. [192, 104, 202] and references therein), these works focus less on how their structure and contents change over time, indeed how these systems evolve.

With the present article, we aim to shift the focus on precisely this matter. In particular, we try to answer the following main questions:

-

RQ1

Which publicly accessible, open KGs are observable in a manner that would allow a longitudinal analysis of their evolution and how? That is, how could we obtain historical data about their development, or which infrastructures and techniques would we need to monitor their growth and changes in the future?

-

RQ2

Which metrics could be used to compare the evolution and structure over time, and how could existing static metrics be adapted accordingly? Here, we are particularly interested in approaches from other adjacent fields, such as network science, and how those could be adapted and applied to specifically analyse the evolution of knowledge graphs.

-

RQ3

Finally, do we have the right techniques to process evolving KGs, both in terms of scaling monitoring and computing the necessary metrics, but also in terms of enabling longitudinal queries, or other downstream tasks such as reasoning and learning in the context of change – facing the rapid growth and evolution of existing KGs?

To approach these questions, the remainder of this article surveys existing approaches and works and raises open questions in four directions: observing, studying, managing and spreading KG evolution. Before elaborating on these directions, we first discuss the different dimensions of evolution in Section 2, introducing relevant terminology. In Section 3, we discuss to what extent data about the evolution of open KGs (like Wikidata or DBpedia) is available and what evolution trends have been observed so far in prior literature. In Section 4, we discuss different types of metrics to study evolving KGs; starting from state-of-the-art graph and ontology metrics, we also discuss metrics related to quality and consistency, as well as potentially valuable works and metrics from the area from network science. In Section 5, we discuss data management problems for evolving knowledge graphs, i. e. data models that capture temporality as well as storage approaches and schema mappings for versioned and dynamic KGs. In Section 6, we focus on downstream tasks on KGs in the specific context of evolution. More precisely, we discuss how querying, reasoning, and learning approaches can be tailored for evolving KGs. We also address the exploration of KGs, an essential aspect of evolving KGs. We conclude with a summary of the main research challenges we currently see unaddressed (or only partially addressed) in Section 7.

2 Dimensions of Evolution

The temporal evolution of graphs, knowledge graphs (KGs), and collaboratively edited KGs has multiple dimensions that we outline in this section, along with relevant terminology. That is to say, there are multiple coherent perspectives we can use to talk about the “evolution” of KGs, ranging from considering time and evolution as being part of the data itself to considering evolution and change over time on a meta-level. We illustrate these perspectives in Figure 1.

Temporal KGs: Time as data

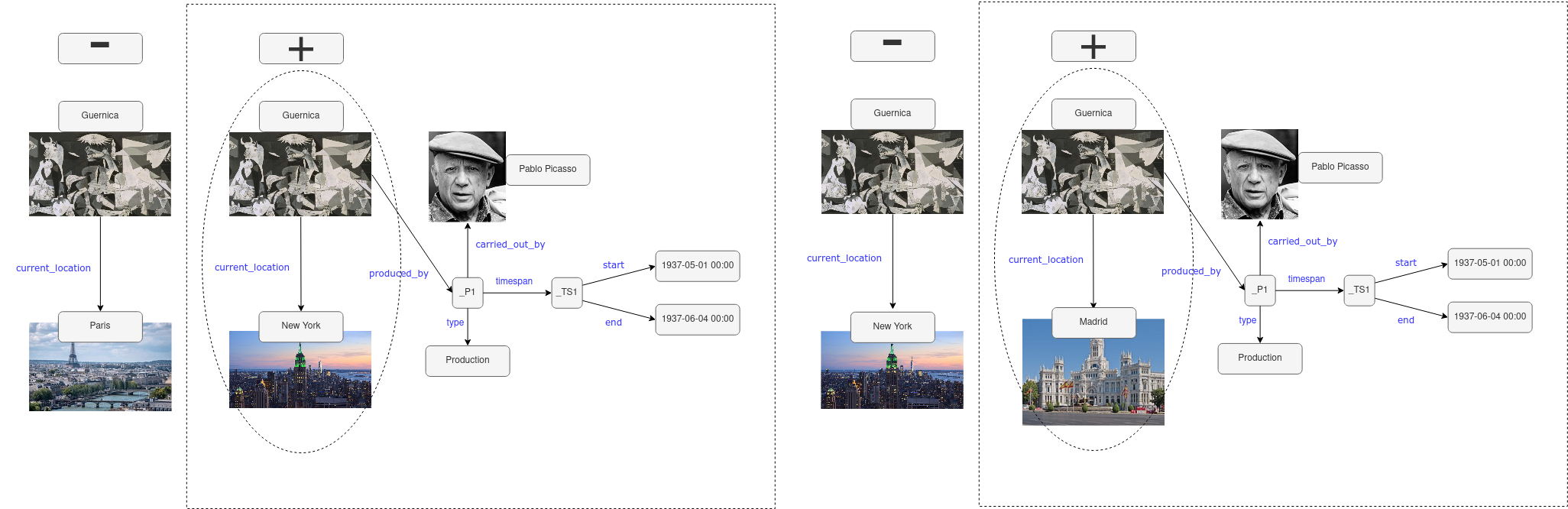

The first perspective considers time, or – more concretely, the temporal validity of information in a KG – as part of the KG itself; we call this the “Temporal KG” perspective. In this context, the evolution depicted by the data pertains to the changes in the “world” it represents, not the evolution of the data itself. Following database terminology, this temporal validity of information in a KG is typically referred to as valid time; see, for instance, [103]. A very simple example of a temporal KG is illustrated in Figure 2, which contains the year of production of Picasso’s “Guernica”, as a slightly simplified subgraph DBpedia [146].111https://www.dbpedia.org/

Time and temporality may be represented with a single temporal literal – as illustrated here a year or a timestamp, or likewise an interval: for instance, the production of “Guernica” itself was not a one-shot process, but its painting took place over a longer period. For instance, the production period of “Guernica” was carried out between 1937-05-01 and 1937-06-04, as illustrated in Figure 3, a simplified graph inspired by the Linked Art project.222https://linked.art/model/

We note here that capturing intervals typically requires extensions of the “flat” directed labelled graph model used to represent simple knowledge graphs, as shown in Figure 3: contextual information about simple statements (such as in this case, the start and end time of a production interval), can be modelled in various ways, either

-

1.

in terms of adding intermediate nodes to a flat graph model, also often referred to as “reification”, or alternatively

-

2.

in terms of bespoke, extended graph models such as so-called property graphs

Let us refer to Section 5.1 for a more in-depth discussion of different data models to capture time and temporality in KGs.

Time-varying KGs: Time as meta-data

The second perspective on evolution is scoped by the time granularity of change in the KG itself; in other words, by how the temporal aspect of the data, i. e. nodes, edges, and structure, of the KG is evolving. We call this the “Time-varying KG” perspective. Again, using database terminology, such changes in data are typically referred to as transaction time [103].

We present an example from the arts. Paintings like “Guernica” and information about their artists and other attributes have been added dynamically to Knowledge Graphs like Wikidata over time. The entry for “Guernica” (Q175036) in the Wikidata [242] KG was created on 28 November 2012,333https://www.wikidata.org/w/index.php?title=Q175036&action=history&dir=prev while its creator “Pablo Picasso” (Q5593) was added on 1 November 2012444https://www.wikidata.org/w/index.php?title=Q5593&action=history&dir=prev. Of course, both of these dates are independent of the birth or production dates of the referred entities themselves. As we will further discuss in Section 3 and also Section 5 below, the granularity and manner of how such changes are stored affect the observability and analysis of a KG’s evolution.

In terms of granularity, we can differentiate between two types of knowledge graphs based on how they are stored:

-

Dynamic KGs - which allow access to all observable atomic changes in the knowledge graph.

-

Versioned KGs - which provide static snapshots of the materialised state of the knowledge graph at specific points in time.

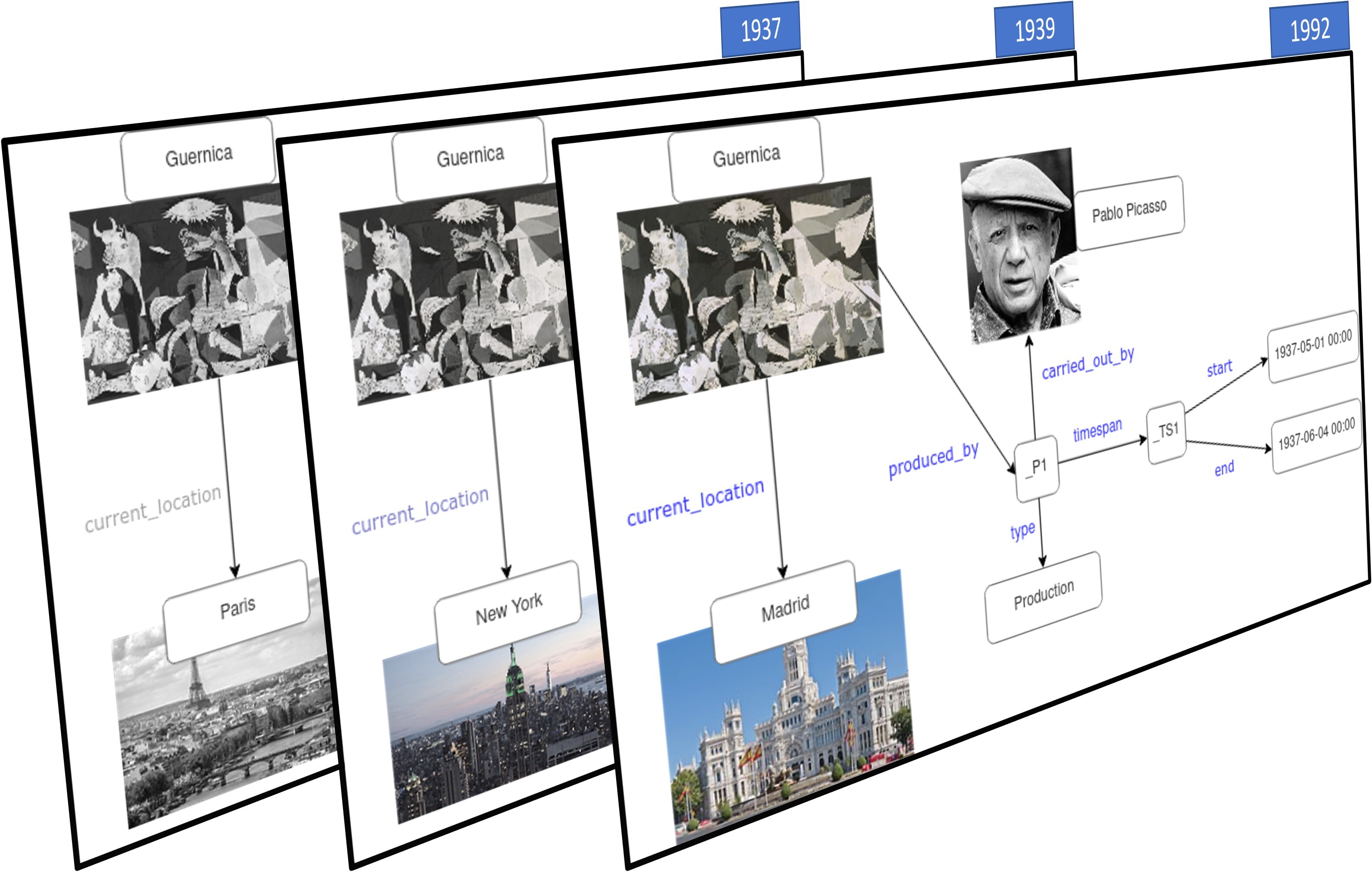

These represent opposite ends of the granularity spectrum. Figures 4 and 5 show two examples of how the changing information regarding the location of “Guernica” over time555The painting was first exhibited in Paris in 1937, and moved to an exhibition in New York in 1939. Since 1992 “Guernica” is displayed in Museo Reina Sofía in Madrid. could be represented in terms of versions or dynamic changes, respectively.

For instance, as discussed above, Wikidata embodies continuous change, accessible through the entities’ edit histories at the level of real-time modifications. At the same time, DBpedia represents both the spectrum’s discrete end, releasing snapshot updates,666https://www.dbpedia.org/resources/snapshot-release/ as well as offering small-scale releases with DBpedia Live777https://www.dbpedia.org/resources/live/ on minute level. Observe that in both cases, the temporal information about neither the materialisation time of a DBpedia snapshot or the edits of single statement claims on Wikidata are available in terms of the (RDF) graph materialisations of these KGs themselves, but only in terms of the publication metadata or edit histories, which is why we may also speak of “time as meta-data”.

We note that this distinction is hardly clear-cut. The difference between dynamic and versioned temporalities is marked by the technical means by which particular KGs evolve. In particular, this boundary is shaped by differences in technical infrastructures supporting these evolutionary processes rather than general characteristics of the KG and the kind of knowledge it captures.

For example, on the one hand, while changes in Wikidata may be recorded down to the level of single statements, Wikibase888https://wikiba.se, Wikidata’s underlying software framework. also supports interfaces for bulk updates. Likewise, each single statement change in Wikidata’s overall edit history may be theoretically materialised in terms of sequential snapshots. On the other hand, DBpedia’s extraction framework constructing a KG from Wikipedia may be analogously applied to any materialised point in time of the fine-granular page edit history of Wikipedia, or even per page [80]. DBpedia’s model has also changed over the past years from irregular, approximately annual, snapshots published in its beginnings, to enable more dynamic publishing (monthly) cycles [111] through the DBpedia Databus.999https://www.dbpedia.org/resources/databus/

Lastly, we note that analogously to the examples in Figures 2 and 3 both timestamps and time intervals can be used to represent not only validity but also transaction and versions, i. e. snapshots of the entire graph in the context of KGs. However, depending on which dimension is considered, it will have an impact on how data should be managed, whether evolution is observable, and how the information about evolution is spread into downstream tasks, see the further discussions in Sections 5 and 6 below.

Both of the aforementioned perspectives can serve the purpose of monitoring the evolution of KGs along different yet interrelated (sub-)dimensions. We outline these dimensions in the following subsections. First, according to Section 2.1, the structural evolution of KGs can be observed through the temporal information captured in them; here, KGs present a distinction between changes on the data and schema levels. Second, one can analyse the dynamics or velocity of evolution in KG over time, see Section 2.1. Finally, when considering the collaborative processes involved in KG editing and evolution, one can analyse the structure and dynamics of these collaborations, see Section 2.2. After exploring these dimensions in detail, we then discuss concrete metrics in Section 4.

2.1 Structural Evolution, Dynamics, Timeliness, and Monotonicity

In the context of evolving KGs (hereafter EKGs), we may consider different forms of change related to the graph structure, dynamics of change or its nature (monotonic or with deletions), and alternative notions of time. The following will briefly elaborate on our running example in Figure 6.

Structural Evolution

The first dimension to measure on a graph is essentially related to its structure: descriptive statistics about nodes and edge distributions, centrality, connectedness, density, and modularity. In KGs, similar static metrics can also be observed concerning the schema, typically the node and edge types, and – if additionally axiomatic knowledge on the schema-level is considered – the complexity of this schema.

For all of these structural properties (both on the instance-level and schema-level), we may also be interested in their development over time, i. e. in quantifying their changes. The existing concrete metrics for this dimension will be discussed in more detail in Section 4 below.

Notably, longitudinal investigations of structural properties are not restricted to the time-varying KG perspective: depending on whether temporal information is present in the KG itself, one may also be interested in analysing and comparing structural evolution in terms of “temporal slices”.

Dynamics

Dynamics for KGs refers to characteristics such as growth and change frequencies over time and per time interval). These may be observed overall but also in terms of subgraphs or topic-wise components of a KG. For instance, one may consider comparing the change dynamics of entities related to different topic areas, such as “arts” and “sports” within a particular KGs like Wikidata. Again, these dynamics may be observed concerning the KG schema. Referring to a concrete elaboration of our running example in Figure 6, we can derive that properties related to the production of paintings evolve more slowly than properties relating to exhibitions. Notably, dynamics and temporal granularity may again be compared and analysed both from secular and time-varying perspectives.

Timeliness

Timeliness, from a data quality perspective, refers to the “freshness” of the data concerning the occurrence of change, the current time, or the time of processing. Timeliness directly links to query answering (or processing in general), as it establishes the value of the retrieved answer considering some requirements. More specifically, the timeliness of data in a KG can be interpreted as

-

“out-of-date” or “stale” information: i. e. in terms of recency of temporal information concerning the current time;

-

“out-of-sync” or “delayed” information, i. e. in terms of the difference between valid times and transaction times of items in the KG, i. e. the interplay between these temporal and time-varying perspectives.

Regarding the former case, considering Figure 6, the question “Where is Guernica currently?” obtains a different answer at different times. While historical events such as the creation of “Guernica” lie far in the past, even far before Wikidata was founded, the location of paintings is an important dimension to analyse over time as it changes with exhibitions or purchases. If neglecting such variations is an issue for the users, e. g. when an accurate current location is needed to recommend a museum visit, then we witness a data quality problem related to timeliness.

A “drastic” example of the latter, i. e. extended out-of-sync information from the art domain is documented in Rembrandt’s “Portrait of a Young Woman” (Q85523581 in Wikidata) from 1632, which was added to Wikidata only in February 2020, after it was recently confirmed to be an authentic Rembrandt.101010https://news.artnet.com/art-world/pennsylvania-museum-rembrandt-discovery-1773954 Users who have asked for the number of Rembrandt paintings before 2020 would have received a stale answer.

Monotonicity

Monotonicity refers to the nature of changes, i. e. if they are positive changes only augmenting the content of the graphs, or if they take the form of an update which may include deletions of past information.

Continuing our examples in the domain of painting, we consider rectifying a painting’s attribution to its artist, which happens repeatedly in arts. A documented case is the painting “Girl with a Flute” (Q3739200) in Wikidata, originally attributed to the Dutch painter Vermeer but later confirmed to be the work of another painter.111111https://www.wikidata.org/w/index.php?title=Q3739200&oldid=803621750 Similar, non-monotonic changes may arise when temporal information itself changes in the KG: imagine, following our running example, that subsequent research may reveal Guernica was actually created in 1936, not 1937.

From this combination of dynamics (i. e. the study of changes), timeliness, and monotonicity (i. e. the frequency of deletions and, therefore, errors and rectifications of incorrect information in a KG), it is also possible to estimate the frequency of future transactions. Together they form an essential dimension of evolving KGs, both in the context of the ability to process evolution technically but in terms of its impact on the validity of updated results of downstream tasks Section 6: as KGs are meant to support sophisticated decision-making tasks, it is often paramount to guarantee up-to-date information and provide answers before they become obsolete.

2.2 Evolution in Collaboration

Knowledge evolution is driven by different types of collaborations [190, 5]. As described by Piscopo et al. [190], collaborative KGs rely on experts for specific types of activities, defining rules and processes for how and by whom some activities should be carried out, or provide tools to facilitate such collaboration.

In the context of KG evolution, we may thus want to analyse the behaviours of single users or user groups over time. To classify the collaboration types, we can distinguish the following roles of users/agents:

-

Anonymous users: These are Users who do not have a registered account or a consistent identity within a project (e. g. anonymous Wikibase users)

-

Registered users: similarly, these are Users who have a registered account or a consistent identity within a project (e. g. registered Wikibase users), ideally also combined with additional information or characteristics which allow to classify such users (e. g. country of origin or other demographic attributes)

-

Authoritative users: These are Users characterised by in-depth domain knowledge or knowledge engineering expertise. This group represents vetted knowledge engineers, domain experts, and moderators.

-

Bots: These are automated agents performing recurring tasks (e. g. Wikibase bot accounts).

Longitudinal analyses of the contributions of such users may include changes in their behaviours (e. g. in terms of edit frequencies), interests (e. g. in terms of editing particular parts or topics of KGs), or role changes. Additionally, based on the aforementioned roles, various collaboration types can be potentially recognised when analysing the evolution of edits in collaboratively edited KGs [191]:

-

Expert-driven collaboration: this type of collaboration involves Authoritative users developing schemas or editing data on the instance-level (creating mapping rules, as in the case of DBpedia, would be an example of such schema-level expert collaboration, whereas the instance data, origins from Wikipedia, thus following another collaboration model).

-

Crowd-sourced collaboration: this type of collaboration involves many Users not considered Authoritative users performing basic editing tasks which neither requires in-depth domain or knowledge engineering expertise nor coordination between the editors (for instance, any users being allowed to edit Wikipedia could be understood as such a crowd-sourced collaboration model, if a more moderated process did not govern it, see below).

-

Resource-dependent collaboration: This type of collaboration is based on integrating information from external resources, potentially governed by different heterogeneous collaboration models (indeed, DBpedia’s extraction of instance data from Wikipedia may be understood as such a resource-dependent “collaboration”).

-

Community-driven collaboration: this type of collaboration relies on self-moderating communities of Users characterised by deep involvement in the project, collective discussion, and decision making (e. g. Item/Property discussions characteristic for Wikidata, but also characteristic for the curation process in Wikipedia).

Table 1 describes the common collaboration models of some existing, collaboratively maintained open general-purpose KGs, according to the literature. We note that the list of KGs shown here is not meant to be exhaustive and that such metrics could be further extended and refined in more fine-grained longitudinal analyses. As described in Section 4.4, for example, topologically identified groups of collaborators could be used to predict outcomes. A concrete methodology to analyse the composition of the collaborators within the KG and assess their effects on quality has been suggested in [189]. Further investigation can also include the different evolution and collaboration approaches and how these influence the possibility of analysing evolution. For example: does the relatively small DBpedia ontology and the limited frequency of updates via mapping changes make the analysis of the evolution of its ontology easier than the direct ontology editing model of Wikidata? Does the extraction and mapping mechanism and changes to the rules that drive them make ontology evolution in turn less flexible for the community in DBpedia? Likewise, does the free-for-all collaboration approach in Wikidata render a structured analysis of ontology evolution impossible, or what are the methods to handle this challenge? For instance (i) can one define “checkpoints” of limited changes that can be used as anchor points to produce useful analyses, or (ii) does it make sense to investigate the evolution of vocabularies specifically scoped to editors’ sub-communities? Another avenue for investigation is a more effective utilisation of machine learning in supporting the collaborative evolution of KGs and their schemas. Specifically, it would be interesting to learn how this evolution is affected and affects the interaction of automated extraction (DBpedia), extraction by statistical learning (YAGO), or in leveraging or improving bots (Wikidata): that is, can ontology extraction rules or curation pipelines be improved by observing and learning from the collaboration and evolution processes over time?

2.3 Semantic Drift

Semantic drift is a crucial concept of evolution in language. It refers to the change in meaning of a concept over time [246, 218] independently from the downstream tasks like querying or reasoning. Before detecting semantic drift, one needs to identify the two concepts to compare between versions. Although early work on identifying semantic drift focused on the definition of the identity of a concept [246], when a concept changes meaning, it might also change its identifying information. Therefore, it is not always possible to rely only on identity-based approaches to understand semantic drift. In such cases, morphing chain-based strategies are more suitable [90]. The morphing chain approach presents the user with a comparison of a concept to all the concepts between the versions of an ontology and lets the user choose or chooses heuristically which is the most likely concept that a previous one evolved into.

For KGs, Meroño-Peñuela et al. [158] studied semantic drift in DBpedia concepts, while Stavropoulous et al. [219] studied semantic drift in the context of the Dutch Historical Consensus and the BBC Sports Ontology. SemaDrift [218] takes a morphing-chain approach, where three aspects are used to identify concepts that have potentially evolved from another: label, intention, and extension. The advantage of this approach is that every concept in a new version will have evolved from some previous concept. Unfortunately, the identity of concepts, such as URI, is not used in SemaDrift. OntoDrift [44] uses a hybrid approach and can be considered an extension of SemaDrift [218]. Additionally to using the label, intention, and extension aspects of concepts, it also considers the subclass relations. The drawback of this approach is that rules need to be defined for every type of predicate, as demonstrated by OntoDrift.

The notion of logical difference [136] between KGs can also be used to evaluate the semantic drift of the KG concepts. The logical difference focuses on the entailments or facts that follow from one KG but not from the other, and vice versa. Jiménez-Ruiz et al. [126] proposed an approach to evaluate the logical difference among different versions of the same ontology. Considering the new logical entailments/axioms involving a given entity, one could define a metric. The entity’s role within the entailment (i. e. the entity is being defined vs. the entity referenced) may also impact the metric.

Potential approaches in the future could make additional use of embeddings, representing concepts in vector space and assessing their neighbourhoods. Pernisch et al. [181] showed that comparing two embeddings to each other is complex, and the similarity between concepts is, e. g. around 0.5 for FB15k-237 with TransE; Verkijk et al. [240] further discuss the difficulties with this approach, especially comparing it to concept shift in natural language. Finally, the lack of domain-specific benchmarks for semantic drift makes comparing methods difficult. For instance, OntoDrift and SemaDrift return very different numbers when detecting drift, but we cannot tell which ones are closer to the truth. Also, the number of studies that look at semantic drift is limited. Not many KGs have been studied, and even though the phenomenon is known, it has not been investigated extensively so far [158, 219].

3 Observe and Analyse the Evolution

This section discusses how far evolution can be observed and analysed along the dimensions defined above in various existing KGs. KGs come in very different flavours and structures, and in particular, we may also assume that their evolution shows very diverse characteristics.

Below, we first characterise different kinds of graphs. In Section 3.1, we discuss tools to observe the historical longitudinal data on the evolution of the most important existing KGs. Section 3.2 provides a respective overview of available studies to analyse and track the dynamics of some of these KGs. We consider both monitoring and analysing the evolution of the instance-level of graph data as well as the schema-level.

Without claiming completeness, we distinguish the following kinds of KGs:

-

General-purpose Open Knowledge Graphs: publicly available open-domain (or, resp., cross-domain) KGs such as DPpedia [146] and Wikidata [242] as two of the most prominent KGs have been developed since more than a decade by now, covering a wide range of comprehensive knowledge. Yet, they differ fundamentally in the process in which knowledge is maintained and developed within the KG: whereas DBpedia relies on extractors to collect data from Wikipedia’s infoboxes regularly, Wikidata comprises a completely collaboratively evolving schema and factbases that, by themselves, feed back into Wikipedia. In particular, we observed significant growth and dynamics in both the instance-level and schema-level of Wikidata over the past years. Collections of structured RDF data and microdata (e. g. schema.org [102] metadata) from Web pages through openly available Web crawls, such as made available regularly by the Webdatacommons121212http://webdatacommons.org/ project [159], may indeed also be perceived as evolving, general purpose, real-world Knowledge Graphs.

-

Domain-specific Special-purpose Open Knowledge Graphs: Many open knowledge graphs available to the public are often overlooked. These graphs are collaboratively developed and serve narrow, special-purpose topics or use cases. An example is Semantic MediaWiki (SMW)[138], which has been around for almost 20 years and is still actively developed and used in various community projects. SMW can be considered a predecessor of Wikibase, the underlying platform for Wikidata. Wikibase is increasingly being used in separate, special-purpose community projects. Other examples of domain-specific knowledge graphs include the UMLS Metathesaurus [34], as well as the ontologies in the OBO Foundry [121], and BioPortal [248]. These graphs focus on the schema and are assumed to have significantly different evolution characteristics [182].

-

Task-specific Knowledge Graphs: One category of Knowledge Graphs that some authors identify is task-specific Knowledge Graphs [122]. These graphs, often used in benchmarks, are typically subsets of larger KGs created to support a specific application or may result from a downstream application (e. g. DBP15K as a subset of DBpedia for cross-lingual entity alignment). However, since these KGs are usually artificially limited and static (i. e. subset of specific snapshots), compared to real-world evolving KGs, we will not discuss them separately in this paper. We note, however, that principled approaches to create evolving subsets of KGs for specific benchmarking tasks are sorely needed to better understand these tasks “in evolution”.

-

Large (and Small) Enterprise Knowledge Graphs Lastly, we see many companies reportedly using and adopting Knowledge Graph technologies in their operations and businesses over the past years, including large firms like Google, Amazon, Facebook, and Apple, as well as many other smaller examples. What these KGs typically have in common is that due to their commercial value, they are non-observable to the community and we may only speculate about their sizes and structures using white papers [170, 209, 117], high-level announcements, and to some extent through industry track reports in conference series such as ISWC (e. g. [97]), SEMANTiCS (e. g. [204]), or recently the Knowledge Graph conference series. Given these limitations, we exclude enterprise KGs from the scope of the present paper.

Except for the latter two cases then, it appears that the research community has built up a large number of publicly accessible and observable KGs that vary in characteristics, and purpose, with unique communities of maintainers that seek to capture a rich variety of knowledge artefacts in evolving graph-like structures. In the remainder of this section, we specifically focus on Open General-purpose KGs rather than attempt to cover all types of KGs.

3.1 Availability of Graph Data

In the following, we start by assessing how and where historical longitudinal data about existing open KGs and their evolution can be found. We specifically focus on KGs that are still available and, therefore, do not include KGs like Freebase [36] and OpenCyc [156]. These two KGs are no longer maintained but are considered pioneering work and predecessors of the KGs investigated in this subsection. Therefore, it is generally possible for KGs to go dark, e. g. through neglect or malign actions.

Here, we give an overview of the datasets regarding the availability of their versions, their schema, and their changelogs in Table 2. The table captures if the versions, schema, or changelogs are queryable and collaborative. Queryable in this context captures if the KG answers queries in any way or form specifically over (historical) versions, schema as well as change logs, for which we then further specify the protocol (HTTP, SPARQL, etc.); for possible temporal queries over RDF archives that should be enabled over evolving KGs, we refer to, for instance, the categorisation in [84, Section 3.2]. Collaborativeness in Table 2 refers to the possibility of reconstructing user information on the different levels. For example, on the changelog level, a “yes” refers to having user information for individual changes. Wikidata and DBpedia allow anonymous edits, which potentially limits a reconstruction of the editing history, indicated with “Partial” in the table.

Further information on formats (RDF, JSON, etc.) is given. Temporality refers to the ability of the KG to capture temporal information for example through reification or other means. With “Event TS”, we indicate that the KG allows for events to be timestamped, whereas with “Graph TS”, we refer to the whole graph having timestamps.

Lastly, timeliness refers to how often the part of the KG is updated.

Wikidata is an open KG read and edited by humans and machines and is hosted by the Wikimedia Foundation. Intuitively, the considerable level of automation and collaboration on Wikidata, and its scale131313with over 15B triples at the time of writing: https://w.wiki/7iez. present significant challenges in Wikidata evolution maintenance.

As for direct queryability, Wikidata’s public SPARQL endpoint141414query.wikidata.org provides query access to the current, regularly synced snapshot; it is undisputed that due to its scale, querying Wikidata in the light of its rapid growth – even on static snapshots – is currently reaching its limits in terms of regular SPARQL engines, as well documented for instance in [13]. Yet, there are various ways to access and potentially – given the respective infrastructure – query the historic versions and change data about Wikidata: Wikidata Entities dumps are available in JSON in a single JSON array, or RDF (using Turtle and N-triples) with Full RDF dumps are available for download151515https://dumps.wikimedia.org/wikidatawiki/entities/ every 2-3 days, and historically for approximately a month. Schema.org metadata is used to describe the dump that contains additional helpful metadata such as the entity revision counter (schema:version), last modification time (schema:dateModified), and the link to the entity node with (schema:about).

As a subset, also truthy dumps are provided, which are limited to direct, truthy statements – since Wikidata offers (validTime) temporal annotations for statements, as well as provenance annotated statements, this “truthy” subset contains only currently valid or preferred ranked statements, where however additional metadata such as qualifiers, ranks, and references are consequently left out. The truthy dump could, therefore, be perceived as a “current truth” snapshot of Wikidata. In contrast, the entire dump also contains outdated (valid time) or disputed (in terms of being lower-ranked alternative statements by particular contributors).

RDF HDT161616https://www.rdfhdt.org/datasets/ hosts roughly annual HDT [83] snapshots of Wikidata’s complete dumps. In addition to these hosted RDF dumps, obtaining the statement-level change log from Wikidata’s aggregated entity and editing history, which are also available via respective APIs, would be possible.

Finally, Wikimedia offers changes (of both Wikipedia and Wikidata) through the Wikimedia Event Streams171717https://stream.wikimedia.org/ Web service that exposes continuous streams of JSON event data. It uses chunked transfer encoding following the Server-Sent Events protocol (SSE) and emits changes events, including Wikidata entity creations, updates, page moves, etc. The usage of edit history and event stream data, apart from RDF dumps, also has the advantage of making (where available) user/contributor information visible, which is helpful for collaboration analyses. Pelisser and Suchanek [225] have presented a prototype to provide this additional information in RDF via a SPARQL interface.

Wikidata Schema/Ontology. Wikidata does not follow a pre-defined formal ontology, meaning it does not formally differentiate between classes and instances. Instead, the terminology is derived from the relationships between the items in the graph and is collectively created by the editors. In other words, Wikidata (deliberately) does not make a formal commitment to the logical meaning of its properties and classes, which could be, for instance, roughly defined as the objects of the P31 (instance of) property.

As a consequence, Wikidata’s schema is evolving entirely in parallel with its data – and analogous considerations for the availability of data about its historic evolution apply as mentioned above. This has been reported to pose significant data quality challenges [190]; moreover, as a primary consequence of such an informal, collaborative process, Wikidata’s ontology may change quickly. In practice, this does not impact the evolution of the graph itself, but it poses an obstacle to downstream tasks and analyses. We note that prior attempts to map the user-defined terminological vocabulary of Wikidata to RDFS and OWL, such as [105], could be used to partially map Wikidata to more standard ontology languages and conduct (approximate) analyses on a logical level. In contrast, we should note that theoretically, OWL/RDFS “mappable” properties could evolve independently in Wikidata.

DBpedia is an openly available KG encoded in RDF, which evolves alongside Wikipedia. It has four releases per year (approximately the 15th of January, April, June, and September, with a five-day tolerance), named using the same date convention as the Wikipedia Dumps that served as the basis for the release.181818https://www.dbpedia.org/resources/snapshot-release/

DBpedia Latest Core Releases191919https://www.dbpedia.org/resources/latest-core/ are published separately as small subsets of the total DBpedia release. Its extraction is fully automated using MARVIN [111]

and then catalogued. The standard release is available on the 15th of each month, five days after Wikimedia releases Wikipedia dumps.

DBpedia Databus202020https://databus.dbpedia.org/ is a platform designed for data developers and consumers to catalogue and version data, not only restricted to DBpedia alone. It enables the smooth release of new data versions and promotes a shift towards more frequent and regular releases. DBpedia takes advantage of this functionality to promptly publish the most up-to-date DBpedia datasets, generating approximately 5,500 triples per second and 21 billion triples per release every month.

DBpedia Live212121https://www.dbpedia.org/resources/live/ is a changelog stream accessible in a pull manner. DBpedia Live monitors edits on Wikipedia and extracts the information of an article after it was changed. A synchronisation API is available to transfer updates to a dedicated online SPARQL endpoint, whereas temporal evolution as such is not directly queryable from that endpoint.

DBpedia Ontology (DBO), the core schema of DBpedia, is currently crowd-sourced by its community: DBpedia mappings are contributed and made automatically available daily, where DBO is generated every time changes in the mappings Wiki have been made. Notably, DBpedia Latest Core and DBpedia Live are based on the latest DBO snapshot available at the point of generation, i. e. one should consider the evolutions of data (Wikipedia edits), schema (mappings), and also the various releases of the actual DBpedia KG, separately.

Finally, we note that a fine-grained historical development, in terms of reproducing any DBpedia page at any point in time in the past, and thereby reconstructing a fine-grained RDF “history” would be theoretically possible by combining DBpedia’s mappings with the Wikipedia edit history API. A prototypical implementation of this approach, the “DBpedia Wayback Machine” – inspired by the Web Archive’s Wayback machine – has been presented by Fernández et al. [80].

YAGO is a large multilingual KG with general knowledge about people, cities, countries, movies, and organisations [220]. At the time of writing, there are six versions of YAGO. In its latest version, 4.5, YAGO combines Wikidata and Schema.org. Older versions integrate different sources such as Wikipedia, WordNet, and GeoNames but are independent of the most recent ones.

YAGO places a strong emphasis on data extraction quality, achieving a precision rate of 95% through manual evaluation [198].

One of YAGO’s unique features is its inclusion of spatial and temporal information for many facts, enabling users to query the data across different locations and time periods. Since version 4, YAGO combines

Schema.org’s structured typing and constraints with Wikidata’s rich instance data. It contains 2 billion type-consistent triples for 64 million entities, providing a consistent ontology for semantic reasoning with OWL 2 description logics. Temporal information in YAGO 4 is sourced from Wikidata qualifiers, which annotate facts with validity periods and other metadata. YAGO 4 adopts the RDF* model for representing temporal scopes, enabling precise assertions about facts within specific timeframes. This approach ensures accurate temporal modelling without implying current states [180]. YAGO can be accessed in different RDF formats, but little information is provided on its evolution or the changes in its schema.

The LOD Cloud,222222https://lod-cloud.net/ is, although regularly re-published and maintained since 2007, a collection/catalogue of (interlinked) Knowledge Graphs, rather than a KG on its own.

Due to its decentralised nature, anyone can submit a dataset, and the evolution of the respective constituent KGs is not observable from this source directly.

While many of its catalogues KGs are accessible via dumps or even SPARQL endpoints, at the same time, many of its datasets have disappeared over time and are no longer (or irregularly available).

As for queryability, the LOD-a-LOT dataset,232323http://lod-a-lot.lod.labs.vu.nl/ which has been created as an attempt to clean and crawl all accessible datasets of the LOD cloud and make it available in HDT [83] compressed form [82] – to the best of our knowledge this remains to date a static, once-off effort. While this dataset has also been re-used in other works, for instance, to analyse cross-linkage and ontology-reuse within the LOD Cloud [104], such investigations are lacking a longitudinal analysis of development over time. Likewise, little is known about the evolution of its schema expressivity: a once-off study from 2012 on the Billion Triple Challenge sample from different LOD Cloud datasets has found for instance that hardly any OWL2 constructs had been used at the time [95], and most of the ontologies in Linked Data had used only a moderately expressive fragment of OWL, which had been called OWL LD in this study. A subsequent or even continuous assessment over time with respect to changes or uptake of OWL constructs in LOD over time is to the best of our knowledge still missing. We note that, while the evolution of the LOD Cloud schema itself was partially studied, e. g. the changes and interlinkage of the RDF vocabularies [1, 2], this study did not include expressivity as such.

Unfortunately, such longitudinal analyses over the LOD cloud’s evolution as a whole are hardly reproducible or observable a posteriori, since, by its nature, availability of versions, separate schemata and change logs, as well as information about temporality and timeliness is highly heterogeneous across the LOD Cloud datasets. Only summary statistics about the individual states of available datasets at the time of updates are available; i. e. the LOD Cloud service as such does not capture the LOD’s historical development itself and older versions of the data itself are typically not provided. External initiatives have attempted to address this problem:

-

the Billion Triples Challenge (BTC)242424https://www.aifb.kit.edu/web/BTC initiative that, starting from a certain set of seeds, collected billions of triples on the LOD using the popular LDspider [118] framework. The first BTC snapshot of the LOD Cloud from 2009 contained about 1B triples. The crawls have been repeated in irregular year-based intervals. The largest version is from 2014, with about 4B triples.

-

The Dynamic Linked Data Observatory (DyLDO) [140]252525http://km.aifb.kit.edu/projects/dyldo/, initiated in 2012, partially overcomes this limitation by providing weekly snapshots of about URIs using the same crawler as the BTC dataset, resembling about to million triples per week. Key characteristics of the dataset are that the weekly crawls are stored as so-called snapshots using the N-Quad format [45]. This means that the full graph data collected per week is available in a single data dump. The variance of the collected data reflects the changes in the LOD Cloud. The main drawback of this approach in evolution analysis is that the seed URLs have not changed since the start of the data collection; this initiative is apparently the longest-running collection of a subset of the LOD Cloud.

While well-known, publicly available Knowledge Graphs (KGs) such as DBpedia and Wikidata play a significant role in the realm of structured knowledge, there are other, perhaps less widely recognised, but equally substantial KGs that deal with highly dynamic data. Two notable examples are the GDELT Global Knowledge Graph262626https://blog.gdeltproject.org/gdelt-global-knowledge-graph/ and Diffbot.

The GDELT project has been providing an integrated event stream for media news events since 2013, and it has evolved into a comprehensive event KG. It separates events and associated entities such as individuals, organisations, locations, emotions, themes, and event counts into a continuously updating KG. The GDELT 1.0 Global Knowledge Graph, initiated on April 1, 2013,

consisted of two data streams – one encoding the complete KG and the other focusing on counts of predefined categories (e. g. protester numbers, casualties). GDELT 2.0’s Global Knowledge Graph (GKG)272727https://www.gdeltproject.org/data.html enhances this with additional features, incorporates 65 translated languages, and updates every 15 minutes.

Notably, mappings of GDELT into RDF stream were proposed, yet it is limited to only the event graphs and the GKG [235, 236].

As for queryability, GDELT can be accessed via Google’s BigQuery282828 https://console.cloud.google.com/marketplace/product/the-gdelt-project/gdelt-2-events in its current state [235], updated every 15 minutes in real-time with temporal information available at the event level at different granularities, with a fixed schema.

Being updated in an automated manner from news sources, this stream KG is not in the same sense collaboratively evolving as Wikidata or DBpedia, in the sense of individual users contributing changes by their edits, but rather from curated news sources. While, to some extent, these sources could also be interpreted as “collaborative” agents contributing to the KG on the one hand, on the other hand, the act of changes has not collaborative nature in the sense that one of these actors could overwrite or undo others’ additions.

Similar to GDELT, Diffbot offers a commercially available Knowledge Graph292929https://www.diffbot.com/products/knowledge-graph/ that combines dynamic event data with information about products, events, and organisations. This Knowledge Graph is only available as a commercial service, wherefore we do not discuss it here in more detail.

3.2 Monitoring Trends

The LOD cloud can be seen as a network of open interconnected KGs, the most prominent of which are Wikidata, DBpedia, DBLP and YAGO. As such, a key part of its evolution has been the open community’s continuous maintenance of these KGs. Indeed, their growth has been central to the expansion of the LOD cloud from B triples and 90 RDF datasets [20], in 2009, to B triples and more than 1,200 datasets [177], by 2020.

With the growth of the LOD cloud comes the desire to analyse its temporal changes and track trends and evolution. Below, we first discuss approaches to analyse at the instance-level the changes in the LOD cloud. Subsequently, we take the perspective of the schema-level and consider methods and works analysing the changes of the LOD cloud in terms of the vocabulary.

3.2.1 Instance-level Monitoring

Several works have sought to capture and understand the nature of KG evolution. One such seminal initiative is DyLDO (see Section 3.1), which has been monitoring Linked Data on the Web since 2012, by collecting continuous LOD snapshots and examining them in terms of their document-level and RDF-level dynamics. The original paper [139] is based on the analysis of 86,696 Linked Data documents for 29 weeks and reveals that of the documents available during that time were, in fact, unchanged. In the remaining, the changes occurred mainly very infrequently, , or very frequently, , with very few documents reporting changes in between. The same polarising trend is recorded for very static domains, , change very infrequently, , or very frequently, . The study also reveals that data changes occurred most frequently at the level of object literals, while schema changes (involving predicates and rdf:type values) were very infrequent, often related to time stamps, and very rarely involved the creation of fresh links.

Analyses of the DyLDO dataset include the work of Nishioka and Scherp [166] who applied time-series clustering over the temporal changes of the DyLDO snapshots and determined the most likely periodicities of the changes using an algorithm from Elfeky et al. [75]. This resulted in the finding of patterns in the evolution of the graph data. Although 78% of the first three considered years of DyLDO snapshots do not change at all, the remaining nodes could be organised into seven clusters of various sizes and periodicity. The latter ranges from periodicity prediction every week to once every half a year or year. Information-theoretic analyses have also been applied to analyse pairwise changes in graph snapshots of the DyLDO dataset [167]. Time-series clustering allowed us to organise the evolution into segments of similar behaviour. The study reveals that nodes of the same type show a similar evolution, even if these nodes are defined in different pay-level domains, i. e., different organisations. Finally, Gottron and Gottron analysed the same dataset but applied perplexity to explain the evolution of graph data [98].

At the level of the individual LOD cloud KGs, Wikidata is an especially interesting example of an evolving KG, having 90M entities and 1.4B revisions by more than 20K users.303030According to https://www.wikidata.org/wiki/Wikidata:Statistics. The recent Wikidated 1.0 dataset [208] records the fine-grained organic evolution of Wikidata from its inception in 2012 until June 2021. The statistical characteristics of Wikidated 1.0 reveal a linear growth in the number of entities, which has been slightly accentuated after the Freebase integration in 2015. Also, almost all entities have less than 100 revisions, with half having less than 10. In terms of revision speed, the analysis highlights that most entities are edited frequently. Specifically, 60% of the revisions of a given entity occurred less than a month after a previous revision of the same entity. Inspecting the types of revisions, the paper indicates that most revisions consist of atomic changes, with approximately 90% containing less than 10 triple additions; moreover, 80% of revisions do not feature triple deletions. Another interesting trend indicates that half of the triples are added less than a day after the creation of their entity, while deletions take much longer, with over half involving triples that are deleted more than 6 months after they have been added. Although the vast majority of Wikidata triples are never deleted, 10% are deleted only once and less than 1% are deleted repeatedly after being added again. The CorHist dataset [224] is also built from Wikidata’s edit histories, although with a focus on constraint violations and their corrections. The study shows that users are more likely to accept corrections for familiar constraints and certain types of constraints favour over-represented entities, highlighting the impact of biases. The evolution of Wikidata has also been studied in terms of editor engagement [207] and impact [191], as well as the quality of provenance information [188]. The work in [169] analyses the changes in Wikidata KG from a topological perspective. As such, it establishes that the evolution of the number of nodes and edges resembles a power law [147], similar to those commonly observed in social network graphs; based on this, it proposes classifiers that verify whether changes are correct.

Levels of Granularity. Alloatti et al. [10] propose to analyse KG evolution trends by capturing their changes across different snapshots at three levels of granularity: atomic focuses on operations at the resource level, local targets the evolution of a resource within its community, and global detects communities at the level of the entire graph. At the level of atomic evolutions, given a set of atomic updates performed between two snapshots, the authors distinguish between statistical changes, quantifiable in terms of the mean and variance with respect to a normal distribution, and so-called noteworthy ones, which capture snapshot features that diverge from the expected KG evolution with respect to a given threshold that is dataset-specific. An example of the former type would be quantifying the number of citations of a paper, while an exceptionally high number of new citations would illustrate the latter. Local evolution would also account for community-level features, such as graph density. As such, a publication may be noteworthy only at the level of its community, and communities themselves may be identified as noteworthy based on specific features, such as topological ones. At the global level, community detection methods can provide insights into the general behaviour of the different entities in the KG. When considering KGs as multi-community networks, various detection algorithms can be applied using custom network metrics, as reviewed in [193, 87]. When it comes to investigating KG evolution at a global level, studies have applied metrics transferred from different disciplines, such as databases [70], information theory [167, 98], web data crawling [68] and machine learning [168, 169].

Future Directions

Even with the large number of analyses already done in the past, there are many avenues to investigate further when it comes to monitoring, but especially analysing evolving KGs at instance-level. One such direction involves exploring the commonality of data sources across different open KGs. For example, knowledge graphs like YAGO3 and Wikidata draw extensively from various language editions of Wikipedia. Investigating the extent of shared data sources and how this commonality has evolved can provide valuable insights into the collaborative dynamics of KG development. By understanding the overlaps and changes in data sources, researchers can gain a more comprehensive understanding of how this influences evolution; for example, an investigation of link evolution and cross-references between KGs over time could deliver new insights here.

Another compelling area for analysis pertains to the role of programmatic intervention in the development of knowledge bases. Many knowledge graphs, including YAGO and DBpedia, rely on automated processes for data extraction and transformation, including, in the case of YAGO, statistical learning. Likewise, Wikidata’s data generation, while predominantly carried out by its users, also relies partially on programs that extract information from external sources through bots. Delving into the balance between manual curation and automated data extraction and its impact on KG growth and quality can offer valuable insights into the mechanisms that drive their evolution.

These future directions in KG analysis provide exciting opportunities to deepen our understanding of how these structures evolve, the factors influencing their development, and their crucial role in the dissemination of structured knowledge. Addressing these challenges will contribute to the ongoing advancement of knowledge representation and dissemination in the digital age.

3.2.2 Schema-level Monitoring

All the aforementioned studies of the evolution of Web graphs focused on the instance-level of the graph data, i. e., the nodes modelling the entities in the domain. Only a few works also considered analysing the evolution of the schema-level of the graph. An early study by Dividino et al. [70] shows that indeed, the schema of a node changes over time when one considers how the available RDF properties and RDF types are combined to a set of edge labels and node types to model a node. We call this set of properties and types the schematic structure of a node. Over one year in the DyLDO dataset, the authors analysed the schema structures of the nodes in terms of both the outgoing properties as well as types. They found that in each snapshot between 20% and 90% of the schema structures change from one version to the next. This means that more or fewer nodes have the same schema structures, nodes with new schema structures are observed, and some schema structures are not used anymore. There are also some combinations of properties and types where the schema structure of the nodes is very stable, i. e. the set of nodes with that specific schema structure did not change for one year [166, 70].

Just like new data nodes appear and change in the Web graph, the vocabularies used to model such data also change, but at a much slower speed. New vocabulary terms are coined to cover additional requirements or reflect changes in the domain. Other existing terms are modified or even deprecated. Previous work analysed the amount and frequency of changes in vocabularies based on different snapshots of the Billion Triples Challenge, DyLDO and Wikidata datasets [1]. Although the evolution of vocabularies is slow [1, 140], i. e., they happen on average a few changes every year only, a change may still have a significant impact due to the large amount of distributed graph data on the Web.

Another insight is that, in the course of an evolving vocabulary, the update of new terms from released vocabulary versions varies greatly and ranges from a few days to years. It is not surprising that even deprecated terms are still used by data publishers. Moreover, it is important to analyse both the change in the vocabulary, as well as how the various terms are used in combination. This can be seen at the schema-level: one can observe changes in the node and property shapes (e. g. SHACL shapes), as well as in their prevalence. For example, a recent study [196] compared the property shapes extracted from two Wikidata snapshots (one from 2015 and one from 2021). The analysis reported that the number of RDF classes increased from 13K to 82K and the number of predicates from 4,906 to 9,017, while the number of distinct property shapes increased from 202K to more than 2M. This calls for an in-depth study of how the different elements of the vocabulary evolve, not only in isolation but also together at the schema-level.

Finally, similar to the LOD Cloud showing the dependencies of different Web graph datasets, one may also consider the Network of Linked Vocabularies (NeLO) where the nodes are the vocabularies and the edges model vocabulary reuse [2]. Vocabulary reuse is generally encouraged, as it improves the interoperability of data, but at the same time, it also introduces dependencies between vocabularies that are to be resolved when vocabulary terms in the network change, are deprecated, or deleted. The NeLO network has been analysed over a history of 17 years based on the data from the Linked Open Vocabulary (LOV) service313131https://lov.linkeddata.es/dataset/lov/ with respect to standard network metrics, such as size, density, degree and importance [2]. LOV collects the temporal information from hundreds of RDF vocabularies added to the service through a review-based process. The evolution of this schema-level graph has been analysed with respect to the impact of vocabulary term changes, term reuse and vocabulary importance [1, 2].

Future Directions

Exploring the schema-level dynamics of open KGs reveals several promising avenues for future research and analysis. These areas of inquiry offer valuable insights into the evolving nature of knowledge graphs and their impact on knowledge representation.

One important aspect of KG analysis pertains to understanding how schemas are structured and evolve within graphs, but also how re-use between graphs evolves. Many open KGs, including Wikidata and DBpedia, make use of RDFS and OWL to organise their ontologies. However, the specific integration of schemas into the data varies. For instance, some graphs incorporate their ontologies directly into the data, while others maintain separate ontology files. Investigating the consequences of these schema design choices on knowledge graph evolution is another possible research direction. Additionally, assessing how expressive power and intended meaning in these schemas evolve and potentially influence KG development is of strong interest.

KGs exhibit varying degrees of semantic underpinnings, ranging from basic RDFS to more complex representations like OWL. Some, like Wikidata, may have intricate intended meanings and collaboratively evolving schema constructs that go beyond OWL’s expressivity, which may necessitate advanced logics for interpretation (for instance the constantly evolving set of Wikidata’s property constraints). Analysing the gap between intended, implied and supported semantics in KGs and its implications for their evolution is a further promising area of investigation. Overall debates within the Semantic Web and Knowledge Graph communities, about additional complex ontology features and the evolution of ontology languages as such, may also raise questions about the role of evolving ontology expressiveness in shaping knowledge graph structures over time.

Comparing the rates of schema/ontology evolution vs instance/data evolution in different knowledge graphs in depth is another potential future direction: preliminary observations may suggest that in some cases, the evolution of ontology structures lags behind changes in the data. Such temporal misalignment raises questions about how it affects the overall coherence and semantics of knowledge graphs over time; as a concrete example, let us again name constraints in Wikidata, which partially become outdated (and even explicitly deprecated) by their actual use – which could indeed be understood as a form of semantic drift.

Comparative analyses between knowledge graphs, especially those with similar characteristics or shared data sources, can provide valuable insights into ontology evolution, schema design and knowledge representation choices. By examining similarities and differences in their evolution processes, researchers can identify best practices and challenges in crowd-sourced ontology development.

These future directions in schema-level analysis offer opportunities to gain a deeper understanding of how knowledge graphs evolve structurally and semantically. By addressing these challenges, researchers can contribute to advancing our knowledge of knowledge representation dynamics and the evolving landscape of open KGs.

4 Study the Evolution

In this section, we discuss methods for studying the evolution of KGs. First, we introduce some relevant static graphs and KG metrics, as they have been defined to inform KG quality and are sometimes used to analyse KG evolution. Second, we address measures that concern consistency and quality specifically using constraints, as opposed to the simple metrics introduced first. In the third part, we discuss measures specifically developed to capture and quantify evolution, and we finish this section with a focus on how network science approaches could be used in the future for the study of KG evolution.

4.1 Basic Graph and Knowledge Graph Metrics

This section introduces metrics designed initially to study the properties of graphs and specifically knowledge graphs, which have been used to assess ontology quality [11, 142, 91, 37, 213, 227, 205] and that has also been used to study KG evolution [250, 252, 73, 71, 172]. Table 3 summarises such metrics, which – however – do not take an evolving KG as input for their calculation as they consider only one graph at a time. We can broadly group these static metrics into two groups: graph metrics and knowledge graph metrics.

Graph metrics are applied to a graph version of the KG or adapted to work on the KG. Examples of these metrics include average depth [71, 73, 91, 142], number of paths [142], tangledness [11, 91, 142] and absolute leaf cardinality [11, 91, 142]. In the work of Alm et al. [11], Gangemi et al. [91] and Lantow et al. [142], the metrics are applied only to the isA graph, whereas Djedidi et al. [71] apply the average depth on the OWL graph, the same as Duque-Ramos et al. [73].

Knowledge Graph metrics can be distinguished from graph metrics based on the idea of taking semantics into account. However, each approach, metric or paper specifies what type of semantics (RDF, RDFS, OWL or other) are considered and if the metrics are applied to materialised KGs or not. We do not make this specification here but leave it up to the interested reader to follow the cited sources. While instance-level analyses focus on the data graph, schema-level analyses focus on the semantic information [33]. Therefore, we divide the metrics into three groups:

-

Schema metrics focus on the schema or T-Box of the KG. Examples of such metrics include Property Class Ratio [250, 252, 172, 73], Depth of Inheritance Tree [250, 172, 73] and Inheritance Richness [71, 73]. For example, most of these metrics are used in the OQuaRE quality assessment by Duque-Ramos [73] to inform about varying quality (sub-)characteristics.

-

Data metrics or A-Box metrics mostly combine an aspect of the A-Box with one from the T-Box. Examples of such metrics include Average Population [73] and Instance Comprehension [71]. Due to their simplicity, data metrics give only a partial view of KG quality and often need to be contextualised for a complete evaluation [73].

In summary, KGs have been analysed by calculating static metrics like the ones in Table 3 on linear/nonlinear series of consecutive snapshots: by combining these measures over some time, as done for instance in [73, 33, 182, 71, 172], one obtains time series data (a versioned or dynamic KG) that allows (and is currently primarily used) for calculating descriptive statistics (e. g. central tendencies, dispersion, distribution) that partially describe the KG evolution over time.

Future Directions

While static metrics can provide valuable insights at little cost, we argue that designing specific metrics and combining those with more sophisticated time-series analyses can lead to more precise monitoring of KG evolution. In particular – for any of the above-mentioned static metrics – investigating time-series trends in metrics variations such as seasonality or stationarity or even more complex models [214] can provide further insights about the KG evolution. We illustrate some ideas for such future metrics by the example questions listed below:

-

Trends: How has the average degree of nodes or centrality developed in KGs such as Wikidata over the past years? How interconnected is the KG becoming over time?

-

Seasonality: Are there recurring periods of increased or decreased growth in the size (number of nodes or edges)? Is there any correlation with specific events?

-

Moving Averages: How does the moving average of additions (new triples) or deletions (removed triples) over 12 months compare to the monthly new triples values? Are there evolutionary anomalies?

-

Autocorrelation: Is there autocorrelation in the time series data of a given ratio metric (e. g. Property Class ratio, etc.) in the KG?

-

Stationarity: Do structural changes in the KG (for instance, lengths of certain paths or other structural metrics) follow a stationary process?

So far, time series analyses with static metrics for LOD characterisation have been traditionally restricted to descriptive statistics, e. g. in [129, 182, 73]. We argue that this is an opportunity for the Semantic Web and Knowledge Graph research community to rethink more sophisticated metrics designed to precisely measure KG dynamics and change overall and in a modular fashion (e. g. instance data vs. schema dynamics, etc.). Likewise, we see a lack of tools and calculation frameworks geared specifically towards running such more complex time series analytics on evolving KGs at scale.

4.2 Consistency-Based Quality Metrics

Assessing data quality within a KG presents significant challenges that worsen if the aim extends to monitoring, ensuring, or improving such quality over time. Consistency-based quality metrics play a crucial role in assessing many dimensions of data quality, for example, measuring the integrity, coherence and general consistency of KGs [245]. Paulheim and Gangemi [176] estimated inconsistency in DBpedia by clustering conflicting statements; they limit their evaluation to a given snapshot, neglecting the evolution of these inconsistencies.

Various languages have been developed to express and represent constraints in KGs, yet not all are equally suited to “measure” consistency and quality. That is, while formal ontology languages such as OWL [101] and the respective underlying Description Logics [21] allow one to determine inconsistency of the whole KG, typically, due to their expressivity, they suffer from ambiguity between pinpointing and counting violations. Earlier work has used rule-based fragments of OWL, OWL RL to – again statically – quantify and repair inconsistencies [113].

More recent specific standards for KG constraint languages have revived the research on quantifying constraint violations. Specifically, the relatively new W3C standard SHACL [135], and similarly ShEx [195], allows validation and counting violations in a KG, w.r.t. a set of (integrity) constraints and target node/edge definitions. Yet, we only see both formal ontology languages such as OWL, e. g. [95], and these novel constraint languages being only slowly, if ever, adopted in (openly available) KGs.

In the following, we dive deeper into the measurability of quality metrics, focusing on consistency. Consistency metrics evaluate the coherence and absence of contradictions within a KG. Constraints can be used to specify rules regarding relationships between entities, ensuring that the graph remains internally consistent. Inconsistencies, such as conflicting assertions or logical contradictions, can be identified with these metrics. There is a trade-off between measuring consistency and simply measuring missing information. However, this trade-off will be explored as part of defining assessment frameworks.

As a first approach towards monitoring consistency w.r.t. constraints over time, Wikidata has leveraged constraint modelling to enhance data quality and usability. Within the Wikidata ecosystem, the Schemas project323232https://www.wikidata.org/wiki/Wikidata:WikiProject_Schemas uses ShEx to define schemas for modelling various Wikidata classes. Additionally, Wikidata uses its own representation model to define constraints on its properties, known as Wikidata property constraints.333333https://www.wikidata.org/wiki/Help:Property_constraints_portal These property constraints serve as valuable guidelines for the community of users, aiding in maintaining data integrity and the development of violations is documented over time in Wikidata’s own published database reports.343434https://www.wikidata.org/wiki/Wikidata:Database_reports/Constraint_violations/Summary In a recent work, Ferranti et al. [86] have attempted to formalise the respective constraints in SHACL and SPARQL, in order to enable generating such violation reports in a standardised manner, on the fly, which may be viewed as a starting point to enable monitoring constraint violation over time.

An alternative approach to quantify violations is to attach the number of violations () for each violated denial constraint () to nodes and edges in the KG. The counting can be done in a bag or set semantics by considering the duplicates in the constraint violations or not. Provenance polynomials can be built by summing the monomials given by . The obtained polynomials and corresponding degrees of quality can be leveraged during query evaluation to characterise the quality of the query results further. Although this approach has been conceived for static relational data [119, 120], the temporal aspects of inconsistency are still largely unexplored.

Despite these starting points, the question of how to measure and monitor quality in terms of consistency in a systematic manner for particular KGs over time seems to be still an open question that opens up engaging scenarios. For example, the presence of time in evolving KGs adds a dynamic perspective to constraint enforcement, facilitating ongoing improvements in the KG through data repairs, as proposed by [57]. Moreover, the analysis of constraints over time can also provide significant insights into the occurrence of semantic drift (see Section 2.3) within the schema layer of a KG. When historical constraint definitions are compared with the current state, it becomes possible to identify schema modifications, shifts in the focus of the schema layer and potential mismatches between the evolving semantics and the intended scope.

Future Directions

As outlined above, consistency is a big factor when assessing the quality of KGs. Hence, we see several potential directions of analyses in the future using constraints to learn more about knowledge evolution concerning quality. For example, before even analysing evolution, an investigation into which KGs use RDFS, SHACL and ShEX but also how expressive their ontologies are and which are entirely based on external data sources. Such questions directly tie into an investigation of quality based on consistency and constraints and how these evolve. First, measures and frameworks must be developed to support these kinds of investigations as they require handling KGs at scale. At the same time, the tradeoff between measuring quality and consistency vs. measuring missing information must be considered in greater detail before applying such approaches to any open general-purpose KGs, as these KGs operate with an open-world assumption.

The analysis directions align well with the dimensions of evolution (dynamics, timeliness and monotonicity), but each requires different approaches or solutions. Thus, we urge the community to use constraint-based metrics to analyse the consistency of the evolution of KGs, the change (trends, seasonality, etc.) of completeness, data freshness, data recency and temporal completeness. Precisely, the last three need to regard time as data rather than meta-data.

4.3 Methods for Quantifying Evolution