Large Language Models and Knowledge Graphs: Opportunities and Challenges

Abstract

Large Language Models (LLMs) have taken Knowledge Representation – and the world – by storm. This inflection point marks a shift from explicit knowledge representation to a renewed focus on the hybrid representation of both explicit knowledge and parametric knowledge. In this position paper, we will discuss some of the common debate points within the community on LLMs (parametric knowledge) and Knowledge Graphs (explicit knowledge) and speculate on opportunities and visions that the renewed focus brings, as well as related research topics and challenges.

Keywords and phrases:

Large Language Models, Pre-trained Language Models, Knowledge Graphs, Ontology, Retrieval Augmented Language ModelsCategory:

PositionCopyright and License:

Hajira Jabeen, Janna Omeliyanenko, Wen Zhang, Matteo Lissandrini, Russa Biswas, Gerard de Melo,

Angela Bonifati, Edlira Vakaj, Mauro Dragoni, and Damien Graux; licensed under Creative Commons License CC-BY 4.0

2012 ACM Subject Classification:

General and reference Surveys and overviews ; Computing methodologies Knowledge representation and reasoning ; Computing methodologies Natural language processingAcknowledgements:

We would like thank Xiaoqi Han’s helpful discussion and support when finalising the camera ready version of the paper.DOI:

10.4230/TGDK.1.1.2Received:

2023-06-26Accepted:

2023-08-31Published:

2023-12-19Part Of:

TGDK, Volume 1, Issue 1 (Trends in Graph Data and Knowledge)Journal and Publisher:

Transactions on Graph Data and Knowledge, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

Transactions on Graph Data and Knowledge, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

1 Introduction

Large Language Models (LLMs) have taken Knowledge Representation (KR) – and the world – by storm, as they have demonstrated human-level performance on a vast spectrum of natural language tasks [25]. Following this, people are gradually starting to accept the possibility of having knowledge represented in the parameters by some language models, also known as parametric knowledge, which is the information encoded from training datasets into language models’ internal model parameters. The arrival of LLMs announces the era of Knowledge Computing, in which the notion of reasoning within KR is broadened to many computation tasks based on various knowledge representations.

This is a big step for the field of Knowledge Representation. For a long time, people focused on explicit knowledge, such as those embedded in texts, sometimes also known as unstructured data, and those in a structured form, such as in databases and knowledge graphs (KGs) [141]. Historically, for a long time, humans used texts to pass down their knowledge from one generation to another, until around the 1960s, when researchers started to study knowledge representation for better natural language understanding and developed early systems, such as ELIZA [207] at the MIT. In the early 2000s, the Knowledge Representation and the Semantic Web communities worked together to standardize the widely used knowledge representation languages, such as RDF [139] and OWL [67], at web scale, using which the large-scale knowledge bases are then more widely known as KGs [141], due to their helpful graph structures, enabling both logical reasoning and graph-based learning.

This inflection point, with the arrival of LLMs, marks a paradigm shift from explicit knowledge representation to a renewed focus on the hybrid representation, sometimes also referred to as semi-parametric knowledge, of both explicit knowledge and parametric knowledge in neural networks (NNs). As a popular approach for explicit knowledge representation, KGs are now widely investigated for the combination with Transformer-based LLMs, including pre-trained masked language models (PLMs) like BERT [47] and RoBERTa [122], and more recent generative LLMs like the GPT series [24] and LLaMA [191]. Some works use LLMs to augment KGs for, e.g., knowledge extraction, KG construction, and refinement, while others use KGs to augment LLMs for, e.g., training and prompt learning, or knowledge augmentation. In this paper, considering both directions, LLMs for KGs and KGs for LLMs, we present a better understanding of the shift from explicit knowledge representation to a renewed focus on the hybrid representation of both explicit knowledge and parametric knowledge.

There has been some related work on LLMs and KGs. A related survey paper [235] presents a comprehensive review of using LLMs for KG construction and reasoning, while our work provides a more in-depth view of the inflection point, considering not only relational KGs but also KGs with ontologies as schemas, as well as other dimensions of structured knowledge, including tabular data [210] and numerical values [140]. Other works on the intersection of LLMs and KGs have a minor overlap with the topics covered in our paper; e.g., on studies using LLMs as KGs [5], on using KGs to augment LLMs [213], or on comparing GPT-4 with ChatGPT and SOTA fine-tuning methods on three knowledge-related tasks – entity, relation and event extraction, link prediction, and KG question answering [235]. Overall, none of these papers look into the implications of the inflection point with concrete applications. To this end, this paper summarizes the common debate points within the community, introduces the state-of-the-art for a comprehensive set of topics where KGs and LLMs are integrated, and further presents opportunities and challenges.

2 Terminology

Language models.

The term “(large) language model (LLM)” covers quite a set of model families. Although the term already existed before the rise of neural models, it arguably rose to prominence with the publication of the BERT architecture [47], a Transformer-based neural architecture. The BERT model was typically used with parameters pre-trained on a large text corpus and fine-tuned on application specific data. BERT-style (masked) architectures have become a building block of many language-related tasks, where they can be used, for instance, for obtaining latent semantic representations of words or phrases, and are frequently employed in classification, similarity estimation, or retrieval tasks.

A second, slightly more recent paradigm of language models are autoregressive (generative) architectures like the GPT family. These models are suited for the generation of text from given prefixes, and, in contrast to BERT models, can (in principle) directly be used in downstream use cases. At the same time, they represent less mature architecture, with media headlines taken by closed commercial models like ChatGPT and GPT-4 [137], and open models like LLaMA [191], Vicuna [37], or Falcon [149] only recently entering the stage. Although fine-tuning is possible for some of them, autoregressive models are often used without adapting their parameters, e.g., via zero-shot or few-shot text-based prompts.

Where the distinction is important, we will explicitly state to which model family and prompt style we refer.

Explicit knowledge.

Explicit knowledge includes both unstructured knowledge, such as text, images and videos, and structured knowledge, also referred to as symbolic knowledge, which is knowledge codified in a logical representation, such as knowledge graphs and databases. This has been the standard in the AI and KR communities for long and enables an intuitive understanding of assertions. Nevertheless, it has been known for long that not all knowledge is suited for explicit representations, and certain types of knowledge remain typically implicit.

Parametric knowledge.

This is a kind of implicit knowledge. We use the term “parametric knowledge” to refer to the information encoded from training datasets into language models’ internal model parameters. Most importantly, these parameters describe the weights of the neural network and thus consist of a set of numeric values.

Some parametric knowledge cannot be easily translated into symbolic knowledge, for instance, LLMs typically have solid “knowledge” on whether a textual phrase has positive sentiment or not, yet there is typically no lossless conversion into a symbolic formula. At the other end of the spectrum is the parametric knowledge that has been shown possible to be extracted into a crisp symbolic form, for example, “Who was the first person on the moon?”.

As we will show later, parametric knowledge of the latter kind is important for many steps in KG construction and consolidation pipelines, while parametric knowledge of the former kind offers a direct supplement or alternative to existing KGs.

3 Common Debate Points within the Community

The usage of parametric and explicit knowledge together is a topic of debate in the Knowledge Computing community, with proponents and skeptics offering different perspectives. Below are some summaries of common points of contention.

Knowledge Representation and Reasoning.

KGs offer a structured representation of knowledge with explicit relationships, enabling reasoning and inference [128]. Critics argue that parametric knowledge in NNs and LLMs relies on statistical patterns rather than true understanding and reasoning [14]. Proponents of LLMs like ChatGPT highlight their ability to generalize from large-scale text corpora, capturing a range of information, and excellent language understanding capabilities. On the one hand, LLMs could generate plausible but incorrect or nonsensical responses, such as hallucinations, due to a lack of explicit knowledge representation [163, 223]. There are also doubts on whether LLMs have the ability to learn directional entailments [114] or infer subsumption between concepts [75]. On the other hand, KGs can be costly to build. While LLMs can be expensive to train too, they can be readily usable to support many downstream applications, bringing AI from the backstage to center stage. To sum up, in comparison to the classic trade-off between expressiveness and decidability in knowledge representation, here KGs and LLMs have the trade-off between precision and recall considering using explicit and parametric knowledge in knowledge computing tasks.

High Precision Methods.

The success of KGs can largely be attributed to their ability to provide factual information about entities with high accuracy. For instance, YAGO [180] asserts an accuracy rate exceeding 95%. Similarly, Google necessitates high accuracy in its KG for operational use, e.g., the semi-automatic construction method of Knowledge Vault was not utilized in production, partly due to its inability to reach the desired 99% accuracy in their benchmark [206]. Along this line of thought, many LLM-based methodologies for KG completion fail to reach these high-performance levels, as exemplified by the performance of BERT in [115, 193], and GPT-3, equipped with hundreds of billions of parameters [4]. This calls for novel high precision methods for KG construction based on LLMs.

Numerical Values.

It is widely recognized that LLMs grapple with handling numerical values. Even straightforward arithmetic tasks can be a struggle for LMs, as highlighted in a study by Big-bench [179]. This shortcoming also extends to KG completion tasks [95]. Multiple LLMs have been evaluated on their ability to complete KGs using numerical facts from Wikidata [196], such as individuals’ birth and death years. However, none of the tested models accurately predicted even a single year. This raises questions about the capability of current LLMs to correctly memorize numbers during pre-training in a way that enables them for subsequent use in KG completion. While LLMs like PaLM [41] demonstrate some proficiency in dealing with numbers, more commonly used smaller models seem ill-equipped for this task. The complexity escalates when considering the intricacies of metrics and diverse numbering formats and types. Currently, modifying LLMs to handle numerical values remains unresolved, making their utilization for numerical KG completion seem far from practical.

Long-tail Knowledge.

One of the key research questions on LLMs for the Knowledge Computing community (and beyond) is how much knowledge LLMs remember [125]. Investigations indicate that LLMs’ performance significantly deteriorates when dealing with random Wikidata facts, specifically those associated with long-tail entities, in comparison to popular entities, as evidenced in the PopQA dataset [125] and other datasets [152, 193]. This effect can be traced back to a causal relationship between the frequency of an entity’s appearance in the pre-training corpus and the LLMs’ capacity for memorization [53]. Even sizable LLMs face difficulties when trying to retain information about long-tail entities [97]. KGs inherently present an advantage over LLMs through their provision of knowledge about long-tail entities [95, 193] and thus can further help improve the recall for Knowledge Computing tasks.

Bias, Fairness and Beyond.

Critics argue that LLMs can perpetuate and amplify biases present in the training data, leading to biased outputs. LLMs may generate biased or prejudiced responses if the training data contains stereotypes or discriminatory information [131, 108]. On the other hand, proponents argue that bias is not inherent to LLMs but reflects societal biases embedded in the data. They emphasize the importance of addressing bias in the training data and developing mitigation techniques [167, 154, 161]. A survey [17] argued that “bias” in Natural Language Processing (NLP) systems took various conceptualizations without being critically engaged by practitioners. KGs are also used in a plethora of downstream tasks, and social biases engraved in KG embeddings get propagated [68, 104]. Specifically, ontology creation, which generally comprises manual rules factored by opinions, motivations, and personal choices, is a source of bias [89, 51]. Also, automated pipelines for KG construction exhibit gender bias [127]. There are other similar concerns of LLMs beyond bias and fairness, including (but not limited to) copyright violation and misinformation. In general, due to the implicit nature of parametric knowledge, it is less straightforward to forget such toxic information from LLMs, compared to explicit knowledge.

Explainability and Interpretability.

KGs are often preferred in scenarios where explainability and interpretability are crucial [31], as they explicitly represent relationships between entities and provide a structured knowledge representation. Skeptics of LLMs argue that these models lack transparency and interpretability, making it difficult to understand how they arrive at their answers or recommendations. Proponents of LLMs acknowledge the challenge of explainability but argue that recent research efforts [8, 88] are improving LLM’s interpretability through techniques like attention mechanisms, model introspection. Some also argue that Chain-of-Thoughts (CoT) [204] can also improve the explainability of LLMs, although question decomposition and precisely answering sub-questions with LLMs are still far from being solved. Attribution evaluation and augmentation of LLMs with e.g., source paragraphs and sentences is another recent research topic for improving their explainability in question answering [18].

4 Opportunities and Visions

One of the key questions this paper needs to answer is, now with the emergence of parametric knowledge, what new opportunities do we have? Here are some of our thoughts on such new opportunities with the arrival of parametric knowledge and its potential integration with explicit knowledge.

-

1.

Instant access to huge text corpora: As mentioned in the Introduction, for a long time, human beings passed down their knowledge in texts. Thus, a lot of knowledge these days are in textual form. Using LLMs gives access to extremely large text corpora at high speed, and recently even on consumer hardware [79]. This allows AI developers to avoid getting bogged down in previously critical challenges around data gathering, preparation, storage, and querying at scale. It also helps to reduce previously critical dependencies on the field of information retrieval.

-

2.

Richer knowledge for many subtasks: Although the most prominent capabilities of LLMs, question answering and dialogue, are still under critical scrutiny, it should not be overlooked that LLMs have significantly advanced and simplified many traditional tasks of the knowledge engineering pipeline. Out-of-the-box, with fine-tuning on a few examples, or via few-shot prompting, LLMs have advanced many tasks such as dependency and structured parsing, entity recognition, and relation extraction. And just as errors propagate along a pipeline, so do improvements, thus enabling KG construction at unprecedented scale and quality. Furthermore, LLMs are readily usable for many downstream tasks beyond knowledge engineering. By injecting explicit, and in particular structured, knowledge into LLMs, such as through retrieval augmented methods, one can make explicit knowledge more readily usable for such a wide range of downstream tasks, further realizing the vision of ‘Knowledge is power’.

-

3.

Even more advanced language understanding: LLMs alone already significantly advanced the “understanding” of natural language, as evidenced by tasks like textual entailment, summarization, paraphrase detection and generation, etc. These capabilities are critical to making knowledge engineering robust to linguistic variance, typographic errors, redundancy, and other features of human-written, web-scraped, and other noisy forms of text. Now with potential novel approaches to combining parametric knowledge with explicit knowledge, it is possible to have even more advanced language understanding, not only for textual entailments, but also for other NLP tasks, such as summarization and consistent generation.

-

4.

Compression entails consolidation: An important step in traditional knowledge engineering is the consolidation and aggregation of conflicting and concurring pieces of information, requiring often elaborate methods for consolidating observations from sentences, patterns, and constraints [173]. In LLM training, an aggregation occurs automatically. Although this step is not entirely understood, it brings the potential for outsourcing a major challenge in knowledge engineering.

With the above new opportunities brought by the combination of parametric and explicit knowledge, our vision is two-folded:

-

In Explicit-Knowledge-First use cases, our vision is that LLMs will enable, advance, and simplify crucial steps in the knowledge engineering pipeline so much as to enable KGs at unprecedented scale, quality, and utility.

-

In Parametric-Knowledge-First use cases, our vision is that KGs will improve, ground, and verify LLM generations so as to significantly increase reliability and trust in LLM usage.

Both visions are neither alternatives, nor does one build upon the other. Instead, we believe that classes of use cases will continue to exist side-by-side, some of which favor Explicit-Knowledge-First (scrutable) approaches, some of which favor Parametric-Knowledge-First (blackbox) approaches, with either of these having significant potential for benefiting from synergies of the two directions.

5 Key Research Topics and Related Challenges

With the opportunities and visions related to the availability of both parametric and explicit knowledge in place, in this section, we categorize, summarize, and present the recent developments in using LLMs and KGs under five different themes:

-

1.

LLMs for KGs: Knowledge Extraction and Canonicalisation

-

2.

LLMs for KGs: KG Construction

-

3.

LLMs for KGs: Ontological Schema Construction

-

4.

KGs for LLMs: Training and Augmenting LLMs

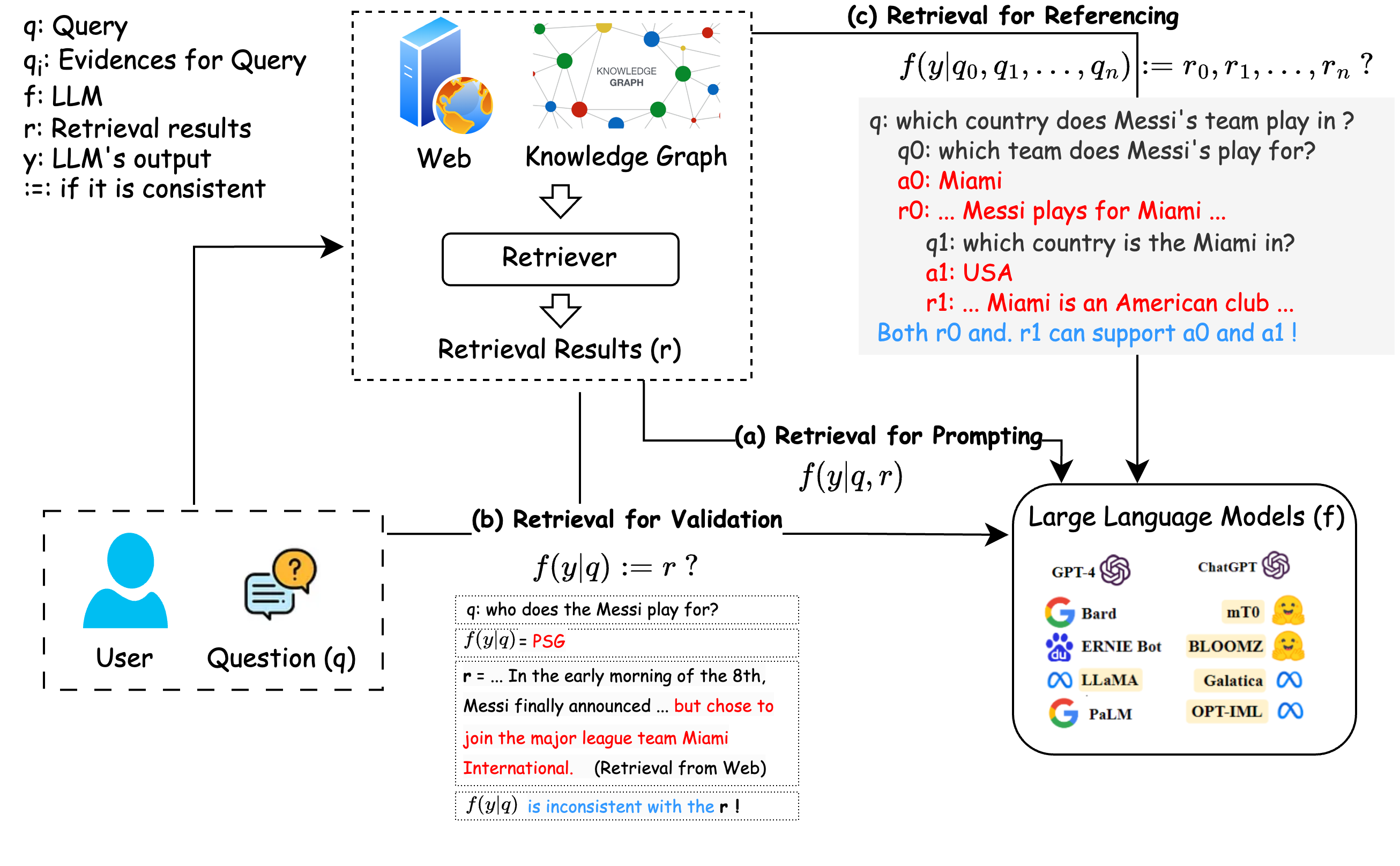

Figure 1 provides an overview. In Section 5.1, we start with the traditional KG construction from text and tables, then show in Sections 5.2 and 5.3 how assertions and ontological information can be extracted from LLMs. We then reverse perspective, and discuss in 5.4 how KGs can help and enhance LLMs in training and deployment.

5.1 LLMs for KGs: Knowledge Extraction and Canonicalisation

KG construction is a complex task that demands collecting and integrating information from a wide array of sources, encompassing structured, semi-structured, and unstructured data. Traditional methods often rely on specific modules designed to process each data type in isolation and struggle when the content is diverse and heterogeneous in structure. However, LLMs are powerful NLP models trained on a broad spectrum of information sources, making them well-suited for knowledge extraction tasks. This section presents work that uses LLMs for knowledge extraction from various sources.

5.1.1 Entity Resolution and Matching

Entity resolution (also known as entity matching, entity linking or entity alignment) is the process of linking pieces of information occurring in multiple heterogeneous datasets and referring to the same world entity [55, 59, 144]. Past research has focused on developing the methodologies and similarity measures among entities represented by flat structured data as well as semi-structured data. Recent benchmarks, among which a recent one [147], have been proposed to compare existing matching systems (including relational ones) on their accuracy, amount of corner cases and robustness to unseen matching pairs. A similar benchmark and survey tailored for knowledge graph entity alignment [225] focuses on the problem of alignment entities in different knowledge graphs using embedding-based techniques. One of the findings of the comparative analysis of existing systems is that semantic information (e.g. attribute triples and relation predicates) allow to achieve better matching accuracy.

Embedding-based entity alignment methods for KGs reduces the symbolic similarities between graph entities to a vector space in order to flatten the heterogeneity of graph components and facilitate reasoning [182]. Specifically, a total of 23 representative embedding alignment approaches are cross-compared in terms of performance but also are shown to require significant supervision in the labeling phase. Therefore, unsupervised methods and methods that cope with large-scale KGs are highly desirable in future research investigations.

CG-MuAlign [234] employs Graph Neural Networks (GNNs) to perform multi-type entity alignment, leverages the neighborhood information and generalizes to unlabeled types, and REA [148] which tackles the multilingual entity alignment problem by combining adversarial training with GNNs to cope with the problem of noisy labeled data provided as input.

LLMs are used in entity resolution and linking for KGs in multiple ways [7].

We envision the following important research challenges on the interplay between entity resolution and linking and LLMs.

-

Generation of labeled training data, which is typically a resource-intensive and time-consuming step, hindering the performance of entity alignment for KGs [94].

-

Generation of corpora of entity matching rules, which will allow to increase the amount of rules available for these tasks on real-world knowledge graphs.

Similarly to how [170] employs Generative Adversarial Networks (GANs) to reduce the effort of labeling data, we argue that LLMs can provide labeled samples of KGs and control the performances of the aforementioned embedding-based approaches. Also, LLMs can help build robust and trustworthy corpora of entity matching rules, modulo the fact that a declarative formalism with a logical language is defined in the graph setting. Training data for this logical language should be provided as input to LLMs, similarly to SQL statements that are readily available for consumption in text corpora. However, prompt engineering is needed in order to produce meaningful rule corpora for real-world large-scale KGs, such as DBpedia [9] and Wikidata [196]. Entity matching rule logs can be envisioned for these real-world large-scale KGs in a similar fashion as query logs for these KGs [19, 20].

Another interesting line of research work revolves around the alignment of factoid questions for building robust question answering (QA) systems. Existing approaches focus on the use of biencoders to efficiently perform both entity mention detection and entity linking in only one pass [110]. Matching of questions and predicate sequences on a knowledge base using both entity linking and deep convolutional neural networks has been investigated in prior work [218].

Other interesting directions to pursue for future developments leading to intertwining symbolic AI and statistical AI are as follows:

-

Combination of entity linking approaches and embedding-based approaches with LLMs, which can lead to better integration of LLMs with knowledge reasoning and question answering [81].

-

Marrying accuracy and interpretability, which will leverage the power of LLMs to generate graph data and rules and improve the accuracy of the entity linking processes but also enhance the interpretability of the processes.

5.1.2 Knowledge Extraction from Tabular Data

Extracting knowledge from tabular data like databases, Web tables and CSV files is a common way for KG construction. For tables whose semantics (meta information) are already known, heuristic rules can be defined and used to transform their data into KG facts. However, real-world tables often have unclear semantics with important meta information, such as table name and column header, not clearly defined. Meanwhile, the original data usually need to be retrieved, explored, integrated and curated, before expected knowledge can be extracted. In recent years, Transformer-based LMs have been investigated for processing tables, especially their textual contents. They can be applied to table vector representation as a foundation of other prediction tasks [194]. TURL [46] is a typical method of table representation learning that uses BERT [47] and has been applied in several tasks such as cell filling, column type annotation, and relation extraction. Similarly, RPT [188] uses BERT and GPT to pre-train a table representation model. Starmie [56] transforms columns into sequences using a template and fine-tunes BERT with a contrast learning framework using unionable and not unionable column pairs as samples.

Among all the table processing tasks, semantic table annotation which matches table data to KG components (e.g., table column to KG class, table cell to KG entity, inter-column relationship to KG property) can be directly applied to extract knowledge for KG construction and population [121, 92]. There have been several attempts that use LLMs for these tasks. Doduo [181] serializes a table into a sequence of tokens and trains BERT for predicting column types and inter-column relationships. Korini et al. [103] prompts ChatGPT to annotate semantic column types. When task-specific samples for demonstration are minimal or absent, ChatGPT achieves a similar performance as the RoBERTa model. Although some attention has been given to utilizing LLMs for tabular data processing and KG construction, there is still much room for investigation.

Challenge and Opportunities.

-

Transforming table contents into sequences: A table or a table element with its structured context needs to be transformed into a sequence before it can be fed into LLMs. Different transformation methods are required for different LLM utilization scenarios, such as fine-tuning LLMs, LLM inference with prompts, and instruction tuning of LLMs.

-

Representing and utilizing non-textual tabular data: A table often contains not only long and short text, but also data of other types, like numbers and dates. There are still few works that consider these data.

-

Extracting tabular knowledge: LLMs are mostly applied to process and understand tables but rarely applied to the final step of knowledge extraction. OntoGPT [27], which extracts instances from texts to populate an ontology using ChatGPT is known, but there are no counterparts for tables. Beyond instances, extracting relational facts is more challenging.

5.1.3 Knowledge Extraction from Text

Knowledge extraction from text generally entails automatic extraction of entities and associated relations, with traditional pipelines processing vast amounts of sentences and documents. This process enables the transformation of raw text into actionable knowledge, facilitating various applications such as information retrieval, recommendation systems, and KG construction. The language understanding capabilities of LLMs have enhanced this process.

For example,

- (1)

- (2)

- (3)

- (4)

These approaches allow LLMs to extract information from text without needing a large amount of explicit training on specific domains, thereby improving their versatility and adaptability [44]. Moreover, LLMs have demonstrated proficiency in extracting knowledge from languages other than English, including low-resource languages, paving the way for cross-lingual knowledge extraction and enabling the utilization of LLMs in diverse linguistic contexts [106].

Furthermore, prompting LLMs has introduced new paradigms and possibilities in the field of NLP. LLMs can generate high-quality synthetic data, which can then be used to fine-tune smaller task-specific models. This approach, known as synthetic data generation, addresses the challenge of limited training data availability and enhances the performance of models [94, 189]. Additionally, instruction tuning has emerged as a powerful technique where LLMs are trained on datasets described by explicit instructions, enabling more precise control and customization of their behavior for specific tasks [205, 201]. Also, for constructing domain-specific KGs, the stakes are higher, and hence scrutinizing the generated text (by experts) is necessary. However, it is still a step forward since human annotation is less expensive than human text generation.

Apart from the obvious substantial computational resource requirements for training and utilizing these LLM, there are various challenges, including the ones presented in Section 3.

Challenge and Opportunities.

-

Efficient extraction from very long documents. Current LLMs are incapable of processing very long documents like novels in one shot. In this regard, modeling long-range dependencies and performing corpus-level information extraction can be further improved.

-

High-coverage information extraction. Almost all extraction pipelines focus on high precision. However, high recall is ignored or under-explored [176]. Building knowledge extractors with high precision and high recall will result in a great leap toward building lifelong information extractors.

5.2 LLMs for KGs: Knowledge Graph Construction

We highlight the important role that LLMs play in improving KG construction, focusing on current trends, issues, and unanswered questions in this field. We start by discussing link prediction, which is a way to generate new facts given an existing KG. Next, we look at inductive link prediction, a method that predicts triples for unseen relationships. Our focus then shifts to a more recent method where triples are directly extracted from the parametric knowledge of an LLM.

As a conclusion of this section, we discuss the challenges of LLM-based methods for KG construction. These involve issues with long-tail entities, numerical values, and also the precision of these methods.

5.2.1 Link Prediction

Link prediction is about predicting a missing element of a triple given the other two elements. It includes head entity prediction , relation prediction , and tail prediction .

KG link prediction methods have mostly been studied for static snapshots of a KG. Many approaches, in fact, assume a training phase in which the current state of the KG is used to learn embeddings and other model parameters. Subsequently, many such models can only operate on entities for which an embedding was learned in the training phase. This leaves them incapable of predicting links for any previously unseen entities, such as newly added people or products. Inductive link prediction (ILP), in contrast, focuses on techniques that can predict links to new entities not originally contained in a KG. Furthermore, existing KG embedding-based KG completion approaches frequently fail to leverage textual information and other literal information [65].

To overcome these challenges, current research focuses on incorporating textual information available in the KGs to improve the KG embeddings, boost performance in downstream tasks, and support ILP. The latent representation is learned from textual information using a variety of encoding models such as linear models, convolutional models, recurrent neural models, and LLMs and studied in [123, 142]. In this work, we focus only on LLM-based methods.

The LLM encoder BERT [47] is used in Pretrain-KGE [227] to generate initial entity embeddings from entity descriptions and relations. These embeddings are then fed into KG embedding models to generate final embeddings. MADLINK [15] uses SBERT to generate embeddings from entity descriptions, together with the entity embeddings obtained from structural information. KEPLER [202] offers a unified approach for KG embedding and pre-trained language representation, embedding text-enhanced knowledge and factual knowledge into LLMs. Nayyeri et al. [132] use LLMs to produce representations at word, sentence, and document levels, merging them with graph structure embeddings. Huang et al. [84] propose a model that combines LLMs with other modalities, such as vision, to generate a multi-model embedding space. CoDEx [3] uses a novel loss function driven by LLMs that helps KG embedding models estimate the likelihood of triples based on textual information. While these approaches can leverage the structural information contained in the graph, KG embeddings can not be directly used to predict unknown entities in the ILP setting. To still predict entities within ILP, existing works that combine text embeddings and traditional KG embeddings use only the text embeddings in the ILP setting [124] or apply similarity based heuristics to generate KG embeddings for unseen entities [198].

Instead of considering the graph structure, another line of research directly leverages LLMs for KG Completion. For example, KG-BERT [216] represents a triple as an ordered sequence of head text, including surface form, descriptions, and attributes, relation text, tail text separated with special tokens. KG-BERT [216] optimizes the BERT model on KGs, followed by KG-GPT2 [16] that fine-tunes the GPT-2 model. MTL-KGC [101] enhances the effectiveness of KG-BERT by combining prediction and relevance ranking tasks. PKGC evaluates triple validity by translating it into natural language sentences, while LLMs process these sentences for binary classification. Masked Language Models were introduced to encode KG text, with MEMKGC [38] predicting masked entities using the MEM classification model. Open world KGC [39] expands MEMKGC with modules Entity Description Prediction (EDP) and Incomplete Triple Prediction (ITP), focusing on predicting entities with given textual descriptions. StAR [199] uses Siamese-style textual encoders for text and a scoring module, while SimKGC [200] leverages a Siamese textual encoder. LP-BERT [112] is a hybrid KG completion method that combines MLM encoding for pre-training with LLM and separated encoding for fine-tuning, using a contrastive learning strategy. Also, LLMs such as GPT-3 and GPT-4 have the in-context learning capability which could adapt to new tasks through careful prompt design without fine-tuning the model parameters. Since LLMs are directly capable of predicting entities that are not yet contained in the KG, many works using direct LLM approaches also evaluate their models in the ILP setting [38, 39, 43, 199, 200, 202, 222].

Beyond the described approaches that construct and leverage embeddings for link prediction, LLMs may also be directly used in a prompting setting to find suitable links between entities, with existing methods described in detail in the following Section 5.2.2. As with the previous approaches that purely use LLMs, prompt-based approaches are directly applicable for ILP and are commonly evaluated in this setting [21, 90, 152, 174, 231]. LLMs make it significantly easier to jointly utilize structural and text information for link prediction, while there are still challenges.

Challenge and Opportunities.

-

The generative language model which uses a decoder-only or encoder-decoder architecture cannot ensure that the generated result is already included in the KG. Also, one entity may have multiple natural language names. Thus it is hard to judge the correctness of the generated results from LLMs.

-

Current link prediction models are mostly evaluated on ranking metrics, such as Hit@k and Mean Reciprocal Rank, which requires methods to give a ranked list of candidates. Considering that the number of candidate entities might be huge (e.g., over ten thousand), scoring each candidate entity in inference requires many model predictions which may be problematic for LLMs due to their large parameter size and high computational cost.

-

The key research question of link prediction is how well a method could learn to infer new triples based on existing ones. LLMs are trained based on a massive corpus that might overlap with KGs such as Wikidata [196]. Thus it is not easy to distinguish whether the LLM completes the prediction by utilizing its memory or reasoning over existing facts.

-

LLMs provide several benefits for ILP as demonstrated by the many existing approaches, but their application also has certain drawbacks. Since ILP entities may occur that are not contained within the underlying KG, the task is inherently reliant on auxiliary information. When leveraging LLMs for ILP, many studies focus on improving the extraction of knowledge from LLMs through prompt engineering, which is, by itself, a current popular research area that may directly benefit the domain.

-

While prompting provides promising results, it requires well-designed strategies for predicting multi-token entities and out-of-vocabulary tokens. Further, if required knowledge is not captured by the LLM, e.g., when querying novel concepts that emerged after LLM pre-training, schemes to incorporate further knowledge into the LLM are required. Meanwhile, as feature selection, finding a suitable prompt also needs much searching with many experiments, which is costly especially for those recent commercial LLMs like GPT-4.

-

Alternative methods rely on available high-quality textual descriptions of unknown entities. These methods generally inherit the limitations of classical link prediction, in that they require one-vs-all comparisons against all entity candidates during inference, which may become computationally prohibitive for large KGs [199, 200]. While there are already approaches that address this problem by applying efficient search heuristics, additional structural information, and pre-computation [44, 153, 199, 200], they involve additional memory overhead. As such, efficient strategies for obtaining predictions may provide a promising research direction.

-

Further, the combination of these methods with classical link prediction models has already shown strong performance and is a promising trend followed by increasing numbers of works that further explore the incorporation of structural information into LLM-driven approaches.

Though challenges exist, opportunities lie in designing efficient and effective link prediction methods combining LLMs preserving the efficiency of traditional methods and robustness of language models.

5.2.2 LLMs for KGs: Triple Extraction from LLMs

Traditionally, retrieval and reasoning of relational knowledge have both relied on symbolic knowledge bases [60], that often are constructed using supervised extraction techniques applied to unstructured corpora, e.g. Web archives [220, 190]. More recently, self-supervised LLMs have been investigated for their ability to directly retrieve relational knowledge [233] from their parameters, e.g. through question answering, prompting through the use of cloze-style questions [76, 166] or statement scoring [183]. In this context, the ability of LLMs to retrieve, infer and generalize relational knowledge is seen as a crucial indicator of their capacity to understand and interpret natural language. Even though a range of terms are used in that context, e.g. fact or knowledge retrieval as well as knowledge inference, we refer to the task of accessing relational knowledge from LLM parameters as knowledge retrieval.

Benchmarks and Baselines.

LAMA is the first benchmark dataset to evaluate knowledge retrieval in LLMs [152]. Related works show that knowledge retrieval through prompts is inconsistent with regard to paraphrasing [54, 76], with some types of information guiding LLMs towards more correct answers [26, 151, 35], while others are harmful to their performance [143, 100]. LLMs struggle to retrieve knowledge from low-frequency phenomena [162] and [86] argue that LLMs fail to express large varieties of knowledge when prompted in a zero-shot manner.

Zhong et al. [231] propose that the models’ accuracy may originate from memorizing training data, not actually inferring knowledge. Similar to LAMA, the experiments on a more recent probing work KAMEL [95] confirm that LLMs are still far from the knowledge access capabilities of symbolic knowledge bases. The Knowledge Memorization, Identification and Reasoning test work KMIR [62] reveals that while LLMs struggle to robustly recall facts, their capacity to retain information is determined more by the number of parameters than the training methods, and while model compression can help preserve the memorization performance, it reduces the ability to identify and reason about the information in LLMs from transformer-based language models. Linzbach et al. [116] also present similar findings. LLMs are known to struggle with more complex reasoning tasks [83, 75]. Branco et al. [22] explore generalizability of common-sense reasoning capabilities and the impact of shortcuts in training data.

Biases in Triple Extraction Evaluation.

LLMs may exhibit various types of biases; representation of the majority viewpoint being a common issue due to distributions prevalent within pretraining data [13], neglecting disagreements among multiple viewpoints (e.g. by majority voting) [42]. Prior works investigate individual factors (such as frequency) or LLM biases in other tasks [126], as well as knowledge retrieval [231].

With respect to the interpretation, reliability and generalizability of knowledge retrieval, several studies [22, 26] investigate whether LLMs actually learn transferable generalizations or only exploit incidental shortcuts in the data. [26] explore biases in three different knowledge retrieval paradigms, namely prompt-based retrieval, case-based analogy, context-based inference, finding that decent performance of existing knowledge retrieval baselines tends to be driven by biased prompts that overfit to artifacts in the data, guide the LLM towards correct entity types or unintentionally leak correct answers or additional constraints applicable to the correct answer. In a similar context, [50] discuss the shortcut learning behavior arising due to skewed training datasets, the model, or the fine-tuning process. [169] demonstrate an intriguing similarity between human cognitive biases and those exhibited by LLMs. Using insights from psychology, they analyze the learning and decision-making processes of black-box models to reveal their biases towards right-and-wrong for decision-making. Therefore, rigorous assessment of existing benchmark datasets is necessary for generalizable insights about knowledge retrieval and inference performance, and to facilitate efficient, unbiased knowledge retrieval from LLMs.

Prompt Engineering for Triple Extraction.

Cao et al. [26] propose three paradigms for factual knowledge extraction from LLMs: prompt-based, case-based, and context-based. Results suggest the prompt-based retrieval is biased towards prompt structure. Prompt engineering [10] aims to create prompts that efficiently elicit desired responses from LLMs for a specific task. However, a limited number of manually created prompts only reveal a portion of the model’s encoded knowledge [90], as the response can be influenced by the phrasing of the question. Thus, prompt engineering is a crucial part of knowledge retrieval from LLMs. LPAQA [90] uses an automated mining-based and paraphrasing-based method to generate diverse high-quality prompts, as well as ensemble methods to combine answers from different prompts. Automatic Prompt Engineer [233] uses LLM models like InstructGPT [138] and instruction induction [78] to generate instruction candidates which are then improved by proposing semantically similar instruction variants to achieve human-level performance. Zhou et al. [233] investigate the ability of LLMs, such as GPT-3, to generate high-quality prompts for a variety of tasks.Initial experiments on the role of syntax in knowledge retrieval [116] find a strong dependency on prompt structure and knowledge retrieval performance.

To summarize, prior works have shown that relational knowledge is captured by LLMs to a certain extent. However, there is still insufficient understanding of how performance differs across different kinds of knowledge or relations, for instance, commonsense knowledge compared to entity-centric encyclopedic facts or transversal versus hierarchical relations. In addition, several studies raise questions about LLMs capacity to infer knowledge beyond already-seen statements.

Challenges and Opportunities.

-

Entity Disambiguation: Entity disambiguation is essential for KG construction to ensure unique identification of entities and to maintain consistency across the graph. However, when extracting facts from LLMs, entity disambiguation presents several challenges, since LLMs only operate on word token level. Hence, polysemy and homonymy make it difficult to determine the correct entity when a term has multiple meanings or is spelled the same as others but has different meanings. Also, the need to resolve co-references, where the same entity is mentioned in various ways within a text, further complicates the process. Moreover, the same piece of text can refer to different entities depending on the context, making it a significant challenge to correctly identify and classify the entities. Entities that were not present in the training data or are less common in general can be particularly hard to disambiguate. This can be a frequent issue with newer, less well-known, or very domain-specific entities. These complexities lead to major challenges that need to be addressed: enhancing disambiguation techniques to better handle long-tail entities; developing methods to better understand and utilize context in entity disambiguation; and improving co-reference resolution in such a way that it can be effectively incorporated into KG construction.

-

Long-tail Entities: Existing LLMs still manifest a low level of precision on long-tail entities. Models may begin to generate incorrect information when they fail to memorize the right facts. The answers provided by these models often lack consistency. Incorrect correlations drawn from the pre-training corpus can lead to various biases in KG completion. Whether retrieval-augmented models serve as a viable solution to this problem remains uncertain, as does the potential necessity to adapt pre-training and fine-tuning processes to enhance model robustness in handling long-tail entities.

-

High-Precision: LLMs face challenges in achieving high-precision predictions when performing knowledge retrieval [193]. A potential strategy to derive high-precision KGs from LLMs is to focus on model calibration. However, there are pressing challenges that remain unsolved. How can LLM training be adapted to prioritize high-precision learning? Can LLMs be used for validation purposes? These questions form the crux of the ongoing exploration in this field.

-

Provenance: Extracting factual knowledge directly from LLMs does not provide provenance, the origin and credibility of the information, which presents multiple issues. Without provenance, verifying the accuracy of information becomes challenging, potentially leading to the spread of misinformation. Additionally, bias detection is hindered, as the lack of source information makes it difficult to account for potential biases in the data used for training. Provenance also provides critical context, without which information can be misunderstood or misapplied. Lastly, the absence of source information compromises model transparency, making it hard to evaluate the accountability of the LLMs.

5.3 LLMs for KGs: Ontological Schema Construction

A KG is often equipped with an ontological schema (including rules, constraints, and ontologies) for ensuring quality, enabling easier knowledge access, and supporting reasoning. At the same time, an independent ontology, which usually represents conceptual knowledge sometimes with logics, can also be regarded as a KG. In this part, we describe the interaction between LLMs and approaches to learn ontological schemas as well techniques that can exploit LLMs to manage ontologies and rules.

5.3.1 Constraint and Rule Mining from KGs

The existing KGs are mostly obtained from large-scale data extraction pipelines, which are notoriously brittle and can introduce errors and inconsistencies in these graphs [48, 158]. Moreover, a KG is never considered complete since the closed world assumption does not hold [48, 146], i.e., it is not possible to conclude that a missing fact is false unless it contradicts another existing fact. Instead, we usually consider that in a KG it holds the open-world assumption, that is a missing fact is simply considered as unknown.

Practical applications impose high demands in terms of (semi-)automatic methods for data quality assessment and validation [102, 157, 2]. Since KGs contain huge amounts of data, it is not feasible to manually inspect and correct their errors. Therefore, a common approach is to instantiate rules and constraints that can be automatically enforced. These constraints express dependencies and conditions that the KG needs to satisfy at all times and that should not be violated by the introduction of new facts or their deletion. In KGs, rules and constraints can take the form of Graph Functional Dependencies [57], declarative first-order logic rules [61], entire ontologies [69], or validating shapes [102, 155]. Once a set of rules or constraints are instantiated, the next step is to either identify which entities or facts in the KG violate any of them, or employ them to delete erroneous information, or, finally, to employ them to deduce any missing information [58, 159].

Example 1.

The following rules could apply for a subset of a graph describing people and their relationships:

Where states that hasChild is the inverse equivalent relation of hasParent, states that for each person in the KGs for which we know the parent-child relationship we should know the birth year, and states that if is a child of then should be born before .

Nonetheless, a fundamental challenge is how to generate such rules and constraints. Specifying them manually is prohibitively difficult and expensive [2, 157]. On the one hand, the domain experts, who know the semantics for the dataset at hand, may not have the skill set or the background necessary to formally express those rules. Even when skilled, domain experts would require a substantial amount of manual work to exhaustively materialize a complete list of such rules [158]. Therefore, in the past decade, we have witnessed an increased interest in methods that can (semi-) automatically extract rules and constraints [158, 33]. In all those cases, the data in the KG is exploited as a silver standard [146]. That is, while the data are not perfect, we can still assume it is at least of reasonable quality. This approach works for rules that can find frequent positive examples. The challenge arises for rules that need to govern entities and facts that are rare, and for negative rules that need to prohibit some facts from appearing. To handle these cases, contextual information, e.g., documents and other user input provided in textual form, can be exploited in many different ways. In this context, LLMs bring a number of important opportunities:

-

ability to extract contextual information from a given text, e.g., a document describing rules in natural language;

-

information extraction from contextual data, like domain knowledge in textual form explicitly used during training;

-

automatic generation of new rules via deductive chain-of-thought prompting;

-

understanding rules vocabulary in context of a given domain description expressed in natural language, allowing to reconcile rules with synonyms and polysemy;

-

providing explanations of rules or generating candidate validating or counterfactual examples.

Consider, for example, a rule where the relationships hasParent has been used, an LLM can be queries to identify whether hasParent between two entities refers more likely to the semantic meaning of being biologically descended or being owned or controlled. LLMs can be used to filter out candidate constraints, e.g., given their ability to mimic simple common sense reasoning, rules can be translated in the form of questions to the LLM, e.g., “if X is child of Y is it true that the birth year of Y comes always before the birth year of X?”. Thus, an LLM can be used in support or replacement of a human annotator towards generated rules.

One of the most promising abilities of LLMs is parsing long texts. In companies and organizations, documents exist that contain reference governing information, e.g., procedures, regulations, and specifications. Here we see an untapped opportunity in parsing these documents in relation to the entities and predicates in the KG to extract constraints. Yet, the challenge arises in the fact that the LLM needs to use the correct vocabulary of entities and relations and the correct rule syntax. Recent advancements in program synthesis show a possible way to the challenge.

Finally, an even more fundamental challenge is that of transcending the usage of LLMs for NLP alone, and using them directly on large sets of facts within a KG. We can think of this setting as a special kind of multi-modal LLMs, where the KG is a specific modality. We have seen similar approaches in the past when word embedding approaches have been translated to knowledge graphs [165]. This brings to the question of whether it is possible to train LLMs to treat the task of rule generation as, for example, a graph embedding or summarization task [11, 28]. This would require then the ability to perform both inductive and abductive reasoning and treat rules as summaries from the set of facts in the KG.

A Rule Mining Case with ChatGPT.

Let’s have a look at the following real example asking ChatGPT to generate rules.

Example 2.

We ask ChatGPT “Please list three rules that could help to infer one person’s father. And output it in a first-order rule form.” The following is the output we get:

“Certainly! Here are three rules expressed in first-order logic form that could help infer a person’s father:

Rule 1: This rule states that for all individuals x and y, if there exists a ’child’ relationship between x and y and y is male, then y is the father of x.

Rule 2: This rule states that for all individuals x and y, if there exists a ’biologicalChild’ relationship between x and y, then y is the father of x.

Rule 3: This rule states that for all individuals x and y, if there exists a ’fatherOf’ relationship between y and x, then y is the father of x.

These rules, expressed in first-order logic, demonstrate how the relationships between individuals can be used to infer the father of a person within a KG.”

From the above example, we can see that ChatGPT gives three rules that can possibly infer the father relationship. Rule 1 is correct which is consistent with the definition of father; Rule 2 is a rule with high probability; and Rule 3 is also correct since father and fatherOf are inverse relations. This demonstrates LLMs have rule knowledge and could express it in a formal form following instruction, thus they could serve as experts for automatic rule construction. To this end, however, we need to align the rules from the LLM to the existing knowledge in the KG, and feed the LLM with the existing knowledge and the context encoded in the KG. Both are consistent with discussion about the company and family examples.

Challenges and Opportunities.

-

Retrieval augmented rule extraction and generation: LLMs can be given access to reference documents and KG and use this access to produce candidate rules informed by the textual content of the documents as well.

-

LLM guided rule explanation and validation: LLMs can be used to identify from a list of generated rules those that are more likely to hold within a given context. Further, it can generate explanations on the meaning of the rule and its validity.

5.3.2 Ontology Refinement

Ontology refinement includes quite a few topics like knowledge completion (e.g., subsumption prediction, complex concept learning and new concept placement), erroneous knowledge detection and repair (e.g., inconsistency checking) and knowledge canonicalization (e.g., entity renaming). Besides formally represented knowledge, real-world ontologies, such as the widely used medical ontology SNOMED CT111https://www.snomed.org/ and food ontology FoodOn222https://foodon.org/, also include a lot of meta information defined by different annotation properties for usability, such as entity labels, synonyms and natural language definition. Taking the concept obo:FOODON_00002809 in FoodOn as an example, it has not only formal knowledge such as named super concepts and logical restrictions, but also labels and synonyms (e.g., “edamame”), definitions (e.g., “Edamame is a preparation of immature soybean …”), comments and so on. These meta information, especially the natural language text, further motivates people to use LLMs for ontology refinement.

For a refinement task, usually there are quite a few existing examples in the original ontology. Therefore, a straightforward solution, which has been adopted by most current methods, is fine-tuning a Pre-trained Language Model such as BERT together with an attached classifier. One typical method is BERTSubs [29] which is to predict the subsumption relationship between two named concepts, or between one named concept and one complex concept. It concatenates the corresponding texts of the two candidate concepts with special tokens as the input of a model composed of a pre-trained BERT and a logistic regression classifier, and fine-tunes the model with the existing subsumptions in the target ontology. For a named concept, the text could be either its name (label or synonym), or its name in combination with a textual description of its surrounding concepts; while for a complex concept, the text is its description (a.k.a. verbalisation). Another typical work is [119] which fine-tunes BERT and predicts the position to place in SNOMED CT for a new concept. Note that there are also some language model-based methods in taxonomy curation, such as [171] which fine-tunes BERT for taxonomy edge completion and GenTaxo [221] which fine-tunes a BERT variant named SciBERT for predicting positions that need new concepts. They can be directly applied or easily extended to refine an ontology’s concept hierarchies.

Exploiting LLMs is a promising direction for ontology refinement, but it still needs much effort before they become practical tools. DeepOnto [73], which is a Python-based package that can support quite a few ontology engineering tasks, has already included some tools for ontology refinement and alignment using LLMs, but more development is needed to make it more accessible and to support generative LLMs like LLaMA and GPT-4. One obvious challenge is that those very recent generative LLMs have been rarely explored for ontology engineering. However, we think the following two research challenges are more fundamental.

Challenges and Opportunities.

-

Exploiting the graph structure and logic of an ontology together with its text. Currently, LLM fine-tuning-based methods can well utilize the text of individual entities, but their other formal semantics are often ignored or not effectively incorporated. Besides fine-tuning with samples constructed by some templates, more LLM techniques such as prompt learning and instruction tuning could be considered.

-

Combing symbolic reasoning with LLM inference. Symbolic reasoning, such as consistency checking in OWL ontologies, can still play a role e.g., in validating the knowledge inferred by LLMs. One aspect of incorporating symbolic reasoning is constructing samples for LLM fine-tuning and extracting prompts for LLM inference, while another aspect is the synergized framework [235] where LLM inference and symbolic reasoning work iteratively.

5.3.3 Ontology Alignment

The content of one single ontology is often incomplete and many real-world applications rely on cross-domain knowledge. Ontology alignment (a.k.a. ontology matching), which is to identify cross-ontology mappings between entities that have an equivalent, subsumption or membership relationship, thus becomes especially important for knowledge integration. The entity can be a concept (class), an individual (instance) or a property. Traditional systems (e.g., LogMap [91]) heavily rely on lexical matching and optionally use symbolic reasoning to remove mappings that lead to logical conflicts; while some recent methods combine these techniques with machine learning techniques like feature engineering, semantic embedding and distant supervision for better performance (e.g., LogMap-ML [30]). Especially, when the ontologies have a large ratio of assertions (large ABoxes) and the task is to discover equivalent individuals, ontology alignment is very close to the KG entity alignment task that has been widely investigated in recent years using KG embeddings [228]. As in ontology refinement, exploiting the textual information by applying LLMs is a promising direction for augmenting ontology alignment.

The study of LLM application in ontology alignment is similar to ontology refinement. Pre-trained language models such as BERT have been applied via fine-tuning [133, 72]. BERTMap [72] is a typical system that has achieved state-of-the-art performance in many biomedical ontology alignment benchmarks. It fine-tunes a pre-trained LM with synonym pairs extracted from the original ontologies and the potentially given mappings, and combines the predicted concept equivalence scores with lexical matching scores and reasoning for the mappings. Those recent LLMs like GPT-4 have not been applied in ontology alignment, as far as we know, and the two fundamental research challenges mentioned in ontology refinement are applicable in ontology alignment. Besides, ontology alignment has another critical challenge.

Challenges and Opportunities.

-

Evaluating LLM-based ontology alignment systems. Novel evaluation protocols with new metrics are needed to fairly and efficiently compare LLM-based systems even with incomplete ground truth mappings [74]. Meanwhile, the semantics from the textual meta information and the LLM may be inconsistent with formal semantics defined in ontologies, and thus it is sometimes hard to determine whether a mapping by an LLM-based system is true or not.

5.4 KGs for LLMs: Training and Augmenting LLMs

In Sections 5.1 to 5.3, we discussed three different aspects on using LLMs for KGs. In this section, we look into the other direction, i.e., using KGs for LLMs. There are a few dimensions here. Firstly, KGs can be used as training data for LLMs. Secondly, triples in KGs can be used for prompt construction. Last but not least, KGs can be used as external knowledge in retrieval-augmented language models.

5.4.1 KGs for (Pre-)Training Language Models

KGs typically incorporate data derived from sources that are deemed highly reliable. The extracted data goes through a post-processing phase before it is certified by human evaluations. This vetted information from KGs has been incorporated into the pre-training corpus to complement natural language text, which on its own, can result in limited information coverage [216, 150, 1, 212].

Using factual knowledge from KGs to pre-train LLMs has also infused structured knowledge [130]. This integration of KGs with LLMs, along with efficient prompts, has made it convenient to inject world knowledge and incorporate new evolving information into language models [49]. Additionally, knowledge expressed in high-resource language KBs has been transferred into LMs tuned for low-resource languages [232, 118].

Furthermore, grounding knowledge from KGs to pre-train LMs has shown improvements in performance on generation and QA tasks [34, 164, 136]. In another approach, [192] proposed an interpretable neuro-symbolic KB, where the memory consists of vector representations of entities and relations from an existing KB. These representations are augmented to an LM during pre-training and fine-tuning, enabling the model to excel in knowledge-intensive QA tasks.

Challenges and Opportunities.

-

High-quality knowledge injection: KGs can prove to be a valuable source of knowledge, fostering a more profound understanding of the relationships between entities within LLMs – this is particularly true as existing KGs such as Wikidata [196] are committed to ensuring high precision. Moreover, KGs’ ability to include dynamic updates with the latest information can provide significant advantages during pre-training in comparison to outdated textual corpus.

-

Information duplication: KGs encapsulate direct and indirect relations, and one can induce the same information using multiple facts. Such a kind of complex linking between entities is generally hard to find in a single textual source. Therefore, leveraging KGs can enhance the LLM’s understanding and reasoning capacities about popular as well as long-tail entities.

5.4.2 KGs for Prompt Construction

The attention received by the integration of KGs and LLMs has grown recently. On the one hand, there is the explored direction of prompting LLMs for collecting and distilling knowledge in order to make it available to the end-users. On the other hand, there is the less explored research direction where KGs are used in synergy with prompts in order to enhance LLMs with capabilities making them more effective and, at the same time, trustworthy. A number of studies have leveraged KGs to enrich and fine-tune prompt creation resulting in a significant increase in prompt quantity, quality, and diversity compared to manual approaches. KGs have been employed in single and in multi-turn scaffolding prompts at scale, powered by numerous traversal paths over KGs with low authoring cost while considering the meaningful learning patterns [107]. Other studies have investigated how incorporating explicit knowledge from external sources like KGs can help prompt engineering, especially by giving additional contexts (e.g., attributes, K-hop neighbors) of the entities in order to help the LLMs to generate better predictions [23]. Approaches like KnowPrompt [35] use KGs to incorporate semantic and prior knowledge among relation labels into prompt-tuning for relation extraction, enhancing the prompt construction process and optimizing their representation with structured constraints. Certain studies have utilized LLMs and prompts in the task of reasoning over KGs [40], e.g., LARK uses entities and relations in queries to find pertinent sub-graph contexts within abstract KGs, and then, performs chain reasoning over these contexts using LLM prompts of decomposed logical queries outperforming previous state-of-the-art approaches by a significant margin.

Challenges and Opportunities.

The current research in the field of KG utilization for prompt creation predominantly centers around LLMs, which are considered to have relatively lower efficacy compared to LLMs. LLMs present significant potential for advancing prompt creation methodologies in conjunction with KGs. We may summarize this perspective within the following four challenges:

-

KGs can be employed to automatically extract and represent relevant knowledge to generate context-aware writing prompts. Analyze and understand the relationships between different writing prompts, enabling the generation of prompts that build upon each other.

-

KGs can be combined with LLMs to facilitate the interactive and dynamic generation of prompts, adapting to user feedback and preferences in real-time. Furthermore, the use of KGs in prompt creation has opened up possibilities for explainability and interpretability. Since KGs provide a transparent representation of knowledge, the prompts generated from KGs can be easily traced back to their underlying sources.

-

KGs can integrate into prompts the definitions of guards exploited during the generative task. Such guards may enhance the trustworthiness of the information generated by LLMs and make them more compliant with specific domain-wise or context-wise constraints.

-

KGs can create prompts that ask questions (e.g., inferring missing relations in an incomplete KG) that trigger KG complex reasoning capabilities and intermediate reasoning steps.

The integration of KGs within the prompt construction activities will allow us to answer the following preparatory set of research questions. For each research question, we provide a link to the challenges mentioned above, aiming to identify appropriate research pathways.

-

RQ1: How can KGs be integrated into existing prompts to enhance the effectiveness (including relevance, absence of bias, privacy-preservation) of the information extracted from LLMs? C1, C2, C3.

-

RQ2: How can KGs be exploited to drive the generative capabilities of LLMs in order to properly address the whole ethical constraints of AI-based solutions? C2, C3.

-

RQ3: What are the optimal approaches to generate KGs-based prompts that enhance reasoning capabilities? C4.

Also, in the following three scenarios, the community would benefit from tackling the above-mentioned challenges and research questions.

-

KGs for Hallucination Detection in LLMs: The reliability of LLMs is greatly affected by the hallucination problem, where they generate inaccurate information. Despite attempts to address it, the issue of hallucination is likely to persist in the realm of LLMs for the foreseeable future. To aid in the detection of hallucinations, KGs-based prompting aims to offer reliable information that can serve as a foundation. By combining LLMs and KGs, researchers can develop a comprehensive prompt-based fact-checking model that can identify hallucinations in various domains.

-

KGs for Editing Knowledge in LLMs: LLMs possess the ability to store extensive real-world knowledge, but they struggle to exploit prompts to update their internal knowledge to reflect real-world changes. KGs-based prompts offer a potential solution for modifying knowledge in LLMs, but they are restricted to handling basic tuple-based knowledge in KGs. Indeed, even if the entire LLM undergoes re-training, the knowledge presented through prompts would likely be assimilated within the vast network structure of the LLM.

-

KGs for Black-box LLMs Knowledge Injection: While pre-training and knowledge editing methods can bring LLMs up to date with the latest information, they require access to the internal structures and parameters of LLMs. However, many cutting-edge large LLMs only offer APIs that allow users and developers to interact with them, keeping their internal workings hidden from the public. Consequently, traditional KG injection techniques that involve modifying LLM structures with additional knowledge fusion modules cannot be employed. One potential solution is to convert various types of knowledge into different text prompts. However, it remains an area of ongoing research to determine if these prompts can effectively adapt to new LLMs. Additionally, the approach of using KGs-based prompts is constrained by the length of input tokens accepted by LLMs. Therefore, the question of how to enable effective knowledge injection for black-box LLMs still remains unanswered.

5.4.3 Retrieval Augmented Methods

There are a few reasons for why retrieval augmented methods are necessary for LLMs to obtain external knowledge. One reason is to address the problem of knowledge cutoff, i.e., LLMs are not aware of the events that happened after their training. Also, although parametric knowledge would increase when the size of parameters increases, training LLMs is expensive; e.g., GPT-3 (175B parameters) costs $4.6 million to train, and PaLM (540B parameters) costs $17 million. In fact, research suggests that the obtained knowledge from such training is mainly about popular entities [125]. Furthermore, for domain specific applications, there might be some significant knowledge that is not yet in LLMs, including private and business critical knowledge that cannot be put into LLMs.

One idea to deal with the above lack of (updated) knowledge is to edit the knowledge in LLMs. Knowledge Editing methods assume that we know which entries are wrong and how to fix them. An obvious strategy is to retrain and fine-tune the model based on the modified data. However, apart from being costly, retraining cannot guarantee that erroneous data will be corrected. Another strategy is to develop a hyper-network to learn a parameter shift for the base model. De Cao et al.[45] trained a hyper-network, KnowledgeEditor, to modify a fact and used Kullback-Leibler (KL) divergence-constrained optimization to alleviate the side effect on other data/knowledge that should not be changed. However, many state of the art methods do not perform well when editing multiple edits, as they use the same strategy to process multiple edits and ignore the relation between different edit gradients, resulting in a “zero-sum” phenomenon, where the inter-gradient conflict will inevitably cause some data modifications to fail. Han et al. [71] design explicit and implicit multi-editor models to learn diverse editing strategies in terms of dynamic structure and dynamic parameters respectively, allowing to deal with the conflict data in an efficient end-to-end manner.

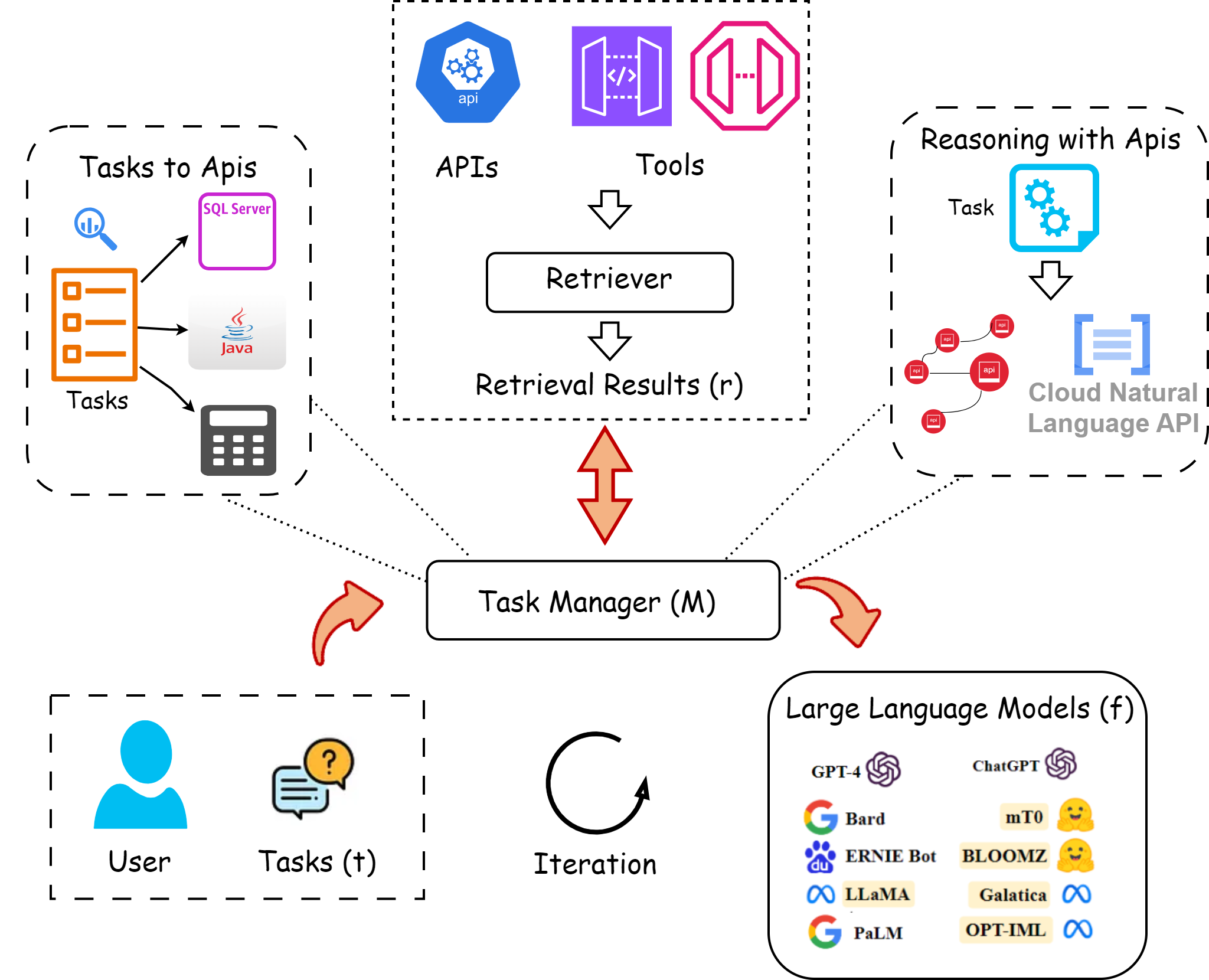

Alternatively, people could look into retrieve-generate architectures for building retrieval augmented generation models. In this setting, we assume that the external sources contain the needed knowledge for addressing users’ requirements. Initially, most such methods use unstructured passages as external knowledge. RAG [109] outperforms DPR [99] by marginalizing the retrieval step to train the generator and retriever jointly with the supervision of the label answer. RAG tends to focus too much on label-relevant passages, rather than question-relevant passages and answers. Huang et al. [85] address this issue by incorporating rich answer encoding through Dense Knowledge Similarity (DKS) and Retriever as Answer Classifier (RAC). FiD [87] encodes the concatenation of the passages retrieved by pre-trained DPR and the original question separately, and then fuses them with concatenation to the decoder. It is expected that structured knowledge will be the main source of external knowledge (cf. Figure 2), as passages often contain noise. Knowledge Graphs can be used directly as external knowledge. For example, KG-FiD [219] utilizes knowledge graphs to further enhance the retrieval quality by establishing the structural relationship among the retrieved passages. Another external source is databases. Vougiouklis et al. [195] propose an efficient NL2SQL approach, transforming questions in natural language into SQL over given database schemas, outperforming ChatGPT under the zero-shot setting while achieving about 10 times speedup over other state of the art NL2SQL engines.

As illustrated in Figure 2, it is important to note that there are different tasks for retrieval augmented methods to enhance large language models. In addition to the above mentioned retrieval augmented methods for prompting, there are also methods for other tasks. For example, Zhao et al. [229] propose the Verify-and-Edit framework, which makes use of retrieval augmented methods to validate texts generated by large language models for individual steps within CoT-style reasoning chains. Yang et al. [214] proposes LLMA accelerator to make use of retrieval augmented methods for speeding up decoding of large language models. Gao et al. [63] propose the RARR system, which retrieve evidence for texts generated by large language models for referencing purpose. Apart from retrieval augmentation for generations, retrieval augmented methods can be used for pre-training and fine-tuning [70] as well. Knowledge graphs are expected to play key roles in these tasks.

Challenges and Opportunities.

To sum up, retrieval augmentation is a very promising direction. There are a few pressing challenges:

-