Trust, Accountability, and Autonomy in Knowledge Graph-Based AI for Self-Determination

Abstract

Knowledge Graphs (KGs) have emerged as fundamental platforms for powering intelligent decision-making and a wide range of Artificial Intelligence (AI) services across major corporations such as Google, Walmart, and Airbnb. KGs complement Machine Learning (ML) algorithms by providing data context and semantics, thereby enabling further inference and question-answering capabilities. The integration of KGs with neural learning (e.g., Large Language Models (LLMs)) is currently a topic of active research, commonly referred to as neuro-symbolic AI. Despite the numerous benefits of KG-based AI, its growing ubiquity within online services may lead to a loss of self-determination for citizens, which is a fundamental societal issue. The more we rely on these technologies, which are often centralised, the less citizens will be able to determine their own destinies. To counter this threat, AI regulation, such as the European Union (EU) AI Act, is being proposed in certain regions. The regulation sets out what technologists need to do, and it raises questions such as: How can the output of AI systems be trusted? What is needed to ensure that the data fuelling these artefacts, and their inner workings, are transparent? How can AI be made accountable for its decision-making? This paper conceptualises the foundational topics and research pillars needed to support KG-based AI for self-determination. Drawing upon this conceptual framework, we illustrate and analyse challenges and opportunities for citizen self-determination in a real-world scenario. As a result, we propose a research agenda aimed at accomplishing the recommended objectives.

Keywords and phrases: Trust, Accountability, Autonomy, AI, Knowledge Graphs

Category: Vision

Funding: Luis-Daniel Ibáñez: Partially funded by the European Union’s Horizon Research and Innovation Actions under Grant Agreement nº 101093216 (UPCAST)

Copyright and License: Maria-Esther Vidal; licensed under Creative Commons License CC-BY 4.0

2012 ACM Subject Classification: Social and professional topics → Computing / technology policy; Computing methodologies → Knowledge representation and reasoning; Human-centered computing → Collaborative and social computing theory, concepts and paradigms; Security and privacy → Human and societal aspects of security and privacy; Computing methodologies → Distributed artificial intelligence

DOI: 10.4230/TGDK.1.1.9

Received: 2023-06-30

Accepted: 2023-11-17

Published: 2023-12-19

Part Of: TGDK, Volume 1, Issue 1 (Trends in Graph Data and Knowledge)

Journal and Publisher: Transactions on Graph Data and Knowledge, Schloss Dagstuhl – Leibniz-Zentrum für Informatik


1 Introduction

Modern Artificial Intelligence (AI) can be traced back to a workshop held at Dartmouth College in the summer of 1956 [66]. It is most commonly defined as the use of computers to simulate human intelligence, in particular human reasoning, learning, and problem-solving. Since 1956, AI has gone through periods of increased interest and funding, as well as “AI Winters” in which overall funding was reduced, such as the one that followed the 1973 Lighthill report [63]. Over the last few years, however, funding and interest in AI have been high, and both surged in November 2022. That month, OpenAI announced ChatGPT, a Generative AI application that exposed the power of Large Language Models (LLMs) to the general public. Since its release, ChatGPT has become the fastest-growing app in history, reaching 100M users in just two months, and it is now estimated to have 200M users. Generative AI will continue to grow following significant investment by Microsoft in OpenAI and announcements by Microsoft and Google on how Generative AI will be embedded in future products [36]. Alongside this growth, data-centric AI [114] recognises the immense value of data as a crucial resource for training, optimising, and evaluating AI systems. Databricks, a prominent AI company, has defined data-centric AI as the challenge of designing processes for data collection, labelling, and quality monitoring for machine learning (ML) datasets [85]. It highlights three primary research directions: the need for continuous re-running and re-training, actionable monitoring, and the difficulty of incorporating data that is inaccessible to human annotators due to privacy concerns. Knowledge Graphs have been used as both a resource and a structure to support data-centric AI processes.

The term Knowledge Graph (KG) was popularised by Google in 2012. It usually refers to a knowledge structure that uses a graph data model to integrate data. KGs are strongly linked to the work of the Semantic Web community, which began around 2001 with a seminal paper by Tim Berners-Lee [13]. The Semantic Web initiative produced the stack of web standards on which KGs are based. These include the Resource Description Framework (RDF), in which data is encoded as subject-predicate-object triples, and the Web Ontology Language (OWL), a family of knowledge representation languages largely based on description logics. The common theme of these semantic representations is that they facilitate the publishing, use, and re-use of data at web scale. In particular, they allow disparate, heterogeneous data sources to be integrated continuously at scale. Over the past decade, KGs have become a mainstay of several key large-scale online applications. For example, KGs underpin Google Search, which handled 5,900,000 searches in a single minute in April 2022. In that same minute, 1,700,000 pieces of content were shared on Facebook, 1,000,000 hours of content were streamed, and 347,200 tweets were posted on Twitter. All of this content and data is linked to a plethora of AI services that are increasingly based on KGs, that is, on machine-readable data and schema representations built on a web stack of standards. These AI services cover a wide range of areas, including content recommendation, user input prediction, and large-scale search and discovery. They also form the basis of the business models of companies such as Google, Netflix, Spotify, and Facebook. Given the above, we define KG-based AI as an AI system (replicating some aspect of human intelligence) that is based on a KG, possibly one that uses the web standards produced by the Semantic Web community.
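The following minimal sketch makes the triple model concrete. It uses plain Python and no RDF library. It stores subject-predicate-object triples and answers a single triple pattern in which `None` plays the role of a variable. The `ex:` identifiers are hypothetical placeholders. A real deployment would use full IRIs and an RDF store queried with SPARQL.

```python
from typing import Iterator, Optional, Set, Tuple

Triple = Tuple[str, str, str]

# A tiny in-memory graph; the "ex:" prefixed names are hypothetical identifiers.
graph: Set[Triple] = {
    ("ex:London", "rdf:type", "ex:City"),
    ("ex:London", "ex:capitalOf", "ex:UnitedKingdom"),
    ("ex:Paris", "rdf:type", "ex:City"),
    ("ex:Paris", "ex:capitalOf", "ex:France"),
}

def match(g: Set[Triple], s: Optional[str] = None, p: Optional[str] = None,
          o: Optional[str] = None) -> Iterator[Triple]:
    """Yield the triples matching a pattern; None behaves like a SPARQL variable."""
    for triple in g:
        if all(term is None or term == value for term, value in zip((s, p, o), triple)):
            yield triple

# Which entities are cities? (cf. SELECT ?x WHERE { ?x rdf:type ex:City })
cities = sorted(t[0] for t in match(graph, p="rdf:type", o="ex:City"))
print(cities)  # ['ex:London', 'ex:Paris']
```

In this model a graph is just a set of triples, so merging two sources reduces to a set union (`graph | other_graph`). Real integration must additionally align identifiers and schemas, and this is where shared vocabularies and ontologies come in.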

In addition to privacy concerns, there is growing worry about how personal data can be abused and, consequently, how AI services impinge on citizen rights. For example, the over-centralisation of data and its misuse led Sir Tim Berners-Lee to call the Web “anti-human” in a 2018 interview [18]. Since 2016, hundreds of United States (US) Immigration and Customs Enforcement employees have faced investigations into abuse of confidential law enforcement databases, with allegations ranging from stalking and harassment to passing data to criminals [69]. Much of the legislation proposed today aims to ensure that digital platforms, including AI platforms, provide real societal benefit. Within Europe, the proposed European Union (EU) AI Act (https://artificialintelligenceact.eu/) aims to support safe AI that respects fundamental human rights. The regulation sets out what technologists need to do. The concept of data self-determination, which is often used in a legal context, implies that individuals not only know who knows what about them but can also influence the processing of data that concerns them [60]. Data processing is nowadays often conducted by opaque AI algorithms behind corporate firewalls, sometimes even without our knowledge, so data self-determination is harder than ever. When it comes to trust in web data and services, Berners-Lee and Fischetti [12] envisaged an “Oh yeah?” button embedded in Web browsers that would justify why a page or a service should be trusted. Alas, their vision was never realised in popular web browsers. A Linked Data browser prototype, the Tabulator, did incorporate this feature in a Justification UI (http://dig.csail.mit.edu/TAMI/2008/JustificationUI/howto.html#useTab). Instead, we have dedicated websites for company reputation checks, e.g., the Ecommerce Europe Trustmark (https://ecommercetrustmark.eu/), and fact-checking websites for checking the validity of information posted online, such as Snopes (https://www.snopes.com/). Some automated fact-checking techniques have been proposed [87]. However, they only address trust in information resources and cannot provide any guarantees with respect to AI-based data processing. As we move beyond trust towards accountability, policies have already been used to specify legal data processing requirements that serve as the basis for automated compliance checking (e.g., [82]). But what happens when service providers or AI algorithms do not comply? How far can technology go in helping us determine non-compliance and hold service providers accountable for their actions?

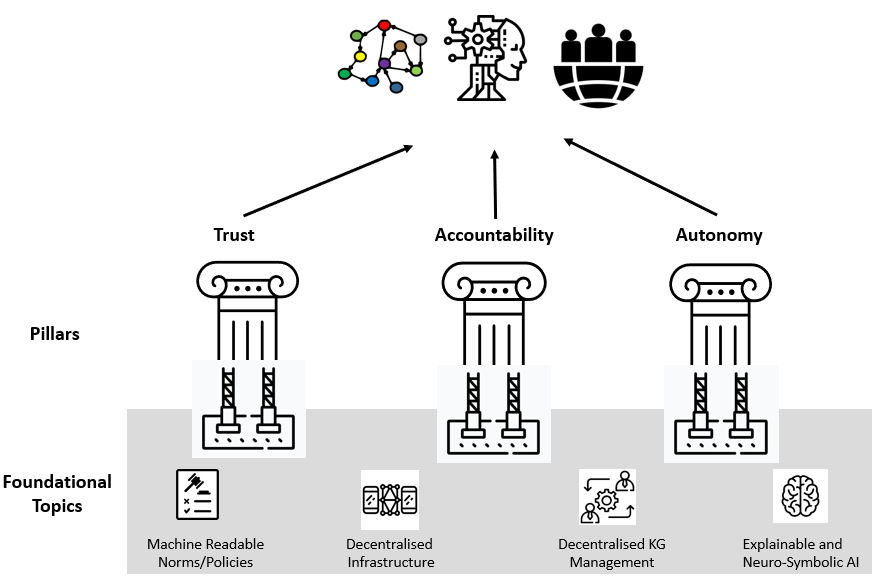

In this paper, we propose a research agenda for ensuring that KG-based AI approaches contribute to user self-determination instead of hindering it. Our vision, depicted in Figure 1, is structured around three pillar research topics (trust, accountability, and autonomy). These represent the desired goals for how AI can benefit society and facilitate self-determination. The pillars combine fundamental principles of the proposed EU AI Act and self-determination theory. Both trust and accountability are imperative for safeguarding against adverse impacts caused by AI systems, while autonomy is critical for ensuring that individuals are able to determine their own destinies. The pillars are supported by four foundational research topics:
- machine-readable norms and policies, which humans need in order to declare regulatory frameworks and privacy and usage constraints that can be interpreted by the machines processing their data;
- decentralised KG management and decentralised infrastructure, which provide alternatives to centralised approaches in which a single entity controls a whole process and which are therefore prone to abuse of power;
- explainable neuro-symbolic AI, which clearly communicates and justifies the decisions AI systems make.

We posit the following research questions:

- Q1: What are the key requirements for an AI system to produce trustable results?
- Q2: How can AI be made accountable for its decision-making?
- Q3: How can citizens maintain autonomy as users or subjects of KG-based AI systems?

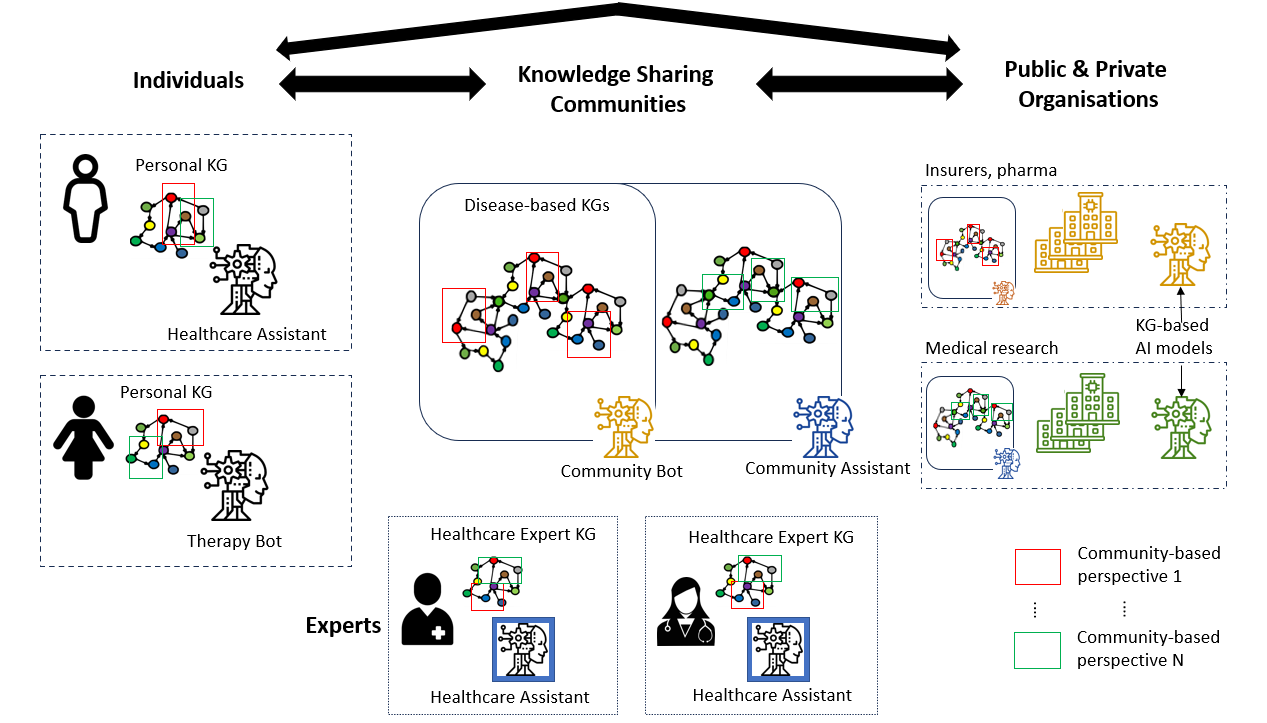

To facilitate exposition, we ground our discussion in a healthcare scenario inspired by the recently proposed regulation on the European Health Data Space (https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A52022PC0197). The regulation aims to ensure that “natural persons in the EU have increased control in practice over their electronic health data”. It also aims to facilitate access to health data by various stakeholders in order to “promote better diagnosis, treatment and well-being of natural persons, and lead to better and well-informed policies”. The proposed healthcare scenario, illustrated in Figure 2, comprises the following actors and interactions:

- Individuals manage their Personal Knowledge Graphs (PKGs) (aligned with the original Semantic Web vision and modern interpretations [7, 47]). They collect knowledge about their medical conditions, symptoms, treatments, reactions to treatments, etc. Individuals get services from KG-based AI applications that utilise their PKGs, e.g., therapy bots or health assistants.
- Experts in healthcare also have PKGs where they collect their knowledge about diseases, results of the treatments they have suggested in the past, links to general medical knowledge graphs, etc. Experts may also be assisted by KG-based AI models.
- Knowledge sharing communities are spaces where individuals and healthcare experts may share subsets of their PKGs in the context of specific knowledge, e.g., diseases. We call these community-based perspectives. Perspectives from different contributors are aggregated into community KGs (e.g., disease-based). AI applications use these KGs for community benefit, e.g., assessing if a treatment that worked for an individual may work for a different individual.
- Public and private organisations may negotiate access to data and knowledge from communities to train large KG-based AI models to either improve internal processes or power products sold to communities, experts, or individuals, completing the cycle.
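The sharing step in this scenario can be sketched under simplifying assumptions:
- each PKG is a set of triples;
- an individual shares only the triples whose subject falls within a chosen topic (here a hypothetical `ex:Diabetes` scope);
- the community KG records every shared triple together with the set of contributors who supplied it, so that perspectives remain attributable.

All identifiers and the topic-scoping rule are hypothetical illustrations, not part of the scenario as specified.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Triple = Tuple[str, str, str]

def share_subset(pkg: Set[Triple], topic_subjects: Set[str]) -> Set[Triple]:
    """Select the part of a PKG its owner agrees to share: triples about the topic."""
    return {t for t in pkg if t[0] in topic_subjects}

def aggregate(perspectives: Iterable[Tuple[str, Set[Triple]]]) -> Dict[Triple, Set[str]]:
    """Merge shared subsets into a community KG, remembering who contributed each triple."""
    community: Dict[Triple, Set[str]] = defaultdict(set)
    for contributor, triples in perspectives:
        for t in triples:
            community[t].add(contributor)
    return dict(community)

alice_pkg = {
    ("ex:Diabetes", "ex:treatedWith", "ex:Metformin"),
    ("ex:Alice", "ex:livesIn", "ex:Dublin"),  # private: outside the shared topic
}
dr_bob_pkg = {
    ("ex:Diabetes", "ex:treatedWith", "ex:Metformin"),
    ("ex:Diabetes", "ex:symptom", "ex:Fatigue"),
}
topic = {"ex:Diabetes"}
community_kg = aggregate([
    ("alice", share_subset(alice_pkg, topic)),
    ("dr_bob", share_subset(dr_bob_pkg, topic)),
])
print(community_kg)
```

Keeping contributor sets, rather than merging triples anonymously, lets community applications weigh how many independent perspectives support a statement. The same attribution can also be used to honour later withdrawals.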

The remainder of the paper is structured as follows: Section 2 introduces the necessary background in terms of KG-based AI. Section 3 highlights the importance of trust, accountability, and autonomy when it comes to ensuring that AI benefits society. Section 4 presents several KG-based tools and techniques that can be used to facilitate trust, accountability, and self-determination. In Section 5, we propose a research roadmap that includes several challenges and opportunities for KG-based AI that benefits individuals and society. Finally, we conclude and outline important first steps in Section 6.

2 Knowledge Graph-based AI

In his seminal book, “Thinking, Fast and Slow”, Daniel Kahneman [49] presents a comprehensive theory of human intelligence, offering profound insights into the workings of the human mind. This groundbreaking work separates intuition from rationality in problem-solving and characterises them as two sets of abilities, or systems. System 1 operates at an unconscious level, generating responses effortlessly and swiftly. In contrast, System 2 requires conscious attention and concentration, enabling responses that need complex computations. Kahneman’s characterisation of cognition aligns with the statistical and symbolic learning models that seek to simulate human thinking processes [16]. Systems that combine both kinds of models are known as neuro-symbolic systems [17]. There is growing interest in emerging hybrid approaches that aim to integrate both sets of cognitive capabilities. Specifically, they strive to combine the power of neural networks, such as LLMs, with the interpretability offered by symbolic processing, particularly semantic reasoning over KGs.

2.1 Knowledge Graphs

Google introduced its KG in 2012, when it enabled “Knowledge Panels” containing descriptions, including pictures, for search items. For example, if one types “London” into Google Search, the Knowledge Panel displays pictures, the current weather, a map, directions, elevation, and related entities (e.g., Paris). The seed for the Google KG was Freebase, a community knowledge base launched in 2007. An add-on RDF service for Freebase was launched at the International Semantic Web Conference in 2008. In 2010, Google bought Metaweb, the company that owned Freebase, and extended the knowledge base into the Google KG (https://en.wikipedia.org/wiki/Schema.org).

In 2011, Bing, Google, and Yahoo! launched Schema.org, a reference website for common data schemas related to web search engines. The idea was that website owners would use the published schemas alongside Semantic Web standards such as RDFa and JSON-LD. Some of these schemas, such as Organization, influence the results returned by Google KG search. Schema.org is an example of a shared vocabulary for semantic representation. Using such vocabularies or ontologies in KGs, and being able to map between equivalent schemas, enables the integration of heterogeneous data at scale.
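As an illustration of how a website owner might publish Schema.org data, the sketch below builds a minimal JSON-LD description of an organisation. It then wraps the description in the `<script type="application/ld+json">` element that is commonly used to embed JSON-LD in HTML pages. The organisation name and URL are hypothetical. Only the `@context`/`@type` JSON-LD keywords and the schema.org `Organization` type with its `name` and `url` properties are assumed.

```python
import json

# Hypothetical organisation. "@context" and "@type" are JSON-LD keywords;
# "name" and "url" are schema.org properties. Note the US spelling "Organization".
org = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Health Clinic",
    "url": "https://clinic.example.org",
}

# The serialised JSON-LD is typically embedded in the page inside a script element.
markup = json.dumps(org, indent=2)
snippet = f'<script type="application/ld+json">\n{markup}\n</script>'
print(snippet)
```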

Today, KGs are used in a wide range of areas and products outside of search. For example, Netflix, Amazon, and Facebook all use KGs as the foundation for their recommendation engines for television programmes and films, consumer products, and posts (https://builtin.com/data-science/knowledge-graph). In the healthcare sector, KGs are used to integrate medical knowledge and support drug discovery (https://www.wisecube.ai/blog/20-real-world-industrial-applications-of-knowledge-graphs/).

2.2 Large Language Models

A Large Language Model (LLM) is a specialised machine learning model built on the transformer architecture, a type of deep neural network [120]. LLMs are primarily trained to predict the next word (more precisely, the next token) in a sequence. This makes them flexible tools for various text-processing tasks, such as text generation, summarisation, translation, and text completion. Examples of existing LLMs include OpenAI’s ChatGPT [93] and Google’s PaLM [24]. These models have demonstrated high performance in tasks such as code generation, text generation, tool manipulation, and comprehension across diverse domains. They often achieve high-quality results in zero-shot and few-shot settings. This success has stimulated advances in LLM architectures, training techniques, prompt engineering, and question answering [72].
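The next-word prediction objective can be illustrated with a deliberately tiny stand-in. The sketch below is a bigram count model, not a transformer. It estimates which word most often follows a given word in a toy corpus and returns that continuation. An LLM learns a far richer conditional distribution over tokens from long contexts, but the shape of the prediction task is the same. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional

def train_bigram(corpus: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent successor of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

corpus = ("the patient reported fatigue . the patient reported headache . "
          "the doctor reported fatigue")
model = train_bigram(corpus)
print(predict_next(model, "reported"))  # fatigue
```

A word absent from the training corpus yields no prediction at all. This is a crude analogue of the difficulty that statistical models have with knowledge they have never seen.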

Despite their undeniable ability to emulate human-like conversation, there is an ongoing debate about the intelligence exhibited by LLMs. This is particularly because fluency in language does not necessarily imply a cognitive understanding of real-world problems [72]. Additionally, LLMs can only learn knowledge that appears in their training data and may perform poorly when answering questions involving long-tail facts [30]. Moreover, they may struggle to absorb new knowledge and are not easy to audit [73]. This entails potential risks of discrimination and information hazards.

2.3 Neuro-symbolic AI

LLMs, and machine learning models in general, are trained on extensive datasets, which yields high-quality results when they are applied to specific prediction tasks. However, LLMs such as OpenAI’s ChatGPT [93] lack causal understanding and may hallucinate in cases that are not statistical in nature (e.g., memories or explanations) [40]. Symbolic AI systems, on the other hand, can emulate the human-like conscious processes required for causal, logical, and counterfactual reasoning, and they can maintain long-term memory. Symbolic systems can therefore empower LLMs by modelling human learning. They can also supply knowledge (e.g., extracted from KGs) with which to formulate prompts that allow for more fluent communication with users.
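One simple way for a KG to inform prompts, in the spirit of the paragraph above, is to retrieve the facts about the entities mentioned in a question. Those facts are then placed in the prompt as explicit context. The sketch below assumes a hypothetical triple list and hypothetical `facts_about`/`build_prompt` helpers. It does not call any particular LLM API, and it reduces entity linking to exact string matching.

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]

# Hypothetical facts; in practice these would come from a curated medical KG.
KG: List[Triple] = [
    ("Metformin", "treats", "Type 2 diabetes"),
    ("Metformin", "hasSideEffect", "Nausea"),
    ("Ibuprofen", "treats", "Headache"),
]

def facts_about(entity: str, kg: List[Triple]) -> List[Triple]:
    """Naive retrieval: every triple whose subject or object is the entity."""
    return [t for t in kg if entity in (t[0], t[2])]

def build_prompt(question: str, entities: List[str], kg: List[Triple]) -> str:
    """Prepend retrieved KG facts so that the model can ground (and cite) its answer."""
    lines = [f"- {s} {p} {o}" for e in entities for (s, p, o) in facts_about(e, kg)]
    return (
        "Answer using only the facts below and cite the ones you use.\n"
        "Facts:\n" + "\n".join(lines) + f"\nQuestion: {question}"
    )

prompt = build_prompt("What are the side effects of Metformin?", ["Metformin"], KG)
print(prompt)
```

Because the facts in the prompt are explicit triples, any answer that cites them can be traced back to specific KG statements. Text generated from the model’s parameters alone offers no such trace.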

Neuro-symbolic AI provides the basis for integrating the discrete approaches implemented by symbolic AI with the high-dimensional vector spaces managed by LLMs. Neuro-symbolic systems must decide when and how to combine the two. One option is a principled integration, which combines neural and symbolic components while maintaining a clear separation between their roles and representations. Another is a tighter integration, e.g., a symbolic reasoner integrated into the tuning process of an LLM. Recently, van Bekkum et al. [109] proposed 17 fundamental design patterns for modelling neuro-symbolic systems. These patterns cover many scenarios in which the symbiotic relationship between symbolic reasoning and ML models becomes apparent. Such combinations can enable symbolic reasoning and enhance contextual knowledge. As a result, neuro-symbolic systems may support explainability and improve transparency by showing how a system works, based on the symbolic explanations derived by the hybrid system.

3 KG-based AI that Benefits Individuals and Society

Considering our vision that KG-based AI can facilitate self-determination, we start by discussing the pertinent role played by trust, accountability, and autonomy when it comes to ensuring that AI benefits society. In each case, we highlight existing challenges and present arguments in favour of a KG-based AI system.

3.1 Trust and KG-based AI

One of the primary objectives of the proposed EU AI Act is the “development of an ecosystem of trust by proposing a legal framework for trustworthy AI”. The Merriam-Webster dictionary definition of trust includes a “firm belief in the reliability, truth, or ability of someone or something” [70]. In this paper, we address how KG-based AI systems can demonstrate reliability, truth, and ability through mechanisms that add transparency to all elements involved in KG reasoning. These mechanisms include:
- comprehensive provenance tracking of the data sources and data elements used for any output;
- repeatability of all KG-based AI reasoning, e.g., when datasets are altered or disappear altogether, or when other reasoning methods, such as LLMs, are involved;
- mitigation mechanisms for when KG-based AI system responses are untruthful.
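The first two mechanisms can be illustrated with a small sketch that assumes quads of the form (subject, predicate, object, source). Every answer is returned together with the sources that support it. The dataset is also fingerprinted with a SHA-256 digest over its sorted contents, so that a later re-run can detect whether the underlying data has changed. The function names and data are hypothetical. Production systems would typically rely on named graphs and a provenance vocabulary such as W3C PROV-O.

```python
import hashlib
from typing import Dict, List, Set, Tuple

Quad = Tuple[str, str, str, str]  # (subject, predicate, object, source)

def answer_with_provenance(data: List[Quad], s: str, p: str) -> List[Tuple[str, Set[str]]]:
    """Return every object for (s, p), each paired with the sources asserting it."""
    support: Dict[str, Set[str]] = {}
    for subj, pred, obj, src in data:
        if subj == s and pred == p:
            support.setdefault(obj, set()).add(src)
    return sorted(support.items())

def fingerprint(data: List[Quad]) -> str:
    """Order-independent SHA-256 digest of the dataset, to be stored with each result."""
    h = hashlib.sha256()
    for quad in sorted(data):
        h.update("\t".join(quad).encode("utf-8") + b"\n")
    return h.hexdigest()

# Hypothetical drug-interaction assertions from two hypothetical sources.
data: List[Quad] = [
    ("ex:DrugA", "ex:interactsWith", "ex:DrugB", "source:A"),
    ("ex:DrugA", "ex:interactsWith", "ex:DrugB", "source:B"),
    ("ex:DrugA", "ex:interactsWith", "ex:DrugC", "source:A"),
]
result = answer_with_provenance(data, "ex:DrugA", "ex:interactsWith")
recorded = fingerprint(data)

# A later re-run against altered data (here, one assertion has disappeared)
# is detected by comparing digests before the old result is trusted.
rerun_digest = fingerprint(data[:2])
print(result)
print(rerun_digest == recorded)  # False
```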

Misinformation on the internet has proliferated in recent years, coinciding with advances in generative AI technologies. As AI becomes more sophisticated, it has inadvertently provided tools and techniques for creating and disseminating false information, leading to widespread confusion and societal harm [25, 121].
- AI-generated deepfake videos have become a concerning source of misinformation. Deepfakes use AI algorithms to manipulate and superimpose faces onto existing videos, making it difficult to distinguish real from fabricated content [113]. This technology has been used to create fake videos of public figures saying or doing things they never actually did, leading to potential defamation and manipulation of public opinion.
- AI-powered chatbots and automated accounts on social media platforms have been employed to spread false information and manipulate public sentiment. These bots can mimic human-like conversations and flood social media platforms with fake news, propaganda, and divisive narratives, influencing public opinion and sowing discord. They have even contributed to misinformation in the medical literature [65].
- AI-powered recommendation algorithms used by social media and video-sharing platforms can inadvertently contribute to the spread of misinformation. These algorithms aim to maximise user engagement by suggesting content based on user preferences and behaviour. They can create filter bubbles that reinforce users’ existing beliefs and expose them to a limited range of perspectives. This can amplify false information and prevent users from accessing accurate and diverse sources of information [84].

Amid these challenges, KG technologies have emerged as a potential means of curbing misinformation and enhancing trust. By leveraging crowd-supplied and verified knowledge sources, such as Wikidata [111], KGs can support fact-checking. By integrating diverse and reliable information from various trusted sources, these graphs can potentially identify and flag misleading or inaccurate content more effectively. KG technologies also draw on the collective intelligence of a crowd, empowering users to contribute to the verification process and enhancing the accuracy and credibility of the information presented. Through such collaborative efforts, it may be possible to combat the rising tide of misinformation, safeguard the integrity of online information, and foster a more informed digital society. Coupled with distributed ledgers, KG-based AI may also help combat misinformation on the web [95]. A growing body of work in this space already shows some promise:
- Mayank et al. [68] and Koloski et al. [57] describe systems that leverage KGs to detect fake news;
- Kou et al. [59] and Shang et al. [96] describe how crowd-sourced KGs can be used to mitigate COVID-19 misinformation;
- Kazenoff et al. [50] use semantic graph analysis to detect cryptocurrency scams propagating on social media.
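The following is a minimal sketch of KG-based claim checking. It assumes that claims have already been extracted as triples and that the predicates involved are functional, meaning each subject has at most one correct value (e.g., a country has one capital). Under that assumption there are three outcomes:
- a claim is supported if the reference KG contains it;
- it is contradicted if the KG holds a different value for the same subject and predicate;
- otherwise it is unverifiable.

The reference data and the `FUNCTIONAL` set are hypothetical. Real fact-checkers must also handle entity linking, non-functional predicates, and incomplete KGs, where absence of evidence is not evidence of falsity.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]

# Hypothetical reference KG, e.g. a curated extract from a crowd-sourced KG.
REFERENCE: Set[Triple] = {
    ("ex:France", "ex:capital", "ex:Paris"),
    ("ex:Australia", "ex:capital", "ex:Canberra"),
}
# Assumption: these predicates admit a single value per subject.
FUNCTIONAL = {"ex:capital"}

def check_claim(claim: Triple, kg: Set[Triple]) -> str:
    s, p, o = claim
    if claim in kg:
        return "supported"
    if p in FUNCTIONAL and any(ks == s and kp == p and ko != o for ks, kp, ko in kg):
        return "contradicted"
    return "unverifiable"  # the KG is silent: absence of evidence is not refutation

print(check_claim(("ex:Australia", "ex:capital", "ex:Sydney"), REFERENCE))  # contradicted
```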

3.2 Accountability and KG-based AI

According to the proposed EU AI Act, when it comes to high-risk AI, “accuracy, reliability and transparency are particularly important to avoid adverse impacts, retain public trust and ensure accountability and effective redress”. Accountability in a KG-based AI context assumes that data scientists, computer scientists, and software engineers will follow best practices and ensure compliance with relevant legislation. In the purely symbolic world, such properties can be achieved via consistency and compliance checking based on formal requirements specified in policy languages such as LegalRuleML [4] and ODRL [46]. In the sub-symbolic world, these properties are particularly challenging to achieve, as ML algorithms are often opaque and could potentially infer confidential information during training. In recent years, various Explainable AI (XAI) techniques have been developed so that models can be interpreted and understood by various stakeholders [56]. Some are used to build interpretable models, while others are applied to model outputs. In the context of KG-based AI, this will require combining two strands of explainability: explaining why a statement is in the KG that supports the AI, and explaining how the model used statements from the KG to reach a particular decision. KGs can also be used to support the modelling, capture, and auditing of records useful for accountability throughout the system life cycle [75].

When it comes to AI and accountability, technical research should go hand in hand with the interdisciplinary research conducted in communities such as FAccT (https://facctconference.org/index.html). A recent paper [26] revisited the four barriers to the accountability of computerised systems that were identified in the 1990s. Re-examining them in light of the rise of AI, it found that they are even more important than before. The main barrier is the problem of many hands: the large number of actors involved in building an AI service makes it difficult to assign responsibility in case of harm. Advancing efficient provenance collection and verifiability will be the key technical intervention for overcoming this barrier. Fields such as data science require strong provenance guarantees to build context-aware KGs [94]. As with explainability, we consider two approaches that need to be combined: the provenance of statements in the KG and the provenance of the pipeline that was followed to construct the ML model.
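The following sketch combines both kinds of provenance in one graph, using terms from the W3C PROV-O vocabulary (`prov:used`, `prov:wasGeneratedBy`, `prov:wasDerivedFrom`, `prov:wasAssociatedWith`). It walks backwards from a model to every agent that contributed to it. This is the information needed when assigning responsibility among many hands. The entities, activities, and agents are hypothetical. The traversal is a plain breadth-first search rather than, say, a SPARQL property-path query over a PROV store.

```python
from collections import deque
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]

prov: List[Triple] = [
    # Statement-level provenance: where a KG statement came from.
    ("ex:kgStatement42", "prov:wasDerivedFrom", "ex:hospitalExport"),
    ("ex:hospitalExport", "prov:wasGeneratedBy", "ex:exportRun"),
    ("ex:exportRun", "prov:wasAssociatedWith", "ex:HospitalIT"),
    # Pipeline provenance: how the ML model was built from that statement.
    ("ex:trainingSet", "prov:wasGeneratedBy", "ex:featureExtraction"),
    ("ex:featureExtraction", "prov:used", "ex:kgStatement42"),
    ("ex:featureExtraction", "prov:wasAssociatedWith", "ex:DataEngineer"),
    ("ex:model", "prov:wasGeneratedBy", "ex:training"),
    ("ex:training", "prov:used", "ex:trainingSet"),
    ("ex:training", "prov:wasAssociatedWith", "ex:MLVendor"),
]

UPSTREAM = {"prov:used", "prov:wasGeneratedBy", "prov:wasDerivedFrom"}

def responsible_agents(start: str, graph: List[Triple]) -> Set[str]:
    """Breadth-first walk upstream from `start`, collecting associated agents."""
    agents: Set[str] = set()
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for s, p, o in graph:
            if s != node:
                continue
            if p == "prov:wasAssociatedWith":
                agents.add(o)
            elif p in UPSTREAM and o not in seen:
                seen.add(o)
                queue.append(o)
    return agents

print(sorted(responsible_agents("ex:model", prov)))
```

Because statement provenance and pipeline provenance share one graph, a single traversal reaches both the data supplier (`ex:HospitalIT`) and the pipeline actors.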

3.3 Autonomy and KG-based AI

Alongside accountability and trust, the third pillar needed to support self-determination is autonomy. From the perspective of self-determination theory (https://en.wikipedia.org/wiki/Self-determination_theory), autonomy is defined as “the belief that one can choose their own behaviours and actions”. In the current context, we take this to mean that individuals should be able to make their own decisions about their use of KG-based AI and about its use of their data (and have their wishes respected). Assuming that AI systems can be made trustable and accountable, how can we best support autonomy in this way? That is to say, if we know that an AI will behave in a desired and known way and that its decisions and processes are transparent and traceable, how can we express and enable control over what it does with regard to an individual? A number of approaches have emerged in recent years with two goals: to facilitate individuals’ data sovereignty, and to give them control over how they represent and express their identity online.

The concept of a PKG, introduced in our illustrative scenario (Figure 2), is one means of facilitating autonomy. Solid pods [91, 67], for example, are secure decentralised data stores that applications which generate and consume Linked Data can access through standard semantic interfaces. Currently, the default model on the Web is for service providers to host user data and control access to it by means of user accounts. This denies autonomy to the individuals concerned, since all access is mediated via applications and interfaces designed and controlled by service providers. In the PKG model, by contrast, personal data is independent of any application. PKGs are the primary source of data, remain under the control of individuals, and mediate service access via standard interfaces. Besides shifting control away from service providers, this approach makes data usage policies technically simpler to implement, as the policies can be stored with the data and evaluated at the PKG level.
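The sketch below illustrates why co-locating policies with data simplifies enforcement. It models a PKG that stores, next to each data category, the purposes its owner permits. The PKG answers a request only if the requester’s declared purpose is allowed. The `PersonalKG` class, the category names, and the purpose labels are hypothetical. They do not reflect the Solid Protocol or any specific access-control vocabulary. A real deployment would also need to authenticate requesters and verify their declared purposes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]

@dataclass
class PersonalKG:
    """Hypothetical PKG: data grouped by category, with a usage policy per category."""
    data: Dict[str, List[Triple]] = field(default_factory=dict)
    allowed_purposes: Dict[str, Set[str]] = field(default_factory=dict)

    def add(self, category: str, triple: Triple) -> None:
        self.data.setdefault(category, []).append(triple)

    def permit(self, category: str, purpose: str) -> None:
        self.allowed_purposes.setdefault(category, set()).add(purpose)

    def request(self, requester: str, category: str, purpose: str) -> List[Triple]:
        """Evaluate the owner's policy at the PKG itself before releasing any data."""
        if purpose not in self.allowed_purposes.get(category, set()):
            raise PermissionError(
                f"{requester}: purpose '{purpose}' not permitted for '{category}'")
        return list(self.data.get(category, []))

pkg = PersonalKG()
pkg.add("symptoms", ("ex:me", "ex:reports", "ex:Insomnia"))
pkg.permit("symptoms", "treatment")

therapy_bot_view = pkg.request("ex:TherapyBot", "symptoms", "treatment")
print(therapy_bot_view)
```

A request such as `pkg.request("ex:AdBroker", "symptoms", "marketing")` raises `PermissionError`, because the owner never permitted that purpose. No service provider’s code is involved in that decision.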

One prominent way of achieving the second goal, control over one’s online identity, is Self-Sovereign Identity (SSI) [27]. Traditional digital identity (e.g., as in OpenID Authentication [41]) has been modelled in terms of Identity Providers (IdPs). An individual and an IdP establish a relationship, and the IdP generates a digital identity for the individual. If the individual wants to authenticate with a third party, the IdP confirms the relationship and asserts that identity to the relying party. Crucially, sovereignty over that identity rests with the IdP. The IdP decides who can see the identity and the data associated with it, and whether it continues to exist. With SSI, individuals generate their own digital identity (e.g., a cryptographic key pair) and make their own identity assertions, and therefore have full control over that identity. Correlations between two identities (digital or physical) rely explicitly on attestations by others and on trust relationships with them. This is ultimately also the case in traditional digital identity, where trust in a small number of well-known IdPs serves as a simplified proxy for more detailed or fine-grained considerations of trust networks. The autonomy enabled by SSI makes selective disclosure possible. Decisions about which identity information is shared with whom can then be made contextually, on a case-by-case basis, much like presenting different aspects of one’s personal identity in daily life (e.g., work and home personas).
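Selective disclosure is often implemented with salted hash commitments. The sketch below is loosely modelled on the IETF SD-JWT approach, but it is not wire-compatible with it:
- each claim is hashed together with a random salt;
- only the list of digests is attested (signed) once by the credential issuer;
- the holder later reveals only the claims, with their salts, that a particular verifier needs.

The sketch shows only the hashing and verification steps and omits the signature. All function names and claim values are hypothetical.

```python
import hashlib
import json
import secrets
from typing import Dict, List, Tuple

Disclosure = Tuple[str, str, str]  # (salt, claim name, claim value)

def digest(d: Disclosure) -> str:
    """Hash a salted claim; the salt stops verifiers from guessing undisclosed values."""
    return hashlib.sha256(json.dumps(list(d)).encode("utf-8")).hexdigest()

def commit(claims: Dict[str, str]) -> Tuple[List[str], Dict[str, Disclosure]]:
    """Salt every claim; in a real system only the digest list would be signed."""
    disclosures = {k: (secrets.token_hex(16), k, v) for k, v in claims.items()}
    return sorted(digest(d) for d in disclosures.values()), disclosures

def verify(attested: List[str], revealed: List[Disclosure]) -> Dict[str, str]:
    """Verifier side: accept a revealed claim only if its digest was attested."""
    accepted: Dict[str, str] = {}
    for d in revealed:
        if digest(d) not in attested:
            raise ValueError(f"claim '{d[1]}' was not attested")
        accepted[d[1]] = d[2]
    return accepted

digests, disclosures = commit({"name": "Alex Doe", "over_18": "true", "diagnosis": "asthma"})
# Prove age to a pharmacy without revealing the name or the diagnosis.
seen_by_verifier = verify(digests, [disclosures["over_18"]])
print(seen_by_verifier)  # {'over_18': 'true'}
```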

Considerations of identity pervade the technical measures for safeguarding self-determination. It seems uncontroversial that, in some scenarios, an individual’s identity will be relevant to what they wish to do with a KG-based AI, whether in training, KG contents, or inference. Indeed, even where anonymity is desired, identity must be considered in order to avoid revealing it. Identity is also fundamental to trust. Trust in a person, organisation, system, AI model, KG, etc., is useful only insofar as the relevant entities can be identified when needed, and accountability cannot be tracked or apportioned without identity. We consider autonomy in terms of the identity, data, and sovereignty afforded to an individual or organisation with respect to three aspects:
- what they or others communicate to a KG-based AI ecosystem (or elements thereof);
- what they or others receive from it;
- what happens to that information as it is processed in the ecosystem (including whether their choices are respected).

Each of these aspects is evaluated through the lenses of selective disclosure, relevant identities, and utility.

4 A KG Toolbox for Trust, Accountability, and Autonomy

In order to ground our pillars, we motivate and introduce relevant literature and highlight open research challenges and opportunities concerning our foundational topics: machine-readable norms and policies, decentralised infrastructure, decentralised KG management, and explainable neuro-symbolic AI, each of which plays a pivotal role in facilitating trust, accountability, and autonomy in KG-based AI.

4.1 Machine-readable Norms and Policies

In KG-based AI, norms and policies could be used to inform data processing based on legal requirements, social norms, privacy preferences, and licensing. Legal documents are written in natural language for human consumption. To enable machines and automated agents to evaluate and enforce the agreements embodied in these documents, we need to translate them into formats that machines can read and process efficiently.

4.1.1 Norm and Policy Encoding

Languages for expressing policies, including but not limited to data access policies, can be categorised as either general or specific. In general languages, the syntax caters to a diverse range of functional requirements (e.g., access control, query answering, service discovery, negotiation), whereas specific languages focus on a single functional requirement. In the early days of the Semantic Web, general policy languages that leverage semantic technologies (e.g., KAoS [108], Rei [48], AIR [51], and Protune [15]) were an active area of research. However, despite the considerable potential of these general-purpose languages, none of them has achieved mainstream adoption to date [55]. More recently, researchers have proposed ontologies that can be used to represent licences, privacy preferences, and regulatory obligations [53]. In the legal domain specifically, Semantic Web researchers have proposed cross-domain ontologies for encoding legal text in a machine-readable format. These use LegalRuleML (https://docs.oasis-open.org/legalruleml/legalruleml-core-spec/v1.0/os/legalruleml-core-spec-v1.0-os.html) and adaptations thereof (e.g., [3, 80]). Other work facilitates legal document indexing and search (e.g., [78, 22]) using two identifiers: the European Law Identifier (ELI) (https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52012XG1026(01)) and the European Case Law Identifier (ECLI) (https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52011XG0429(01)). Still other work bridges the gap between EU and member state legal terminology (e.g., [1, 14]). Besides these cross-domain activities, there have also been various domain-specific initiatives. For instance, the ELI ontology has been extended to facilitate the encoding of the text of the General Data Protection Regulation (GDPR) (https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32016R0679&qid=1681238509224) (e.g., [83]).
At the same time, others have focused specifically on modelling privacy policies (e.g. [79, 82]). The Open Digital Rights Language (ODRL)161616https://www.w3.org/TR/odrl-model/, which is a W3C recommendation, has gained a lot of traction in recent years in terms of intellectual property rights management (e.g. [42, 74]). Additionally, the ODRL model and vocabularies have been extended in order to model contracts [39], personal data processing consent [32], and data protection regulatory requirements [110]. There has also been some work on automatically extracting rights and conditions from textual documents (e.g. [21, 20]) or extracting important information from legal cases (e.g., [116, 77]). Although many of the proposed approaches are based on existing standards, there is a lot of overhead involved for systems that need to consider different types of policies that are encoded using different languages. General-purpose policy languages are particularly attractive in such scenarios as they lessen the administrative burden. However, considering the potential complexity of such a language, there is a need for policy profiles with well-defined semantics and complexity classes.

4.1.2 Norm and Policy Governance

From a policy governance perspective, LegalRuleML researchers have proposed automated compliance approaches based on auditing (e.g. [28, 82]) and business processes (e.g. [81, 9]). For example, [37] shows how LegalRuleML, together with semantic technologies, can be used for business process regulatory compliance checking based on a rule-based logic that combines defeasible and deontic logic. One of the advantages of description logic-based approaches for consistency and compliance checking is that they can leverage generic reasoners such as Pellet (https://github.com/stardog-union/pellet) (e.g. [33]). Although there are presently no ODRL-specific reasoning engines, researchers have demonstrated how ODRL can be translated into rules that can be processed by Answer Set Programming (ASP) [8] solvers such as Clingo [35] (e.g. [42, 110]). Additionally, several custom applications have been designed to support ODRL enforcement or compliance checking, such as a licence-based search engine [74]; generalised contract schema and role-based access control enforcement [39]; and access request matching and authorisation [32]. Despite these efforts, ensuring that AI and processing algorithms adhere to the policies remains challenging. Adherence could potentially be enforced before or during processing using Trusted Execution Environments (TEEs) [10], or checked after execution by detecting data misuse via automated compliance checking over system logs [54]. The combination of ex-ante and ex-post compliance checking is particularly appealing for supporting risk-based conformance checking such as that envisaged in the proposed EU AI Act. Nevertheless, the practicality, performance, and scalability of these proposals remain to be determined. 
In order to further support self-determination, data owners and processors should be able to engage in on-demand negotiation over policies, assisted by technology that ensures a safe and fair space and helps assess the compliance of negotiated terms with existing regulations. Negotiation between automated agents has been a topic of interest since the early 2000s, but in the context of self-determination, we must strike the right balance between representation by artificial agents and human involvement [5, 6].
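To make the ex-post option more concrete, the following is a minimal sketch of compliance checking over system logs, in the spirit of [54] but not its actual method. The rule tuples, actor and target names, the wildcard `"*"`, and the choices "prohibitions override permissions" and "deny by default" are all illustrative assumptions rather than ODRL's formal semantics.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class LogEntry:
    actor: str
    action: str
    target: str
    purpose: str


# Hypothetical rule format: (actor, action, target, purpose); "*" matches anything.
Rule = Tuple[str, str, str, str]


def matches(rule: Rule, entry: LogEntry) -> bool:
    observed = (entry.actor, entry.action, entry.target, entry.purpose)
    return all(r == "*" or r == o for r, o in zip(rule, observed))


def check_log(log: Iterable[LogEntry], permissions: List[Rule],
              prohibitions: List[Rule]) -> List[Tuple[LogEntry, str]]:
    """Return (entry, reason) for every logged event that violates the policy.

    Prohibitions take precedence over permissions, and anything not
    explicitly permitted is reported (deny-by-default)."""
    violations = []
    for entry in log:
        if any(matches(p, entry) for p in prohibitions):
            violations.append((entry, "prohibited"))
        elif not any(matches(p, entry) for p in permissions):
            violations.append((entry, "not permitted"))
    return violations


permissions: List[Rule] = [
    ("diabetesCommunity", "read", "bloodSugar", "*"),
    ("researchInstitute", "read", "aggregates", "research"),
]
prohibitions: List[Rule] = [("*", "*", "bloodSugar", "marketing")]
log = [
    LogEntry("diabetesCommunity", "read", "bloodSugar", "peerSupport"),
    LogEntry("diabetesCommunity", "read", "bloodSugar", "marketing"),
    LogEntry("pharmaCo", "read", "bloodSugar", "clinicalStudy"),
]
for entry, reason in check_log(log, permissions, prohibitions):
    print(f"{entry.actor} {entry.action} {entry.target} for {entry.purpose}: {reason}")
```

Such a checker only detects misuse after the fact; it complements, rather than replaces, ex-ante enforcement such as TEEs.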

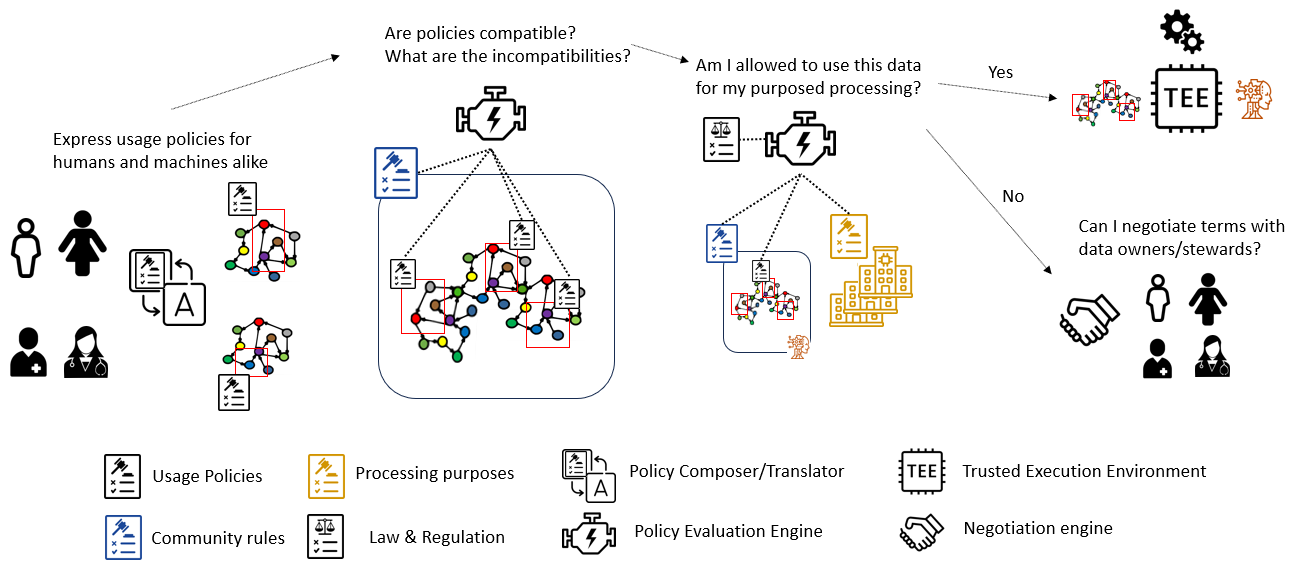

4.1.3 Grounding based on our Illustrative Scenario

Figure 3 illustrates how machine-readable policies and norms can be used to support self-determination. In our illustrative scenario (Figure 2), individuals may want to establish policies that precisely define which subsets of their PKGs are shared with which communities and what onward forwarding they allow. For example: share my blood sugar values measured by my connected device and the output of my AI healthcare assistant with the diabetes community; share and forward only anonymised aggregates to medical research institutions; or contact me for negotiation if the pharma company is interested in using my data for clinical studies. Communities may do the same, e.g. requiring specific data to be shared in order to join the community, but also requiring agreements to ensure that participants abide by the social and behavioural norms needed for self-regulation. Public and private organisations may need to adhere not only to privacy preferences and licences but also to various general regulations, e.g. the GDPR, the proposed AI Act in the EU, or the Health Insurance Portability and Accountability Act (HIPAA, https://www.hhs.gov/hipaa/index.html) in the US, as well as domain-specific regulations (e.g. on advanced therapy medicinal products, https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A32007R1394, and on rare diseases, https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A32009H0703%2802%29).
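The three example preferences above could be encoded roughly as follows. This is a minimal sketch, assuming an ODRL-style JSON-LD policy: `permission`, `assignee`, `target`, `action`, `duty` and the actions `read`, `distribute`, `use`, `anonymize`, `aggregate` and `obtainConsent` come from the ODRL model and common vocabulary, whereas the `ex:` identifiers are hypothetical, and mapping an unfulfilled `obtainConsent` duty to a "negotiate" outcome is our own illustrative interpretation. The evaluator ignores ODRL's action hierarchy and constraints.

```python
import json
from typing import Any, Dict, FrozenSet, List

# ODRL-style policy for one individual; "ex:" IRIs are hypothetical placeholders.
policy: Dict[str, Any] = {
    "@context": ["http://www.w3.org/ns/odrl.jsonld", {"ex": "https://example.org/"}],
    "@type": "Set",
    "uid": "https://example.org/policy/alice-1",
    "permission": [
        {"assignee": "ex:diabetesCommunity", "target": "ex:bloodSugar", "action": "read"},
        {"assignee": "ex:diabetesCommunity", "target": "ex:assistantOutput", "action": "read"},
        {"assignee": "ex:researchInstitute", "target": "ex:bloodSugar", "action": "distribute",
         "duty": [{"action": "anonymize"}, {"action": "aggregate"}]},
        {"assignee": "ex:pharmaCompany", "target": "ex:bloodSugar", "action": "use",
         "duty": [{"action": "obtainConsent"}]},
    ],
}


def _matching(rules: List[Dict[str, Any]], assignee: str, target: str, action: str):
    return [r for r in rules
            if (r["assignee"], r["target"], r["action"]) == (assignee, target, action)]


def decide(policy: Dict[str, Any], assignee: str, target: str, action: str,
           fulfilled_duties: FrozenSet[str] = frozenset()) -> str:
    """Return "permit", "deny" or "negotiate" for one request (exact matching only)."""
    if _matching(policy.get("prohibition", []), assignee, target, action):
        return "deny"  # prohibitions override permissions in this sketch
    missing_per_rule = []
    for rule in _matching(policy.get("permission", []), assignee, target, action):
        missing = {d["action"] for d in rule.get("duty", [])} - set(fulfilled_duties)
        if not missing:
            return "permit"
        missing_per_rule.append(missing)
    # Assumption: an outstanding obtainConsent duty means "contact me to negotiate".
    if any("obtainConsent" in m for m in missing_per_rule):
        return "negotiate"
    return "deny"


print(json.dumps(policy, indent=2))
print(decide(policy, "ex:pharmaCompany", "ex:bloodSugar", "use"))
```

Encoding the preferences as data rather than code is what allows the same policy to be inspected, exchanged, and checked by different parties.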

4.2 Decentralised Infrastructure

Over the last 15 to 20 years, a number of communities have come to accept that centralised computing systems, despite their many benefits, can lead to issues such as the over-centralisation of power, single points of failure, potential abuse of personal data, and the creation of data silos that can inhibit innovation. A boon of this realisation is that we now have a number of technologies, standards, and approaches to decentralisation that offer benefits in terms of scalability, diversity, and privacy, as well as individually centred flexibility and control, and that form an appealing basis for maintaining and increasing trust, accountability, and autonomy in KG-based AI.

4.2.1 Personal Knowledge Graphs

The concept of a Personal Knowledge Graph (PKG) is that individuals can keep their personal or private data in a space belonging to them, rather than with siloed, centralised service providers that give them limited access and control [7]. A Solid pod (https://solidproject.org/) is an example of a PKG platform; key to the Solid vision is that there should be standard interfaces and authorisation models to grant or deny access to the contents of a PKG at a granular level. It has been argued in particular (https://ruben.verborgh.org/blog/2017/12/20/paradigm-shifts-for-the-decentralized-web/) that this enables a highly decentralised architecture for Web applications. Rather than a provider aggregating data from all users into a single location under its control, where application code then accesses it, individuals permit (or refuse) Web applications of their choice to access whichever subsets of their PKG data they decide. As well as autonomy, this enables greater accountability, since access to the PKG can be filtered via personal machine-readable policies at the source, and activities can be tracked directly (e.g. [29]). Although PKGs offer great potential, they also pose challenges in terms of performance and scalability, as applications will need to interact with multiple distributed data sources rather than a single backend server. However, these challenges may simultaneously present opportunities for scalability trade-offs, such as querying over multiple low-powered data sources rather than a single high-powered central one.
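The following minimal sketch illustrates granular, revocable access to a PKG together with an access log. It is not Solid's API or authorisation model (Solid specifies Web Access Control and Access Control Policy for this); the predicate-level grant model, class name, and identifiers are hypothetical simplifications chosen for readability.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]


@dataclass
class PersonalKG:
    triples: Set[Triple] = field(default_factory=set)
    # Hypothetical grant model: application id -> predicates it may read.
    grants: Dict[str, Set[str]] = field(default_factory=dict)
    # (application id, number of triples returned), kept for accountability.
    access_log: List[Tuple[str, int]] = field(default_factory=list)

    def grant(self, app: str, predicate: str) -> None:
        self.grants.setdefault(app, set()).add(predicate)

    def revoke(self, app: str, predicate: str) -> None:
        self.grants.get(app, set()).discard(predicate)

    def read(self, app: str) -> Set[Triple]:
        """Filter at the source: an app only ever sees the predicates granted to it."""
        allowed = self.grants.get(app, set())
        visible = {t for t in self.triples if t[1] in allowed}
        self.access_log.append((app, len(visible)))
        return visible


pkg = PersonalKG({("ex:me", "ex:bloodSugar", "6.1"),
                  ("ex:me", "ex:genomeVariant", "ex:rs123"),
                  ("ex:me", "ex:dailySteps", "8000")})
pkg.grant("ex:diabetesApp", "ex:bloodSugar")
print(pkg.read("ex:diabetesApp"))   # only the blood sugar triple
pkg.revoke("ex:diabetesApp", "ex:bloodSugar")
print(pkg.read("ex:diabetesApp"))   # set()
print(pkg.access_log)
```

Because filtering happens where the data lives, revoking a grant takes effect for all subsequent reads without relying on the application to delete copies it never received.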

4.2.2 Distributed Ledger Technology

Distributed Ledger Technology (DLT) [104] promotes trust and empowerment through the replication of data across contributing nodes, which are geographically distributed across many sites, and the use of consensus algorithms that enable collective, fair decision-making without central control. Blockchains are a type of distributed ledger in which an ever-growing list of records, grouped into blocks, is chained together with cryptographic hashes, often (although not necessarily) associated with a securely exchangeable token system or “cryptocurrency”. This technology rose to prominence following the release of Bitcoin [76] in 2008, a blockchain-based currency that El Salvador adopted as legal tender in 2021. Ethereum [115], a blockchain platform released in 2015, supports the notion of a “Smart Contract” [19] (a term coined in the 1990s by Nick Szabo [105]), which is a collection of code that executes in a fully decentralised way. Smart Contracts have been used to implement a range of decentralised applications, including Decentralised Autonomous Organisations (DAOs) [64], which are organisations whose decisions are made through blockchain consensus mechanisms. The best-known example of a DAO was “The DAO”, which at one point was worth more than $70M; DAOs have been applied to a number of different activities, including scholarly publishing [43]. Although the immutability and transparency guarantees offered by DLT are very attractive when dealing with personal data, both the ledgers and the smart contracts themselves need to be protected against unauthorised access and usage, and personal data itself should be neither stored in, nor derivable from, immutable DLT records. Smart contracts may also introduce scalability issues: the default Ethereum model involves every contributing node executing every run of a smart contract and thus has inherent scale limitations. Relaxing this model may, however, affect trust.
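The hash-chaining idea behind blockchains can be illustrated in a few lines. This is a minimal sketch of tamper evidence only: it has no replication, consensus, or smart contracts, and the block layout is our own assumption. In line with the point above about personal data, the records hold only digests of documents (e.g. consent receipts) rather than the documents themselves.

```python
import hashlib
import json
from typing import Any, Dict, List

Block = Dict[str, Any]


def block_hash(block: Block) -> str:
    """SHA-256 over a canonical (key-sorted) JSON serialisation of the block."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode("utf-8")).hexdigest()


def append_block(chain: List[Block], records: List[str]) -> None:
    """Link a new block to the hash of its predecessor; the first block uses zeros."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "prev_hash": prev, "records": records})


def verify(chain: List[Block]) -> bool:
    """Editing block i breaks the prev_hash stored in block i + 1.

    The newest block is only protected once its hash is recorded elsewhere
    (e.g. in a later block or by other nodes)."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1])
               for i in range(1, len(chain)))


def digest(document: bytes) -> str:
    # Store a fingerprint of the document, never the (personal) content itself.
    return "sha256:" + hashlib.sha256(document).hexdigest()


chain: List[Block] = []
append_block(chain, [digest(b"consent receipt v1")])
append_block(chain, [digest(b"consent receipt v2")])
append_block(chain, [digest(b"community data-use agreement")])
print(verify(chain))  # True
chain[0]["records"] = [digest(b"forged receipt")]
print(verify(chain))  # False
```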

4.2.3 Self Sovereign Identity

In the Web space, Self-Sovereign Identity (SSI) is being developed through a combination of Decentralised Identifiers (DIDs) [101] and Verifiable Credentials (VCs) [102], W3C standards for identity and verifiable attestation claims, respectively. DLT is one of the ways in which DIDs can be grounded, although, by design, the DID standard is open in terms of method. A DID is a URI (did:<method>:<...>) that can be resolved in a method-specific manner (e.g. HTTP(S) dereferencing, reading from a smart contract, etc.) to obtain a DID document: a Linked Data set containing information about the digital identity in a standard form, for example how to verify it (e.g. a public key), methods for communicating with the entity controlling it, and so on. DIDs enable SSI: the creation and use of DIDs are open and decentralised, and by using different DIDs with different audiences, individuals can make it harder for their information to be tracked or correlated across services and can contextually and selectively disclose personal information as desired. This grants individuals significantly greater autonomy than current practices. However, there is a potential trade-off with trust and accountability whenever others need to rely on information about an individual: the effective anonymity of a unique DID can be used to misrepresent oneself (e.g. to fake a qualification or entitlement) or to impersonate someone else. VCs are a proposed solution to this. The VC data model is designed for sharing data alongside information that a recipient can use to verify its integrity or origin, such as a digital signature or DLT record. For example, if a DID is presented to a service that is restricted to legal adults, the DID owner may also present a VC issued by a government body confirming their adulthood; methods for selective disclosure supported by both the DID and VC standards allow this to be done verifiably without requiring disclosure of real-world identity. 
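The openness of DID methods can be seen in the identifier syntax itself. The sketch below validates a DID against the generic syntax of W3C DID Core (lowercase method name, method-specific identifier of `idchar`s and colons) and then shows one method-specific resolution step: the `did:web` method's mapping from a DID to the HTTPS URL of its DID document. It only computes the URL; fetching and validating the document, and every other DID method, are out of scope for this sketch.

```python
import re
import urllib.parse
from typing import Tuple

# DID Core ABNF: did = "did:" method-name ":" method-specific-id
#   method-name        = 1*(%x61-7A / DIGIT)
#   method-specific-id = *( *idchar ":" ) 1*idchar
#   idchar             = ALPHA / DIGIT / "." / "-" / "_" / pct-encoded
_IDCHAR = r"(?:[A-Za-z0-9._-]|%[0-9A-Fa-f]{2})"
_DID_RE = re.compile(rf"did:([a-z0-9]+):((?:{_IDCHAR}*:)*{_IDCHAR}+)")


def parse_did(did: str) -> Tuple[str, str]:
    """Split a DID into (method, method-specific id), or raise ValueError."""
    m = _DID_RE.fullmatch(did)
    if m is None:
        raise ValueError(f"not a syntactically valid DID: {did!r}")
    return m.group(1), m.group(2)


def did_web_url(did: str) -> str:
    """Compute where a did:web DID document is published (no network access)."""
    method, msid = parse_did(did)
    if method != "web":
        raise ValueError("this sketch only handles the did:web method")
    domain, *path = msid.split(":")
    domain = urllib.parse.unquote(domain)  # "example.com%3A3000" -> "example.com:3000"
    suffix = "/".join(path) if path else ".well-known"
    return f"https://{domain}/{suffix}/did.json"


print(parse_did("did:example:123456789abcdefghi"))
print(did_web_url("did:web:example.com:user:alice"))
```

Other methods (e.g. ledger-based ones) would replace `did_web_url` with their own resolution procedure, which is exactly the method openness described above.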
These technologies are relatively new compared with standard digital identity models, and while they are intended and designed to address issues in those models, they may also introduce new difficulties or expose users to vulnerabilities (e.g. to identity fraud) that differ from those of current standards.
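The adulthood example from the VC discussion above can be illustrated with salted-digest selective disclosure, similar in spirit to the approach used by SD-JWT: the issuer attests only to salted hashes of each claim, and the holder later reveals the salt and value of just the claims they choose. This is a deliberately simplified sketch. In particular, the issuer "signature" is an HMAC with a key the verifier must share, which is NOT how VCs work; real credentials use public-key signatures so that verifiers never hold the issuer's secret. Function names and the credential layout are hypothetical.

```python
import hashlib
import hmac
import json
import secrets
from typing import Any, Dict, List, Tuple

# STAND-IN for an issuer signing key (see caveat above): real VCs use public-key signatures.
ISSUER_KEY = secrets.token_bytes(32)

Disclosure = Tuple[str, Any]  # (salt, claim value)


def claim_digest(salt: str, name: str, value: Any) -> str:
    return hashlib.sha256(json.dumps([salt, name, value]).encode("utf-8")).hexdigest()


def _proof(digests: List[str]) -> str:
    return hmac.new(ISSUER_KEY, json.dumps(digests).encode("utf-8"), hashlib.sha256).hexdigest()


def issue(claims: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Disclosure]]:
    """Issuer: commit to each claim with a fresh salt and attest only to the digests."""
    disclosures = {name: (secrets.token_hex(16), value) for name, value in claims.items()}
    digests = sorted(claim_digest(s, n, v) for n, (s, v) in disclosures.items())
    return {"digests": digests, "proof": _proof(digests)}, disclosures


def present(credential: Dict[str, Any], disclosures: Dict[str, Disclosure],
            reveal: List[str]) -> Tuple[Dict[str, Any], Dict[str, Disclosure]]:
    """Holder: forward the credential plus salts and values of the chosen claims only."""
    return credential, {name: disclosures[name] for name in reveal}


def verify(credential: Dict[str, Any], revealed: Dict[str, Disclosure]) -> Dict[str, Any]:
    """Verifier: check the issuer proof, then match each revealed claim to a digest."""
    if not hmac.compare_digest(_proof(credential["digests"]), credential["proof"]):
        raise ValueError("credential proof does not verify")
    accepted = {}
    for name, (salt, value) in revealed.items():
        if claim_digest(salt, name, value) not in credential["digests"]:
            raise ValueError(f"claim {name!r} was not attested by the issuer")
        accepted[name] = value
    return accepted


credential, disclosures = issue({"name": "Alice Example", "birthDate": "1990-01-01", "over18": True})
print(verify(*present(credential, disclosures, ["over18"])))  # name and birth date stay hidden
```

The random salts prevent a verifier from guessing undisclosed values (such as a birth date) by hashing candidates, which is why they are needed at all.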

4.2.4 Federated Learning

In the context of data-driven AI and decentralised infrastructure, there are also techniques for decentralised machine learning. Federated Learning (FL) [118] is based on the idea that, rather than aggregating training data in one location controlled by a model developer (thereby compromising data subjects' privacy), data holders run learning algorithms locally and privately to compute model weights from their own data, and then send only the weights to the developer to be incorporated into the larger model. An example might be smartphone text prediction personalisation, where a user’s own writing is used to compute predictive weights on the device, and periodic selections of these weights can be aggregated to improve general text prediction models. Refinements of FL include sending not the actual learned model weights but a set of weights with statistically similar properties [112], to further reduce the risk of privacy breaches without affecting model performance. A related approach takes this concept even further with the idea of learning a new embedding within a larger, frozen model, e.g. “Textual Inversion” [34] for personalising large generative image diffusion models. The intuition here is that if someone wants certain personalised types of output from a generative AI model, and the model is sufficiently large, there is a good chance that the desired concept already exists within it. More recently, federation for preserving privacy has been applied specifically to deep learning, in particular in the context of the Internet of Things. [119] proposes an architecture with a control layer that includes a distributed ledger, while [117] proposes advanced cryptographic mechanisms to reduce the risk of privacy leaks, following more general approaches that apply differential privacy, homomorphic encryption, or secure multi-party computation. 
Federation also has the positive side effect of potentially speeding up model training when the privacy constraints allow the process to be usefully distributed [11]. However, opening the process to multiple parties creates a number of attack vectors that do not exist in a centralised approach; protecting against them incurs additional communication and computation overhead [44].
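The basic FL loop described above can be sketched with federated averaging (FedAvg), one common aggregation rule; the cited works may use different schemes. In this minimal sketch, each client fits a one-dimensional linear model on its private data by gradient descent and returns only its weights, and the server averages them weighted by client sample counts. The model, learning rate, epoch and round counts, and toy data are illustrative assumptions; no privacy mechanism (e.g. differential privacy or secure aggregation) is included.

```python
from typing import List, Sequence, Tuple

Weights = List[float]  # [slope, intercept] of a 1-D linear model
Dataset = Sequence[Tuple[float, float]]  # private (x, y) pairs held by one client


def local_update(weights: Weights, data: Dataset, lr: float = 0.05, epochs: int = 20) -> Weights:
    """Full-batch gradient descent on mean squared error, run on the client.

    Only the resulting weights are returned; the raw (x, y) pairs stay local."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return [w, b]


def fed_avg(client_weights: Sequence[Weights], client_sizes: Sequence[int]) -> Weights:
    """Server: average client weights, weighting each client by its sample count."""
    total = sum(client_sizes)
    return [sum(cw[i] * n for cw, n in zip(client_weights, client_sizes)) / total
            for i in range(len(client_weights[0]))]


def train(clients: Sequence[Dataset], rounds: int = 50) -> Weights:
    global_weights: Weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = fed_avg(updates, [len(data) for data in clients])
    return global_weights


# Two clients whose private data follow y = 2x + 1 over different x ranges.
clients = [[(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
           [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]]
w, b = train(clients)
print(round(w, 3), round(b, 3))  # should approach slope 2 and intercept 1
```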

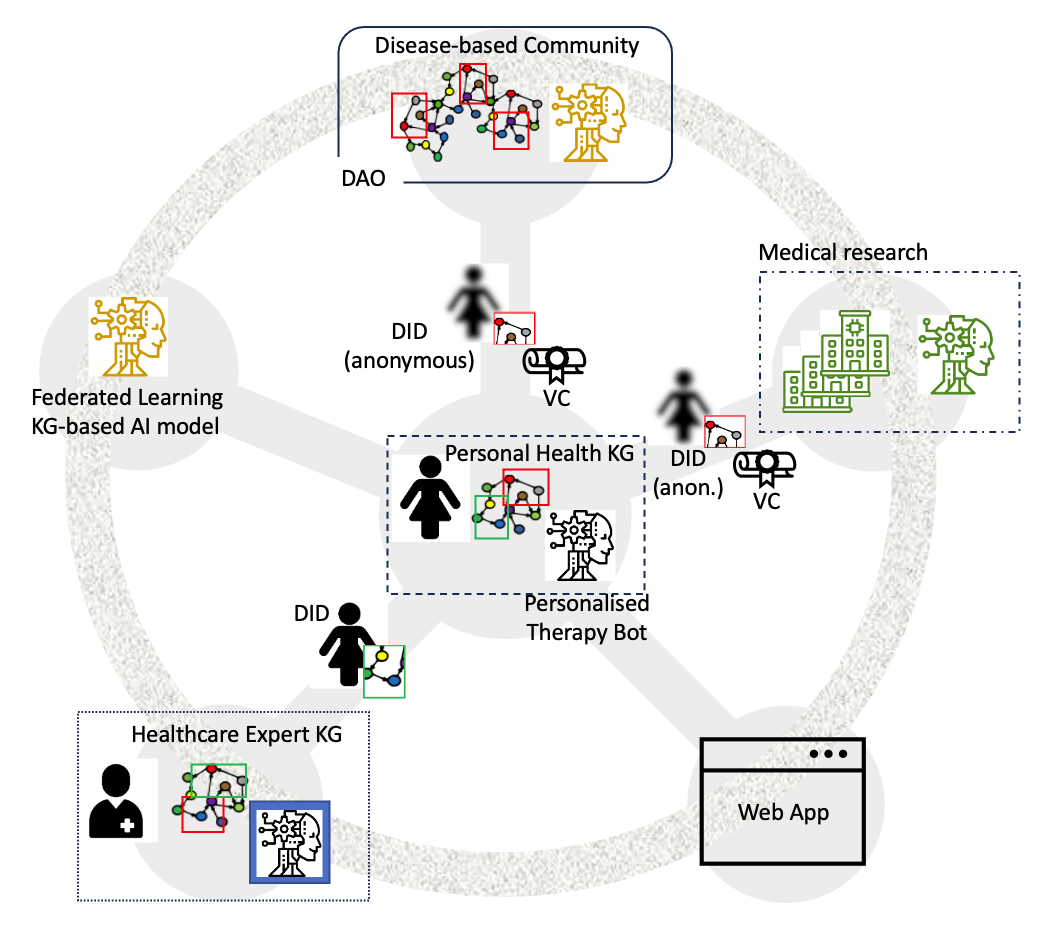

4.2.5 Grounding based on our Illustrative Scenario

A decentralised infrastructure supporting self-determination in our illustrative scenario (Figure 2) is depicted in Figure 4. Health data is highly sensitive and private, and individuals may want or need to interact with multiple services where it is relevant, including KG-based AI systems. It thus makes sense to create a personal health knowledge graph (PKG) that provides a comprehensive and interconnected representation of an individual’s health information, including their medical history, lifestyle choices, genetic data, and real-time health monitoring data from IoT devices. In such a personal health knowledge graph, data from various sources, such as wearable devices, mobile applications, electronic health records, and even genomic sequencing, can be linked together to form a holistic view of an individual’s health. An early example of a PKG is presented in [106], where medical, lifestyle, and IoT health monitoring data in a PKG was integrated into a patient-focused decision support system built around a public, medically curated KG representing cardiovascular risk factors, giving individuals the autonomy to gain deeper insights into their own health patterns and risks, identify correlations, and make more informed decisions.

More recently, BlockIoT [97, 98] aims to integrate health data seamlessly into a decentralised PKG using blockchain and KG technologies, addressing the trust aspect and using PKG-driven smart contracts to trigger personalised recommendations for lifestyle modifications, medication adjustments, or even timely interventions by healthcare providers. Furthermore, the PKG can serve as a powerful tool for healthcare beyond the individual. Communities of patients, providers, researchers, etc., or combinations thereof, can share knowledge about various aspects of, e.g., particular conditions, whether clinical evidence and best practice, peer advice and support on living with a condition, or data on novel or rare symptoms and side effects, and this knowledge can be used for support, care, or medical research across populations. De-identified and aggregated data from multiple individual KGs can be collected in community KGs, with trust securely established using DIDs and VCs, and accessed by community, practitioner, researcher, and service provider stakeholders, enabling decentralised large-scale analysis and the identification of broader health trends from multiple perspectives and intersecting factors. This can lead to collaborative advancements in disease prevention, treatment protocols, and personalised medicine [99]. KG-based AI systems can be both trained and used across this ecosystem, with FL applied to train larger models (e.g. the organisation models in Figure 2) and personalised embeddings used by individuals to get the best experience from their therapy bots and healthcare assistants while maintaining privacy and autonomy.
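As a minimal sketch of how de-identified aggregates might flow from individual PKGs into a community KG, the function below counts how many people report each value of a predicate, counts each person at most once per value, drops subject identifiers, and suppresses values reported by fewer than `k` people (a small-count threshold in the spirit of k-anonymity). The predicate name, threshold, and data are hypothetical, and such suppression alone is not a complete de-identification guarantee.

```python
from collections import Counter
from typing import Dict, Iterable, Set, Tuple

Triple = Tuple[str, str, str]


def community_aggregate(pkgs: Iterable[Set[Triple]], predicate: str, k: int = 3) -> Dict[str, int]:
    """Count, per value of `predicate`, how many individual PKGs assert it.

    Subjects are discarded, each person counts at most once per value, and
    values reported by fewer than k people are suppressed."""
    counts: Counter = Counter()
    for pkg in pkgs:
        counts.update({o for (_, p, o) in pkg if p == predicate})
    return {value: n for value, n in counts.items() if n >= k}


pkgs = [
    {("ex:p1", "ex:reportedSideEffect", "ex:nausea")},
    {("ex:p2", "ex:reportedSideEffect", "ex:nausea"), ("ex:p2", "ex:reportedSideEffect", "ex:rareRash")},
    {("ex:p3", "ex:reportedSideEffect", "ex:nausea")},
    {("ex:p4", "ex:reportedSideEffect", "ex:nausea"), ("ex:p4", "ex:hba1c", "7.1")},
]
print(community_aggregate(pkgs, "ex:reportedSideEffect", k=3))  # {'ex:nausea': 4}
```

Note the tension this exposes: rare side effects, which the community may most want to learn about, are exactly what such thresholds hide, so sharing them may require explicit, negotiated consent instead.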

4.3 Decentralised KG Management

As the amount of data and knowledge grows exponentially, managing and harnessing this vast information becomes increasingly complex. Traditional centralised approaches to KG management face challenges in terms of scalability, privacy, and control over data; decentralised KG management is emerging as a promising solution to these issues. This section explores the key aspects of, and open challenges in, decentralised KG management for enabling trust, accountability, and self-determination for individuals in a rapidly evolving AI ecosystem.

4.3.1 Decentralised KG Access and Management

Efficient query processing infrastructures are fundamental for traversing decentralised KGs, and there have been notable efforts in this direction in the past, such as the FedBench benchmark for federated query processing [92]. However, such infrastructures should also be capable of executing queries against the available KGs while respecting privacy and adhering to norms and policies. With the increasing emphasis on privacy protection in regulations such as the GDPR, it is crucial to develop mechanisms that allow users to access and extract knowledge from KGs without exposing sensitive information or violating privacy regulations. Several research directions are worth considering to address the open challenges in decentralised KG management. Firstly, developing formalisms to describe KG management semantically can provide a common ground for understanding and interoperability across different decentralised KG systems. Such formalisms can enable standardised representations of KGs in the form of ontologies and facilitate the integration of, and collaboration among, diverse knowledge sources. Secondly, architectures supporting new protocols and standards specific to decentralised KGs are essential for establishing interoperability and seamless communication between knowledge sources and systems. By defining and adopting common protocols and standards, decentralised KGs can collaborate more effectively, share insights, and facilitate cross-domain knowledge discovery.
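The requirement that decentralised queries respect source-side policies can be sketched as follows. Each source exposes only the triples its owner allows a given requester to see, and a conjunctive query (a basic graph pattern with `?variables`) is evaluated over the union of what the requester may see. This is a simplification under stated assumptions: the per-predicate policy model and identifiers are hypothetical, and real federated engines perform source selection and push sub-queries to sources instead of pulling all visible triples.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

Triple = Tuple[str, str, str]
Binding = Dict[str, str]


@dataclass
class Source:
    name: str
    triples: Set[Triple]
    # Hypothetical policy model: requester -> predicates that requester may see.
    policy: Dict[str, Set[str]] = field(default_factory=dict)

    def visible_to(self, requester: str) -> Set[Triple]:
        allowed = self.policy.get(requester, set())
        return {t for t in self.triples if t[1] in allowed}


def match(pattern: Triple, triple: Triple, binding: Binding) -> Optional[Binding]:
    """Extend `binding` so that `pattern` matches `triple`, or return None."""
    result = dict(binding)
    for p, t in zip(pattern, triple):
        if p.startswith("?"):
            if result.setdefault(p, t) != t:
                return None
        elif p != t:
            return None
    return result


def evaluate(patterns: List[Triple], triples: Set[Triple]) -> List[Binding]:
    """Nested-loop join of the triple patterns, left to right."""
    bindings: List[Binding] = [{}]
    for pattern in patterns:
        next_bindings = []
        for b in bindings:
            for t in triples:
                extended = match(pattern, t, b)
                if extended is not None:
                    next_bindings.append(extended)
        bindings = next_bindings
    return bindings


def federated_query(sources: List[Source], requester: str, patterns: List[Triple]) -> List[Binding]:
    visible = set().union(*(s.visible_to(requester) for s in sources))
    return evaluate(patterns, visible)


alice = Source("alicePKG",
               {("ex:alice", "ex:hasCondition", "ex:diabetes"), ("ex:alice", "ex:hba1c", "7.1")},
               {"ex:researchInstitute": {"ex:hasCondition"}})
community = Source("diabetesKG", {("ex:diabetes", "ex:treatedWith", "ex:metformin")},
                   {"ex:researchInstitute": {"ex:treatedWith"}})
print(federated_query([alice, community], "ex:researchInstitute",
                      [("?p", "ex:hasCondition", "?c"), ("?c", "ex:treatedWith", "?drug")]))
```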

Note that if we add LLMs to the picture, their training and execution processes are currently centralised. Decentralised KG management is useful for providing transparency about the data used for their training. For approaches involving interaction between LLMs and KGs, the transparency of the LLM itself still depends on its owner.

4.3.2 Provenance and Explanations

Furthermore, explainable methods for data integration and curation, as well as for KG validation and distribution, are necessary to ensure the reliability and accuracy of decentralised KGs; the Explanation Ontology for user-centric AI [23] is one example of a supporting resource. Transparent and interpretable approaches give users better insight into knowledge integration and validation, enhancing trust in, and accountability for, the knowledge contained in the KG and the insights derived from it. This is especially critical because, in decentralised KGs, data may come from various sources and be represented in different ways. The standardised framework provided by the Explanation Ontology for representing domain-specific explanations of KG entities and relationships helps users and applications understand the meaning and context of the data in the KG. Provenance and traceability also play a vital role in decentralised KG management. Establishing mechanisms to track and validate the origin, history, and lineage of knowledge within KGs is crucial for accountability and for the ability to trace back the sources and transformations that contribute to the resulting knowledge. The W3C provenance data management standards [71] provide the basis for encoding provenance attributes in KGs, and the subsequent nanopublication specification [38] has gained a lot of traction in the biomedical domain. While these solutions exist, a cohesive framework is needed that ties together explanations and provenance data management in a decentralised KG context and ensures that users can trace the origins, transformations, and sources of the data, which is crucial for trust, accountability, and data quality assurance. The W3C provenance suite of recommendations provides normative, interoperable guidance on recording information about data sources, contributors, and how data is collected or transformed, making it easier to integrate heterogeneous data into a coherent KG. 
When data quality issues arise, users can trace them back to their source and take corrective action, ensuring that the KG remains accurate and reliable. The W3C provenance recommendations also provide a mechanism for attributing data to its sources or contributors, which is essential for accountability, especially when multiple parties contribute to a KG.
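Tracing a piece of community knowledge back to its sources can be sketched with two PROV-O properties, `prov:wasDerivedFrom` and `prov:wasAttributedTo`. For readability, this minimal sketch stores triples as plain Python tuples and walks the derivation chain breadth-first; in an RDF store, the same lineage query could be written with a SPARQL 1.1 property path such as `prov:wasDerivedFrom+`. The `ex:` entities and agents are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]
DERIVED = "prov:wasDerivedFrom"
ATTRIBUTED = "prov:wasAttributedTo"


def lineage(entity: str, graph: Set[Triple]) -> List[str]:
    """All entities that `entity` was (transitively) derived from, breadth-first."""
    seen: List[str] = []
    frontier = [entity]
    while frontier:
        current = frontier.pop(0)
        for s, p, o in sorted(graph):
            if s == current and p == DERIVED and o not in seen and o != entity:
                seen.append(o)
                frontier.append(o)
    return seen


def responsible_agents(entity: str, graph: Set[Triple]) -> Dict[str, List[str]]:
    """Map the entity and each upstream entity to the agents it is attributed to."""
    chain = [entity] + lineage(entity, graph)
    return {e: sorted(o for s, p, o in graph if s == e and p == ATTRIBUTED) for e in chain}


prov_graph = {
    ("ex:diabetesTrends", DERIVED, "ex:aliceAggregate"),
    ("ex:aliceAggregate", DERIVED, "ex:aliceGlucoseReadings"),
    ("ex:diabetesTrends", ATTRIBUTED, "ex:diabetesCommunity"),
    ("ex:aliceAggregate", ATTRIBUTED, "ex:aliceSanitiser"),
    ("ex:aliceGlucoseReadings", ATTRIBUTED, "ex:aliceGlucoseMeter"),
}
print(lineage("ex:diabetesTrends", prov_graph))
print(responsible_agents("ex:diabetesTrends", prov_graph))
```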

4.3.3 Blockchain Technologies and Tokenomics

In recent years, the integration of blockchain technologies and tokenomics has gained attention in the context of decentralised KG management. Projects such as OriginTrail (https://origintrail.io) have contributed to the development of ownable decentralised knowledge graphs (DKGs), which leverage blockchain’s inherent properties to enhance trust, provenance, and accountability. By utilising blockchain, KG management systems can ensure the integrity and traceability of data and metadata across the various nodes in the network. The OriginTrail protocol aims to create a trustless environment where data providers, consumers, and verifiers can interact and validate the authenticity and reliability of data stored within the knowledge graph, and it issues tokens as incentives for data contributors, validators, and curators within the KG ecosystem. The integration of blockchain technologies and tokenomics in decentralised KG management addresses several critical aspects. Firstly, blockchain’s immutability and transparency enable the traceability and provenance of data and metadata, ensuring accountability throughout the KG management pipeline. Secondly, the decentralised nature of blockchain mitigates single points of failure and promotes the distribution of knowledge and decision-making power among participants. This decentralised approach aligns with the principles of self-determination, empowering individuals to have control over their data and knowledge. Thirdly, by rewarding contributors, validators, and curators with tokens, these systems encourage continuous improvement, data quality assurance, and community engagement. Token-based economies can drive the development of sustainable KG management pipelines, enabling the growth and evolution of DKGs over time. However, the tokenomics must be carefully designed and monitored to avoid giving contributors incentives (possibly extrinsic) to misbehave. 
There is also the risk that a sudden churn in blockchain participants impacts performance and availability, as well as the open question of the performance of the consensus algorithm of the specific blockchain itself.
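To illustrate the kind of design choice that careful tokenomics involves, the following is a hypothetical reward rule, not OriginTrail's actual mechanism: a reward pool is split in proportion to each contributor's validator-accepted contributions, and contributors whose rejection rate exceeds a threshold receive nothing. The function name, threshold, and data are assumptions for illustration only.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def distribute_rewards(contributions: Iterable[Tuple[str, bool]], pool: float,
                       max_reject_rate: float = 0.5) -> Dict[str, float]:
    """Split `pool` in proportion to accepted contributions.

    `contributions` holds (contributor, accepted_by_validators) pairs.
    Contributors with no accepted work, or whose rejection rate exceeds
    `max_reject_rate`, receive nothing (a hypothetical anti-spam rule)."""
    accepted: Counter = Counter()
    total: Counter = Counter()
    for contributor, was_accepted in contributions:
        total[contributor] += 1
        if was_accepted:
            accepted[contributor] += 1
    eligible = {c: accepted[c] for c in total
                if accepted[c] > 0 and 1 - accepted[c] / total[c] <= max_reject_rate}
    denominator = sum(eligible.values())
    if denominator == 0:
        return {}
    return {c: pool * n / denominator for c, n in eligible.items()}


history = [("alice", True), ("alice", True), ("alice", True), ("alice", False),
           ("bob", True),
           ("mallory", True), ("mallory", False), ("mallory", False),
           ("mallory", False), ("mallory", False)]
print(distribute_rewards(history, pool=100.0))  # mallory's high rejection rate forfeits rewards
```

Even such a rule can be gamed, for example by splitting activity across many pseudonymous identities, which is why incentive schemes need ongoing monitoring.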

4.3.4 Grounding based on our Illustrative Scenario

Figure 5 illustrates an approach to decentralised knowledge graph management for healthcare in our illustrative scenario (Figure 2), in which users retain control over their personal information while benefiting from enhanced privacy measures and seamless collaboration within a community. At the heart of this framework lie PKGs, such as Solid pods, which empower individuals to store and manage their personal health data securely. Central to the architecture are components aimed at safeguarding user privacy and ensuring data transparency. The process begins with knowledge sanitisation, which anonymises sensitive information and filters the data according to the user’s preferences and data policies. These policies encompass not only widely recognised regulations such as the GDPR and HIPAA but also individual data policies, enabling users to set granular restrictions on how their data is used, such as opting out of the use of their genetic data for medical research. To ensure interoperability and standardisation, knowledge graph creation leverages community-defined ontologies and vocabularies. These shared frameworks facilitate the integration and alignment of personal knowledge graphs within the broader ecosystem, promoting data exchange and collaboration. Users are incentivised to aggregate their knowledge graphs, contributing to the construction of community-based knowledge graphs focused on specific diseases. These disease-based knowledge graphs are created through community-based verification, validation, and knowledge aggregation processes, providing valuable insights and fostering collaboration among healthcare professionals, researchers, and the wider community. Blockchain-based incentives drive user participation, rewarding both community users and healthcare experts for their verification, validation, and aggregation activities. 
The use of an immutable ledger and verifiable credentials helps ensure the integrity and trustworthiness of the verification process. The validation process, powered by SHACL shapes and other RDF shape descriptions, further enhances data quality and consistency, instilling confidence in the aggregated knowledge. The integrated knowledge graphs, encompassing personal, community-based, and healthcare expert knowledge, can be queried using federated querying mechanisms powered by SPARQL. This allows various institutions, including insurers, pharmaceutical companies, and medical research organisations, to access and leverage the insights stored within the knowledge graphs, enabling evidence-based decision-making and advancing medical research and healthcare practice. By combining decentralised knowledge graph management, user-centric privacy controls, and collaborative data sharing, this framework represents a significant step towards a secure, privacy-enhanced environment that empowers users, facilitates collaboration, and drives advancements in domains such as medical knowledge and patient care.
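The sanitisation step described above can be sketched as a filter over PKG triples: predicates the user has opted out of (e.g. genetic data) and directly identifying predicates are dropped, and subject IRIs are replaced by keyed pseudonyms. Note that keyed pseudonymisation is weaker than anonymisation (under the GDPR, pseudonymised data is still personal data), so this is at best a first step. The function name, pseudonym namespace, and predicates are hypothetical.

```python
import hashlib
import hmac
from typing import Iterable, Set, Tuple

Triple = Tuple[str, str, str]
PSEUDO_NS = "https://example.org/pseudo/"  # hypothetical namespace for pseudonyms


def sanitise(triples: Iterable[Triple], opted_out: Set[str],
             direct_identifiers: Set[str], secret: bytes) -> Set[Triple]:
    """Drop opted-out and directly identifying predicates; pseudonymise subjects.

    The same subject and secret always yield the same pseudonym, so a user's
    contributions remain linkable to each other but not to their IRI."""
    def pseudonym(iri: str) -> str:
        return PSEUDO_NS + hmac.new(secret, iri.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

    sanitised = set()
    for s, p, o in triples:
        if p in opted_out or p in direct_identifiers:
            continue
        sanitised.add((pseudonym(s), p, o))
    return sanitised


pkg = {("ex:alice", "ex:name", "Alice"),
       ("ex:alice", "ex:genomeVariant", "ex:rs123"),
       ("ex:alice", "ex:hba1c", "7.1")}
print(sanitise(pkg, opted_out={"ex:genomeVariant"}, direct_identifiers={"ex:name"},
               secret=b"user-held secret"))
```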
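The shape-based validation step can be illustrated with a minimal SHACL-like checker. The constraint names mirror SHACL Core (`sh:targetClass`, `sh:minCount`, `sh:maxCount`, `sh:datatype`, `sh:in`), but this is not a SHACL engine: shapes are plain dictionaries, Python types stand in for XSD datatypes, and the glucose-reading shape and data are hypothetical.

```python
from typing import Any, Dict, List, Set, Tuple

Triple = Tuple[str, str, Any]
RDF_TYPE = "rdf:type"


def validate(triples: Set[Triple], shape: Dict[str, Any]) -> List[str]:
    """Return human-readable violations; an empty list means the data conforms."""
    focus_nodes = {s for s, p, o in triples if p == RDF_TYPE and o == shape["targetClass"]}
    violations = []
    for node in sorted(focus_nodes):
        for path, c in shape["properties"].items():
            values = [o for s, p, o in triples if s == node and p == path]
            if len(values) < c.get("minCount", 0):
                violations.append(f"{node}: {path} has {len(values)} value(s), minCount {c['minCount']}")
            if "maxCount" in c and len(values) > c["maxCount"]:
                violations.append(f"{node}: {path} has {len(values)} values, maxCount {c['maxCount']}")
            for v in values:
                if "datatype" in c and not isinstance(v, c["datatype"]):
                    violations.append(f"{node}: {path} value {v!r} is not {c['datatype'].__name__}")
                if "in" in c and v not in c["in"]:
                    violations.append(f"{node}: {path} value {v!r} is not an allowed value")
    return violations


reading_shape = {
    "targetClass": "ex:GlucoseReading",
    "properties": {
        "ex:value": {"minCount": 1, "maxCount": 1, "datatype": float},
        "ex:unit": {"minCount": 1, "in": {"mmol/L", "mg/dL"}},
    },
}
readings = {
    ("ex:r1", RDF_TYPE, "ex:GlucoseReading"), ("ex:r1", "ex:value", 6.2), ("ex:r1", "ex:unit", "mmol/L"),
    ("ex:r2", RDF_TYPE, "ex:GlucoseReading"), ("ex:r2", "ex:value", "high"),
}
for violation in validate(readings, reading_shape):
    print(violation)
```

In the framework above, such checks would run before a contribution is accepted into a community KG, so that aggregated knowledge only contains data that conforms to the community's shapes.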

4.4 Explainable Neuro-symbolic AI

Neuro-symbolic systems go beyond generating explanations solely based on the trained model or the individual results derived from applying the model to specific data. They can produce symbolic explanations capturing the essence of an AI model itself. These explanations can be classified as either instance-level explanations generated for each specific result of the model or model-level explanations of the structure of a learned model. Previous work on the role of KGs in AI has focused on explainability. [61] frames explainability as a dimension of trustable AI and presents challenges, existing approaches, limitations and opportunities for KGs to bring explainable AI to the right level of semantics and interpretability. [107] and [88] conducted independent systematic reviews of existing explainable AI systems to characterise KGs’ impact. These results put into perspective the role of KGs in providing symbolic reasoning and learning capabilities with the potential to be precise, as shown by Akrami et al. [2], in addition to being explainable.

4.4.1 Reasoning and AI

Despite the unquestionable reasoning features of symbolic systems and the studies reporting limitations of LLMs in human-like tasks (e.g. explanations, memories, and reasoning over factual statements) [40], there is an ongoing debate about LLMs’ reasoning and causal inference capabilities [52]. Although LLMs excel at certain reasoning tasks, they perform poorly at others, raising the question of whether they genuinely engage in causal reasoning or merely function as unreliable mimics that generate memorised responses (e.g. [45]). Methods for reasoning with LLMs can be roughly divided into those using only the LLM itself (e.g. with prompt engineering) and those combining the LLM with an external reasoner and/or an external source of knowledge (e.g. a Knowledge Graph) [86]. Our vision posits that external help will always be needed, especially for concrete use cases. There are also discussions about the need for knowledge graphs in the era of LLMs. Sun et al. [103] and Dong [31] report on an empirical assessment of ChatGPT [93] with respect to DBpedia, illustrating the need for symbolic systems that stay faithful to the ground truth whenever factual statements are collected from KGs. In addition, symbolic approaches can support sanity checking and are easily auditable and traceable. These features make the combination of both approaches in neuro-symbolic AI a feasible option for providing KG-based AI. Neuro-symbolic AI provides the basis for integrating the discrete methods implemented by symbolic AI with the high-dimensional vector spaces managed by LLMs. Such systems must decide when and how to combine the two components, e.g. following a principled integration (combining neural and symbolic components while maintaining a clear separation between their roles and representations) or a fully integrated approach (e.g. a symbolic reasoner integrated into the tuning process of an LLM).

4.4.2 Trust and AI

Trust in AI systems stems from various factors, including transparency, reproducibility, predictability, and explainability. Neuro-symbolic systems play a vital role in enhancing trustworthiness by enabling communication between modules and facilitating tracing. Modularity enables the specification, verification, and validation of each component and its interactions. As a result, a system’s behaviour can be traced and validated. Specifically, within the domain of KG-based AI for self-determination, the seamless integration of KGs and symbolic semantic reasoning offers a comprehensive and unified perspective on curated knowledge. This integration holds immense value in addressing critical tasks such as validating, refuting, and explaining incorrect, biased, or misleading information that may potentially be generated by LLMs. By combining symbolic reasoning over KGs with LLMs, the propagation of misinformation can be mitigated while simultaneously enhancing the transparency and trustworthiness of AI-generated outputs. Consequently, KG-based AI systems can effectively emulate human behaviour by subjecting mistakes arising from false or incomplete information to a process of validation and enrichment using curated and potentially peer-reviewed sources of knowledge [109].
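The validation role described above can be sketched as checking claims extracted from LLM output against a curated KG. The extraction of triples from free text is assumed and not shown. Because KGs follow the open-world assumption, absence from the KG does not make a claim false: in this minimal sketch, a claim is "refuted" only when it conflicts with a KG fact on a predicate declared functional (single-valued), and the conflicting triple is returned as an explanation. The `ex:` vocabulary and the choice of functional predicates are hypothetical.

```python
from typing import Optional, Set, Tuple

Triple = Tuple[str, str, str]


def check_claim(claim: Triple, kg: Set[Triple],
                functional: Set[str]) -> Tuple[str, Optional[Triple]]:
    """Classify an LLM-generated claim as supported, refuted or unknown.

    Returns the KG triple used as evidence, so the verdict can be explained."""
    s, p, o = claim
    if claim in kg:
        return "supported", claim
    if p in functional:
        for ks, kp, ko in sorted(kg):
            if ks == s and kp == p and ko != o:
                return "refuted", (ks, kp, ko)
    return "unknown", None  # open world: missing is not the same as false


kg = {("ex:metformin", "ex:drugClass", "ex:biguanide"),
      ("ex:metformin", "ex:contraindicatedWith", "ex:severeRenalImpairment")}
functional = {"ex:drugClass"}
llm_claims = [("ex:metformin", "ex:drugClass", "ex:sulfonylurea"),
              ("ex:metformin", "ex:contraindicatedWith", "ex:severeRenalImpairment"),
              ("ex:metformin", "ex:treats", "ex:type2Diabetes")]
for claim in llm_claims:
    print(claim, check_claim(claim, kg, functional))
```

The third claim is medically correct but comes back "unknown" because this toy KG does not contain it, which is why enriching curated sources matters as much as checking against them.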

4.4.3 Quality and AI

A notable application of KGs in neuro-symbolic AI is as a source of informative prior knowledge to increase the quality of machine learning models. An example is the work by Rivas et al. [89], where a deductive database, expressed in Datalog, establishes an axiomatic system of the pharmacokinetic behaviour of a treatment’s drugs and enables the deduction of new drug-drug interactions in cancer treatments. This prior knowledge plays a crucial role in elucidating the characteristics of a therapy and justifying its efficacy by considering all the interactions and the dynamic movement of drugs within the body. It encompasses factors such as the absorption, bioavailability, metabolism, and excretion of drugs over time. A KG embedding model improves its prediction of the effectiveness of a treatment based on prior knowledge, which encodes statements about a treatment’s characteristics; these statements are inferred by a deductive system which comprises the symbolic component of the hybrid approach. An approach for explaining link prediction (e.g. [90]) allows the justification of why this added prior knowledge affects the model’s decisions, potentially improving trust in the model’s results.
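The deductive component can be illustrated with naive bottom-up (fixpoint) evaluation of Datalog-style rules, written in plain Python rather than a Datalog engine. The two rules are a simplified, hypothetical illustration of pharmacokinetic reasoning, not the axioms of [89]: a drug that inhibits an enzyme interacts with drugs metabolised by that enzyme, and a drug in such an interaction within the same treatment has increased exposure. The clarithromycin/simvastatin example (a well-known CYP3A4 interaction) is used only because it is easy to follow.

```python
from typing import Set, Tuple

Fact = Tuple[str, ...]  # (predicate, arg1, arg2, ...)


def apply_rules(facts: Set[Fact]) -> Set[Fact]:
    """One round of both rules over the current facts; returns only new facts."""
    derived: Set[Fact] = set()
    inhibits = [(f[1], f[2]) for f in facts if f[0] == "inhibits"]
    metabolised = [(f[1], f[2]) for f in facts if f[0] == "metabolisedBy"]
    in_treatment = {f[1] for f in facts if f[0] == "inTreatment"}
    # interacts(A, B) :- inhibits(A, E), metabolisedBy(B, E), A != B.
    for a, enzyme in inhibits:
        for b, enzyme2 in metabolised:
            if enzyme == enzyme2 and a != b:
                derived.add(("interacts", a, b))
    # increasedExposure(B) :- interacts(A, B), inTreatment(A), inTreatment(B).
    for f in facts:
        if f[0] == "interacts" and f[1] in in_treatment and f[2] in in_treatment:
            derived.add(("increasedExposure", f[2]))
    return derived - facts


def fixpoint(facts: Set[Fact]) -> Set[Fact]:
    """Naive evaluation: apply the rules until no new facts are derived."""
    known = set(facts)
    while True:
        new = apply_rules(known)
        if not new:
            return known
        known |= new


treatment = {("inhibits", "ex:clarithromycin", "ex:CYP3A4"),
             ("metabolisedBy", "ex:simvastatin", "ex:CYP3A4"),
             ("inTreatment", "ex:clarithromycin"),
             ("inTreatment", "ex:simvastatin")}
for fact in sorted(fixpoint(treatment) - treatment):
    print(fact)
```

Facts derived this way could then be added as prior knowledge for a KG embedding model, and each one can be justified by the rule instance that produced it.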

4.4.4 Grounding Based on Our Illustrative Scenario

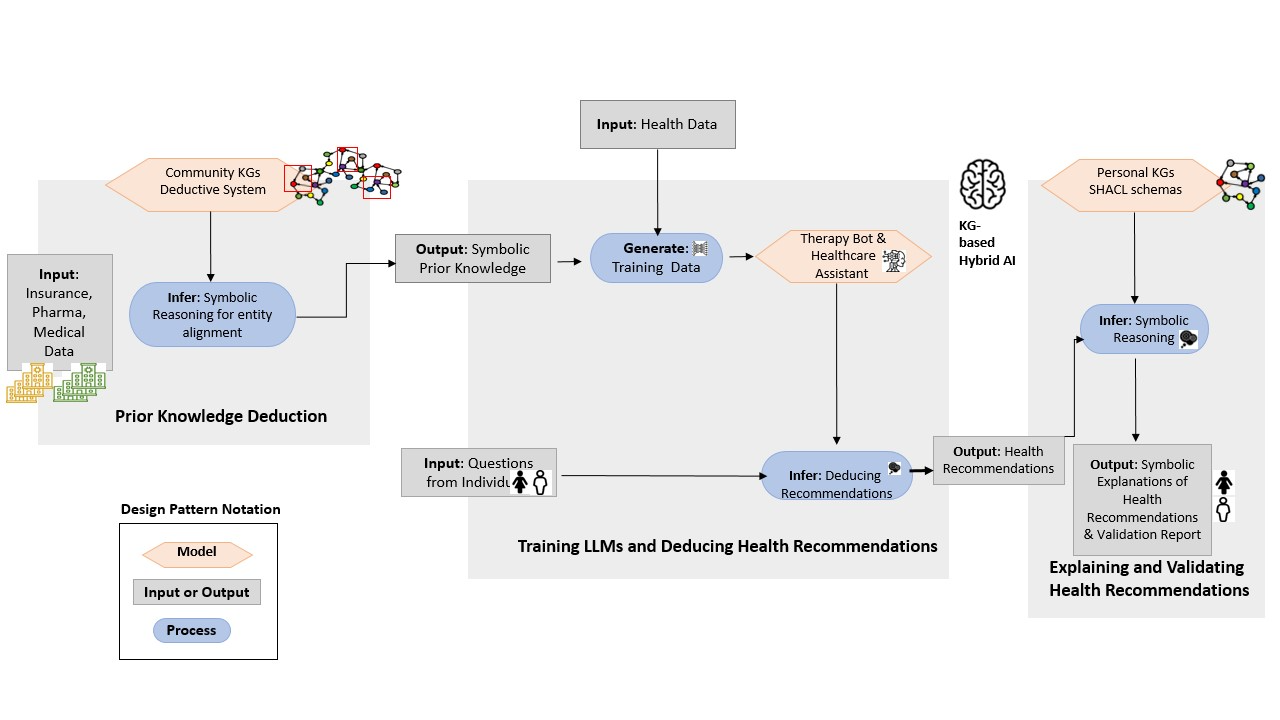

Building on the example presented in Figure 2, when individuals and professionals engage in communities with bots and assistants powered by AI models, it is critical to ensure the transparency of the models' decision-making processes. However, despite the increasing focus on LLMs in healthcare and their continual improvement in terms of precision and accuracy [100], their outcomes can still be susceptible to hidden biases and a lack of traceability [62]. To tackle these challenges, a neuro-symbolic system can enhance LLMs with reasoning capabilities by operating as a deductive system over a user's KG. Such hybrid AI systems can be modelled using the patterns proposed in [109]. Figure 6 depicts a pattern describing a hybrid AI system that enhances the explainability of the LLMs in our running example. At the community level, symbolic reasoning applied to the ontology of shared PKGs can generate prior knowledge, enabling precise and concrete questions to be posed to an LLM and providing additional contextual information. Moreover, a symbolic system facilitates the linking of shared PKGs with corresponding entities in KGs related to insurance, pharmaceuticals, and medical research. By incorporating this prior knowledge, the LLM's answers are improved and validated with the assistance of the symbolic system. Systems that operate at the community level and involve heterogeneous sources can be described using the explainable system with prior knowledge pattern: data alignments comprising prior knowledge enhance the contextual knowledge provided to the therapy bot, facilitating better-informed health recommendations.
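As a rough sketch of the explainable system with prior knowledge pattern in our scenario, the snippet below assumes a shared PKG represented as triples and a tiny community ontology consisting only of subclass axioms. Symbolic reasoning (here, just transitive subclass inference, a small fragment of RDFS entailment) derives prior knowledge that is placed in the prompt sent to the therapy bot. The bot's recommendation is then checked against a hypothetical contraindication KG. All identifiers (`PKG`, `ONTOLOGY`, `CONTRAINDICATED`, `build_prompt`, `validate`, `drug_x`) are illustrative assumptions, and no actual LLM is called.

```python
Triple = tuple[str, str, str]

# Hypothetical shared PKG of a community member.
PKG: set[Triple] = {
    ("member42", "hasCondition", "Type2Diabetes"),
    ("member42", "hasCondition", "ChronicKidneyDisease"),
}

# Hypothetical community ontology: (subclass, superclass) axioms.
ONTOLOGY: set[tuple[str, str]] = {
    ("Type2Diabetes", "Diabetes"),
    ("Diabetes", "MetabolicDisorder"),
    ("ChronicKidneyDisease", "KidneyDisease"),
}

# Hypothetical pharmaceutical KG: condition classes that contraindicate a drug.
CONTRAINDICATED: dict[str, set[str]] = {"drug_x": {"KidneyDisease"}}


def superclasses(cls: str) -> set[str]:
    """Transitive closure of the subclass axioms starting from cls (excluding cls)."""
    found: set[str] = set()
    frontier = [cls]
    while frontier:
        current = frontier.pop()
        for sub, sup in ONTOLOGY:
            if sub == current and sup not in found:
                found.add(sup)
                frontier.append(sup)
    return found


def prior_knowledge(person: str) -> set[str]:
    """All condition classes entailed for a person by the PKG plus the ontology."""
    direct = {o for s, p, o in PKG if s == person and p == "hasCondition"}
    return direct | {sup for c in direct for sup in superclasses(c)}


def build_prompt(person: str, question: str) -> str:
    """Prepend symbolically derived context to the question sent to the LLM."""
    context = ", ".join(sorted(prior_knowledge(person)))
    return f"Known conditions (derived from the PKG): {context}.\nQuestion: {question}"


def validate(person: str, recommended_drug: str) -> tuple[bool, set[str]]:
    """Reject a recommendation if any entailed condition contraindicates it."""
    clash = prior_knowledge(person) & CONTRAINDICATED.get(recommended_drug, set())
    return (not clash, clash)


print(build_prompt("member42", "Which medication adjustments should I discuss?"))
print(validate("member42", "drug_x"))  # a hypothetical LLM suggestion
```

Because the contraindication is stated for the superclass `KidneyDisease`, it is only detected after ontology reasoning over the member's `ChronicKidneyDisease` entry; the clashing class also serves as an explicit justification for rejecting the suggestion.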

5 Proposed KG-based AI for Self-determination Research Agenda

In this section, we derive a set of requirements concerning KG-based AI for self-determination and map them to the concrete research goals introduced at the start of this vision paper.

5.1 Trust, Accountability, and Autonomy Foundational Goals

In the following, we highlight five open research challenges and opportunities for each of our four proposed foundational topics (machine-readable norms and policies, decentralised infrastructure, decentralised KG management, and explainable neuro-symbolic AI). Given the complexity of each of these requirements, assessing the maturity of existing technologies against them is beyond the scope of a vision paper.

- MRP1: Seamless policy translation.

There is a need for humans to express policies in a machine-readable format and for machines to express them in natural language or via appropriate visualisations. A major challenge involves checking that machine-readable policies faithfully represent their human-readable counterparts.

- MRP2: Multi-level policy evaluation.

Several policy languages exist. However, many of them do not have corresponding enforcement mechanisms. Given that usage constraints, community rules, and regulations operate at different yet interconnected levels, there is a need to devise effective and efficient enforcement and/or compliance-checking strategies.

- MRP3: Negotiation.

Facilitate autonomy via fair and safe negotiation between individuals, communities, and organisations. Here, there is a need to study the benefits and trade-offs between merely assisting humans in making decisions and developing automated approaches that relieve individuals of constant confirmations (e.g. the cookie-consent problem).

- MRP4: Compliance verification.

Provide support for both ex-ante and ex-post compliance-checking mechanisms. Despite their potential, it remains to be seen which machine-readable agreements can actually be enforced by trusted execution environments (TEEs). Additionally, in scenarios where cheating does not pay for data processors, game-theoretic approaches could be used to underpin honour-based compliance checking.

- MRP5: Data misuse detection.

Instil trust and ensure accountability in KG-based AI by developing mechanisms that can detect whether any party has violated policies and norms. In this context, causal reasoning and explanations could be used both to detect misuse and to better understand its root cause.
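To illustrate MRP1 and MRP2 together, the sketch below models simplified, ODRL-inspired rules (a permission or prohibition of an action for a purpose) at three hypothetical levels: individual, community, and regulation. A request is evaluated against all levels with a deny-overrides strategy: any applicable prohibition wins, and otherwise an explicit permission is required. The decision is then rendered back into English. The `Rule` structure, the level names, and the combining strategy are our assumptions; they are not the ODRL vocabulary or the API of any existing enforcement engine.

```python
from dataclasses import dataclass
from typing import Literal


@dataclass(frozen=True)
class Rule:
    """Hypothetical simplified policy rule, loosely inspired by ODRL."""
    level: Literal["individual", "community", "regulation"]
    effect: Literal["permit", "prohibit"]
    action: str   # e.g. "use", "share"
    purpose: str  # e.g. "research"; "*" matches any purpose

    def applies(self, action: str, purpose: str) -> bool:
        return self.action == action and self.purpose in ("*", purpose)

    def to_text(self) -> str:
        """Render the rule in natural language (machine-to-human, cf. MRP1)."""
        verb = "permits" if self.effect == "permit" else "prohibits"
        scope = "any purpose" if self.purpose == "*" else self.purpose
        return f"The {self.level} policy {verb} '{self.action}' for {scope}."


def evaluate(rules: list[Rule], action: str, purpose: str) -> tuple[bool, list[str]]:
    """Deny-overrides combination across all levels; returns decision and reasons."""
    applicable = [r for r in rules if r.applies(action, purpose)]
    prohibitions = [r for r in applicable if r.effect == "prohibit"]
    if prohibitions:
        return False, [r.to_text() for r in prohibitions]
    permits = [r for r in applicable if r.effect == "permit"]
    if permits:
        return True, [r.to_text() for r in permits]
    return False, ["No applicable permission at any level (default deny)."]


# Hypothetical rules at three interconnected levels.
rules = [
    Rule("individual", "permit", "use", "research"),
    Rule("community", "prohibit", "share", "*"),
    Rule("regulation", "prohibit", "use", "marketing"),
]
print(evaluate(rules, "use", "research"))
print(evaluate(rules, "use", "marketing"))
print(evaluate(rules, "share", "research"))
```

Deny-overrides is only one of several possible combining strategies; which one suits interconnected usage constraints, community rules, and regulations is part of the open question raised by MRP2.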

5.1.1 Decentralised Infrastructure

- DI1: Comprehensive recording.

A distributed ledger technology (DLT) can provide an immutable record, but work remains on how best to connect KG-based AI activities to it, e.g. those performed by a possible federated query engine.

- DI2: Personalised tracing.

Providing individual and community owners of PKGs with personalised traces of how acquired data was processed and used will involve disaggregating KG processing and inferencing according to each user's data, while ensuring that privacy is not violated when individual results are returned.

- DI3: “Decency” check.

There is a need for easy-to-use services that allow users and communities to check if an organisation has behaved in a “decent” way when it processes acquired data. Research here will examine how “decency” can be defined and validated by comparing PKG declarations of use (e.g. policies) with generated traces of use.

- DI4: Interoperability.

Develop mechanisms that facilitate comprehensive, interoperable identification of human and machine participants in KG-based AI processes. For example, users and communities will wish to know and be able to validate claims that a data request comes from a particular organisation, unit and even individual KG processor. This will provide a foundation for accountability at all levels of granularity.

- DI5: Self-sovereignty.

True self-sovereign KG-based AI needs to be: (i) based upon easy-to-use self-sovereign identities and data management; and (ii) capable of supporting the continuous monitoring of organisational behaviours in a transparent fashion.
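A minimal sketch of how DI1 to DI3 could fit together rests on three assumptions: (i) a hash-chained, append-only log serves as a local stand-in for a DLT, (ii) each trace entry is tagged with the PKG owner whose data was used, and (iii) "decency" is defined narrowly as every traced purpose being among the purposes the owner declared. `TraceLog`, its fields, `decency_check`, and this definition of decency are hypothetical. A real deployment would anchor the chain on a ledger, protect privacy when returning traces, and need a much richer notion of decency.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class TraceLog:
    """Hypothetical append-only log; each entry commits to its predecessor's hash."""
    entries: list[dict] = field(default_factory=list)

    def append(self, processor: str, owner: str, purpose: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"processor": processor, "owner": owner, "purpose": purpose, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it (cf. DI1)."""
        prev = "0" * 64
        for e in self.entries:
            body = {k: e[k] for k in ("processor", "owner", "purpose", "prev")}
            if e["prev"] != prev or e["hash"] != _digest(body):
                return False
            prev = e["hash"]
        return True

    def trace_for(self, owner: str) -> list[dict]:
        """Personalised trace: only the entries concerning one owner's data (cf. DI2)."""
        return [e for e in self.entries if e["owner"] == owner]


def decency_check(log: TraceLog, owner: str, declared: set[str]) -> list[dict]:
    """Return traced uses whose purpose the owner never declared (cf. DI3)."""
    return [e for e in log.trace_for(owner) if e["purpose"] not in declared]


# Hypothetical processing events.
log = TraceLog()
log.append("insurer_A", "alice", "risk_assessment")
log.append("pharma_B", "alice", "drug_research")
log.append("pharma_B", "bob", "drug_research")
print(log.verify())
print(decency_check(log, "alice", declared={"drug_research"}))
```

A hash chain only makes tampering evident to whoever holds a trusted copy of the latest hash; replicating that anchor across parties is precisely what a DLT would contribute.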

5.1.2 Decentralised KG Management

- DKG1: Knowledge Sanitisation.

Develop robust techniques for knowledge sanitisation that protect user privacy by anonymising and filtering sensitive information based on data policies. These policies can be regulations such as GDPR and HIPAA, as well as individual-level data policies enforced at users' personal data stores, empowering users to specify their sharing preferences and control which aspects of their data they disclose.

- DKG2: Knowledge Graph Aggregation.

Design and implement mechanisms that encourage users to contribute their PKGs to aggregated knowledge graphs, such as a concerted effort towards developing disease-specific KGs. Components of this goal include blockchain-based incentive models that reward users for contributing to the construction of such knowledge graphs, thereby fostering collaboration and enriching the overall quality of shared knowledge.

- DKG3: Knowledge Verification.

Develop community-based and expert processes to verify the knowledge available in the global KGs. On the community front, it is critical to ensure that a knowledge item previously contributed by an individual has not been altered (either through error or with malicious intent), for instance via blockchain primitives, as explained in the previous section.

- DKG4: Knowledge Validation.

Validation of knowledge is paramount to ensure KG interoperability and the consumption of knowledge in target applications. By employing RDF and SHACL technologies, it can be checked that the DKGs across different data stores conform to a specific template (e.g. a set of SHACL shapes), thus enabling their integration with community-supported KGs.

- DKG5: Federated Querying.

Explore and implement federated querying mechanisms, specifically using SPARQL, to enable efficient querying across integrated KGs. This includes developing techniques that allow institutions such as insurers, pharmaceutical companies, and medical research organisations to access and extract insights from the knowledge graphs, enhancing decision-making and advancing their respective domains.
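The sketch below combines DKG1 and DKG4 on PKG triples under our own assumptions. A hypothetical owner-level policy lists predicates that must never leave the personal data store, the owner's identifier is pseudonymised with a salted hash, and a minimal SHACL-inspired shape (a set of required predicates per class) is checked before the sanitised graph is contributed to a community KG. It is plain Python, not a SHACL processor. In practice, a SHACL engine would evaluate real shapes over RDF, and salted hashing is pseudonymisation, which is weaker than full anonymisation.

```python
import hashlib

Triple = tuple[str, str, str]

# Hypothetical PKG of one user.
pkg: list[Triple] = [
    ("alice", "rdf:type", "Patient"),
    ("alice", "hasCondition", "Type2Diabetes"),
    ("alice", "takesDrug", "metformin"),
    ("alice", "homeAddress", "12 Example Street"),
    ("alice", "hasName", "Alice Smith"),
]

# Hypothetical owner-level sharing policy: predicates withheld from sharing.
WITHHELD = {"homeAddress", "hasName"}

# Hypothetical SHACL-inspired shape: every shared Patient needs these predicates.
SHAPES: dict[str, set[str]] = {"Patient": {"hasCondition", "takesDrug"}}


def pseudonym(identifier: str, salt: str) -> str:
    """Salted-hash pseudonym (pseudonymisation, not full anonymisation)."""
    return "anon:" + hashlib.sha256((salt + identifier).encode()).hexdigest()[:12]


def sanitise(triples: list[Triple], owner: str, salt: str) -> list[Triple]:
    """Drop withheld predicates and replace the owner's identifier with a pseudonym."""
    alias = pseudonym(owner, salt)
    return [
        (alias if s == owner else s, p, alias if o == owner else o)
        for s, p, o in triples
        if p not in WITHHELD
    ]


def shape_violations(triples: list[Triple]) -> list[str]:
    """Report missing required predicates; an empty list means the graph conforms."""
    violations = []
    typed = {(s, o) for s, p, o in triples if p == "rdf:type"}
    for subject, cls in typed:
        present = {p for s, p, _ in triples if s == subject}
        for missing in sorted(SHAPES.get(cls, set()) - present):
            violations.append(f"{subject} ({cls}) lacks {missing}")
    return violations


shared = sanitise(pkg, owner="alice", salt="per-user-secret")
print(shared)
print(shape_violations(shared))
```

Running validation after sanitisation matters: if a user's policy withholds a predicate that the community shape requires, the conflict surfaces as a reported violation rather than as a silently incomplete contribution.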

5.1.3 Explainable Neuro-Symbolic AI

- XNS1: User-dependent Recommendations.

Neuro-symbolic systems need to be empowered to present results transparently to the users according to their interests. For example, in our illustrative scenario (Figure 2), an individual may not expect the same level of detail in a health recommendation as a medical doctor or a community representative.

- XNS2: Adaptive Hybrid AI.

Define models that can adaptively combine predictive models with logical reasoning, encompassing abilities such as generalisation and causal inference. For accountability, the neuro-symbolic system should explain when the combination of logical reasoning with a therapy bot or healthcare assistant will be beneficial. For autonomy, the neuro-symbolic system should include the user in the loop and consider their opinion in this decision. Finally, trust requires verifying and validating these decisions.

- XNS3: Contextual-based Hybrid AI.

Equip neuro-symbolic systems with contextual knowledge, reasoning capabilities, and causal inference to effectively evaluate the strengths and limitations of machine learning components. This goal empowers the system to identify optimal combinations of statistical and symbolic AI methods, requiring the definition of causal models on top of KGs capable of combining reasoning over KGs with causal inference.

- XNS4: Symbolic Reasoning.

Employ inference processes, both inductive and deductive, on knowledge graphs to enable ML models, and LLMs in particular, to adjust their hyper-parameters and configuration to new environments (i.e., personal, community-based, and integrated healthcare KGs) and to provide explanations for their decisions. Despite the advances of Automated Machine Learning (AutoML) systems (e.g., AutoML (https://www.automl.org/) and AutoWeka [58]), to the best of our knowledge, there are no developments of AutoML over KGs or for neuro-symbolic systems; such developments would enhance accountability, autonomy, and trust.

- XNS5: Learning Transparency.

Investigate whether existing XAI mechanisms can be tailored for learning transparency, such that it is possible to explain what action was taken, how the decision-making was performed, and why this was perceived as the outcome offering the greatest expected satisfaction.
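A toy illustration of XNS1 and XNS2, under stated assumptions, combines a hypothetical ML confidence score for a recommendation with the outcome of symbolic checks. A violated rule vetoes the recommendation, a low-confidence score defers the decision to the user (keeping the user in the loop), and otherwise the recommendation is issued. The explanation is then rendered at a level of detail that depends on the audience. The threshold value, the role names, and the `Decision` structure are illustrative assumptions, not a proposed design.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str               # "recommend", "veto", or "ask_user"
    score: float               # hypothetical ML confidence in [0, 1]
    violated_rules: list[str]  # symbolic constraints that failed


def decide(score: float, violated_rules: list[str], threshold: float = 0.7) -> Decision:
    """Symbolic vetoes take precedence; low confidence defers to the user (cf. XNS2)."""
    if violated_rules:
        return Decision("veto", score, violated_rules)
    if score < threshold:  # hypothetical threshold
        return Decision("ask_user", score, [])
    return Decision("recommend", score, [])


def explain(d: Decision, role: str) -> str:
    """Tailor the explanation's level of detail to the audience (cf. XNS1)."""
    if role == "individual":
        messages = {
            "recommend": "This suggestion fits your health record.",
            "veto": "This suggestion was withheld because it conflicts with your record.",
            "ask_user": "We are unsure about this suggestion; would you like to review it?",
        }
        return messages[d.outcome]
    # Professionals (e.g. a medical doctor) receive the full rationale.
    rules = "; ".join(d.violated_rules) or "none"
    return f"outcome={d.outcome}, model score={d.score:.2f}, violated rules: {rules}"


d = decide(0.91, ["drug_x contraindicated by KidneyDisease"])
print(explain(d, "individual"))
print(explain(d, "doctor"))
```

Even with a high model score, the symbolic veto determines the outcome here, and the professional-level explanation exposes both the score and the rule that overrode it.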

| Machine-readable norms and policies | |||

| Trust | Accountability | Autonomy | |

| MRP1 | ✓ | ✓ | |

| MRP2 | ✓ | ✓ | |

| MRP3 | ✓ | ||

| MRP4 | ✓ | ✓ | |

| MRP5 | ✓ | ✓ | ✓ |

| Decentralised Infrastructure | |||

| Trust | Accountability | Autonomy | |

| DI1 | ✓ | ✓ | |

| DI2 | ✓ | ✓ | |

| DI3 | ✓ | ✓ | |

| DI4 | ✓ | ||

| DI5 | ✓ | ||

| Decentralised KG Management | |||

| Trust | Accountability | Autonomy | |

| DKG1 | ✓ | ||

| DKG2 | ✓ | ✓ | |

| DKG3 | ✓ | ||

| DKG4 | ✓ | ||

| DKG5 | ✓ | ||

| Explainable Neuro-Symbolic AI | |||

| Trust | Accountability | Autonomy | |

| XNS1 | ✓ | ||

| XNS2 | ✓ | ✓ | |

| XNS3 | ✓ | ✓ | |

| XNS4 | ✓ | ||

| XNS5 | ✓ | ||

5.2 AI for Self-determination

The challenges and opportunities identified for the foundational research topics help to contextualise concrete goals for trust, accountability, and autonomy from the perspective of KG-based AI for self-determination. Table 1 gives an overview of this mapping, which we discuss below by answering the overarching questions that guide this vision paper.

- (Q1) What are the key requirements for an AI system to produce trustable results?

From a trust perspective, it is important that machine-readable policies faithfully represent the human-readable policies (MRP1) in a manner that can be verified automatically (MRP2). Regardless of whether systems are automated or semi-automated, we need to be able to verify that processes behave as expected (MRP4) and that any misuse can be detected and rectified (MRP5). Trust could potentially be facilitated via auditing (DI1) and tracing (DI2), as well as via certification mechanisms that support decency checks (DI3) and (semi-)automated knowledge verification (DKG3) and validation (DKG4) techniques. Finally, human involvement is paramount to establishing trust in adaptive (XNS2) and contextualised (XNS3) hybrid AI.

- (Q2) How can AI be made accountable for its decision-making?
