Semantic Web: Past, Present, and Future

Abstract

Ever since the vision was formulated, the Semantic Web has inspired many generations of innovations. Semantic technologies have been used to share vast amounts of information on the Web, enhance them with semantics to give them meaning, and enable inference and reasoning on them. Throughout the years, semantic technologies, and in particular knowledge graphs, have been used in search engines, data integration, enterprise settings, and machine learning.

In this paper, we recap the classical concepts and foundations of the Semantic Web as well as modern and recent concepts and applications, building upon these foundations. The classical topics we cover include knowledge representation, creating and validating knowledge on the Web, reasoning and linking, and distributed querying. We enhance this classical view of the so-called “Semantic Web Layer Cake” with an update of recent concepts that include provenance, security and trust, as well as a discussion of practical impacts from industry-led contributions. We conclude with an outlook on the future directions of the Semantic Web.

This is a living document. If you like to contribute, please contact the first author and visit: https://github.com/ascherp/semantic-web-primer

Keywords and phrases:

Linked Open Data, Semantic Web Graphs, Knowledge GraphsCategory:

SurveyFunding:

Maria-Esther Vidal: Partially funded by Leibniz Association, program “Leibniz Best Minds: Programme for Women Professors”, project TrustKG-Transforming Data in Trustable Insights; Grant P99/2020.Copyright and License:

2012 ACM Subject Classification:

Information systems Semantic web description languages ; Information systems Markup languages ; Computing methodologies Ontology engineering ; Computing methodologies Knowledge representation and reasoningRelated Version:

Lifelong versionAcknowledgements:

This article is a living document. The first version was written by Jannik, Scherp, and Staab in 2011 [88]. It was translated to German and updated by Gröner, Scherp, and Staab in 2013 [67] and updated again by Gröner and Scherp in 2020 [130]. This release in 2024 reflects on the latest developments in knowledge graphs and large language models.DOI:

10.4230/TGDK.2.1.3Received:

2023-07-03Accepted:

2024-01-29Published:

2024-05-03Part Of:

TGDK, Volume 2, Issue 1 (Trends in Graph Data and Knowledge - Part 2)Journal and Publisher:

Transactions on Graph Data and Knowledge, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

Transactions on Graph Data and Knowledge, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

1 Introduction

The vision of the Semantic Web as coined by Tim Berners-Lee, James Hendler, and Orla Lassila [19] in 2001 is to develop intelligent agents that can automatically gather semantic information from distributed sources accessible over the Web, integrate that knowledge, use automated reasoning [64], and solve complex tasks as such as schedule appointments in negotiation of the preferences of the involved parties. We have come a long way since then. In this paper, we reflect on the past, i. e., the ideas and components developed in the early days of the Semantic Web. Since the beginning, the Semantic Web has tremendously developed and undergone multiple waves of innovation. The Linked Data movement has especially seen uptake by industries, governments, and non-profit organizations, alike. We discuss those present components and concepts that have been added over the years and shown to be very useful. Although many concepts of the initial idea of the Semantic Web have been implemented and put into practice, still further research is needed to reach the full vision. Thus, this paper concludes with an outlook to future directions and steps that may be taken.

For the novice reader of the Semantic Web, we provide a brief historical overview of the developments and innovation waves of the Semantic Web: At the beginning of the Semantic Web, we were mainly talking about publishing Linked Data on the Web [73], i. e., semantic data typically structured using the Resource Description Framework (RDF)111http://www.w3.org/TR/rdf-primer/ that is accessible on the Web using URIs/IRIs to identify entities, classes, predicates, etc. By referencing entities from other websites and Web-accessible sources, i. e., dereferencable via HTTP, the data becomes naturally linked. By using standardized vocabularies and ontologies the information then becomes more aligned and easier to use across sources. These principles have allowed non-profit organizations, companies, governments, and individuals to publish and share large amounts of interlinked data, which has led to the success of the Linked Open Data cloud222https://lod-cloud.net/ since 2007. Since many of the large interconnected semantic sources are accessible via interfaces understanding structured query languages (SPARQL endpoints), federated query processing methods were developed that allow exploiting the strengths of structured query languages to precisely formulate an information need and optimize the query for efficient execution in a distributed setting.

When Google launched its Knowledge Graph in 2012333https://blog.google/products/search/introducing-knowledge-graph-things-not/, semantic technologies experienced another wave of new applications in the context of searching information. Whereas search engines before mainly relied on keyword search and string-based matches of the keywords in the websites’ text, the knowledge graph enabled including semantics to capture the user’s information need as well as the meaning of potentially relevant documents. To achieve this purpose, Google’s knowledge graph integrates large amounts of machine-processable data available on the Web and uses this information not only to improve search results but also to display infoboxes for entities identified in the user’s keywords. It is only since 2012 that we have widely used the term “knowledge graph” to refer to semantic data, where entities are connected via relationships and form large graphs of interconnected information, typically with RDF as a common standard language. In recent years though (labeled) property graphs (LPG) have been used to manage knowledge graphs. We refer to the literature for a detailed comparison of RDF graphs and LPGs [78] and also like to point out that they can be converted into each other [23]. In this article, we consider knowledge graphs from the perspective of the Semantic Web, i. e., we consider RDF graphs.

A few years later, semantic technologies found another novel application in enterprise settings as enterprise knowledge graphs [55, 108] and data integration [33, 160]. Since all kinds of information can be structured as a graph, knowledge graphs can be used as a common structure to integrate heterogeneous information that is otherwise locked up in silos in different branches of a company. Integrating the data into a knowledge graph then allows for an integrated view, efficiently retrieving relevant information from this view once needed, and integrating external information that is available in the form of knowledge graphs, for instance on the Semantic Web. After all, online analytical processing-style queries can also be formulated with SPARQL444http://www.w3.org/TR/sparql11-query/ and evaluated on (distributed) knowledge graphs.

In the past few years, learning on graph data became one of the fastest growing and most active areas in machine learning [71]. Graph representation learning has created a new wave of graph embedding models and graph neural networks on knowledge graphs for tasks such as entity classification and link prediction. Natural Language Processing (NLP) has been another important field of the Semantic Web since the early years to extract knowledge from textual data and make it machine readable. Another field where NLP meets the Semantic Web is user interfaces for search on structured data to enable intuitive, natural language querying for graph data [69] similar to web search engines. At the end of 2022, ChatGPT555https://chat.openai.com/ emerged as the first publicly available end-consumer tool based on a Large Language Model (LLM). Since then, the GPT-based family of LLMs has stirred up research and business alike and demonstrated impressive performance on many NLP tasks, including generating structured queries from user prompts and extracting structured knowledge from text [145].

The capabilities of tools like Bing Chat666http://bing.com with underlying access to the World Wide Web are reminding one of the intelligent agents that were envisioned 20 years before. For example, at the time of writing, the GPT4-based tool Bing777https://www.bing.com/ can internally generate SPARQL queries and execute them, while the structured response is seamlessly embedded into its natural language outputs to the users.888Based on a sequence of prompts ran on January 22, 2024, using the GPT-4 model provided on the Bing Chat mobile app. The prompt sequence is: “do you have access to DBpedia”, “how do you access DBpedia”, “please give me an example where you access DBpedia in response”. While it already addresses some of the early visions of the Semantic Web, particularly the complex planning and reasoning capabilities of LLMs are – due to their nature of focusing on generating and processing text – still limited. We hypothesize that advances in neuro-symbolic AI and semantic technologies will be key for improving LLMs and bringing generative AI tools like Bing and the Semantic Web further together. We are keen to witness this next era of the Semantic Web.

In this paper, we provide a comprehensive overview of the Semantic Web with its semantic technologies and underlying principles that have been inspiring and driving the multiple waves of innovations in the past two decades. Section 2 provides a motivating example for the classic Semantic Web. We refer back to this example throughout the paper. Section 3 presents the principles and the general architecture of the Semantic Web along with the basic semantic technologies it is founded upon. Besides classical components, we are also describing recent developments, and pointing out components that are still being researched and developed. Section 4 shows how to represent distributed knowledge on the Semantic Web. The creation and maintenance of graph data is described in Section 5. Section 6 discusses the principle of reasoning and logical inference. Section 7 then shows how to query over the (distributed) graph data on the Semantic Web. We discuss the trustworthiness and provenance of data on the Semantic Web in Section 8. We provide extensive examples of applications based on and using the Semantic Web and its technologies in Section 9. Finally, we reflect on the impact the Semantic Web has on practitioners in Section 10. Finally, we conclude with a brief outlook on future developments for the Semantic Web.

2 Motivating Example

On the Semantic Web, knowledge components from different sources can be intelligently integrated with each other. As a result, complex questions can be answered, questions like “What types of music are played on British radio stations? At which time and day of the week?” or “Which radio station plays songs by Swedish artists?” In this section, we provide an overview of how the Semantic Web can be employed to answer those questions. We provide details of the components of the Semantic Web in the following sections.

We consider the example of the BBC program ontology with links to various other ontologies such as for music, events, and social networks as shown in Figure 1. We start with the BBC playlists of its radio stations. The playlists are published online in Semantic Web formats. We can leverage the playlist to get unique identifiers of played artists and bands. For example, the music group “ABBA” has a unique identifier in the form of a URI (https://www.bbc.co.uk/programmes/b03lyzpr). This URI can be used to link the music group to information from the MusicBrainz999http://musicbrainz.org/ music portal. MusicBrainz knows the members of the band, such as Benny Andersson, as well as the genre and songs. In addition, MusicBrainz is linked to Wikipedia101010https://www.wikipedia.org/ (not shown in the figure), e. g., to provide information about artists, such as biographies on DBpedia [11]. Information about British radio stations can be found in the form of lists on Web pages such as Radio UK111111https://www.radio-uk.co.uk, which can also be converted into a representation in the Semantic Web.

We can see that the required information is distributed across multiple knowledge components, e. g., BBC Program, MusicBrainz, and others. Each knowledge component can in principle provide different access to the data and utilize various ways to describe the data. Consequently, to answer the questions the data must be integrated. On the Semantic Web, data integration relies on ontologies describing data and the meaning of relations in data.

Colloquially, an ontology is a description of concepts and their relationships. Ontologies are used to formally represent knowledge on the Semantic Web.121212An ontology definition is provided in Section 4.2. For example, Dublin Core131313http://dublincore.org/documents/dc-rdf/ provides a metadata schema for describing common properties of objects, such as the creator of the information, type, date, title, usage rights, and so on. Figure 1 presents ontologies used to describe data in our example. For example, the Playcount ontology141414http://dbtune.org/bbc/playcount/ of the BBC is used to model which artist was played and how many times in the programs. Ontologies can be interconnected in the Semantic Web. For example, the MusicBrainz ontology is connected to the BBC ontology using the Playcount ontology. Different ontologies with varying degrees of formality and different relationships to each other are used by the BBC to describe their data (see also [122]).

As all this data is available and interconnected by ontologies, a user of the Semantic Web can directly ask for answers to questions in this and other scenarios. To make this possible, the Semantic Web requires generic software components, languages, and protocols that can interact seamlessly with each other. We introduce the classical and modern components of the architecture of the Semantic Web in Section 3.

In addition to the above example, the Semantic Web can be used for a variety of other applications (see examples in Section 9). Apart from technical aspects, the Semantic Web should also be understood as a socio-political phenomenon. Similar to the World Wide Web, various individuals and organizations publish their data on the Semantic Web and collaborate to link and improve this data. This impact on practitioners is discussed in Section 10.

3 Architecture of the Semantic Web

The example in Section 2 describes what the Semantic Web is as an infrastructure, but not how this is achieved. In fact, the capabilities of the Semantic Web in a small scale have already been implemented by some knowledge-based systems originating from artificial intelligence research, e. g., Heinsohn et al [76]. However, for the implementation of the vision on a large scale, i. e, the Web, these knowledge-based systems lacked flexibility, robustness, and scalability. In part, this was due to the complexity of the algorithms used. For example, knowledge bases in description logic in the 1990s, which serve as the basis of Web ontologies, were limited regarding their size such that they could handle at the most some hundred concepts [76].

In the meantime, enormous improvements have been achieved. Greatly increased computational power and optimized algorithms allow a practical handling of large ontologies like Simple Knowledge Organization System (SKOS)151515https://www.w3.org/TR/skos-reference/, Gene Ontology161616https://geneontology.org/, Schema.org, and SNOMED-CT171717https://www.snomed.org/. However, there are some fundamental differences between traditional knowledge-based systems and the Semantic Web. Data management in traditional knowledge-based systems has weaknesses in terms of handling large amounts of data and data sources, among other things because of different underlying formalisms, distributed locations, different authorities, different data quality, and a high frequency of change in the data used.

The Semantic Web applies fundamental principles to deal with these problems; they represent the basis for the architecture of the Semantic Web. This architecture’s building blocks can roughly be categorized into groups, covering the entire life cycle of handling and managing graph data on the Web. These groups are graph data representation, creation and validation of graph data, reasoning over and linking of graph data, (distributed) querying of graph data, crypto, provenance, and trustworthiness of graph data, and user interfaces and applications.

Below, we first introduce the principles of the Semantic Web, from which we derive the architecture and its main components. Subsequently, we describe the groups of the architecture. The principles of the Semantic Web are:

-

1.

Explicit and simple data representation: A general data representation abstracts from the underlying formats and captures only the essentials.

-

2.

Distributed systems: A distributed system operates on a large set of data sources without centralized control that regulates which information belongs where and to whom.

-

3.

Cross-references: The advantages of a network of data in answering queries are not based solely on the sheer quantities of data but on their interconnection, which allows reusing data and data definitions from other sources.

-

4.

Loose coupling with common language constructs: The World Wide Web and likewise the Semantic Web are mega-systems, i. e., systems consisting of many subsystems, which are themselves large and complex. In such a mega-system, individual components must be loosely coupled in order to achieve the greatest possible flexibility. Communication between the components is based on standardized protocols and languages, whereby these can be individually adapted to specific systems.

-

5.

Easy publishing and easy consumption: Especially in a mega-system, participation, i. e., publishing and consumption of data, must be as simple as possible.

These principles are achieved through a mix of protocols, language definitions, and software components. Some of these components have already been standardized by the W3C, which has defined both syntax and formal semantics of languages and protocols. Other components are not yet standardized, but they are already provided for the so-called Semantic Web Layer Cake by Tim Berners-Lee (cf. http://www.w3.org/2007/03/layerCake.png). We present a variant of the Semantic Web architecture, distinguishing between standardized languages and current developments. A graphical representation of the architecture can be found in Figure 2.

Identifier for Resources: HTTP, URL, DID

Entities (also called resources) are identified on the Internet by so-called Uniform Resource Identifiers (URIs) [17]. When a URI holds a dereferenceable location of the resource, in other words, it can be employed to get access to the resource via HTTP, it is called a Uniform Resource Locator (URL) [20, 18]. Furthermore, Internationalized Resource Identifiers (IRIs) [45] supplement URIs with international character sets from Unicode/ISO10646. URIs are globally and universally used but are usually not under our control. A recent W3C recommendation, the Decentralized Identifiers (DIDs), introduces an alternative approach to the above identifiers [137]. A DID is by default decentralized and allows for self-sovereign management of the identity, i. e., the control of a DID and its associated data is with the users.

In our example in Section 2, a URI 181818http://www.bbc.co.uk/music/artists/2f031686-3f01-4f33-a4fc-fb3944532efa#artist describes the musician Benny Andersson of the Swedish pop group ABBA. A user can dereference a URI that refers to ABBA, e. g., by performing a so-called look-up using HTTP to obtain a detailed description of the URI. We refer to the referenced standards for details.

For a detailed discussion of the role of dereferenceable URIs on the Semantic Web, we refer to the Linked Data principles described in Section 4.1.

Syntax for Data Exchange: XML, JSON-LD, RDFa

The Extensible Markup Language (XML)191919https://www.w3.org/TR/xml/ is used to structure documents and enables the specification and serialization of structured data. In addition, other data formats were introduced to facilitate for serialization of RDF data, often replacing XML. We can view those formats as forming two groups. The first group consists of formats designed specifically for RDF data, such as Turtle202020https://www.w3.org/TR/turtle/, N-triple212121https://www.w3.org/TR/n-triples/, and TRIG222222https://www.w3.org/TR/trig/. These are easier to view in a text editor, compared to XML, and thus easier to understand and modify. While initially not included in standards, their popularity has led to them being official W3C recommendations since 2014. The other group of formats is built by extending existing data formats. As a result, those can be employed to add RDF to existing systems. Examples of such formats are JSON-LD232323https://www.w3.org/TR/json-ld/, CSV on the Web (CSVW)242424https://www.w3.org/TR/tabular-data-primer/, and RDFa252525https://www.w3.org/TR/rdfa-primer/ extending JSON, CSV, and (X)HTML, respectively.

Graph Data Representation: RDF

In addition to the referencing of resources and a uniform syntax for the exchange of data, a data model is required that allows resources to be described both individually and in their entirety and how they are linked [73, 75]. An integrated representation of data from multiple sources is provided by a data model based on directed graphs [114]. The corresponding W3C standard language is RDF (Resource Description Framework)262626https://www.w3.org/RDF/.

An RDF graph consists of a set of RDF triples, where a triple consists of a subject, predicate (property), and object. An RDF graph can be given an identifier, such a graph is called a named graph [29]. RDF graphs can be serialized in several ways (see Syntax for Data Exchange above). It is important to note that some formats (e. g., Turtle) do not support named graphs. Finally, RDF-star (aka RDF*)272727https://w3c.github.io/rdf-star/,282828https://blog.liu.se/olafhartig/2019/01/10/position-statement-rdf-star-and-sparql-star/ was introduced to allow nesting of triples and thus enables an efficient way to make statements about statements while avoiding reification and the increased number of triples and complexity that come along with it.

The representation of graph data is further discussed in Section 4.

Creation and Validation of Graph Data: RIF, SWRL, [R2]RML, and SHACL

In the RDF context, a rule is a logical statement employed to infer new facts from existing graph data or to validate the data itself. RIF (Rule Interchange Format)292929http://www.w3.org/2005/rules/wiki/RIF_Working_Group is a W3C recommendation format designed to facilitate the seamless interchange of rules between different rule engines. This enables the extraction of rules from one engine, their translation into RIF, publication, and subsequent conversion into the native syntax of another rule engine for execution. SWRL303030https://www.w3.org/submissions/SWRL/ is a rule-based language designed for representing complex relationships and reasoning.

Rules can also be used to state the correspondence between data sources and RDF graphs. The RDB to RDF Mapping Language (R2RML)313131https://www.w3.org/TR/r2rml/ and the RDF Mapping Language (RML)323232https://rml.io/specs/rml/ correspond to rule-based mapping languages for the declarative definition of RDF graphs. R2RML is the W3C recommendation for representing mappings from relational databases to RDF datasets, while RML extends R2RML to express rules not only from relational databases but also from data in the format of CSV, JSON, or XML.

Validating constraints, representing syntactic and semantic restrictions in RDF graphs, is essential for ensuring data quality. In addition to rule-based languages, shapes allow for the specification of conditions to meet data quality criteria and integrity constraints. A shape encompasses a conjunction of constraints representing conditions that nodes in an RDF graph must satisfy [79]. A shapes graph is a labeled directed graph where nodes correspond to shapes, and edges denote interrelated constraints. The Shapes Constraint Language (SHACL) [95] and Shape Expressions (ShEx) [117]) are two W3C-recommendations to express shapes graphs over RDF [119].

The creation and validation of graph data are described in detail in Section 5.

Reasoning and Linking of Graph Data: RDFS, OWL

Data from different sources may be heterogeneous. In order to deal with this heterogeneity and to model the semantic relationships between resources, the RDF Schema (RDFS)333333https://www.w3.org/TR/rdf11-schema/ vocabulary extends RDF by modeling types of resources (so-called RDF classes) and semantic relationships on types and properties in the form of generalizations and specializations. Likewise, it can be used to model the domain and range of properties.

RDFS is not expressive enough to merge data from different sources and define consistency criteria about it, such as the disjointness of classes or the equivalence of resources. The Web Ontology Language (OWL)343434https://www.w3.org/OWL/ [155] is an ontology language with formally defined meaning based on description logic. This allows for reasoning services to be provided by knowledge-based systems for OWL ontologies. OWL can be exchanged using RDF data formats. Compared to RDFS, OWL provides more expressive language constructs. For example, OWL allows the specification of equivalences between classes and cardinality constraints on properties [155].

Reasoning over RDF graphs, incorporating RDFS and OWL models enhances semantic expressiveness and inferential capabilities. This involves making implicit information explicit, inferring new triples, and validating the RDF graph’s consistency against defined ontological constraints. RDFS provides basic entailment regimens, creating hierarchies and simple inferencing via sub-class and sub-property relationships. In contrast, OWL introduces advanced constructs such as property characteristics (e. g., functional, inverse, symmetric properties), cardinalities, and disjointness axioms, enabling more expressive and complex modeling. Integrating RDFS and OWL reasoning mechanisms empowers applications to derive insights, discover implicit knowledge, and ensure adherence to specified ontological constraints within RDF-based knowledge representations.

Graph data aggregated from many data sources, such as in our example in Section 2, may contain many different identities. But those identities may represent the same set of real-world objects. Integration and linkage mechanisms allow references to be made between data from different sources. A popular approach to state the identity of two resources and is the owl:sameAs feature of OWL.

We discuss the reasoning over and linking of graph data in Section 6.

Querying of Graph Data: SPARQL

Since RDF makes it possible to integrate data from different sources, a query language is needed that allows formulating queries over individual RDF graphs as well as over the combination of multiple RDF graphs across multiple sources. SPARQL353535http://www.w3.org/TR/rdf-sparql-query/ (a recursive acronym for SPARQL Protocol and RDF Query Language) is a declarative query language for RDF graphs that enables us to formulate such queries. SPARQL 1.1363636http://www.w3.org/TR/sparql11-query/ is the current version of SPARQL, which includes the capability to formulate federated queries over distributed data sources.

The basic building blocks of a SPARQL query are triple and graph patterns. A triple pattern corresponds to an RDF triple but where one, two, or all three of its components are replaced by variables (denoted with a leading “?”). These triple patterns with variables are to be matched in the queried graph. Multiple triple patterns can be combined into more complex graph patterns describing the connections between multiple nodes in the graph. The solution to such a SPARQL query then corresponds to all the subgraphs in an RDF graph matching this pattern.

Finally, there is RDF-star (aka RDF*)373737https://w3c.github.io/rdf-star/,383838https://blog.liu.se/olafhartig/2019/01/10/position-statement-rdf-star-and-sparql-star/ – along with the corresponding SPARQL-star/ SPARQL* extension – was proposed and since then was implemented by several triple stores [2] that often provide publicly accessible SPARQL endpoints. The key idea with RDF/SPARQL-star is to allow the nesting of triples to enable an efficient way to allow statements about statements while avoiding reification and the increased number of triples and complexity that come along with it.

We describe SPARQL and federated querying in Section 7.

Crypto, Provenance, and Trustworthiness of Graph Data

Other aspects of the Semantic Web are encryption and authentication to ensure that data transmissions cannot be intercepted, read, or modified. Crypto modules, such as SSL (Secure Socket Layer), verify digital certificates and enable data protection and authentication. In addition, there are digital signatures for graphs that integrate seamlessly into the architecture of the Semantic Web and are themselves modeled as graphs again [93]. This allows graph signatures to be applied iteratively and enables to building trust networks. The Verifiable Credentials Data Model, a recent W3C recommendation, introduces a standard to model trustworthy credentials for graphs on the web393939https://www.w3.org/TR/vc-data-model/. Data on the Semantic Web can be augmented with additional information about its trustworthiness and provenance.

Aspects of trustworthiness and provenance of graph data as well as crypto are discussed in Section 8.

User Interfaces and Applications

A user interface enables users to interact with data on the Semantic Web. From a functional perspective, some user interfaces are generic and operate on the graph structure of the data, whereas others are tailored to specific tasks, applications, or ontologies. New paradigms are exploring the spectrum of possible user interfaces between generality and specific end-user requirements.

4 Representation of Graph Data

The Linked Open Data principles are notably the most successful and widely adopted choice for representing RDF graph data on the web. Thus, we first introduce the reader to how to represent graph data as Linked Data. Subsequently, we introduce the notion of ontologies. This is followed by a more detailed analysis of the different types of ontologies. We give examples of ontologies throughout the sections. With this background in mind, we reconsider our running example from Section 2 and analyze the given distributed network of ontologies. In this context, we also introduce and discuss the notion of ontology design patterns.

4.1 Linked Graph Data on the Web

The Linked Data principles404040http://www.w3.org/DesignIssues/LinkedData.html define the methods for representing, publishing, and using data on the Semantic Web. They can be summarized as follows:

-

1.

URIs are used as names for entities.

-

2.

The HTTP protocol’s GET method is used to retrieve descriptions for a URI.

-

3.

Data providers shall return relevant information in response to HTTP GET requests on URIs using standards, e. g., in RDF.

-

4.

Links to other URIs shall be used to facilitate knowledge discovery and use of additional information.

Publishing data using Linked Data principles allows easy access to data via HTTP. This allows exploration of resources and navigation across resources on the Semantic Web. URIs (see 1.) are dereferenced using HTTP requests (2.) to obtain additional information about a given resource. In particular, using standardized syntax (3.), this information may also contain links to other resources (4.).

Figure 3 represents an example of Linked Data about the pop group ABBA. The example describes several relationships linking entities to ABBA’s URI, such as foaf:member and rdf:type. In the figure “ABBA”, or more precisely the URI of ABBA, is the subject, “Property” refers to relationships, and “Value” represents objects of the RDF triples. The relation owl:sameAs will be explained in more in Section 6. The prefixes foaf, rdf, and owl refer to vocabularies of the FOAF ontology414141http://xmlns.com/foaf/spec/, and the W3C language specifications of RDF and OWL, respectively.

4.2 Ontologies

An ontology is commonly defined as a formal, machine-readable representation of key concepts and relationships within a specific domain [111, 109]. In essence, ontologies capture a shared perspective [111] that is, the formal conceptualization of ontologies expresses a consensus view among different stakeholders. Visualizing ontologies is akin to viewing a spectrum, with a specificity of concepts, their relationships, and the granularity of meaning representation varying along this continuum [101, 149, 148]. A controlled vocabulary corresponds to the less expressive form of ontology, comprising a restrictive list of words or terms used for labeling, indexing, or categorization. The Clinical Data Interchange Standards Consortium (CDISC) Terminology is an exemplary vocabulary that harmonizes definitions in clinical research.424242https://datascience.cancer.gov/resources/cancer-vocabulary/cdisc-terminology A thesaurus is located next in the spectrum; they enhance controlled vocabularies with information about terms and their synonyms and broader/narrower relationships. The Unified Medical Language System (UMLS) integrates medical terms and their synonyms.434343https://www.nlm.nih.gov/research/umls/index.html Next, taxonomies are built over controlled vocabularies to provide a hierarchical structure, e. g., parent/child relationship. SNOMED-CT444444https://www.snomed.org/value-of-snomedct (Systematized Nomenclature of Medicine Clinical Terms) provides a terminology and coding system used in healthcare and medical fields; medical concepts organized in a hierarchical structure enabling a granular representation of clinical information. The Simple Knowledge Organization System (SKOS)454545https://www.w3.org/TR/skos-reference/ is a W3C standard to describe knowledge about organizational systems. Lastly, ontologies are at the highest extreme of the spectrum, integrating sets of concepts with attributes and relationships to define a domain of knowledge.

Note, SKOS is a popular standard for modeling domain-specific taxonomies in the different scientific communities such as economics, social sciences, etc. to represent concepts and their relationships, most importantly narrower, broader, and related. However, it does not have the expressiveness of OWL with its complex expressions on classes and relations. For a detailed discussion, we refer to the literature such as [89] and the W3C on using OWL and SKOS464646https://www.w3.org/2006/07/SWD/SKOS/skos-and-owl/master.html.

4.3 Types and Examples of Ontologies

A network of ontologies, such as the example shown in Figure 1, may consist of a variety of ontologies created by different actors and communities. Ontologies may be the result of a transformation or reengineering activity of a legacy system, such as a relational database or existing taxonomy such as the Dewey Decimal Classification474747http://dewey.info/ or Dublin Core. Other ontologies are created from scratch. This involves applying existing methods and tools for ontology engineering and choosing an appropriate representation language for the ontology (see Section 6).

Ontology engineering deals with the methods for creating ontologies [65] and has its origins in software engineering in the creation of domain models and in database design in the creation of conceptual models. A good overview of ontology engineering can be found in several reference books [65]. Ontologies vary greatly in their structure, size, development methods applied, and application domains considered. Complex ontologies are also distinguished in terms of their purpose and granularity.

Domain Ontologies represent knowledge specific to a particular domain [48, 109]. Domain ontologies are used as external sources of background knowledge [48]. They can be built on foundational ontologies [110] or core ontologies [131], which provide precise structuring to the domain ontology and thus improve interoperability between different domain ontologies. Domain ontologies can be simple such as the FOAF ontology or the event ontology mentioned above, or very complex and extensive, having been developed by domain experts, such as the SNOMED medical ontology.

Core Ontologies represent a precise definition of structured knowledge in a particular domain spanning multiple application domains [131, 109]. Examples of core ontologies include core ontologies for software components and web services [109], for events and event relationships [129], or for multimedia metadata [126]. Core ontologies should thereby build on foundational ontologies to benefit from their formalization and strong axiomatization [131]. For this purpose, new concepts and relations are added to core ontologies for the application domain under consideration and are specialized by foundational ontologies.

Foundational Ontologies have a very wide scope and can be reused in a wide variety of modeling scenarios [24]. They are therefore used for reference purposes [109] and aim to model the most general and generic concepts and relations that can be used to describe almost any aspect of our world [24, 109], such as objects and events. An example is the Descriptive Ontology for Linguistic and Cognitive Engineering (DOLCE) [24]. Such basic ontologies have a rich axiomatization that is important at the developmental stage of ontologies. They help ontology engineers to have a formal and internally consistent conceptualization of the world, which can be modeled and checked for consistency. For the use of foundational ontologies in a concrete application, i. e., during the runtime of an application, the rich axiomatization can often be removed and replaced by a more lightweight version of the foundational ontology.

In contrast, domain ontologies are built specifically to allow automatic reasoning at runtime. Therefore, when designing and developing ontologies, completeness and complexity on the one hand must always be balanced with the efficiency of reasoning mechanisms on the other. In order to represent structured knowledge, such as the scenario depicted in Figure 1, interconnected ontologies are needed, which are spanned in a network over the Internet. For this purpose, the ontologies used must match and be aligned with each other.

4.4 Distributed Network of Ontologies and Ontology Patterns

A network of ontologies must be flexible with respect to the functional requirements imposed on it. This is because systems are modified, extended, combined, or integrated over time. In addition, the networked ontologies must lead to a common understanding of the modeled domain. This common understanding can be achieved through a sufficient level of formalization and axiomatization, and through the use of ontology patterns. An ontology pattern, similar to a design pattern in software engineering, represents a generic solution to a recurring modeling problem [131]. Ontology patterns allow to select parts from the original ontology. Either all or only certain patterns of an ontology can be reused in the network. Thus, to create a network of ontologies, e. g., existing ontologies and ontology patterns can be merged on the Web. The ontology engineer can drive or explicitly provide for the modularization of ontologies using ontology patterns. Core ontologies represent one approach to designing a network of ontologies (see in detail [131]). They allow to capture and exchange structured knowledge in complex domains. Well-defined core ontologies fulfill the properties mentioned in the previous section and allow easy integration and smooth interaction of ontologies (see also [131]). The networked ontologies approach leads to a flat structure, as shown in Figure 1, where all ontologies are used on the same level. Such structures can be managed up to a certain level of complexity.

The approach of networked core ontologies is illustrated by the example of ontology layers starting from foundational to core to domain ontologies. As shown in Figure 4, DOLCE is the foundational ontology at the bottom layer, the Multimedia Metadata Ontology (M3O) [126] as the core ontology for multimedia metadata, and an extension of M3O for the music domain. Core ontologies are typically defined in description logic and cover a field larger than the specific application domain requires [57]. Concrete information systems will typically use only a subset of core ontologies. To achieve modularization of core ontologies, they should be designed using ontology patterns. By precisely matching the concepts in the core ontology with the concepts provided in the foundational ontology, they provide a solid foundation for future extensions. New patterns can be added and existing patterns can be extended by specializing the concepts and roles. Figure 4 shows different patterns of the M3O and DOLCE ontologies.

Ideally, the ontology patterns of the core ontologies are reused in the domain ontologies [57], as shown in Figure 4. However, since it cannot be assumed that all domain ontologies are aligned with a foundational or core ontology, the option that domain ontologies are developed and maintained independently must also be considered. In this case, domain knowledge can be reused in core ontologies by applying the Descriptions and Situations (DnS) ontology pattern of the foundational ontology DOLCE. The DnS ontology pattern is an ontological formalization of context [109] by defining different views using roles. These roles can refer to domain ontologies and allow a clear separation of the structured knowledge of the core ontology and domain-specific knowledge. To model a network of ontologies, such as the example described above, the Web Ontology Language (OWL) and its ability to axiomatize using description logic [12] is used. In addition to being used to model a distributed knowledge representation and integration, OWL, is also used in particular to derive inferences from this knowledge, which is described in Section 6.

5 Creation and Validation of Graph Data

In this section, we describe the creation of graph data from legacy data. Many tools are available for this task, which support various mappings and transformations. Subsequently, we discuss data quality and the validation of knowledge graphs, including the recent approaches on shapes. We also reflect on the role of the open-world versus closed-world assumption with respect to validating data.

5.1 Graph Data Creation

Graph data can be created by transforming legacy data via a data integration system [98], which consists of a unified schema, data sources, and mapping rules. These mapping rules define the concepts within the schema and establish links to the data sources. By employing declarative definitions, knowledge graph creation promotes modularity and reusability. This approach allows users to trace the entire graph creation process, leading to improved transparency and ease of maintenance.

To enable comprehensive and extensive graph specification, mappings and transformations have been developed to convert data from various storage models into Semantic Web data models like RDF. These mappings and transformations facilitate the mapping of data into RDF, thereby supporting the integration of diverse data sources into the Semantic Web.

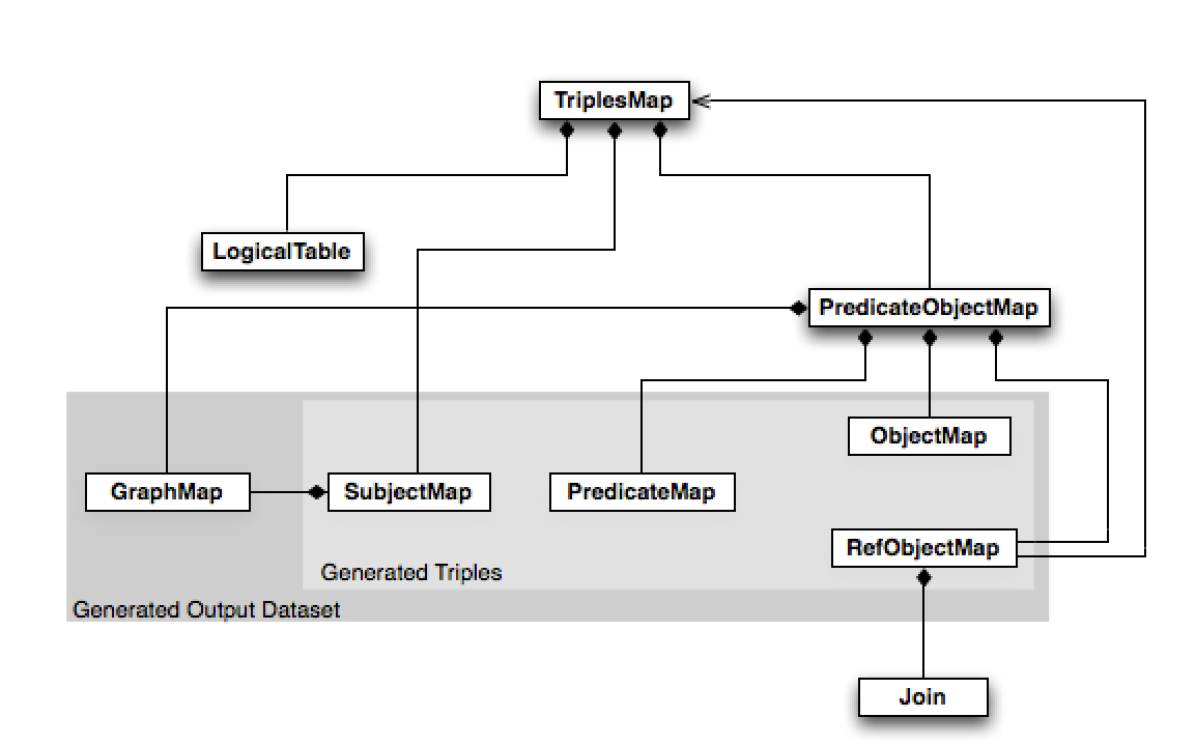

The mapping language R2RML [37] defines mapping rules from relational databases (relational data models) to RDF graphs. These mappings themselves are also RDF triples [15]. Because of its compact representation, Turtle is considered a user-friendly notation of RDF graphs. The structure of R2RML is illustrated in Figure 5; essentially, table contents are mapped to triples by the classes SubjectMap, PredicateMap, and ObjectMap. If the object is a reference to another table, this reference is called RefObjectMap. Here, SubjectMap contains primary key attributes of the corresponding table. Thus, there exists a mapping rule representable in RDF graphs by means of which tables of relational databases can be represented as RDF graphs.

The RDF Mapping Language (RML)[42] extends R2RML to encompass the definition of logical sources in various formats, including CSV, JSON, XML, and HTML. This enhancement enables RML to introduce new operators that facilitate the integration of data from diverse sources into the Semantic Web. Thus, instead of LogicalTable, RML includes the tag LogicalSource, to allow for the retrieval of data in several formats. Additionally, RML resorts to W3C-standardized vocabularies and enables the definition of retrieval procedures to collect data from Web APIs or databases. R2RML and RDF mapping rules are expressed in RDF, and their graphs document how classes and properties in one or various ontologies that are part of an RDF graph are populated from data collected from potentially heterogeneous data sources.

Over time, the Semantic Web community has actively contributed to addressing the challenge of integrating heterogeneous datasets, resulting in the development of several frameworks for executing declarative mapping rules [34, 28, 116]. A rich spectrum of tools (e. g., RMLMapper [42], RocketRML [136], CARML484848https://github.com/carml/carml, SDM-RDFizer [87], Morph-KGC [8], and RMLStreamer [112]) offers the possibility of executing R2RML and RML rules and efficiently materializing the transformed data into RDF graphs. Van Assche et al. [10] provided an extensive survey detailing the main characteristics of these engines. Despite significant efforts in developing these solutions, certain parameters can impact the performance of the graph creation process [31]. Existing engines may face challenges when handling complex mapping rules or large data sources. Nonetheless, the community continues to collaborate and address these issues. An example of such collaboration is the Knowledge Graph Construction Workshop 2023 Challenge494949https://zenodo.org/record/7689310 that took place at ESWC 2023. This community event aims to understand the strengths and weaknesses of existing approaches and devise effective methods to overcome existing limitations.

RDF graphs can also be dynamically created through the execution of queries over data sources. These queries involve the rewriting of queries expressed in terms of an ontology, based on mapping rules that establish correspondences between data sources and the ontology. Tools such as Ontop [28], Ultrawrap [135], Morph [116], Squerall [100], and Morph-CSV [32] exemplify systems that facilitate the virtual creation of RDF graphs.

5.2 Quality and Validation of Graph Data

Quality and validation of the graph data are crucial to maintaining the integrity of the Semantic Web [44, 38, 163]. The evaluation of integrity constraints allows for the identification of inconsistencies, inaccuracies, or contradictions within the data. They also help maintain consistency by ensuring related data elements remain coherent. Constraints are logical statements – expressed in a particular language – that impose restrictions on the values taken for target nodes in a given property.

Constraints can be expressed using OWL [144], SPARQL queries [97], or using shapes. However, the interpretation of the results depends on the semantics followed to interpret the failure of an integrity constraint. For example, constraints expressed in OWL are validated using an Open-World Assumption (OWA) (i. e., a statement cannot be inferred to be false based on failures to prove it) and under the absence of the Unique Name Assumption (UNA) (i. e., two different names may refer to the same object). These two features make it difficult to validate data in applications where data is supposed to be complete. Definitions of integrity constraint semantics in OWL using the Closed-World Assumption [103, 104, 144] overcome these issues.

Contrarily, constraints expressed using SPARQL queries or shapes will be evaluated under the Closed-World Assumption (CWA) and following the Unique Name Assumption (UNA). Nevertheless, some constraints may be difficult to express in SPARQL, and the specification process is prone to errors and difficult to maintain.

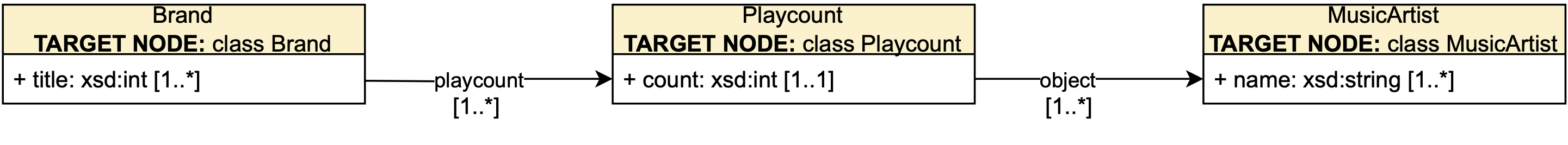

Data quality conditions and integrity constraints can also be expressed as graphs of shapes or the so-called shapes schema. A shape corresponds to a conjunction of constraints that a set of nodes in an RDF graph must satisfy [79]. These constraints can restrict the types of nodes, the cardinality of certain properties, and the expected data types or values for specific properties. A shape can target the instances of a class, the domain or range of a property, or a specific node in the RDF graph. A shape or node in a shapes graph is validated in an RDF graph, if and only if, all the target nodes in the RDF graph satisfy all the constraints in the shape. Figure 6 presents a shapes graph of three shapes targeting the classes Brand, Playcount, and MusicArtist. Each of the shapes comprises one constraint. In the shapes Brand and MusicArtist the properties title and name can take more than one value, while the shape Playcount states that each instance of the class Playcount must have exactly one value of the property count. Additionally, the instances of the class Brand must be related to valid instances of the class Playcount which should also be related to valid instances of the class MusicArtist.

There are two standards for defining shapes, ShEx (Shape Expressions) [117]) and SHACL (Shapes Constraint Language) [95]). Both define shapes over the attributes (i. e., owl:DatatypeProperties), and constraints on incoming/outgoing arcs, cardinalities, RDF syntax, and extension mechanism. These inter-class constraints induce a shape network used to validate the integrity and data quality properties of an RDF graph.

SHACL and ShEx, although sharing a common goal, adopt distinct approaches. ShEx seeks to offer a language serving as a grammar or schema for RDF graphs, delineating RDF graph structures for validation. On the other hand, SHACL is positioned as the W3C recommendation for validating RDF graphs against a conjunction of constraints, emphasizing a constraint language for RDF. Despite their analogous roles in specifying shapes and constraints for RDF data, ShEx and SHACL differ in syntax, expressiveness, and community adoption [59].

The evaluation results of a SHACL shape network over an RDF graph are presented in validation reports using a controlled vocabulary. A validation report includes explanations about the violations, the severity of the violation, and a message describing the violation. SHACL is the language selected by the International Data Space (IDS) to express the restrictions that state the integrity over RDF graphs [96]. Besides the integrity validation of an RDF graph, SHACL can be utilized to describe data sources and the certification of a query answer [124], as metadata to enhance the performance of a SPARQL query engine [118], to certify access policies [125], and to provide provenance as a result of the validation of integrity constraints [40].

In the context of a quality assessment pipeline, one crucial step involves validating the shape schema against a graph. It is important to mention that the validation of recursive shape schemas is not explicitly addressed in the SHACL specification [95]. To address this gap, Corman et al. [36] introduce a semantic framework for validating recursive SHACL. They also demonstrated that validating full SHACL features is an NP-hard problem. Building on these insights, they proposed specific fragments of SHACL that are computationally tractable, along with a fundamental algorithm for validating shape schemas using SPARQL [35]. In a related vein, Andresel et al. [7] propose a stricter semantics for recursive SHACL, drawing inspiration from stable models employed in Answer Set Programming (ASP). This innovative approach enables the representation of SHACL constraints as logic programs and leverages existing ASP solvers for shape schema validation. Importantly, this approach allows for the inclusion of negations in recursive validations. Further, Figuera et al. [51] present Trav-SHACL, an approach that focuses on query optimization techniques aimed at enhancing the incremental behavior and scalability of shape schema validation.

While SHACL has been adopted in a broad range of use cases, given a large graph it remains a challenge how to define shapes efficiently [119]. In many industrial settings with billions of entities and facts [108] creating shapes manually simply is not an option. The current state of the art can automatically extract shapes on WikiData (ca. 2 Billion facts) in less than 1.5 hours while filtering shapes based on the well-established notions of support and confidence to avoid reporting thousands of shapes that are so rare or apply to such a small subset of the data that they become meaningless [120]. Still, more work is needed to increase scalability further and also to help users make good use of the mined shapes [121] and, e. g., interactively use them to correct and improve the quality of their graphs.

6 Reasoning over and Linking of Graph Data

Section 3 introduced several formal languages for knowledge representation on the Semantic Web. RDF allows the description of simple facts (statements with subject, predicate, and object, so-called RDF triples), e. g., “Anni-Frid Lyngstad” “is a member of” “ABBA”. RDFS allows the definition of types of entities (classes), relationships between classes, and a subclass and superclass hierarchy between types (analogously for relations). OWL is even more expressive than RDF and RDFS. For example, OWL allows the definition of disjoint classes or the description of classes in terms of intersection, union, and complement of other classes.

Below, we first introduce the reasoning over RDFS and OWL at the example of our BBC scenario from Section 2. Subsequently, we discuss works on linking data objects and concepts.

6.1 Reasoning over Graph Data

Based on formal languages representing graph data and their semantics, further (implicit) facts can be derived from the knowledge base by deductive inference. In the following, we exemplify the derivation of implicit facts from a set of explicitly given facts using the RDFS construct rdfs:subClassOf and the OWL construct owl:sameAs. The property rdfs:subClassOf describes hierarchical relationships between classes and with owl:sameAs two resources can be defined as identical.

As a first example, we consider the class foaf:Person, which is defined in the FOAF ontology, and the classes mo:Musician and mo:Group, which are defined in the music ontology. In the music ontology, there is an additional axiom that defines mo:Musician as a subclass of foaf:Person using rdfs:subClassOf. Given this axiom, it can be deduced by deductive inference that instances of mo:Musician are also instances of foaf:Person. Now if there is such a hierarchy of classes and in addition a statement that Anni-Frid Lyngstad is of type mo:Musician, then it can be inferred by inference that Anni-Frid Lyngstad is also of type foaf:Person. This means that all queries asking for entities of type foaf:Person will also include Anni-Frid Lyngstad in the query result, even if that entity is not explicitly defined as an instance of foaf:Person. Figure 7 represents these facts and the corresponding class hierarchy in RDFS as a directed graph.

In the second example, the OWL construct owl:sameAs is used to define two resources as identical, for example http://www.bbc.co.uk/music/artists/d87e52c5-bb8d-4da8-b941-9f4928627dc8#artist and http://dbpedia.org/resource/ABBA. Identical here means that these two URIs represent the same real-world object. By inference, information about ABBA from different sources can now be linked. Since ontologies are created independently on the web, and URIs are subject to local naming conventions, a real-world object may be represented by multiple URIs (in different ontologies).

OWL offers a variety of other constructs for the description of classes, relationships, and concrete facts. For example, OWL allows the declaration of transitive relations and inverse relations. For example, the relation “is-member” is inverse to “has-member”. OWL reasoning allows, among other things, consistency checking of an ontology or checking the satisfiability of classes [80]. A class is satisfiable if there can be instances of that class.

For a detailed discussion about OWL reasoning, we refer to the literature such as [80, 13]. Different reasoners for OWL have seen widespread adoption in the community such as the well-known Pellet505050https://github.com/stardog-union/pellet and Hermit [62]. Finally, a combination of description logic and rules is also possible. For example, Motik et al. [105] presented a combination of description logic and rules that allows tractable inference on OWL ontologies.

6.2 Linking of Objects and Concepts

In the Semantic Web, it cannot be assumed that two URIs refer to two different real-world objects (cf. unique name assumption in Section 5.2). A URI by itself, or in itself, has no identity [70]. Rather, the identity or interpretation of a URI is revealed by the context in which it is used on the Semantic Web. Determining whether or not two URIs refer to the same entity is not a simple task and has been studied extensively in data mining and language understanding in the past. For example, to identify whether or not the author names of research papers refer to the same person, it is often not sufficient to resolve the name, venue, title, and co-authors [90]. The process of determining the identity of a resource is often referred to as entity resolution [90], coreference resolution [156], object identification [123], and normalization [156, 157]. Correctly determining the identity of entities on the Web is important as more and more records appear on the Web and this presents a significant hurdle for very large Semantic Web applications [61].

To address this, a number of services exist that can recognize entities and determine their identity: Thomson Reuters offers OpenCalais515151https://www.refinitiv.com/en/products/intelligent-tagging-text-analytics, a service that can link natural language text to other resources using entity recognition. Another commercial tool that allows for extracting knowledge graphs from text is provided by DiffBot.525252https://www.diffbot.com/ Recently, the language model ChatGPT has been compared to the specialized entity and relation extraction tool REBEL [27] for the task of creating knowledge graphs from sustainability-related text [145]. The experiments suggest that large language models improve the accuracy of creating knowledge graphs [145]. The sameAs535353http://sameas.org/ service aims to detect duplicate resources on the Semantic Web using the OWL relationship owl:sameAs. This can be used to resolve coreferences between different datasets. For example, for the query with the URI http://dbpedia.org/resource/ABBA, a list of over 100 URIs is returned that also references the music group ABBA. One of them is BBC with the resource http://www.bbc.co.uk/music/artists/d87e52c5-bb8d-4da8-b941-9f4928627dc8#artist.

Furthermore, the problem of schema matching [157] is very related to the problem of entity resolution, co-reference resolution, and normalization. The goal of schema matching is to address the question of how to integrate data [157], which is non-trivial even for small schemas. In the Semantic Web, schema matching means the matching of different ontologies, respectively the concepts defined in these ontologies. Various (semi-)automatic or machine learning techniques for matching ontologies have been developed in the past [49, 46, 22]. Core ontologies as illustrated in Figure 4.3 represent generic modeling frameworks for integration and alignment with other ontologies. In addition, core ontologies can also integrate Linked Open Data, which typically contains no or very little schema information. The YAGO ontology [140] was generated from the fusion of Wikipedia and Wordnet using rule-based and heuristic methods. A manual assessment showed an accuracy of 95%.

Manual matching of different data sources is also pursued in the Linked Open Data project of the German National Library545454http://www.d-nb.de/. For example, the database containing the authors of all documents published in Germany was manually linked with DBpedia and other data sources. A particular challenge was to identify the authors, as described above. For example, former German Chancellor Helmut Kohl has a namesake whose work should not be linked to the chancellor’s DBpedia entry. Relationships between keywords used to describe publications are asserted using the SKOS (Simple Knowledge Organization System) vocabulary.555555https://www.w3.org/TR/2009/REC-skos-reference-20090818/ For example, keywords are related to each other using the relation skos:related. Hyponyms and hypernyms are expressed by the relations skos:narrower and skos:broader. Finally, the Ontology Alignment Evaluation Initiative565656http://oaei.ontologymatching.org/ should be mentioned, which aims to achieve an established consensus for evaluating ontology matching methods.

7 Querying of Linked Data

Queries over Linked Data can be processed using link traversal [74], i. e., the query processor would use one of those IRIs given directly in the query as starting point and query the respective source for more triples involving the IRI. By iteratively doing this for more IRIs and with respect to the graph pattern defined in the query, a local set of triples is collected over which the given query can be evaluated.

More conveniently, queries over RDF and Linked Data can be formulated in SPARQL575757http://www.w3.org/TR/sparql11-query/, if a corresponding endpoint to the graph data is made available. Whereas such queries can target graphs that are stored in a single graph store, Linked Data often requires formulating and executing queries across multiple graphs that are stored at distributed data sources.

Below, we first introduce the basic query processing of SPARQL queries along with our running example. This is followed by discussing RDFS/OWL entailment regimes and querying. Finally, we present approaches for distributed querying over multiple SPARQL endpoints.

7.1 Basic Query Processing

In principle, a SPARQL query is evaluated by comparing the graph pattern defined in the query to the RDF graph and reporting all matches as results. The set of results can be restricted by additional criteria, such as filters, i. e., conditions on variables and triple patterns that additionally need to be fulfilled.

As an example, let us consider the query illustrated in Figures 8 and 9 that we want to execute over our example MusicBrainz graph from Section 2. We are now interested in the musicians of ABBA who are also members of other bands. If we follow the Linked Data principles and evaluate the query using link traversal [74], this would mean first querying for triples including the IRI that represents ABBA, then navigating to the individual band members, and then following the links to all of the members’ bands and query more relevant triples.

PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

PREFIX mo: <http://purl.org/ontology/mo/>

PREFIX foaf: <http://xmlns.com/foaf/0.1/>

PREFIX bbc: <http://www.bbc.co.uk/music/>

SELECT ?memberName ?groupName

WHERE { bbc:artists/d87e52c5-bb8d-4da8-b941-9f4928627dc8#artist mo:member ?m .

?x mo:member ?m .

?x rdf:type mo:MusicGroup .

?m foaf:name ?memberName .

?x foaf:name ?groupName }

FILTER (?groupName <> "ABBA")

Similar to relational database systems, there exist several dedicated graph stores (aka triple stores) that are optimized for RDF graphs and evaluating SPARQL queries. Some of the most popular triple stores are RDF4J [26], Jena [159], Virtuoso585858http://virtuoso.openlinksw.com, and GraphDB595959https://graphdb.ontotext.com/. They are building upon concepts and techniques known from relational database systems [83, 106] and expand them with graph-specific optimizations [153, 138, 68, 99, 50].

7.2 Entailment Regimes and Query Processing

In addition to explicitly querying existing facts, SPARQL provides inferencing support through so-called entailment regimes. They correspond to logical consequences describing the relationship between the statements that are true when one statement logically follows from one or more statements. Entailment regimes specify an entailment relation between well-formed RDF graphs, assuming that a graph entails another graph (denoted ) if there is a logical consequence from to . A regime extends the query of explicitly existing facts with facts that can be inferred using RDFS and OWL constructs (cf. Section 6), such as the extension of facts about subclasses using rdfs:subClassOf.

Depending on the feature set of the respective SPARQL triple store, different (or even no) entailment regimes are supported. They differ in terms of their power in the supported inference capabilities over RDFS/OWL classes and relationships. SPARQL query engines such as GraphDB adopt a materialized approach, wherein they compute the closure of the input RDF graph over a set of relevant entailment rules . Conversely, approaches grounded in query rewriting expand the SPARQL query itself rather than altering the RDF graph. Sub-queries are aligned with the entailment rules through backward chaining, and when the consequent of an entailment rule is matched, the antecedent of the rule is added to the query in the form of disjunctions.

Although both approaches yield equivalent answers for a given SPARQL query, their performance can diverge significantly. Materialized RDF query processing may outperform on-the-fly execution of the rewritten query, but it may consume more memory [63]. Nevertheless, various optimization techniques have been proposed to mitigate the overhead caused by the on-the-fly evaluation of entailment regimes [146]. These optimizations are required particularly in the presence of the owl:sameAs. This predicate corresponds to logical equivalence and involves the application of the Leibniz Inference Rule [66] to deduce all the equivalent triples entailed by equivalent resources based on owl:sameAs relation. This process may lead to many intermediate results, impacting the query engine’s performance. Xiao et al. [161] propose query rewriting techniques to efficiently evaluate SPARQL queries with owl:sameAs employing equivalent SQL queries.

7.3 Federated Query Processing

Federations provide another perspective on querying linked data over multiple sources. A federation of knowledge graphs shares common entities while potentially providing different perspectives on those entities. Each knowledge graph within the federation operates autonomously and can be accessed through various Web interfaces, such as SPARQL endpoints or Linked Data Fragments (LDFs) [151]. SPARQL endpoints offer users the ability to execute any SPARQL query against multiple SPARQL endpoints. In contrast, LDFs enable access to specific graph patterns, such as triple patterns [150] or star-shaped graph patterns [5], allowing retrieval of fragments from an RDF knowledge graph. A star-shaped subquery is a conjunction of triple patterns in a SPARQL query that share the same subject variable [153]. An LDF client can submit requests to a server, which then delivers results based on a data shipping policy and partitions results into batches of specified page sizes. Query processing in a federation of graphs differs from querying a single source because it enables real-time data integration of graphs from multiple sources. For example, Figure 10 depicts a SPARQL query whose execution requires the evaluation of subqueries over three knowledge graphs: a Cancer Knowledge Graph (CKG) [6], DBpedia, and Wikidata. This query could not be executed over a single data source unless the three knowledge graphs were physically materialized into one. Subqueries with a specific shape (e. g., star-shaped subqueries) need to be identified and posed against the knowledge graph(s) that is able to answer a particular part of the query. The federated query engine has to decompose input queries into these subqueries, find a plan to execute them and collect and merge the answers from the subqueries to produce a federated answer.

A federated SPARQL query engine typically follows a mediator and wrapper architecture, which has been established in previous research [158, 162]. Wrappers play a crucial role in translating SPARQL subqueries into requests sent to SPARQL endpoints, while also converting the endpoint responses into internal structures that the query engine can process. The mediator, on the other hand, is responsible for rewriting the original queries into subqueries that can be executed by the data sources within the federation. Additionally, the mediator collects and merges the results obtained from evaluating the subqueries to produce the final answer to the federated query. Essentially, the mediator consists of three main components:

-

Source selection and query decomposition. This component decomposes queries into subqueries and selects the appropriate graphs (sources) capable of executing each subquery. Simple subqueries typically consist of a list of triple patterns that can be evaluated against at least one graph. Formally, source selection corresponds to the problem of finding the minimal number of knowledge graphs from the federation that can produce a complete answer to the input query. On the other hand, query decomposition requires partitioning the triple patterns of a query into a minimal number of subqueries, such that each subquery can be executed over at least one of the selected knowledge graphs. Commonly, federated query engines follow heuristic-based methods to solve these two problems. For example, for query decomposition, heuristics based on exclusive groups [134] or star-shaped subqueries [153, 152, 102] enable to efficiently solve source selection and query decomposition in queries free of general predicates (e. g., owl:sameAs or rdf:type).

-

Query optimizer. This component identifies execution plans by combining star-shaped subqueries (SSQs) and utilizing physical operators implemented by the query engine. Formally, optimizing a query corresponds to the problem of finding a physical plan for the query that minimizes the values of a utility function (e. g., execution time or memory consumption). To maximize the utility function, query optimizers consider plans with different orders of executing operators, alternative implementations of operators, such as joins, as well as particular execution alternatives for certain query types, e. g., queries involving aggregation [86]. In general, finding an optimal solution is computationally intractable [85], while the problems of constructing a bushy tree plan [132] and finding an optimal query decomposition over the graphs [152] are NP-Hard. A bushy tree plan is a query execution plan that represents a query as a tree structure with multiple branches or subqueries, which can also be bushy-tree plans. Query plans can be generated following the traditional optimize-then-execute paradigm or re-optimize and adapt a plan on the fly according to the conditions and availability of selected graphs [47]. Alternatively, the query optimizer may resort to a cost model to guide the search on the space of query plans and identify the one that minimizes the values of the utility function [102].

-

Query engine. This component of a federated query engine implements the physical operators necessary to combine tuples obtained from the graphs. These physical operators are designed to support logical SPARQL operations such as JOIN, UNION, or OPTIONAL [115]. Physical operators can be empowered to adapt execution schedulers to the current conditions of a group of selected graphs. Thus, adaptivity can be achieved at the intra-operator level, where the operators can detect when graphs become blocked or data traffic bursts. Additionally, intra-operator opportunistically produce results as quickly as data arrives from the graphs, and can produce results incrementally. Some opportunistic approaches [4, 82, 56, 3] combine producing results quickly in an incremental fashion with greedy source selection so that the system stops querying additional graphs once the user’s wishes, e. g., in terms of the minimum number of obtained results, are fulfilled.

During the query optimization process, a plan is generated as a bushy tree that comprises four join operators. This is shown in Figure 10.

8 Trustworthiness and Provenance of Graph Data

Trustworthiness of web pages and data on the web can be detected by various indicators, e. g., by certificates, by the placement of search engine results, and by links (forward and backward links) to other pages. However, on the Semantic Web, there are few ways for users to assess the trustworthiness of individual data. Rules can be utilized to define policies and business logic over the web of data, and transparently used to infer data that validate or do not validate these policies. The trustworthiness of inferred data can be assessed through its provenance, which encompasses metadata detailing how the data was acquired and verified [94].

The trustworthiness of data on the web can be inferred from the trustworthiness of other users (“Who said that?”), the temporal validity of facts (“When was a fact described?”), or in terms of uncertainty of statements (“To what degree is the statement true?”). Artz and Gil [9] summarize trustworthiness as follows: “Trust is not a new research topic in computer science, spanning areas as diverse as security and access control in computer networks, reliability in distributed systems, game theory and agent systems, and policies for decision-making under uncertainty. The concept of trust in these different communities varies in how it is represented, computed, and used.” Although trustworthiness has long been considered in these areas, the provision and publication of data by many users to multiple sources on the Semantic Web introduces new and unique challenges.

One way of facilitating trust on the Semantic Web is to capture and provide the provenance of data with the PROV ontology (PROV-O)606060https://www.w3.org/TR/prov-o/. It captures information about which Agents cause data Entities to be processed by which Activities. Capturing such information requires the use of known tools for modeling metadata for RDF data, e. g., reification, singleton properties, named graphs, or RDF-star616161https://w3c.github.io/rdf-star/. While some approaches use these constructs to capture provenance information for each triple individually [54], others exploit the fact that typically multiple triples share the same provenance [72] so that they can be combined into the same named graph encoding the provenance information only once for a set of triples. Delva et al. [40] introduce the notion of shape fragments, which entail the validation of a given shape through the neighborhood of a node, along with the node’s provenance and the rationale behind its validation.

Furthermore, trustworthiness also plays a role in inference services on the Semantic Web, as data inference must consider specifications related to trustworthiness and data must be evaluated for trustworthiness. Important aspects for trustworthiness of data include [9]: the origin of the data, trust already gained based on previous interactions, ratings assigned by policies of a system, and access controls and, in some cases, security and importance of information. These aspects are realized in different systems.

In general, data provenance and trustworthiness of data on the Semantic Web have been addressed for RDF data [43, 52] as well as for OWL and rules in [43]. In addition, there are some recent approaches on supporting how-provenance for SPARQL queries [60, 77, 53] with the goal of providing users with explanations on how the answers to their queries were derived from the underlying graphs. Other work deals with access controls over distributed data on the Semantic Web [58]. Furthermore, there are approaches to computing trust values [139] and informativeness of subgraphs [91]. There are also digital signatures for graphs [16]. Analogous to digital signatures for documents, entire graphs or selected vertices and edges of a graph are provided with a digital signature to ensure the authenticity of the data and thus detect unauthorized modifications [92]. In the approach for digital graph signatures developed by Kasten et al., graph data on the Web is supported in RDF format as well as in OWL [93]. The digital graph signature is itself represented as a graph again and can thus be published together with the data on the Web. The link between the signature graph and the signed graph is established by the named graph mechanism [93], although other mechanisms are also possible. Through this mechanism, it is possible to combine and nest signed graphs. It is thus possible to re-sign already signed graphs together with other, new graph data, etc. This makes it possible to build complex chains of trust between publishers of graph data and to be able to prove the origin of data [93, 92].

9 Applications