Uncertainty Management in the Construction of Knowledge Graphs: A Survey

Abstract

Knowledge Graphs (KGs) are a major asset for companies thanks to their great flexibility in data representation and their numerous applications, e.g., vocabulary sharing, Q&A or recommendation systems. To build a KG, it is a common practice to rely on automatic methods for extracting knowledge from various heterogeneous sources. However, in a noisy and uncertain world, knowledge may not be reliable and conflicts between data sources may occur. Integrating unreliable data would directly impact the use of the KG, therefore such conflicts must be resolved. This could be done manually by selecting the best data to integrate. This first approach is highly accurate, but costly and time-consuming. That is why recent efforts focus on automatic approaches, which represent a challenging task since it requires handling the uncertainty of extracted knowledge throughout its integration into the KG. We survey state-of-the-art approaches in this direction and present constructions of both open and enterprise KGs. We then describe different knowledge extraction methods and discuss downstream tasks after knowledge acquisition, including KG completion using embedding models, knowledge alignment, and knowledge fusion in order to address the problem of knowledge uncertainty in KG construction. We conclude with a discussion on the remaining challenges and perspectives when constructing a KG taking into account uncertainty.

Keywords and phrases:

Knowledge reconciliation, Uncertainty, Heterogeneous sources, Knowledge graph constructionCategory:

SurveyCopyright and License:

2012 ACM Subject Classification:

Information systems Information integration ; Information systems Uncertainty ; Information systems Graph-based database modelsDOI:

10.4230/TGDK.3.1.3Received:

2024-07-19Accepted:

2025-04-25Published:

2025-06-20Part Of:

TGDK, Volume 3, Issue 1Journal and Publisher:

Transactions on Graph Data and Knowledge, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

Transactions on Graph Data and Knowledge, Schloss Dagstuhl – Leibniz-Zentrum für Informatik

1 Introduction

Huge amounts of data expressed in the form of tables, texts, or databases are generated by organizations every day. When using these data within an organization, we have to deal with uncertainty, as the data often suffer from contradictions and differences in specificity leading to conflicts. These are the effects of incompleteness, vagueness, fuzziness, invalidity, ambiguity, and timeliness, leading to uncertainty about the correctness of the data [33]. The uncertainty can be due to the source of the data (e.g., a document written by an expert versus a non-expert in the field concerned) or in the data itself (e.g., a scientific supposition where the fact is not yet well-defined but accepted by consensus). For example, on the French Wikipedia page of the former president of France Jacques Chirac222https://fr.wikipedia.org/wiki/Jacques_Chirac, we can read that he was the mayor of Paris from March 25, 1977 to May 16, 1995 while on Wikidata333https://www.wikidata.org/wiki/Q2105 it is mentioned that he was the mayor of Paris from March 20, 1977 to May 16, 1995 as depicted in Figure 1.

In addition, data are not stable over time [133]. Some facts are known to change including all facts that involve a period of time for which the fact is valid (e.g., the mandate of a president or the place of residence of a person) or knowledge in specific domains may change regularly. For instance, in paleontology where excavations reveal new discoveries and modify knowledge previously established.

This raises questions about the meaning of data and knowledge. Data are uninterpretable signals (e.g., numbers or characters). Information is data equipped with a meaning. In [138], the authors define knowledge as data and information that enter into a generative process supporting tasks and creating new information. A knowledge graph (KG) is “a graph of data intended to accumulate and convey knowledge of the real world, whose nodes represent entities of interest and whose edges represent potentially different relations between these entities” [69]. Many knowledge graphs (KGs) have been built to represent such data in recent years, and they have become a major asset for organizations, since KGs can support various downstream tasks such as knowledge and vocabulary indexing, as well as other applications in recommendation systems, question-answering systems, knowledge management, or search engine systems [68, 122]. To build or enrich a KG and reconcile uncertain data, we can rely on manual approaches (e.g., domain experts) but this is a time-consuming and tedious process. Alternatively, it is common to leverage automatic knowledge extraction approaches that handle large volumes of data from various heterogeneous sources, e.g., texts [35], tables [100], or databases to ensure the coverage of the KG. These automatic approaches are usually based on three main steps:

-

1.

Extraction of knowledge from documents.

-

2.

Detection of duplicates and conflicts between extracted knowledge. Conflicts occur from differences in specificity or knowledge contradictions.

-

3.

Fusion of aligned knowledge: once detection is completed, conflicting knowledge should be reconciled.

However, each of the aforementioned steps is error-prone and increases the uncertainty on extracted knowledge due to the performance of the algorithms [81, 163, 93].

Uncertainty can also be found in knowledge and we can distinguish two types of knowledge: objective knowledge where a single value is accepted (e.g., the mandate of Jacques Chirac, where only one period of time is the true value), and subjective knowledge where multiple values can be accepted depending on their context and point of view (e.g., the number of participants in a protest depending on the counting technique). Most KG construction methods do not take into account noisy facts and the uncertainty inherent in the extraction algorithms and knowledge, which may impact downstream applications. Therefore, there is a need to reconcile knowledge units extracted from heterogeneous sources before integrating them into the KG in order to obtain a single or multiple representations that are as reliable and accurate as possible [122]. In this survey, we review different approaches to integrate knowledge uncertainty in the main steps of KG construction with current knowledge fusion methods and its representation in the graph. [127] surveys approaches and evaluation methods for KG refinement, particularly KG completion and error detection methods. In [98], the authors review truth discovery methods used in knowledge fusion before 2015. However, to the best of our knowledge, no survey specifically addresses uncertainty handling in KG construction.

The remainder of this survey is structured as follows. In Section 2, we describe our research methodology which allowed us to write this survey. We introduce the definition of KGs with some well-known KGs that have been built in recent years, then tools and quality metrics considered in KGs construction in Section 3. We present some knowledge extraction approaches and why they lead to uncertain knowledge in Section 4. An ideal knowledge integration pipeline for handling the uncertainty of knowledge is provided in Section 5, while the steps of knowledge refinement from the pipeline are described in Section 6 for uncertainty consideration in KG representation learning, in Section 7 for knowledge alignment, and in Section 8 for knowledge fusion. The solutions for uncertainty representation in KGs are then listed and depicted in Section 9. We also discuss some perspectives on the use of uncertainty in the KG ecosystem in Section 10, before concluding this survey in Section 11.

2 Research Methodology

This paper surveys methods to construct a KG from uncertain knowledge. In this section, we present our research methodology for finding and selecting papers for this purpose. To find papers of interest, we mainly used the Google Scholar search engine and created alerts with the following keywords: KG fusion, multi-source knowledge fusion, KG resolution, KG quality, knowledge fusion, KG reconciliation, KG alignment, KG matching, KG resolution, KG cleaning. The aforementioned keywords were combined with the keyword “uncertain” and the terms “knowledge”, “data”, and “information” used interchangeably, e.g., “uncertain knowledge fusion”, “uncertain data fusion”, or “uncertain information fusion”.

For the selection of the papers, we proceeded as follows: (1) we first looked at the title of the paper, if it is relevant and seems to be related to one of the topics we were looking for, then we read the abstract; (2) if the paper presents a method related to uncertain KG construction or a method that is not related to KGs but which could be extrapolated to a KG, we selected it. We provide Figure 2 that depicts the distribution of paper publication years according to four applications of KG refinement: uncertain embedding, knowledge fusion, knowledge alignment, and uncertainty representation. This survey covers the works addressing the representation of uncertainty in a KG published after 2004, while the representation of uncertainty in a vector space, which is a more recent research topic, covers those published since 2016. The distribution of publication years for data fusion methods presented in this survey is rather spread out starting from 2007. This is due to new models based on deep learning that are being explored to tackle these tasks.

3 Knowledge Graphs

Before going into further detail on reconciliation approaches, it is important to define KGs, which form the core of this survey. KGs provide a structural representation of knowledge that is captured by the relations between entities in the graph. The KGs provide a concise and intuitive representation and abstraction of data, making them an ideal tool to manage knowledge of organizations in a sustainable way or to support search and querying applications [69], which led several companies to build their own KGs [122].

In this section, we provide a definition of KG, and we describe some well-known KGs including open KGs and Enterprise Knowledge Graphs (EKGs) and how their consistency is maintained in Section 3.2 and Section 3.3.

3.1 What Is a Knowledge Graph?

Formally, KGs are directed and labeled multigraphs , where is the set of entities, is the set of relations, and is the set of triples , which are the atomic elements of KGs, also called facts. The subject and object are represented by nodes, the predicate indicates the nature of the relationship holding between the subject and object represented by an edge in the KG [69, 122]. For instance, such a triple could be as illustrated in Figure 3. The classes and relationships of entities are defined by a schema or otherwise named an ontology, which itself can be represented as a graph embedded in the KG [42, 36]. To build a schema or ontology, different vocabularies or languages are available, such as RDF Schema444https://www.w3.org/TR/rdf12-schema/ (RDFS) or OWL (Ontology Web Language) and OWL 2 [52]. RDFS is designed to describe RDF vocabularies specific to a domain by defining classes, subclass relationships, properties, domain, range and sub-property relationships. OWL is an ontology language and an extension of RDFS defined by Description Logics (DLs), which are a family of formal knowledge representation that enable reasoning about ontologies. Specifically, OWL DL is based on the description logic [52] and OWL 2 DL is based on [70]. OWL and OWL 2 allow the creation of ontologies with more complex constraints than RDFS (e.g., cardinality restrictions). They include several sub-languages, each offering different levels of expressiveness depending on the constructors applied (e.g., disjunction, inverse roles, role transitivity, etc.) in the description logic that defines the language. In a KG with an ontology defined using a language based on DLs, two boxes coexist, namely the Terminology Box (TBox) and the Assertion Box (ABox). The TBox defines classes and properties and the ABox contains instances of classes defined in the TBox. For example, in Figure 3, “Company” and “Country” are concepts defined by the ontology, “Galaxy S23”, “Samsung”, and “Korea” are the instances of these concepts while “2023”, “800€” are literals i.e., attributes that characterize an entity. This semi-structured representation of data, defined by its ontology, provides a clear and flexible semantic representation whose classes and relations can be easily added and connect a large number of domains [36].

3.2 Open Knowledge Graphs

For the last few years, some KG construction projects that link general knowledge about the world have appeared. The best known is probably Wikidata555https://www.wikidata.org/wiki/Wikidata:Main_Page, a large, free, and collaborative KG supported by the Wikimedia Foundation. It is a multilingual general-purpose KG that contains more than 100 million elements [155]. The structure of Wikidata is based on property-value pairs, where each property and entity is an element. A typical entity also contains labels, aliases, descriptions, and references to Wikipedia articles. To maintain the quality of data, some constraints such as property or unique value constraints alert the user in case of suspicious input (e.g., constraint violations). Wikidata also keeps the sources and references of provenance of entities to ensure their traceability, which is one of the requirements for KG quality [162].

NELL is an intelligent computer agent that ran continuously between January 2010 and September 2018 according to the official NELL website666http://rtw.ml.cmu.edu/rtw/ and that every day extracted knowledge from texts, tables, and lists on the web to feed a Knowledge Base (KB) [19]. To maintain the consistency of the KB, the knowledge integrator of NELL exploits relationships between predicates by respecting mutual exclusion (e.g., an instance of a Human class cannot be an instance of a Car class, since Human and Car are mutually exclusive) and type checking information. In addition, NELL components provide a probability for each candidate and a summary of the source supporting it.

YAGO is an ontology built on statements of Wikipedia that combines high coverage with high quality [145]. The data model of YAGO is based on entities and binary relations extracted from WordNet and Wikipedia. A manual evaluation is performed to verify the quality of data. To do this, facts are randomly selected with their respective Wikipedia pages that are used as Ground Truth (GT).

DBpedia is a multilingual KB built by extracting structured information from Wikipedia (e.g., infoboxes) and making this information available on the Web [7]. Since DBpedia is populated from Wikipedia pages in different languages, the data retrieved can sometimes be conflicting. To manage these conflicts, DBpedia has a module called Sieve which performs quality assessments by computing some metrics such as the “recency” or the “reputation” of data before applying a fusion step based on these dimensions [111, 16].

Freebase is a graph created in 2007 which provides general human knowledge and which aims to be a public directory of world knowledge. A component included in Freebase called Mass Typer, allows users to complete and semi-automatically reconcile data with data already present in Freebase by performing three actions: merge, skip, or add the data. Then acquired by Google and used to support systems such as Google Search, Google Maps, and Google KG, nowadays, Freebase is closed, and its knowledge has been transferred to Wikidata [11].

ConceptNet is the KG version of the Open Mind Common Sense project that contains information about words from several languages and their roles in natural language. It was built by collecting knowledge from multiple data sources namely Open Mind Common Sense, Wiktionary, games with a purpose for harvesting common knowledge, Open Multilingual WordNet, JMDict, OpenCyc, and DBPedia. Each node corresponds to a word or a sentence, and the relations between nodes are associated with numerical values that intend to represent the level of uncertainty about the relation [142].

3.3 Enterprise Knowledge Graphs

EKGs are major assets for companies since they can support various downstream applications including knowledge/vocabulary sharing and reuse, data integration, information system unification, search, or question answering [51, 122, 139]. This led companies such as Google, Microsoft, Amazon, Facebook, Orange and IBM, to build their own KGs [122, 77].

For instance, Microsoft built Bing KG to answer any kind of question through Bing search engine. With a size of about two billion entities and 55 billion facts according to [122], it contains general information about the world such as people, or places and allows users to take actions such as watching a video or buying a song. Alternatively, KGs can also increase understanding of user behavior.

It is the case of the Facebook KG that establishes links between the users as well as interests of users, e.g., movies or music tastes. The Facebook KG is the largest social graph with about 50 million entities and 500 million statements in 2019. To handle conflicting information, the Facebook KG removes information if the associated confidence is low, otherwise, conflicting information is integrated with its provenance and the estimated confidence of the information.

Yahoo KG [117] provides various services such as a search engine, a discovery system to relate entities, or for entity recognition in queries and text. To build their KG, they leverage Wikipedia and Freebase as the backbone of the KG and use various complementary data sources to maximize the relevance, comprehensiveness, correctness, freshness, and consistency of knowledge. They mainly validate the data w.r.t. the ontology and through a user interface that enables entities to be corrected and updated.

Also, Orange bootstraps its KG from a set of terms of interest from a enterprise repository [77]. These terms of interest are aligned with their equivalent Wikidata entities. Then, an expansion is performed by retrieving their N-hops neighborhood to identify additional entities of interest. To ensure the quality of this initial KG, pruning methods based on Euclidean distance in the embedding space, degrees of Wikidata entities, or a method based on analogical inference are used [77, 76].

In [122], the authors mention future challenges including disambiguation, knowledge extraction from unstructured and heterogeneous sources, and knowledge evolution management in the process of KG construction. We discuss some of these challenges in the next section.

4 Knowledge Acquisition

The previous section introduced some KGs including open KGs and EKGs. To build such KGs, we could rely on knowledge extraction, which is the first step in the knowledge integration process. In this section, we present what knowledge extraction is and some well-known automatic approaches in Section 4.1 that extract knowledge from texts (Section 4.1.1), Web (Section 4.1.2), and Large Language Models (LLMs) with the recent interest in probing methods (Section 4.1.3). These approaches inherently introduce uncertainty due to their imperfect accuracy in extracting facts. Finally, the definition of KG quality and related metrics is provided in Section 4.2.

4.1 Knowledge Extraction

Methods for populating a KG depend on the knowledge domain and the desired graph coverage. For example, one method could rely on the knowledge of domain experts and populate the graph manually (e.g., by crowdsourcing, such as Wikidata [155] or Freebase [11]). However, this is a time-consuming process, especially if the graph is intended to be large and may suffer from quality issues [15, 140]. Furthermore, such open KGs have a large community that enterprises or specific KGs may not have. Therefore, large KGs such as Google, Amazon and Bing rely on automatic construction methods [122]. In the following sections, we present the different tasks involved in knowledge extraction from various data sources.

4.1.1 From Texts

For a long time, the majority of data has been represented and exchanged in the form of text [106, 125]. Texts in all their forms (e.g. reports, articles, or any other textual documents) are an invaluable source of information, as they are the most widely used data formats in the world (e.g., in the scientific research domain, where knowledge is communicated through scientific articles [74]). To leverage knowledge from texts as data sources to enrich a KG, we rely on a task called Information Extraction (IE) (or Knowledge Acquisition). IE transforms unstructured information in text form into structured information, i.e., triples [120]. The aim of information extraction is to identify entities, their attributes, and their relationships with other entities in text [165]. In general, this task is divided into several sub-tasks: Entity Recognition (ER) or Named Entity Recognition (NER) and Relation Extraction (RE). Figure 4 depicts the input and output of a text-based knowledge extraction task. NER aims to identify named entities into the text and classify them to general types, while RE extracts semantic relationships that occur at least between two entities [184, 58].

There are two main IE approaches in the literature [120]: Traditional Information Extraction (Traditional IE) and Open Information Extraction (Open IE). Traditional IE relies on manually defined extraction patterns or patterns learned from manually labeled training examples [120]. However, if the domain of interest evolves, the user must redefine the extraction patterns. Open IE does not rely on predefined patterns and faces three challenges [174, 120]: automation, text heterogeneity and scalability. Automation means that the information extraction system must rely on unsupervised extraction strategies. Heterogeneity stands for the different domains and genres of text e.g., a scholar journal versus a popular science journal. Since the extractions are performed in an unsupervised manner i.e., without any labeling or predefined schema to support the extraction, this implies higher uncertainty in the extracted knowledge. Furthermore, as text types are heterogeneous due to its unstructured form, knowledge extraction patterns are more general and can lead to different levels of specificity of knowledge. Finally, the system must be able to handle large volumes of text for scalability reasons. The most common approaches to address these issues consist of pipelines composed of methods based on Natural Language Processing (NLP) [165].

One of the earliest examples of a traditional IE system is KnowItAll [43], which automates the domain-independent extraction of large collections of facts (i.e., triples) from the Web. However, it is supported by an extensible ontology and a minimal set of generic rules for extracting entities and relations contained in its ontology. KnowItAll consists of four components: an extractor, a search system, an evaluator, and a database. Its extractor instantiates a set of extraction rules for each class and relation based on a generic domain-independent pattern, for example deduces that Paris and Stockholm are instances of a “City” class. The search component, which includes 12 search engines such as Google, applies queries based on the extraction rules, i.e., “cities such as”, then retrieves the web pages and applies the extractor. An evaluator leverages the statistics provided by search engines to assess the probability that the extracted relationships are valid. Once the extracted data has passed through these three components, it is stored into a relational database.

Traditional approaches for information extraction rely on an extractor for each target relation based on labeled training examples (e.g., pre-designed extraction patterns). However, these approaches do not address the problem of extraction on large corpora whose relations are not all specified in advance [46], whereas Open IE no longer relies on predefined patterns and allows new information to be explored [120].

For example, TextRunner [174] which introduced the concept of Open IE, extracts a set of relational tuples without requiring human input. TextRunner is described by three components. The first one is a single-pass extractor that labels the text with part-of-speech tags (PoS) (i.e., grammatical tagging) and extracts triples. The second component is a self-supervised classifier trained to detect the correctness of the extraction. Finally, the last component is a synonym resolver which groups synonymous entities and relations together, since TextRunner has no predefined relations to guide extractions.

A slightly more recent approach is ReVerb [46]. Using constraints, this method aims to resolve the inconsistent extractions of previous Open IE models due to predicates composed of a verb and a noun. Two types of constraints on relational sentences are introduced: a syntactic constraint and a lexical constraint. First, the syntactic constraint imposes the relational sentence to start either with a verb, a verb followed by a noun, or a verb followed by nouns, adjectives, or adverbs. Regarding the lexical constraint, it focuses on relations that can take many arguments and not on very specific relations. According to the results, these additional constraints allow ReVerb to outperform TextRunner. In addition, ReVerb assigns a confidence score to extractions from a sentence by applying logistic regression classification. To do this, extractions of the form from a sentence and for 1,000 sentences were labeled as valid or invalid, and 19 features such as “ begins with ”, “ is a proper noun”, “ covers all words in ” were used as input variables for the logistic regression model. Such confidence scores can be used for downstream knowledge extraction tasks to support their integration into the KG (see Section 5.3).

OLLIE [108] extends the syntactic scope of relations phrases to cover much larger number of expressions and allows additional context information such as attribution and clausal modifiers. The authors argue that other models lack context on extracted relations. Hence, compared to previous methods, OLLIE introduces a new component that analyzes the context of an extraction when the extracted relation is not factual. This context is attached to each extracted relation and models the validity of the information expressed (e.g., mentions of “according to” in a sentence). In [75], the authors present multiple components involved in different IE pipelines in the literature. They propose several combinations of these components and evaluate them in a complete pipeline that includes four steps in the PLUMBER framework: Coreference Resolution, Triples Extraction, Entity Linking and Relation Linking. 40 reusable search components are combined, representing 432 distinct information extraction pipelines. Further information is provided in [75].

4.1.2 From the Web

The Web contains a huge amount of data. It is probably the most widely used tool for exchanging knowledge between people (e.g., in the form of HTML texts). Therefore, it represents an invaluable data source for building KGs. However, the latter suffers from uncertain facts, partly due to the fact that anyone can edit it. In this context, it is necessary to select reliable data sources from the Web and to implement approaches for assessing the reliability of the extracted knowledge. In this section, we present some KGs that have been built from the Web.

NELL [112] is an agent that takes an initial ontology consisting of categories and relations, which is used to define learning tasks such as category classification, relation classification, or entity resolution. The core of NELL consists of learning thousands of tasks to classify extracted noun phrases into categories, to find the confident relations for each pair of noun phrases, and to identify synonymous noun phrases. NELL reads facts from the Web and incrementally refines its KB by removing incorrect ones from a set of labeled data and user feedback on the trustworthiness of the extracted facts. The extracted facts are then stored in the KB with their provenance and confidence score computed during the relation classification step.

Knowledge Vault [35] is a probabilistic KB that combines extractions from Web content and prior knowledge derived from existing knowledge repositories such as Freebase. They rely on the Local Closed World Assumption, i.e., for a set of existing object values from an existing KG containing a set of triples, a candidate triple is correct if . However, if and , the triple is incorrect. Hence, this assumption can be difficult to adopt in the construction of an EKG. Fact extraction is performed using four different extractors from: text documents, HTML trees, HTML tables, and human annotated pages. To merge the extractors they construct a feature vector for each extracted triple and apply a binary classifier to compute the probability of the fact being true given the feature vector. Each predicate is associated with a different classifier. The feature vector contains, for each extractor, the square root of the number of sources from which the triple was extracted and the mean score of the extractions across these sources. They assume that the confidence scores of each extractor are not necessarily on the same scale. Therefore, to cope with this issue, they apply a Platt scaling method that fits a logistic regression model to the confidence scores in order to obtain a probability distribution.

Probase [164] does not consider knowledge extracted from the Web to be deterministic but rather models it using probabilities. The authors argue that existing KBs and taxonomy construction methods do not have sufficient concept coverage for a machine to understand text in natural language. Probase includes the uncertainties of the extracted knowledge (specifically vagueness and inconsistencies that are due to the knowledge and to flawed construction methods). It was built from 1.6 billion web pages using an iterative learning algorithm that extracts pairs that verify an isA relation between and , and then a taxonomy construction algorithm organizes these extracted pairs into a hierarchy. In Probase, facts have probabilities that measure their plausibility and typicality. Plausibility is computed from multiple features, e.g., the PageRank score, the patterns used to extract isA pairs, or the number of sentences where x or y is present with its respective role (sub or super concept). Typicality is then computed as a function of plausibility and the number of pieces of evidence of the fact, i.e., the number of sentences in which the fact is mentioned.

4.1.3 Probing

With the arrival of deep learning models and Large Language Models (LLMs), some triple extraction tasks are now successfully carried out by such models. [114] reviews some of them such as Graph-Based Neural Models, CNN-based model, Attention-Based Neural model and others applied to a specific knowledge domain. Also, with significant advances in LLMs and the fact that they are trained on a wide variety of information sources, some researchers have shifted their attention to KG construction by leveraging the knowledge learned by LLMs [125]. For example, a workshop on KB construction from pre-trained language models (KBC-LM777https://lm-kbc.github.io/workshop2024/) and a challenge on language models for KB construction (LM-KBC888https://lm-kbc.github.io/challenge2024/) are now proposed at the International Semantic Web Conference (ISWC999https://iswc2024.semanticweb.org/).

In [58], the authors use the BERT model for NER and RE tasks to build a biomedical KG. In [57], the authors exploit knowledge encoded in LLM parameters (a.k.a. parametric knowledge [125]) to feed a KG by harvesting knowledge for relations of interest. To illustrate their method, they provide an example of knowledge extraction for the “potential_risk” relation. The input contains a prompt such as “The potential risk of A is B” with a few shots of seed entity pairs that validate the relationship, e.g., (eating candy, tooth decay). Then, the entity pairs obtained at the output of the LLM are ranked according to a consistency score computed w.r.t. the compatibility scores between entity pairs.

However, Pan et al. [125] explore possible interactions and synergies between KGs and LLMs including the construction of KGs from LLMs and raise several issues. LLMs can be used to extract knowledge directly, but they are mainly applied to generic domains and perform poorly on specific domains. They also lack accuracy with numerical facts (e.g., the birthday of a person) and have difficulty retaining knowledge related to long-tail entities. In addition, LLMs are subject to various biases (e.g., gender bias) that are inherent in the training data. Finally, LLMs do not provide any provenance or reliability information for the extracted knowledge [125], which can be an obstacle for many knowledge fusion approaches presented in Section 8. In [187], the authors evaluate the ability of LLMs, particularly different GPT models, on KG construction and reasoning tasks (i.e., link prediction and question answering) under zero-shot and one-shot settings. The authors also point out that LLMs do not outperform state-of-the-art models for KG construction and have limitations in recognizing long-tail knowledge.

4.2 Quality and Metrics

Assessing the quality of the constructed KG is important since it is practically impossible to obtain a perfect KG, especially when it is very large and populated by automatic approaches from multiple data sources, or by manual approaches where human contributors are not necessarily familiar with KGs and have different levels of expertise.

Furthermore, the world is uncertain and knowledge is constantly evolving.

To evaluate a KG, we can rely on five quality dimensions [160, 170, 69, 67]: completeness, accuracy, timeliness, availability, and redundancy.

Completeness refers to the coverage of knowledge within the specific domain the KG is intended to represent.

The evaluation of this dimension depends on the assumption made about the KG [50]: the Open World Assumption (OWA), the Closed World Assumption (CWA), and the Local Closed World Assumption (LCWA).

Under the OWA, if a triple is not present in the KG, it is not necessarily incorrect but rather unknown.

Conversely, under the CWA if a triple is not present in the KG, then it is considered incorrect.

Finally, under the LCWA if the KG knows at least one object or value for a predicate associated with the subject , it is assumed to know all the values of the pair .

For example, this dimension can be measured as the number of instances represented in the KG relative to the total expected number of instances.

Some studies have focused on estimating the expected total number.

More details on these measures can be found in [160].

Accuracy corresponds to the correctness of the facts in the KG.

In [162], Weikum et al. define some metrics to assess the quality of a KB such as precision that captures the accuracy (these terms are sometimes used to describe the same thing), and recall that captures the completeness, in the following way:

where is a set of statements from the KB to be evaluated, and is the ground truth set for the domain of interest.

To deal with uncertain statements that are associated with a confidence score, a threshold is chosen, for which all statements with a score above this threshold are kept.

They also provide an evaluation method that involves uniformly taking a sample of statements and representative of the KB and evaluating it, for example manually, where several annotators may be involved and a consensus or large majority must be found for each annotation.

Timeliness represents how up-to-date the KG is. The KG can contain temporal facts or facts that evolve and are valid only over a fixed period of time.

Availability measures the access to KG data, involving its querying and representation.

For example, this dimension can be measured by the response time to queries or by the level of accessibility to KG data (e.g., RDF, Turtle, JSON-LD, or SPARQL query service).

Redundancy assesses whether different statements express the same fact, which may require an entity resolution task where duplicates are aligned and then the triples associated with these duplicates are fused.

Another aspect of data quality is the preservation and representation of its provenance and certainty in the form of metadata, which can be used for data selection issues in terms of both source and quality. The metadata can also support knowledge fusion approaches by serving as prior knowledge, as we describe in Section 8. Other metrics are proposed in the survey [160] for each quality dimension.

5 Knowledge Graph Refinement

A KG can be populated by human effort, or by automatic knowlegde extraction approaches from heterogeneous sources (e.g., tables, texts, databases, etc.) as presented in Section 4. The advantage of using multiple sources is twofold: ensuring knowledge coverage and identifying inconsistencies by leveraging collective wisdom [41]. However, the world is uncertain and data sources are of varying quality leading to uncertain knowledge, which must be handled in the integration process w.r.t. the quality dimensions listed in Section 4.2. The causes of uncertainty are presented in Section 5.1. We provide a brief overview of several methods for integrating data into a KB under uncertainty in Section 5.2. Section 5.3 presents our theoretical data integration pipeline, designed to address knowledge uncertainty and enrich the KG.

5.1 Knowledge Deltas

Uncertainty is everywhere in knowledge and can take the form of invalidity, vagueness, fuzziness, timeliness, ambiguity, and incompleteness according to [34]. We adopt this definition of uncertainty in this survey. We distinguish two types of uncertainty: epistemic, i.e., knowledge about a piece of information is incomplete or unknown; and ontic, i.e., uncertainty is inherent in the information [156]. The possible causes of uncertainty are [1, 156]: (i) a lack of knowledge; (ii) a semantic mismatch or a lack of semantic precision, and (iii) a lack of machine precision.

When a KG is constructed from multiple heterogeneous data sources, uncertainty can lead to the emergence of knowledge deltas, characterized by differences in the information or facts they contain. These knowledge deltas may occur between two data sources on the same subject, e.g., differences in specificity and contradictions. For example, a very specific data source and a generic data source may provide information on the same topic but with different terms, increasing the risk of knowledge delta. It is also possible for a data source to contradict itself, one possible way to detect these deltas is to compare the data source with itself by “reflecting on data patterns or extrapolation to complete missing information and/or detect wrong ones” according to [33]. On the other hand, duplicates can also occur when the two data sources provide exactly the same knowledge, which needs to be managed for reasons of scalability and KG quality.

We use some examples to illustrate the various forms that uncertainty can take. Suppose that is a statement. Among the possible knowledge deltas, we find six causes:

-

Invalidity: is invalid. As illustrated in Figure 5 (a), the Wikipedia text provides invalid information: the date of renaming of the Paris region to “Île-de-France” is invalid in the Wikipedia page101010https://en.wikipedia.org/w/index.php?title=Paris&oldid=1197869134;

-

Vagueness: provides vague, imprecise information. As depicted in Figure 5 (a), the date mentioned on Wikipedia is more vague than the date provided by Wikidata111111https://www.wikidata.org/w/index.php?title=Q90&oldid=2058313448 for the “located in the administrative territorial entity” property, which contains additional information such as the day, month, and year;

-

Fuzziness: states a fuzzy truth, where the range of values is itself imprecise. If we focus only on the sentence within the black box in Figure 5 (b) of the Wikipedia article121212https://en.wikipedia.org/wiki/5G, it indicates that the 5G network has a higher peak download speed, but without specified lower and upper bounds.

-

Timeliness: a data source may provide the statement which is no longer valid at the current time, unlike another source, which may provide an updated version of . As in Figure 5 (c), on the Wikipedia page131313https://en.wikipedia.org/w/index.php?title=Twitter,_Inc.&oldid=1087087372 of May 10 2022, “Twitter” had not yet been renamed “X”. This information has now been changed, otherwise there would have been an update issue;

-

Ambiguity: has multiple interpretations. As shown in Figure 5 (d), Mercury141414https://en.wikipedia.org/wiki/Mercury can be a planet, an element, or a god in mythology;

-

Incompleteness: gives incomplete information. As in Figure 5 (e), the tracklist of the album “Evolve” by the group Imagine Dragons on Wikidata151515https://www.wikidata.org/w/index.php?title=Q29868187&oldid=2009666363 contains fewer songs than in Wikipedia161616https://en.wikipedia.org/w/index.php?title=Evolve_(Imagine_Dragons_album)&oldid=1197244329.

The appearance of knowledge deltas can be involuntary or voluntary. An involuntary delta could be the result of uncertain knowledge about a domain (e.g., popular science article vs expert article), a typing error, or an outdated data source. A voluntary delta could simply stem from sabotage by a malicious person (for example, spreading fake news). Deltas are closely related to the quality dimensions of a KG, since they have a direct impact on them. For example, a delta due to the invalidity of an information from a data source directly affects the accuracy of a KG. We propose to classify these types of deltas leading to conflicts into two classes as depicted in Figure 6, namely Specificity that stands for a difference between two data sources in the specificity of knowledge and Contradictory that stands for an incompatibility of knowledge. We classify Fuzziness, Incompleteness, and Vagueness deltas in the specificity category. These deltas lead to different levels of specificity between the knowledge of two data sources. This knowledge is not necessarily wrong, but may be in conflict e.g., a city vs. a country to describe the location of an event. On the other hand, Invalidity, Ambiguity, and Timeliness deltas lead to contradictory knowledge, where some parts of the knowledge are necessarily wrong.

In [9], the authors distinguish two types of data conflicts from a data fusion perspective: contradictions and uncertainties. The authors define contradictions as follows: “a contradiction is a conflict between two or more different non-null values that are all used to describe the same property of an object” and uncertainties as follows: “an uncertainty is a conflict between a non-null value and one or more null values that are all used to describe the same property of an object”. We adopt the same definition of contradictions, but adopt a different definition of uncertainty. We define the second type of conflict as a difference in the specificity of knowledge, as illustrated in Figure 6. In this survey, “uncertainty” is a more general term whose sources lie in knowledge deltas and the inaccuracy of each step in the knowledge integration pipeline, including knowledge acquisition.

In [5, 177], the authors assume that uncertainty is a common feature of the knowledge we handle daily. In this sense, exploiting uncertain data sources by ignoring uncertainty to enrich a KG would impact downstream applications of the graph. The life cycle for exploiting uncertain data sources requires the quantification, and the integration of uncertainty in the KG. In such a view, the uncertainty should be considered everywhere in the data integration pipeline, including its representation in the KG. In Section 5.3, we present our ideal data integration pipeline that addresses the aforementioned requirements.

5.2 Integrating Data Under Uncertainty

Integrating data from multiple sources can introduce inconsistencies and uncertainty into a database. Bleiholder and Naumann [9] describe the data integration process in three steps: 1) schema mapping, 2) duplicate detection, and 3) data merging. The first step establishes a common schema among data sources, the second step aligns duplicates and detects inconsistent representations for the same entity, and the final step combines and resolves the various inconsistencies (e.g., contradictions) to produce a unified representation. In [39], the authors mention that uncertainty can arise for several reasons, such as the approximation of semantic mappings between data sources and the mediated schema (i.e., the integration schema grouping all sources), the extraction techniques used to extract data from unstructured sources, and also mention uncertainty at the application level when querying with the transformation of keywords into a set of candidate structured queries. In [39, 136], the authors address the schema mapping step, considering uncertainty through probabilistic schema mappings and how to answer queries on the mediated schema. Different strategies can be used to deal with inconsistencies. One approach involves blaming the most recent assertion that caused the inconsistency in the KB [107]. In [28], Amo et al. consider two methods. The first is to allow inconsistencies to remain in the KB and reason about them using paraconsistent logic [8], while the second approach seeks to get rid of inconsistencies to obtain a coherent KB. Belief revision addresses the latter approach by updating the knowledge in a KB when a contradiction is encountered. The update is done by revising the KB, where some beliefs must be retracted [56]. The formalism used to represent beliefs and the nature of the relationship between explicit and implicit beliefs play an important role in the belief revision process [56]. In [107], Martins and Shapiro propose a tailored logic for belief revision systems. Their system tracks the support for each proposition in the KB and applies inference rules of the logic to compute dependencies between propositions. These dependencies help identifying sources of inconsistency and guide the revision process.

5.3 Requirements for an Ideal Data Integration Pipeline

All ways of enriching a KG (e.g., crowdsourcing, extraction from texts or tables, etc.) are error-prone methods, since humans cannot be experts in every domain, involving mistakes and extraction algorithms rarely achieve perfect precision. Errors can occur at various stages in the data integration process that encompasses extraction, alignment, or fusion. Probably one of the most natural ways of capturing and quantifying uncertainty caused by knowledge deltas or the reliability of knowledge integration components is to use confidence scores. As mentioned in Section 4, several extraction approaches provide confidence scores about the triples they extract. For example, each triple outputted by ReVerb [46] is associated with a confidence score obtained from a logistic regression. Another work [96] focuses on estimating a confidence score for the slot filling task, which consists of filling predefined attributes for entities in a KB population case. This confidence score is intended to support the aggregation of values from different slot filling systems. The authors have shown that confidence estimation improves the performance of the task and that the correctness of the values and the estimated confidence are strongly correlated. In [163], the authors estimate confidence scores for an entity alignment task that represent the marginal probability that a set of mentions all refer to the same entity. Therefore, there is a need to consider these confidence scores and represent them as triple metadata along with their provenance information.

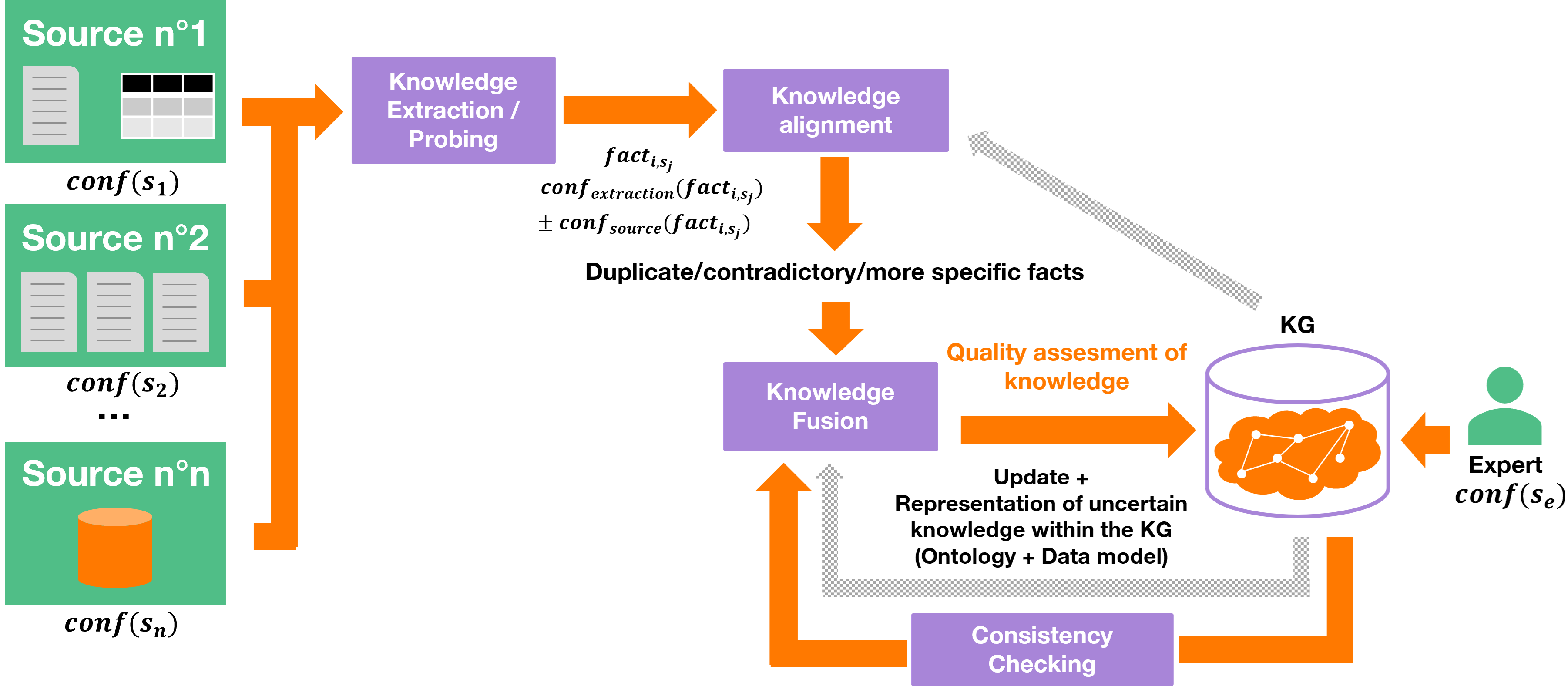

From this perspective, we propose an ideal pipeline of data integration from heterogeneous sources depicted in Figure 7. After extraction, knowledge integration often involves the following two modules [10]: Knowledge Alignment and Knowledge Fusion. In this pipeline, we propose a third module called Consistency Checking, which actually takes place after the data integration and identifies and repairs inconsistencies in the KG, improving future knowledge enrichment. The inputs of the pipeline are multiple heterogeneous sources whose final purpose is to feed the KG. From these data sources , facts are extracted with different confidence scores such as a confidence score in the fact by the extraction algorithm , possibly a confidence score in the fact by the source , and a confidence score in the source . In addition to these multiple data sources, an expert can also populate the KG with a confidence score . Before providing these facts to the KG, several tasks are required due to potential knowledge deltas. The first task Knowledge Alignment is the identification of duplicates, differences in specificity, and contradictions between the extracted facts and the KG. The next step Knowledge Fusion defines a policy to resolve conflicting facts and keep the information as consistent, specific, and complete as possible. In addition, it estimates the quality of both data sources and extracted facts by assigning them confidence scores. Then, the knowledge in the KG is updated with its confidence scores and their provenance information since a user may want to query the KG about the confidence of triples w.r.t. quality dimensions. The last step checks the consistency within the KG. Since this step is performed after the enrichment of the KG, and since this survey focuses on uncertainty management in the construction of KGs, we do not provide further details on it in the following sections. The aim of this pipeline is to take into account all confidence scores in the knowledge alignment and fusion modules. This is not the case in existing work, where only the confidence scores in the data sources are leveraged by the fusion module. However, methods for KG completion purpose (i.e., predicting new relations using only the KG itself) taking into account the uncertainty in an embedding space have recently been investigated.

We describe the ideal data integration policy through an example. The integration pipeline takes as input a set of sources containing a set of facts and aims to construct a as output. Each fact is represented as a triple of the form where is an entity, is a relation, and is either a literal or another entity. The alignment of each fact with consists in finding a correspondence between the pair from the fact and an existing pair in the current state of . If such a correspondence does not exist, the fact is added to along with its associated confidence score and provenance information. Otherwise, if such a correspondence exists, i.e., and share the same pair, the pipeline applies the following integration policy:

-

(1)

If is more specific than , then is replaced by and the confidence score of the source is increased. If such comparisons are not possible, the strategy is to keep both facts;

-

(2)

Otherwise, if is a duplicate of , only the provenance of is added to the existing fact and the confidence scores of the sources providing it are increased;

-

(3)

Otherwise, if contradicts , the integration pipeline resolves the conflict by finding the most trustworthy value. The confidence of the source providing the least trustworthy fact is decreased, while the confidence score of the source providing the most trustworthy one is increased.

This integration policy aligns with the fusion approaches presented in Section 8.2. These approaches follow the intuition that the reliability of a source increases when it provides facts that are estimated to be correct, and a fact is estimated to be correct if it is supported by reliable sources. The implementation of this policy relies on the following key elements:

-

Estimating specificity cannot be performed globally across all knowledge domains, as it is a context-sensitive and relation-dependent task. A KG does not have a total order, but contextualized partial orders can be deduced from the KG taxonomy when available with relations such as subclassOf, instanceOf, or partOf and can help determine whether one value is more specific than another [6, 81]. For example, (White house, location, United States) versus (White house, location, Washington): the most specific fact, namely should be kept by the integration policy. In addition, it may be interesting to leverage the generic information United States by deviating from it such as (White house, country, United States) to enrich the graph.

-

Confidence scores from the upstream steps of knowledge fusion must be calibrated to represent them as probabilities on the same scale (e.g. via Platt scaling or isotonic regression) [35] to make them comparable and initialize the fusion models summarized in Section 8. Only the confidence scores estimated within the fusion model itself, namely those representing the reliability of the sources and the trustworthiness of facts are updated during the fusion process.

Algorithm 1 illustrates our vision of the policy for integrating conflicting data. It provides a straightforward guideline and corresponds closely to the framework of knowledge fusion models presented in Section 8.

As mentioned in the process above, we need to maintain a history of the provenance of the facts, as provenance information is essential for the quality of the KG, but could also be used for future conflict resolution or KG updating [73]. For this purpose, there exists a normative ontology called PROV-O [89] that includes provenance information. It can be used in RDF-based KGs. The three main classes of the PROV-O ontology are prov:Entity, prov:Activity, and prov:Agent (prov:Entity is something that can be changed by an activity, prov:Activity is something that acts upon or with entities, and prov:Agent can be a human who performs an activity).

In the following sections, we detail three steps of the pipeline namely: Knowledge Alignment, Knowledge Fusion, and Uncertainty Representation within the KG. We propose a section that describes uncertain KG embedding methods for KG completion and confidence prediction tasks (Section 6) before presenting the aforementioned steps. Knowledge alignment is discussed in Section 7. In Section 8, we summarize knowledge fusion methods. Finally, we explore the different mechanisms available for representing triple uncertainty in a KG in Section 9.

6 Uncertain Knowledge Graph Embedding

Embedding methods allow the KG to be represented in an -dimensional vector space, i.e., its entities and relations are -vectors. These embeddings attempt to preserve the structural properties of the graph, making it easier to manipulate the graph for machine learning applications such as link prediction, completion, or node classification [78]. A wide range of embedding models have emerged, such as TransE [12], DistMult [171], ComplEx [154], RotatE [150], neural networks applied to graphs such as RGCN [137], and GCN [85]. Additionally, embeddings are also increasingly used in the construction of KGs, for example, for knowledge alignment [48], or other KG refinement tasks [68]. Most embedding approaches do not include knowledge uncertainty in their models. However, when constructing KBs, the knowledge is often uncertain or noisy and not taking into account uncertainty during the representation learning can introduce bias into the representation and impact further applications. Given the importance of embedding methods in both KG applications and construction, we believe it is useful to gather such methods that incorporate uncertainty, expressed as a confidence score in their modeling. This section describes some of these models and the datasets used to evaluate them.

6.1 Uncertain KG Embedding Models

In this paper, we define uncertain KGs as follows. An uncertain knowledge graph (UKG) is represented as a set of weighted triples , where is a triple representing a fact and is a confidence score for this fact to be true. The uncertainty associated with triples in the KG relies on the plausibility of the triples, but most KG embedding (KGE) methods do not consider this information in their modeling, making the assumption that all triples are deterministic. Such an assumption does not reflect the reality where many triples are uncertain due to the reasons described in Section 5. Table 1 summarizes the UKG embedding approaches with their associated tasks, scoring function, the year of publication, and the datasets on which the experiments were conducted. We can notice that uncertain graph embeddings have been studied only recently. Each of the following models has its own specific approach to incorporating a confidence score into their modeling. However, most models use a scoring function derived from existing deterministic KG embedding models, incorporating confidence scores, for instance, into the loss function used to train the embeddings.

UKGE [25] improves traditional KGE models by using the Probabilistic Soft Logic (PSL) framework to infer confidence scores for unseen relational triples. Thus, UKGE encodes the KG according to confidence scores for both observed and unseen triples. It maps the scoring function results to confidence scores using two different mapping functions: a logistic function and the bounded rectifier function. For the tasks of fact classification, global ranking, and confidence prediction tasks, UKGE outperforms the deterministic KG embedding models such as TransE, DistMult, ComplEx and the URGE model on CN15k, NL27k and PPI5k datasets.

SUKE [158] argues that UKGE does not fully exploit the structural information of facts. To improve this, SUKE has two components: an evaluator and a confidence generator. The evaluator assigns a structural score and an uncertainty score to each fact, which are jointly used to define the rationality of a fact. This rationality score is then used to generate a set of candidate triples, which are then fed into the confidence generator. The generator outputs confidence scores for these triples based on the uncertainty score provided by the evaluator. The plausibility of facts is computed with the DistMult [171] scoring function, then it applies a different mapping function with two parameters for the structural score and the uncertain score before merging them. The confidence generator only uses the uncertainty score to approximate the true confidence value of triples.

BEURRE [24] models entities as probabilistic boxes and relations between two entities as an affine transformation. The confidence score of the relation between two entities is represented as the volume of the intersection of their boxes. Constraints such as transitivity and composition are inserted into the modeling of embeddings to preserve these properties on relations in the embedding space. These constraints act as a loss regularization in the global loss function. Then, embeddings are trained by optimizing a loss function for a regression task and a regularization loss to apply transitivity and composition constraints.

GTransE [83] embeds uncertainty in a translational model by extending the well-known TransE [12]. Uncertainty, represented by a confidence score, is incorporated at the level of the loss function on a hyperparameter of the margin loss function when training the embeddings:

where =(head, relation, tail, confidence score), M is a margin parameter and represents the positive part of . The scoring function corresponds to the L1 or L2-norm. Thus, with this loss function if a triple has a high confidence score, it will tend to satisfy , otherwise the entity will tend to diverge from . Before GTransE, the same authors introduced CTransE [82] which is a related model that omits the hyperparameter used as an exponent of the confidence score.

IIKE [47] models confidence within the embedding space using a probabilistic model. The authors propose an embedding model that takes uncertainty into account by minimizing a loss function to fit the output confidence of triples acquisition (e.g., NELL, or crowdsourcing) to the scoring function of the triples given by a probability function. The plausibility of a triple is modeled as a joint probability of the head entity, the relation and the tail depending on , , and . For the loss function, the authors minimize the difference between the logarithm of the triple probabilities and the logarithm of the confidence scores from knowledge extraction. They then apply stochastic gradient descent to refine the embeddings iteratively.

PASSLEAF [26] decomposes the model into two components: a confidence score prediction framework that adapts scoring function from existing models, e.g., ComplEx [154] or RotatE [150], and a semi-supervised learning framework.

For the UKG completion task, each relation must have sufficient training examples to perform correctly. GMUC [178] tackles the few-shot UKG completion task for long-tail relations. GMUC learns a Gaussian similarity metric that enables the prediction of missing facts and their confidence scores from a limited number of training examples. The model encodes a support set, comprising a few facts along with their confidence scores, and a query into multidimensional Gaussian distributions. The query consists of (head, relation) pairs where the tail and confidence score must be predicted. A Gaussian matching function is then applied to generate a similarity distribution between the query and the support set. GMUC outperforms the UKGE model on link prediction and confidence prediction tasks on NL27K and three NL27K-derived datasets with added noise.

UOKGE [14] learns embeddings of uncertain ontology-aware KGs based on confidence scores. It encodes an instance as a point represented by an n-dimensional vector, a class as a sphere where denotes the center of the sphere and its the radius, and a property as a sphere where defines the center of the sphere, with representing the domain, representing the range, and is the radius. It then introduces a mapping function to rescale values between 0 and 1 to represent uncertainty. Six distinct gap functions are defined to encode uncertainty for six types of relations: type, domain, range, subclass, sup-property, and remaining properties. The model then minimizes the mean squared error (MSE) between the confidence scores and the corresponding gap functions.

FocusE [124] improves KG embeddings with numerical values on edges by intervening between the scoring function of traditional models (e.g., TransE, ComplEx, or DistMult) and the loss function. They introduce numerical values on edges in a manner that maximizes the margin between the scores of true triples and their corruptions. Given the score function of an embedding model , where is a triple, they use a nonlinear Softplus function such that the score provided by is greater than or equal to zero:

The numerical value associated with an edge is then expressed through as follows:

where is a hyperparameter controlling the importance of the topological structure of the graph and is the numerical value on the edge. The final function of FocusE is then: .

ConfE [182] encodes the tuples , where is an entity and is an entity type, by considering the uncertainty in each tuple. They treat entities and entity types as distinct elements in a KG and learn embeddings of entities and entity types in two separate spaces with an asymmetric matrix to model their interactions. The scoring function is defined as , where is the asymmetric matrix. Uncertainty is incorporated into the loss function as follows:

where is the set of entities and their types, and is the set of corrupted tuples.

CKRL [168] introduces multiple levels of confidence, namely a local triple confidence, a global path confidence, a prior path confidence, and an adaptive path confidence. These confidence scores are integrated into the energy function as follows:

where and the triple confidence score aggregating all levels of confidence.

WaExt [86] embeds triples of a KG by incorporating the weight associated with an edge into the scoring function as follows:

and then minimizes a margin ranking loss function.

Wang et al. [159] model each entity and each relation as a multidimensional Gaussian distribution , where is a mean vector representing its position and is a diagonal covariance matrix representing its uncertainty.

MUKGE [101] aims to improve the generation of unseen facts for KGE training. The authors argue that PSL cannot leverage global multi-path information, leading to information loss when estimating the confidence of unseen facts. Indeed, PSL only considers information from simple logical rules with a path length of two, as used in UKGE [25] (e.g., ), and does not consider other paths in the graph between the subject and object of the inferred relation. To address this issue, MUKGE introduces an algorithm called Uncertain ResourceRank, which infers confidence scores for unseen triples based on the relevance of the entity pairs (subject, object). The relevance of an entity pair is computed with respect to the directed paths between subject and object in the KG. MUKGE uses circular correlation as the scoring function, and applies either the sigmoid or the bounded rectifier function as the function to obtain the triple confidence. Then the authors design the loss function to align each positive triple to its corresponding confidence score. The authors evaluate their model on confidence prediction, relation fact ranking, and relation fact classification. For these three tasks, MUKGE outperforms the BEURRE and UKGE models, particularly focusing on asymmetric relations. However, for all relation types, the performance is competitive with other models, except for confidence prediction, where MUKGE is the best alternative.

6.2 Datasets with Numerical Values on Edges

In the literature, five datasets derived from uncertain KBs are commonly used in UKG completion or confidence prediction tasks, as presented in the previous section [124]. CN15K is a subset of ConceptNet (discussed in Section 3.2) where the numerical values represent the uncertainty of the triples [142]. The confidence scores for each triple are computed w.r.t. the number of sources and their reliability. NL27K is a subset of NELL dataset (presented in Section 4.1.2) where the confidence scores are computed and refined using an Expectation Maximization (EM) algorithm and a semi-supervised learning method. PPI5K is a KG that represents the protein-protein interactions, where the numerical values indicate the confidence of the relations [151]. O*NET20K is a dataset introduced by [124], containing descriptions of jobs and skills. The numerical values represent the strength of the relations. Some embedding models also generate their own synthetic noisy datasets with fictitious confidence scores following specific probability distributions.

7 Knowledge Alignment

In Section 4, we discussed enriching a KG through extraction methods, however, it is also possible to extend a KG by leveraging existing KGs. To achieve this, the two KGs must be aligned. In this section, we first introduce the task and mainly present embedding-based entity alignment approaches. Knowledge alignment, also known as knowledge resolution or knowledge matching, is the process of finding relationships or correspondences between entities of different ontologies [45]. It represents one of the steps in identifying candidate entities for knowledge fusion. For example, in Figure 8, the entity “Galaxy S23” in both graphs refers to the same real world entity but originates from two different sources. This task copes with the “redundancy” quality dimension (Section 4.2). Whether at the instance level or at the ontology level, many works tackle the knowledge alignment task. This section aims to provide a brief overview of the knowledge alignment task and approaches by collecting various existing surveys [48, 44, 148].

The authors of [45] distinguish different types of matching including semantic and syntactic approaches, such as string-based, language-based, subgraph-based, rule-based, embedding-based, or relational-based methods. An example of a rule-based method is presented in [79]. For each relation across the two domains, the following rules are defined:

| (1) | |||

| (2) | |||

| (3) |

where is a relation present in both graphs. To reduce complexity and avoid scalability issues, some approaches use blocking methods that avoid unnecessary comparisons by grouping entities. For example, Nguyen et al. [115] propose different strategies for blocking based on the description of entities, such as token blocking, i.e., entities in the same cluster share at least one common token in their description, attribute clustering blocking, i.e., clusters the entities in the same group if their attributes are similar, and prefix-infix(-suffix) blocking, i.e., exploits the pattern in the description of the URI (e.g., URI infix) to create new blocks. After an optional blocking step, knowledge alignment methods are performed. Most recent KG alignment methods are deep learning methods based on graph embeddings. Among them, [48] distinguish three strategies: Sharing, Swapping, and Mapping. Sharing updates the entity embeddings produced by the embedding module according to the seed alignments. Swapping updates the entity embeddings produced by the embedding module according to the seed alignments but adds positive triples by leveraging aligned pairs, e.g., , . Mapping learns a linear transformation between the two embedding spaces of the aligned KGs.

Furthermore, alignment approaches are diverse and varied, some of them leverage attributes of entities, or use only relations between entities where different depths of context (e.g., neighboring entities) are considered, while for others the path in the graph is an important aspect. We provide Table 2, which is strongly inspired by [48, 148] and summarizes these different existing alignment approaches to give an overview.

The authors of [48] highlight that BERT_INT outperforms all models in terms of effectiveness and efficiency, especially when the KGs contain very similar factual information. In fact, the alignment models that use language models, such as BERT_INT, are the most efficient for this task. They also highlight the critical factors that affect the effectiveness of relation-based and attribute-based alignment methods, such as:

-

the depth of neighbors considered;

-

the strategy of negative sampling (for training), as the number of negatives are considered, the performances decrease;

-

the input KGs to align, e.g., for OpenEA datasets, it is not necessary to use attribute information; factual information is sufficient.

With the development of KGE models, KG alignment approaches based on embeddings have gained significant interest in recent years. However, probabilistic models also address this task. For example, in [144], the authors introduce a holistic model called PARIS, which aligns both instances and schemas of KGs. The model employs a probabilistic algorithm centered around the notion of “functionality”. They define the functionality of a relation as follows:

Where and are instances. In this way, they incorporate the functionality of relations into the computation of probabilities for equivalences between instances. They also define the computations of the probabilities that a relation is a sub-relation of another based on the probabilities of equivalence between instances, and similarly for classes. Finally, to find equivalences they use an iterative approach that first computes the probabilities of equivalence between instances, then the probabilities for sub-relations, and the probabilities of equivalence between classes until the maximum assignments stabilize. PRASEMap [129, 130] is an unsupervised KG alignment approach that combines probabilistic reasoning (PR module) and semantic embeddings (SE module). The PR module uses the PARIS [144] model, while the SE module relies on GCNAlign [161] (other embedding models can be used). The PR module identifies cross-KG relations, literals, and entity mappings. The SE module leverages the entity mappings generated by the PR module as seed alignments for training and outputs additional entity mappings. Finally, the PR module is performed by incorporating embeddings and mappings from the SE module to perform more precise probabilistic reasoning. These last two steps can be iteratively performed to find more alignments. The integration of embeddings into the PR module is achieved by adding a weighted term that computes the similarity between two entities using the cosine similarity of their embeddings.

In the literature, handling uncertainty in KG alignment is often associated with the alignment of ontologies. In [1], the authors present and distinguish various operations on ontologies, such as mapping, merging, and alignment. They then describe methods for handling these operations under uncertainty, using probabilistic and logical models (such as Markov Logic Networks), fuzzy logic, Dempster-Shafer theory and hybrid methods combining machine learning with probabilistic approaches.

8 Uncertain Knowledge Fusion

In the previous section, we introduced the KG alignment task, along with a summary of the embedding-based approaches that tackle it. This task aims to identify equivalent entities and group them into clusters. Once these clusters have been formed, the next step consists in fusing the attributes of the entities within each cluster (as illustrated in Figure 7), as these attributes may be redundant, inconsistent, contradictory, or expressed at different levels of specificity. We first define the task in Section 8.1, and we present the various fusion approaches in Section 8.2.

8.1 Task Definition

The knowledge fusion step involves combining various pieces of information about the same entity or concept from multiple data sources into a consistent and a unified form, addressing the different deltas listed in Section 5.1 [67, 121]. The authors of [40] identify three broad goals to be achieved in this challenging task:

-

Completeness: measures the expected amount of data (number of tuples and number of attributes) at the output of the fusion task;

-

Conciseness: measures the uniqueness of object representations in the integrated data (number of unique objects and number of unique attributes of objects);

-

Correctness: measures the correctness of data, i.e., its conformity to the real world.

Therefore, data fusion involves resolving conflicts in the data to maximize these three goals. Indeed, when integrating knowledge from multiple heterogeneous sources, the quality of the information varies, and we need to determine the trustworthy information by performing a Truth Inference (TI) task. According to Rekatsinas [132], different TI strategies can be adopted. There are simple strategies that estimate the true values of the entities compared to other values provided by the sources by applying a majority vote or an average on them. Additionally, there are strategies that use the trustworthiness of the sources to quantify the true values of the objects, and it is even possible to establish a precision metric for each class of object and for each source. The problem with simple strategies is that they do not take into account the varying quality of data sources [94], but they are often used to initialize the true values to start iterative TI methods.

For example, suppose that knowledge in the form of triples (subject, predicate, object) has previously been extracted about the cell phone “Galaxy S23” from several sources , , …, resulting in the table at the bottom of Figure 8, where entities have already been aligned. We also represent the table as a graph for sources and .